During machine learning model training, engineers and data scientists often run into seven common errors. Hitting errors is frustrating, but teams should know how to address them and how to avoid them in the future.

Below, we offer in-depth explanations, preventative measures, and quick fixes for known model training issues, while answering the question: "What does this model error mean?"

We'll cover the most important model training errors:

- Overfitting and Underfitting

- Data Imbalance

- Data Leakage

- Outliers and Minima

- Data and Labeling Problems

- Data Drift

- Lack of Model Experimentation

About us: At viso.ai, we offer Viso Suite, an end-to-end computer vision platform. It enables enterprises to build and deploy computer vision solutions, with built-in ML tools for data collection, annotation, and model training. Learn more about Viso Suite and book a demo.

Model Error No. 1: Overfitting and Underfitting

What Are Underfitting and Overfitting?

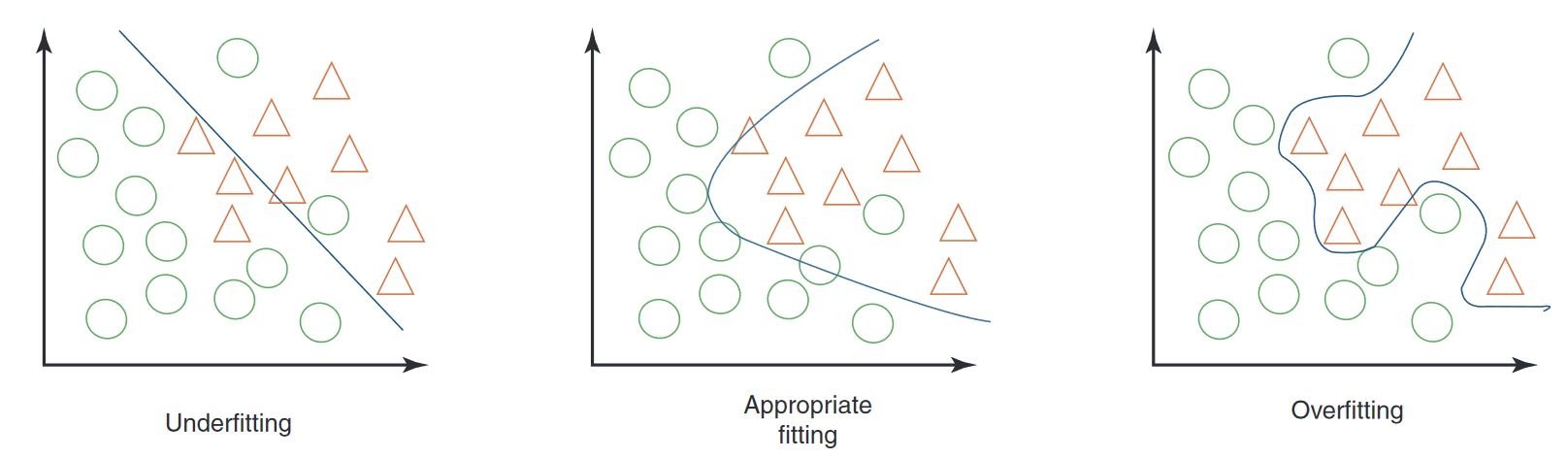

One of the most common problems in machine learning occurs when a model is poorly matched to the training data it is supposed to learn from.

In overfitting, the model learns the training data too closely, including its noise and quirks. It scores well on the training set but fails to generalize to new data, which is more likely when there are too few examples for the model's complexity. Underfitting is the opposite problem: the model is too simple to capture the underlying patterns, so it performs poorly even on the data it was trained on.

How to Fix Underfitting and Overfitting

Here, fixing the issue has a lot to do with framing the machine learning process correctly. Proper fitting is like matching data points to a map that isn't too detailed, but just detailed enough. Developers try to choose the right dimensionality, or model complexity, so that the model fits the training data and its intended task.

To fix an overfitting problem, it's recommended to reduce model complexity (for example, use fewer layers) and to use cross-validation on the training set to catch the problem early. Feature reduction and regularization can also help. For underfitting, on the other hand, the goal is often to add complexity, though experts also suggest that removing noise from the data set might help.
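As an illustration of regularization, here is a minimal Python sketch of L2 (ridge) regularization for a single-feature linear model with no intercept. It assumes plain lists of numbers and uses the closed-form solution that minimizes squared error plus λ times the squared weight; the function name `fit_ridge_1d` is hypothetical. Larger λ values shrink the weight toward zero, trading a little bias for lower variance.

```python
def fit_ridge_1d(xs, ys, lam):
    """Ridge slope for y ~ w * x (single feature, no intercept).

    Minimizes sum((y - w*x)**2) + lam * w**2, whose closed-form
    solution is w = sum(x*y) / (sum(x*x) + lam).
    """
    sxy = sum(x * y for x, y in zip(xs, ys))
    sxx = sum(x * x for x in xs)
    return sxy / (sxx + lam)


xs = [1.0, 2.0, 3.0]
ys = [2.0, 4.0, 6.0]
for lam in (0.0, 14.0, 140.0):
    # The learned weight shrinks toward zero as the penalty grows.
    print(lam, fit_ridge_1d(xs, ys, lam))
```

With λ = 0 this is ordinary least squares; in practice λ is tuned on validation data rather than fixed by hand.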

For computer vision classification tasks, you can use a confusion matrix to evaluate model performance on a separate test set. Comparing it with results on the training set helps reveal underfitting (poor results on both) and overfitting (good training results, much worse test results).
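A minimal, dependency-free Python sketch of that comparison: build a confusion matrix, compute accuracy from it, and compare training and test accuracy. The `diagnose` helper and its thresholds (`max_gap`, `min_acc`) are illustrative assumptions, not standard values; in practice you would likely use a library function such as scikit-learn's `confusion_matrix`.

```python
from collections import Counter


def confusion_matrix(y_true, y_pred, labels):
    """Rows are true classes, columns are predicted classes, in `labels` order."""
    counts = Counter(zip(y_true, y_pred))
    return [[counts[(t, p)] for p in labels] for t in labels]


def accuracy(matrix):
    """Fraction of samples on the diagonal (correct predictions)."""
    correct = sum(matrix[i][i] for i in range(len(matrix)))
    total = sum(sum(row) for row in matrix)
    return correct / total if total else 0.0


def diagnose(train_acc, test_acc, max_gap=0.10, min_acc=0.70):
    """Hypothetical rough heuristic; the thresholds are illustrative only."""
    if train_acc < min_acc:
        return "possible underfitting"  # poor even on data it has seen
    if train_acc - test_acc > max_gap:
        return "possible overfitting"   # good on training data, worse on new data
    return "no obvious fit problem"


labels = ["cat", "dog"]
cm = confusion_matrix(["cat", "cat", "dog", "dog"], ["cat", "dog", "dog", "dog"], labels)
print(cm)            # [[1, 1], [0, 2]]
print(accuracy(cm))  # 0.75
```

You would compute one matrix on the training set and one on the test set, then pass both accuracies to `diagnose`.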

Model Error No. 2: Data Imbalance Issues in Machine Learning

What Is Data Imbalance?

Even if you're sure you've given the machine learning model enough examples overall, you might run into something called "class imbalance" or "data imbalance".

Data imbalance, a common issue in classification and other prediction models, means the training data set is not representative of at least some of the outcomes you care about. For example, if you want to distinguish four or five classes of images or objects, most of the training data might come from just one or two of those classes. In the extreme case, the other classes are not represented in the training data at all, so the model cannot learn to recognize them because it has never seen examples of them.

How to Fix Data Imbalance

To address an imbalance, teams should make sure that every class in the target set is adequately represented in the training data. Tools for troubleshooting bias include auditing programs and toolkits such as IBM's AI Fairness 360.
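A simple first audit is to count samples per class, list expected classes with no samples at all, and compute inverse-frequency class weights for the classes that are present. The sketch below uses the same formula scikit-learn documents for `class_weight="balanced"`, namely n_samples / (n_classes * count); the class names are made up, and the helper assumes every label appears in the expected class list.

```python
from collections import Counter


def class_report(labels, expected_classes):
    """Count samples per class, list classes with no samples, and compute weights.

    Weights use the inverse-frequency formula n_samples / (n_classes * count),
    computed over the classes that actually appear in `labels`.
    Assumes every label in `labels` is listed in `expected_classes`.
    """
    counts = Counter(labels)
    missing = [c for c in expected_classes if counts[c] == 0]
    present = [c for c in expected_classes if counts[c] > 0]
    n, k = len(labels), len(present)
    # Classes with zero samples get no weight: they need data, not reweighting.
    weights = {c: n / (k * counts[c]) for c in present}
    return counts, missing, weights


labels = ["car"] * 6 + ["truck"] * 2
counts, missing, weights = class_report(labels, ["car", "truck", "bus"])
print(missing)  # ['bus']
print(weights)  # "car" is down-weighted, "truck" is up-weighted
```

Reweighting can soften a moderate imbalance, but a class with no examples, like `bus` here, can only be fixed by collecting or generating data for it.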

There's also the bias/variance problem, where some people associate bias with models that are too simple and excessive variance with models that are too complex. In some ways, though, this view is simplistic.

Bias shows up as systematic errors, for example when some groups or patterns are under-represented in the data, while variance shows up as predictions that change a lot depending on which data the model happened to see. There's much more detail to this, but when it comes to fixing bias, the goal is to add more relevant, diverse data. For variance, it often helps to add data points that make the trends clearer to the model.

Model Error No. 3: Data Leakage

What Is Data Leakage?

If this brings to mind a leaking pipe, and you're worried about data being lost from the system, that's not really what data leakage means in the context of machine learning.

Instead, it's a situation where information that would not be available when the model makes real predictions, such as data from the validation or test set, leaks into the training process. The model's measured performance then overstates how well it will do on real-world data.

In data leakage situations, models can, for instance, return near-perfect evaluation results that are "too good to be true", which is often a warning sign.

How to Fix Data Leakage

During model development, there are a couple of ways to minimize the risk of data leakage:

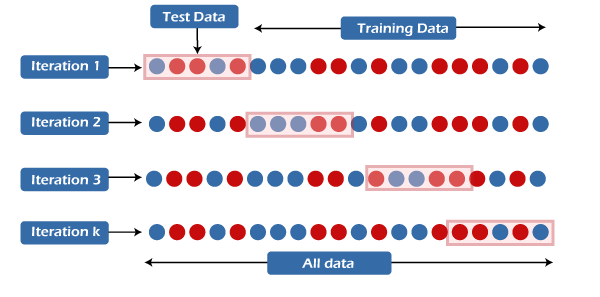

- Data Preparation within Cross-Validation Folds: recalculate preprocessing parameters, such as scaling statistics, separately for each fold, using only that fold's training portion.

- Withholding the Validation Dataset until model development is complete: this lets you see whether the estimated performance metrics were overly optimistic, which can indicate leakage.

R and Python's scikit-learn are useful here: automation tools such as the caret package in R and Pipelines in scikit-learn apply these steps consistently.
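To make the first point concrete, here is a plain-Python sketch of leak-free preprocessing. In each cross-validation fold, the mean and standard deviation used for standardization are computed from the training portion only and then applied to the validation portion. This mirrors what a scikit-learn `Pipeline` does inside cross-validation, but the helper names (`kfold_indices`, `fit_scaler`, `leak_free_folds`) are hypothetical, and the folds are left unshuffled for readability.

```python
from statistics import mean, pstdev


def kfold_indices(n, k):
    """Yield (train_idx, val_idx) for k contiguous folds (no shuffling, for clarity)."""
    fold_sizes = [n // k + (1 if i < n % k else 0) for i in range(k)]
    start = 0
    for size in fold_sizes:
        val = list(range(start, start + size))
        train = [i for i in range(n) if i < start or i >= start + size]
        yield train, val
        start += size


def fit_scaler(values):
    """Return (mean, std) learned from the given values only."""
    mu = mean(values)
    sigma = pstdev(values) or 1.0  # guard against zero variance
    return mu, sigma


def scale(values, params):
    mu, sigma = params
    return [(v - mu) / sigma for v in values]


def leak_free_folds(x, k):
    """Standardize each fold using statistics from its training portion only."""
    for train_idx, val_idx in kfold_indices(len(x), k):
        train = [x[i] for i in train_idx]
        val = [x[i] for i in val_idx]
        params = fit_scaler(train)  # validation values never influence the scaler
        yield scale(train, params), scale(val, params)


for train_scaled, val_scaled in leak_free_folds([1, 2, 3, 4, 5, 6], k=3):
    print([round(v, 2) for v in val_scaled])
```

Fitting the scaler on the full dataset before splitting would let each validation fold's values shape its own preprocessing, which is exactly the leakage the bullet warns about.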

Model Error No. 4: Outliers and Minima

What Are Data Outliers?

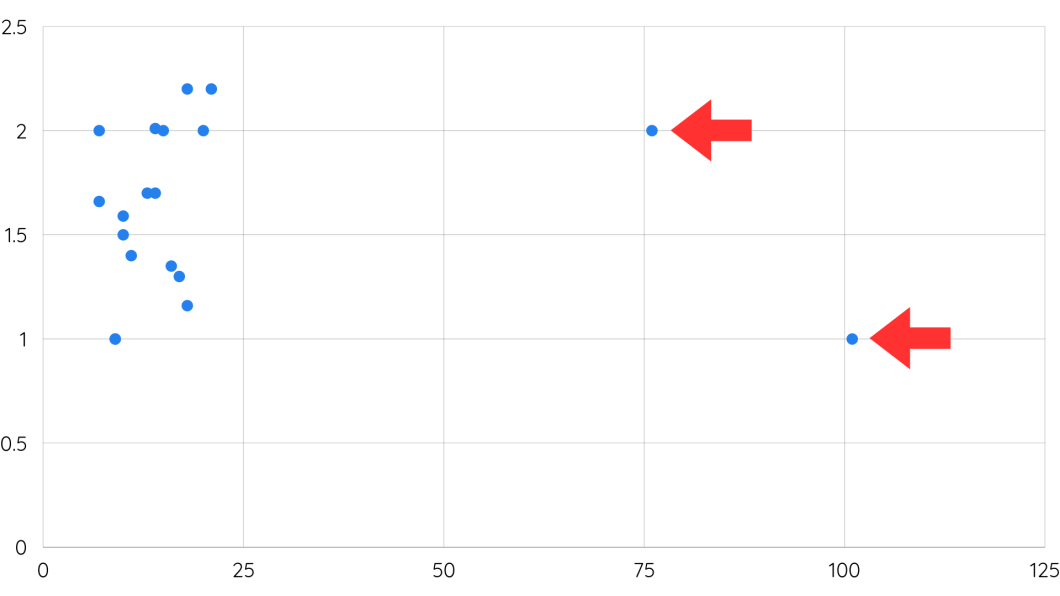

It's important to watch out for data outliers, the more extreme data points in a training data set, which can throw the model off during training or lead to false positives later.

In machine learning, outliers can disrupt model training by causing premature convergence or suboptimal solutions, such as getting stuck in local minima. Handling outliers helps ensure that the model converges toward the real patterns in the data distribution.

How to Fix Data Outliers

Some experts discuss challenges with global and local minima: the training loss varies across the range of possible parameter values, and the optimizer can get trapped in a local minimum that fits one cluster of results well while missing patterns, including some of the outlier data points, in the global set. The principle of looking at the data globally is important, and it's something engineers should always build into models.
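The local-versus-global minimum problem is easiest to see on a toy one-dimensional loss. The sketch below runs plain gradient descent on f(x) = x**4 - 3*x**2 + x, which has a local minimum near x ≈ 1.13 and a global minimum near x ≈ -1.30, and then uses several starting points (a simple multi-start strategy, chosen here for illustration) to keep the best result. The function, step size, and starting grid are assumptions, not part of any real model.

```python
def f(x):
    """Toy non-convex 'loss' with one local and one global minimum."""
    return x**4 - 3 * x**2 + x


def grad_f(x):
    return 4 * x**3 - 6 * x + 1


def gradient_descent(x0, lr=0.01, steps=1000):
    """Plain fixed-step gradient descent from a single starting point."""
    x = x0
    for _ in range(steps):
        x -= lr * grad_f(x)
    return x


def multi_start(starts, **kwargs):
    """Run gradient descent from each start and keep the lowest-loss result."""
    candidates = [gradient_descent(x0, **kwargs) for x0 in starts]
    return min(candidates, key=f)


print(gradient_descent(2.0))                         # stuck near the local minimum, x ≈ 1.13
print(multi_start([-2.0 + 0.5 * i for i in range(9)]))  # finds the global minimum, x ≈ -1.30
```

Real training uses far higher-dimensional losses, but the failure mode is the same: a single starting point can settle in whichever basin it begins in.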

- Tree-based algorithms like Random Forests or Gradient Boosting Machines are generally less sensitive to outliers in the input features.

- Algorithms like Isolation Forest and Local Outlier Factor detect outliers so they can be handled separately from the main dataset.

- You can transform skewed features, or create new features that are less sensitive to outliers, using techniques such as log transformations or scaling methods.
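As a lightweight stand-in for model-based detectors such as Isolation Forest or Local Outlier Factor (which this sketch does not implement), the interquartile range (IQR) rule flags values that lie far outside the middle 50% of the data, and `math.log1p` compresses a right-skewed, non-negative feature. The 1.5 multiplier is the conventional Tukey fence; treat it as a tunable assumption. `statistics.quantiles` requires Python 3.8 or later.

```python
import math
from statistics import quantiles


def iqr_outliers(values, k=1.5):
    """Return values outside [Q1 - k*IQR, Q3 + k*IQR] (Tukey's fences)."""
    q1, _, q3 = quantiles(values, n=4)
    iqr = q3 - q1
    low, high = q1 - k * iqr, q3 + k * iqr
    return [v for v in values if v < low or v > high]


def log_transform(values):
    """log(1 + v) compresses large values; assumes all values are >= 0."""
    return [math.log1p(v) for v in values]


feature = [10, 12, 11, 13, 12, 11, 300]
print(iqr_outliers(feature))   # [300]
print(log_transform(feature))  # 300 becomes roughly 5.7
```

Whether a flagged point should be removed, capped, or kept depends on whether it is an error or a rare but genuine case.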

Model Error No. 5: Clerical Errors – Data and Labeling Problems

What Are Some Data Labeling Problems?

Apart from these engineering issues, there's a whole other set of AI model errors that can be problematic. These have to do with poor data hygiene.

These are very different kinds of issues. Here, it's not the program's strategy that's at fault; instead, there are errors in the data the model was trained on.

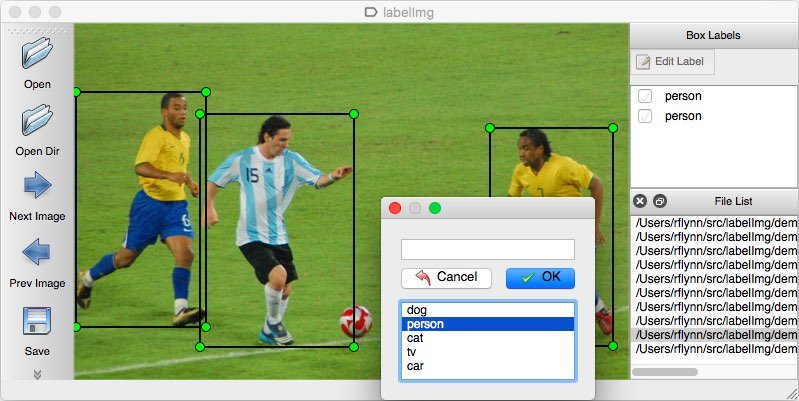

One of these error types is the labeling error. In supervised or semi-supervised learning systems, data is typically labeled, and if the labels are wrong, you won't get the right result. Labeling errors are therefore something to watch out for early in the process.

How to Fix Data Labeling Problems

Then there's the problem of partial data sets or missing values. This is a bigger issue with the raw or unstructured data that engineers and developers might use to feed the machine learning program.

Data scientists know about the perils of unstructured data, but ML engineers don't always think about them until it's too late. Making sure that the training data is correct is essential to the process.
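Before training, a quick audit of missing values catches partial records early. This minimal sketch assumes records are Python dictionaries with hypothetical `path` and `label` fields; what counts as "missing" (here `None`, an empty string, or a float NaN) is an assumption to adapt to your own data.

```python
import math


def is_missing(value):
    """Treat None, empty strings, and float NaN as missing (adjust per dataset)."""
    if value is None or value == "":
        return True
    return isinstance(value, float) and math.isnan(value)


def missing_report(rows, fields):
    """Fraction of rows in which each required field is missing or absent."""
    if not rows:
        return {f: 0.0 for f in fields}
    return {f: sum(is_missing(r.get(f)) for r in rows) / len(rows) for f in fields}


def complete_rows(rows, fields):
    """Keep only rows where every required field is present."""
    return [r for r in rows if not any(is_missing(r.get(f)) for f in fields)]


rows = [
    {"path": "a.jpg", "label": "cat"},
    {"path": "b.jpg", "label": None},
    {"path": "", "label": "dog"},
    {"path": "d.jpg"},
]
print(missing_report(rows, ["path", "label"]))      # {'path': 0.25, 'label': 0.5}
print(len(complete_rows(rows, ["path", "label"])))  # 1
```

Dropping incomplete rows is only one option; imputing or re-collecting the missing values may be better when the loss would skew the dataset.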

When it comes to labeling and data errors, the real solution is precision. Call it "due diligence" or "doing your homework": going through the data with a fine-toothed comb is often what's needed. Before the training process, however, preventative measures are key:

- Data quality assurance processes

- Iterative labeling

- Human-in-the-loop labeling (for verifying and correcting labels)

- Active learning
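One simple data quality check that fits a human-in-the-loop workflow is to find items that received different labels, for example from different annotators, and send them for review. The sketch assumes labels arrive as `(item_id, label)` pairs; the IDs and class names are made up.

```python
from collections import defaultdict


def conflicting_labels(annotations):
    """Return {item_id: sorted labels} for items with more than one distinct label."""
    labels_by_item = defaultdict(set)
    for item_id, label in annotations:
        labels_by_item[item_id].add(label)
    return {item: sorted(labs) for item, labs in labels_by_item.items() if len(labs) > 1}


annotations = [
    ("img1", "cat"), ("img1", "cat"),
    ("img2", "cat"), ("img2", "dog"),
    ("img3", "dog"),
]
print(conflicting_labels(annotations))  # {'img2': ['cat', 'dog']}
```

The flagged items form a natural review queue: a human resolves each conflict, and the corrected label goes back into the training set.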

Model Error No. 6: Data Drift

What Is Data Drift?

There's another fundamental type of model training error in ML to watch out for, commonly called data drift.

Data drift happens when a model becomes less able to perform well because the data changes over time. It typically shows up as a gap between the model's performance on new data and its performance on the training or test data.

There are different types of data drift, including concept drift (a change in the relationship between the inputs and the target) and drift in the input data itself. When the distribution of the input data changes over time, it can throw off the program.

In other cases, the program may not be designed to handle the kinds of change that programmers and engineers subject it to. That can, again, be an issue of scope, targeting, or the development timeline. In the real world, data changes often.

How to Fix Data Drift

Statistical methods such as the Kolmogorov-Smirnov test, the Population Stability Index, and the Page-Hinkley method can help detect data drift. This resource from DataCamp covers some of the finer points of each type of data drift. Common approaches to handling drift include:

- Continuous Monitoring: Regularly analyzing incoming data and comparing it to historical data to detect any shifts.

- Feature Engineering: Selecting features that are less sensitive to change, or transforming features to make them more stable over time.

- Adaptive Model Training: Using algorithms that adjust model parameters in response to changes in the data distribution.

- Ensemble Learning: Using ensemble methods that combine multiple models trained on different subsets of data or with different algorithms.

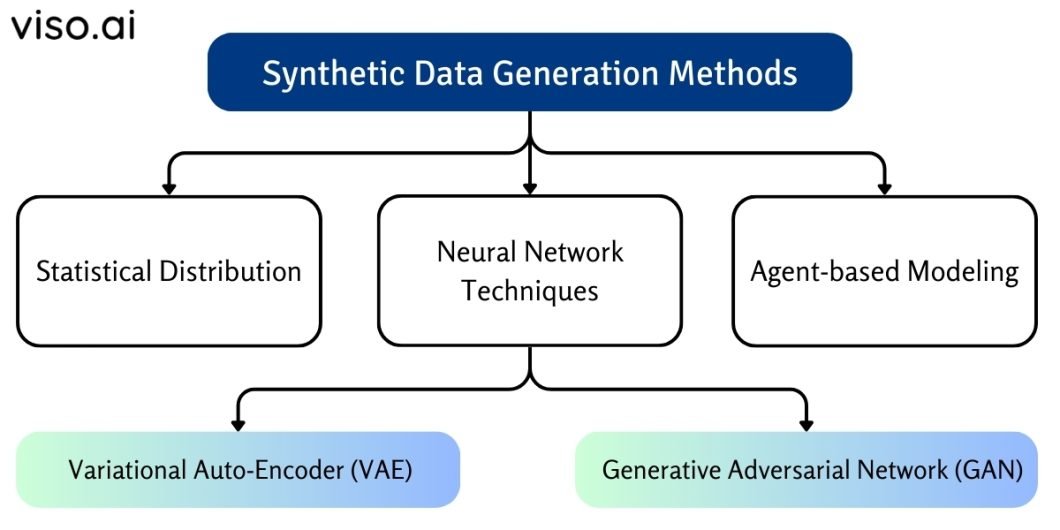

- Data Preprocessing and Resampling: Regularly preprocessing the data and resampling it with techniques such as data augmentation, synthetic data generation, or stratified sampling so that it stays representative of the population.
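To make the detection methods named above more concrete, here is a dependency-free sketch of two of them: the two-sample Kolmogorov-Smirnov statistic (the largest gap between two empirical CDFs) and the Population Stability Index over fixed bins. It computes only the KS statistic, not a p-value (`scipy.stats.ks_2samp` returns both), and the bin edges and the small `eps` floor for empty bins are assumptions you would choose for your own features.

```python
import math
from bisect import bisect_right


def ks_statistic(reference, current):
    """Two-sample KS statistic: max |F_ref(x) - F_cur(x)| over all observed x."""
    ref, cur = sorted(reference), sorted(current)
    d = 0.0
    for x in ref + cur:
        f_ref = bisect_right(ref, x) / len(ref)
        f_cur = bisect_right(cur, x) / len(cur)
        d = max(d, abs(f_ref - f_cur))
    return d


def psi(reference, current, edges, eps=1e-6):
    """Population Stability Index over bins defined by sorted interior `edges`.

    PSI = sum((cur% - ref%) * ln(cur% / ref%)); empty bins are floored at `eps`.
    """
    def proportions(values):
        counts = [0] * (len(edges) + 1)
        for v in values:
            counts[bisect_right(edges, v)] += 1
        return [max(c / len(values), eps) for c in counts]

    ref_p, cur_p = proportions(reference), proportions(current)
    return sum((c - r) * math.log(c / r) for r, c in zip(ref_p, cur_p))


reference = [1, 2, 3, 4, 5, 6, 7, 8]   # e.g. a feature at training time
current = [5, 6, 7, 8, 9, 10, 11, 12]  # the same feature in production
print(ks_statistic(reference, current))  # 0.5
print(psi(reference, current, edges=[3, 6, 9]))
```

Both scores are 0 when the distributions match and grow as they diverge; teams usually set their own alert thresholds and run such checks as part of continuous monitoring.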

Model Error No. 7: Lack of Model Experimentation

What Is Model Experimentation?

Model experimentation is the iterative process of testing and refining model designs to optimize performance. It involves exploring different architectures, hyperparameters, and training strategies to identify the most effective model for a given task.

However, a major AI model training error can arise when developers don't cast a wide enough net when designing ML models. This happens when they settle prematurely on the first model they train, without exploring other designs or considering alternatives.

How to Fix Lack of Model Experimentation

Rather than making the first model the only model, many experts recommend trying out multiple designs and triangulating which one will work best for a particular project. A step-by-step process could look like this:

- Step One: Establish a framework for experimentation to examine various model architectures and configurations. To assess model performance, you'll test different algorithms, adjust hyperparameters, and experiment with different training datasets.

- Step Two: Use tools and techniques for systematic model evaluation, such as cross-validation and performance metrics analysis.

- Step Three: Prioritize continuous improvement throughout the model training process.
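Steps One and Two can be combined in a small grid search: try several values of a hyperparameter and score each with k-fold cross-validation. This plain-Python sketch uses a one-feature ridge model (single feature, no intercept), with the regularization strength λ as the searched hyperparameter. The candidate values and helper names are hypothetical, and a real project would more likely use a tool such as scikit-learn's `GridSearchCV`.

```python
def fit_ridge_1d(xs, ys, lam):
    """Closed-form ridge slope for y ~ w * x: w = sum(x*y) / (sum(x*x) + lam)."""
    sxy = sum(x * y for x, y in zip(xs, ys))
    sxx = sum(x * x for x in xs)
    return sxy / (sxx + lam)


def cv_mse(xs, ys, lam, k):
    """Mean squared validation error over k interleaved folds."""
    n = len(xs)
    errors = []
    for fold in range(k):
        val = set(range(fold, n, k))  # every k-th point goes to this fold
        train = [i for i in range(n) if i not in val]
        w = fit_ridge_1d([xs[i] for i in train], [ys[i] for i in train], lam)
        errors.extend((ys[i] - w * xs[i]) ** 2 for i in val)
    return sum(errors) / len(errors)


def grid_search(xs, ys, lams, k=3):
    """Score every candidate and return (best_lam, {lam: cv_mse})."""
    scores = {lam: cv_mse(xs, ys, lam, k) for lam in lams}
    best = min(scores, key=scores.get)
    return best, scores


xs = list(range(1, 10))
ys = [2 * x for x in xs]  # noise-free toy data
best, scores = grid_search(xs, ys, [0.0, 1.0, 10.0])
print(best, scores)
```

On this noise-free toy data, no regularization (λ = 0) wins; with noisy data a positive λ may score better, which is exactly what the search is meant to reveal.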

Staying Ahead of Model Training Errors

When fine-tuning your model, keep performing error analysis. It helps you track errors and drives continuous improvement, so you can maximize performance and get valuable results.

Dealing with machine learning model errors becomes more familiar as you go. Like testing in software development, error correction is a key part of delivering value with machine learning.