Generative AI has been a driving force in the AI community for some time now, and advances in generative image modeling, especially those built on diffusion models, have helped generative video models progress significantly, not only in research but also in real-world applications. Conventionally, generative video models are either trained from scratch, or partially or completely fine-tuned from pretrained image models with additional temporal layers, on a mixture of image and video datasets.

Building on these advances, in this article we will talk about the Stable Video Diffusion Model, a latent video diffusion model capable of generating high-resolution, state-of-the-art image-to-video and text-to-video content. We will discuss how latent diffusion models trained for synthesizing 2D images have improved the abilities and efficiency of generative video models by adding temporal layers and fine-tuning the models on small datasets of high-quality videos. We will take a deeper dive into the architecture and working of the Stable Video Diffusion Model, evaluate its performance on various metrics, and compare it with current state-of-the-art frameworks for video generation. So let's get started.

Thanks to its almost limitless potential, generative AI has been a primary subject of research for AI and ML practitioners for a while now, and the past few years have seen rapid advances in both the efficiency and the performance of generative image models. The learnings from generative image models have allowed researchers and developers to make progress on generative video models, resulting in enhanced practicality and real-world applications. However, most of the research attempting to improve the capabilities of generative video models focuses primarily on the exact arrangement of temporal and spatial layers, with little attention paid to investigating how selecting the right data influences the outcome of these generative models.

Thanks to the progress made by generative image models, researchers have observed that the impact of the training data distribution on the performance of generative models is significant and undisputed. Researchers have also observed that pretraining a generative image model on a large and diverse dataset, followed by fine-tuning it on a smaller dataset of higher quality, often improves performance significantly. Traditionally, generative video models adopt the learnings obtained from successful generative image models, but the effect of data and training strategies on video models has yet to be studied. The Stable Video Diffusion Model is an attempt to enhance the abilities of generative video models by venturing into this previously uncharted territory, with a special focus on selecting data.

Recent generative video models rely on diffusion models, and on text conditioning or image conditioning approaches, to synthesize multiple consistent video or image frames. Diffusion models are known for their ability to learn how to gradually denoise a sample from a normal distribution through an iterative refinement process, and they have delivered impressive results on high-resolution video and text-to-image synthesis. Using the same principle at its core, the Stable Video Diffusion Model trains a latent video diffusion model on its video dataset, in contrast to approaches built on Generative Adversarial Networks (GANs) or, to some extent, autoregressive models.
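To make the idea of iterative refinement concrete, here is a toy sketch in plain Python. It is not the sampler used by Stable Video Diffusion: the "denoiser" is a hypothetical stand-in that already knows the clean target, and the step count and update rule are assumptions chosen only to show the loop structure.

```python
import random

def toy_denoiser(sample, target):
    """Hypothetical stand-in for a learned network: returns the noise still present in `sample`."""
    return [s - t for s, t in zip(sample, target)]

def iterative_denoise(target, num_steps=50, seed=0):
    """Start from Gaussian noise and remove part of the predicted noise at every step."""
    rng = random.Random(seed)
    # Initial sample drawn from a normal distribution.
    sample = [rng.gauss(0.0, 1.0) for _ in target]
    for step in range(num_steps):
        predicted_noise = toy_denoiser(sample, target)
        # Assumed schedule: remove a growing fraction, so the last step removes all remaining noise.
        step_size = 1.0 / (num_steps - step)
        sample = [s - step_size * n for s, n in zip(sample, predicted_noise)]
    return sample

clean = [0.5, -1.0, 2.0]
print(iterative_denoise(clean))
```

A real diffusion model replaces `toy_denoiser` with a trained neural network that never sees the target, and operates on image or video latents rather than a short list of numbers.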

The Stable Video Diffusion Model follows a strategy not previously applied to generative video models: it relies on latent video diffusion baselines with a fixed architecture and a fixed training strategy, and then assesses the effect of curating the data. The Stable Video Diffusion Model aims to make the following contributions to the field of generative video modeling.

- To present a systematic and effective data curation workflow that turns a large collection of uncurated video samples into a high-quality dataset for training generative video models.

- To train state-of-the-art image-to-video and text-to-video models that outperform existing frameworks.

- To conduct domain-specific experiments that probe the model's 3D understanding and its strong motion prior.

The Stable Video Diffusion Model builds on two foundations: Latent Video Diffusion Models and data curation techniques.

Latent Video Diffusion Models

Latent Video Diffusion Models, or Video-LDMs, train the primary generative model in a latent space with reduced computational complexity, and most Video-LDMs use a pretrained text-to-image model with temporal mixing layers added to the pretrained architecture. As a result, most Video-LDMs either train only the temporal layers or skip the training process altogether, unlike the Stable Video Diffusion Model, which fine-tunes the entire framework. Furthermore, for text-to-video synthesis, the Stable Video Diffusion Model conditions itself directly on a text prompt, and the results indicate that the resulting framework can easily be fine-tuned into a multi-view synthesis model or an image-to-video model.
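The role of temporal mixing layers can be sketched in a few lines of plain Python. This is only an illustration under stated assumptions, not the actual Video-LDM architecture: `spatial_layer` is a hypothetical stand-in for a pretrained per-frame image layer, and the blend factor `alpha` and the mean-over-frames mixing rule are invented for the example.

```python
def spatial_layer(frame):
    """Hypothetical stand-in for a pretrained image layer applied to one frame."""
    return [2.0 * x for x in frame]

def temporal_mix(frames, alpha=0.5):
    """Blend every frame's features with the mean over all frames (mixing across time)."""
    num_frames = len(frames)
    dim = len(frames[0])
    mean = [sum(f[i] for f in frames) / num_frames for i in range(dim)]
    return [[alpha * f[i] + (1.0 - alpha) * mean[i] for i in range(dim)] for f in frames]

def video_block(frames, alpha=0.5):
    """Spatial layer per frame (frames treated independently), then temporal mixing."""
    spatial_out = [spatial_layer(f) for f in frames]
    return temporal_mix(spatial_out, alpha)

frames = [[1.0, 0.0], [3.0, 0.0]]  # two frames, two features each
print(video_block(frames))          # frames now share information across time
print(video_block(frames, alpha=1.0))  # alpha = 1.0 disables mixing: pure image model
```

With `alpha = 1.0` the block behaves exactly like the per-frame image model, which is one way to see why a pretrained image model is a natural starting point for a video model.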

Data Curation

Data curation is an integral part not only of the Stable Video Diffusion Model but of generative models as a whole, because pretraining large models on large-scale datasets is essential for boosting performance across different tasks, including language modeling, discriminative text-image modeling, and much more. Data curation has been applied successfully to generative image models by leveraging efficient language-image representations, although it has never been the focus of work on generative video models. Developers face several hurdles when curating data for generative video models, and to address these challenges, the Stable Video Diffusion Model implements a three-stage training strategy, resulting in enhanced results and a significant boost in performance.

Data Curation for High Quality Video Synthesis

As discussed in the previous section, the Stable Video Diffusion Model implements a three-stage training strategy. Stage I is an image pretraining stage that uses a 2D text-to-image diffusion model. Stage II is video pretraining, in which the framework trains on a large amount of video data. Finally, Stage III is video fine-tuning, in which the model is refined on a small subset of high-quality, high-resolution videos.
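The three stages can be written down as a small configuration sketch. The stage names, data descriptions, and the `run_pipeline` helper below are hypothetical labels chosen for illustration; they only capture the ordering described above, where each stage starts from the checkpoint produced by the previous one.

```python
from dataclasses import dataclass

@dataclass(frozen=True)
class Stage:
    name: str
    data: str
    trains_on_video: bool

# Hypothetical description of the three-stage strategy.
STAGES = [
    Stage("image_pretraining", "text-image pairs", False),
    Stage("video_pretraining", "large video dataset", True),
    Stage("video_finetuning", "small high-quality, high-resolution video subset", True),
]

def run_pipeline(stages):
    """Chain the stages: every stage is initialized from the previous checkpoint."""
    checkpoint = "random_init"
    history = []
    for stage in stages:
        checkpoint = f"{checkpoint} -> {stage.name}"
        history.append(checkpoint)
    return history

for line in run_pipeline(STAGES):
    print(line)
```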

However, before the Stable Video Diffusion Model runs these three stages, it is vital to process and annotate the data, since it serves as the basis for Stage II, the video pre-training stage, and plays a critical role in ensuring optimal output. To ensure maximum efficiency, the framework first implements a cascaded cut detection pipeline at three different FPS (frames per second) levels, and the need for this pipeline is demonstrated in the following image.
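One way to see why cut detection at several frame rates helps is the toy sketch below. It is an assumption-laden illustration, not the pipeline used by the framework: each frame is reduced to a single hypothetical brightness value, lower frame rates are imitated by comparing frames that are `stride` positions apart, and the strides and threshold are invented.

```python
def cascaded_cut_detection(frames, strides=(1, 2, 4), threshold=0.5):
    """Detect cuts at several sampling rates, from fine to coarse.

    A coarser pass only reports a cut if no finer pass already found one
    inside the same interval, so each cut is reported once.
    """
    cuts = []
    for stride in strides:
        for i in range(stride, len(frames), stride):
            already_found = any(i - stride < c <= i for c in cuts)
            changed = abs(frames[i] - frames[i - stride]) > threshold
            if changed and not already_found:
                cuts.append(i)
    return sorted(cuts)

hard_cut = [0.0, 0.0, 0.0, 1.0, 1.0, 1.0, 1.0, 1.0]
slow_fade = [0.0, 0.2, 0.4, 0.6, 0.8, 1.0, 1.0, 1.0, 1.0]

print(cascaded_cut_detection(hard_cut))   # found by the finest pass
print(cascaded_cut_detection(slow_fade))  # only visible at the coarsest pass
```

In the fade example, neighboring frames never differ by more than the threshold, so a single-rate detector would treat the whole sequence as one clip. Comparing frames that are further apart exposes the transition.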

Next, the Stable Video Diffusion Model annotates each video clip using three different synthetic captioning methods. The following table compares the datasets used in the Stable Video Diffusion framework before and after the filtering process.

Stage I : Image Pre-Training

The first stage in the three-stage pipeline implemented in the Stable Video Diffusion Model is image pre-training. To achieve this, the initial Stable Video Diffusion framework is grounded on a pretrained image diffusion model, namely Stable Diffusion 2.1, which equips it with stronger visual representations.

Stage II : Video Pre-Training

The second stage is the video pre-training stage, and it builds on the finding that data curation in multimodal image modeling often leads to better results and enhanced efficiency, along with powerful discriminative and generative image models. However, owing to the lack of similarly powerful off-the-shelf representations for filtering out unwanted samples for generative video models, the Stable Video Diffusion Model relies on human preferences as input signals for creating an appropriate dataset for pre-training the framework. The following figure shows the positive effect of pre-training the framework on a curated dataset, which helps boost the overall performance of video pre-training on smaller datasets.

To be more specific, the framework uses different methods to curate subsets of its Large Video Dataset (LVD), and considers the ranking of latent video diffusion models trained on these subsets. The Stable Video Diffusion framework also finds that training on curated datasets helps boost the performance of the framework, and of diffusion models in general. Furthermore, the data curation strategy also works on larger, more relevant, and highly practical datasets. The following figure demonstrates the positive effect of pre-training the framework on a curated dataset, which helps boost the overall performance of video pre-training on smaller datasets.
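As a rough illustration of the curation idea described in this section, the sketch below filters clips by an annotation score and then ranks candidate training sets by pairwise human preference. Everything specific here is an assumption made for the example: the `motion` score, the keep fraction, and the vote format are hypothetical and are not taken from the framework.

```python
def keep_top_fraction(clips, score_key, keep_fraction):
    """Keep the best-scoring fraction of clips according to one annotation."""
    ranked = sorted(clips, key=lambda clip: clip[score_key], reverse=True)
    keep = max(1, int(len(ranked) * keep_fraction))
    return ranked[:keep]

def rank_by_preference(votes):
    """Rank models by number of wins in pairwise human comparisons.

    `votes` is a list of (winner, loser) pairs; ties are broken by name.
    """
    wins = {}
    for winner, loser in votes:
        wins[winner] = wins.get(winner, 0) + 1
        wins.setdefault(loser, 0)
    return sorted(wins, key=lambda name: (-wins[name], name))

clips = [
    {"id": "a", "motion": 0.1},
    {"id": "b", "motion": 0.9},
    {"id": "c", "motion": 0.5},
    {"id": "d", "motion": 0.7},
]
curated = keep_top_fraction(clips, "motion", keep_fraction=0.5)
print([clip["id"] for clip in curated])

votes = [("curated", "uncurated"), ("curated", "uncurated"), ("uncurated", "curated")]
print(rank_by_preference(votes))
```

The point of the second function is that the filter settings are not chosen by looking at the data alone: models trained on each candidate subset are compared by human raters, and the subset whose model wins most often is preferred.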

Stage III : High-Quality Fine-tuning

Up to Stage II, the Stable Video Diffusion framework focuses on improving performance through video pre-training. In the third stage, the emphasis shifts to optimizing performance after high-quality video fine-tuning, and to how the transition from Stage II to Stage III affects the final result. In Stage III, the framework draws on training techniques borrowed from latent image diffusion models and increases the resolution of the training examples. To analyze the effectiveness of this approach, the framework compares three identical models that differ only in their initialization. The first model is initialized from pretrained image-model weights and skips the video pre-training process, while the remaining two are initialized with weights borrowed from other latent video models.

Results and Findings

It is time to look at how the Stable Video Diffusion framework performs on real-world tasks, and how it compares against current state-of-the-art frameworks. The framework first uses the optimal data approach to train a base model, and then fine-tunes it to produce several state-of-the-art models, each of which performs a specific task.

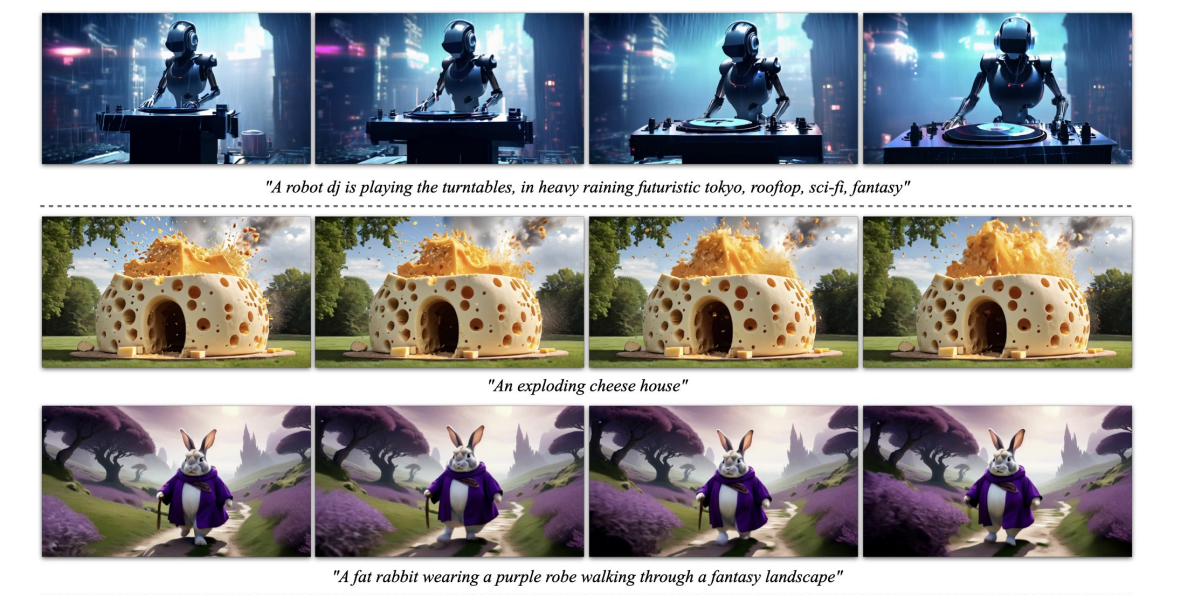

The image above shows high-resolution image-to-video samples generated by the framework, while the following figure demonstrates the framework's ability to generate high-quality text-to-video samples.

Pre-Trained Base Model

As discussed earlier, the Stable Video Diffusion model is built on the Stable Diffusion 2.1 framework. Recent findings show that, when training image diffusion models, it is crucial to adapt the noise schedule and increase the noise in order to obtain images with higher resolution. Thanks to this approach, the Stable Video Diffusion base model learns powerful motion representations and, in the process, outperforms baseline models for text-to-video generation in a zero-shot setting. The results are displayed in the following table.

Frame Interpolation and Multi-View Generation

The Stable Video Diffusion framework fine-tunes the image-to-video model on multi-view datasets to obtain multiple novel views of an object, and this model is known as SVD-MV, or the Stable Video Diffusion Multi-View model. The original SVD model is fine-tuned with the help of two datasets so that the framework takes a single image as input and returns a sequence of multi-view images as output.

As can be seen in the following images, the Stable Video Diffusion Multi-View framework delivers high performance relative to the Scratch Multi-View (Scratch-MV) framework, and the results clearly demonstrate SVD-MV's ability to use the learnings obtained from the original SVD framework for multi-view image generation. The results also indicate that running the model for a relatively small number of iterations is enough to deliver optimal results, as is the case with most models fine-tuned from the SVD framework.

In the above figure, the metrics are indicated on the left-hand side and, as can be seen, the Stable Video Diffusion Multi-View framework outperforms the Scratch-MV and SD2.1 Multi-View frameworks by a decent margin. The second image shows the effect of the number of training iterations on the overall performance of the framework in terms of CLIP score, and the SVD-MV framework delivers consistently strong results.

Final Thoughts

In this article, we have talked about Stable Video Diffusion, a latent video diffusion model capable of generating high-resolution, state-of-the-art image-to-video and text-to-video content. The Stable Video Diffusion Model follows a strategy not previously applied to generative video models: it relies on latent video diffusion baselines with a fixed architecture and a fixed training strategy, and then assesses the effect of curating the data.

We have talked about how latent diffusion models trained for synthesizing 2D images have improved the abilities and efficiency of generative video models by adding temporal layers and fine-tuning the models on small datasets of high-quality videos. To assemble the pre-training data, the framework conducts scaling studies and follows systematic data collection practices, and ultimately proposes a method to curate a large amount of video data, converting noisy videos into input data suitable for generative video models.

Furthermore, the Stable Video Diffusion framework employs three distinct video model training stages that are analyzed independently to assess their impact on the framework's performance. The framework ultimately produces a video representation powerful enough to fine-tune models for optimal video synthesis, and the results are comparable to state-of-the-art video generation models already in use.