ONNX is an open standard for representing computer vision and machine learning models. The ONNX standard provides a common format enabling the transfer of models between different machine learning frameworks such as TensorFlow, PyTorch, MXNet, and others.

ONNX (Open Neural Network Exchange) is an open-source format. It promotes interoperability between different deep learning frameworks for simple model sharing and deployment.

This interoperability is crucial for developers and researchers. It allows models to be used across different frameworks without retraining or significant modifications.

Key Aspects of ONNX:

- Format Flexibility. ONNX supports a wide range of model types, including deep learning and traditional machine learning.

- Framework Interoperability. Models trained in one framework can be exported to ONNX format and imported into another compatible framework. This is particularly useful for deployment or for continuing development in a different environment.

- Performance Optimizations. ONNX models can benefit from optimizations available in different frameworks and run efficiently on various hardware platforms.

- Community-Driven. Being an open-source project, it’s supported and developed by a community of companies and individual contributors. This ensures a constant influx of updates and improvements.

- Tools and Ecosystem. Numerous tools are available for converting, visualizing, and optimizing ONNX models. Developers can also find libraries for running these models on different AI hardware, including GPU and CPU.

- Versioning and Compatibility. ONNX is regularly updated with new versions. Each version maintains a level of backward compatibility to ensure that models created with older versions remain usable.

This standard is particularly useful in scenarios where flexibility and interoperability between different tools and platforms are essential. For instance, a model trained in a research setting might be exported to ONNX for deployment in a production environment built on a different framework.

Real-World Applications of ONNX

We can view ONNX as a kind of Rosetta stone of artificial intelligence (AI). It offers flexibility and interoperability across various frameworks and tools. Its design enables seamless model portability and optimization, making it a valuable asset within and across various industries.

Below are some of the applications in which ONNX has already begun to make a significant impact. Real-world use cases are broad, ranging from facial recognition and pattern recognition to object recognition:

- Healthcare. In medical imaging, ONNX facilitates the use of deep learning models for diagnosing diseases from MRI or CT scans. For instance, a model trained in TensorFlow for tumor detection can be converted to ONNX for deployment in clinical diagnostic tools that operate on a different framework.

- Automotive. ONNX aids in developing autonomous vehicle systems and self-driving cars. It allows for the integration of object detection models into real-time driving decision systems. It also provides a level of interoperability regardless of the original training environment.

- Retail. ONNX enables recommendation systems trained in one framework to be deployed in diverse e-commerce platforms. This allows retailers to enhance customer engagement through personalized shopping experiences.

- Manufacturing. In predictive maintenance, ONNX models can forecast equipment failures. You can train a model in one framework and deploy it in factory systems using another, ensuring operational efficiency.

- Finance. Fraud detection models developed in one framework can be easily transferred for integration into banking systems. This is a vital component in implementing robust, real-time fraud prevention.

- Agriculture. ONNX assists in precision farming by integrating crop and soil models into various agricultural management systems, aiding in efficient resource utilization.

- Entertainment. ONNX can transfer behavior prediction models into game engines. This has the potential to enhance player experience through AI-driven personalization and interactions.

- Education. Adaptive learning systems can integrate AI models that personalize learning content, allowing for different learning styles across various platforms.

- Telecommunications. ONNX streamlines the deployment of network optimization models. This allows operators to optimize bandwidth allocation and streamline customer service in telecom infrastructures.

- Environmental Monitoring. ONNX supports climate change models, allowing them to be shared and deployed across platforms. Environmental models are notorious for their complexity, especially when trying to combine them to make predictions.

Popular Frameworks and Tools Compatible with ONNX

A cornerstone of ONNX’s usefulness is its ability to seamlessly interface with frameworks and tools already being used in different applications.

This compatibility ensures that AI developers can leverage the strengths of diverse platforms while maintaining model portability and efficiency.

With that in mind, here’s a list of notable and compatible frameworks and tools:

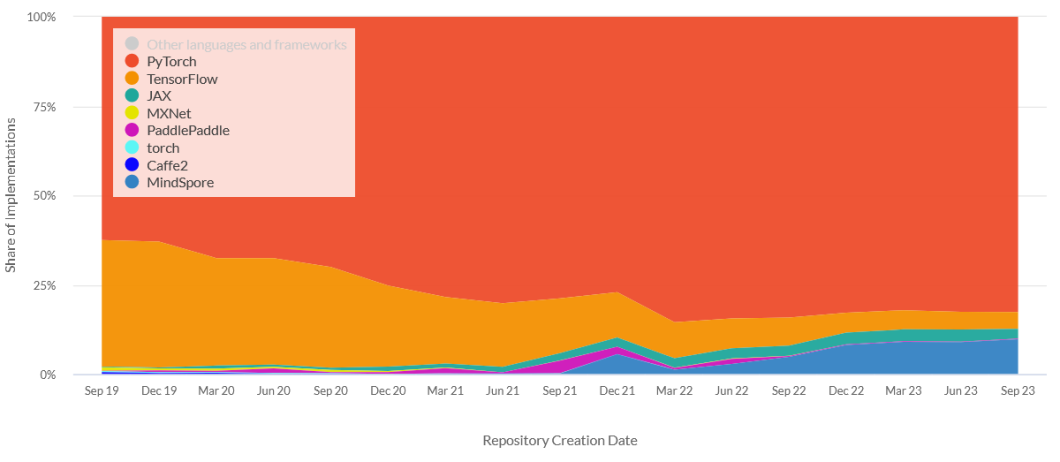

- PyTorch. A widely used open-source machine learning library from Facebook. PyTorch is known for its ease of use and dynamic computational graph. The community favors PyTorch for research and development because of its flexibility and intuitive design.

- TensorFlow. Developed by Google, TensorFlow is a comprehensive framework. TensorFlow offers both high-level and low-level APIs for building and deploying machine learning models.

- Microsoft Cognitive Toolkit (CNTK). A deep learning framework from Microsoft. Known for its efficiency in training convolutional neural networks, CNTK is especially notable in speech and image recognition tasks.

- Apache MXNet. A flexible and efficient open-source deep learning framework supported by Amazon. MXNet deploys deep neural networks on a wide array of platforms, from cloud infrastructure to mobile devices.

- Scikit-Learn. A popular library for traditional machine learning algorithms. While not directly compatible, models from Scikit-Learn can be converted with sklearn-onnx.

- Keras. A high-level neural networks API, Keras operates on top of TensorFlow, CNTK, and Theano. It focuses on enabling fast experimentation.

- Apple Core ML. For integrating ML models into iOS applications, models can be converted from various frameworks to ONNX and then to Core ML format.

- ONNX Runtime. A cross-platform, high-performance scoring engine. It optimizes model inference across hardware and is crucial for deployment.

- NVIDIA TensorRT. An SDK for high-performance deep learning inference. TensorRT includes an ONNX parser and is used for optimized inference on NVIDIA GPUs.

- ONNX.js. A JavaScript library for running ONNX models in browsers and on Node.js. It allows web-based ML applications to leverage ONNX models.

Understanding the Intricacies and Implications of ONNX Runtime in AI Deployments

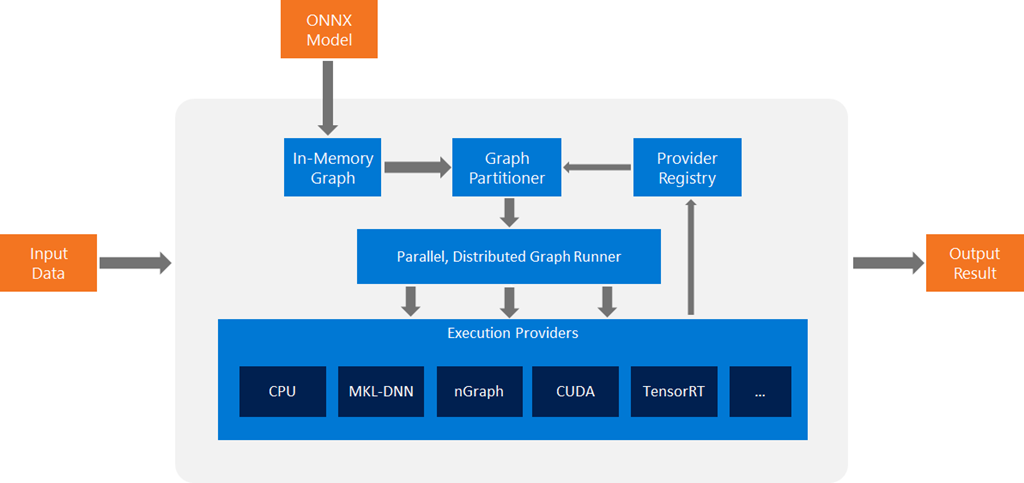

ONNX Runtime is a performance-focused engine for running models. It ensures efficient and scalable execution across a variety of platforms and hardware. With a hardware-agnostic architecture, it allows AI models to be deployed consistently across different environments.

At a high level, the system first converts an ONNX model into an in-memory graph representation. It then applies provider-independent optimizations and partitions the graph into subgraphs based on the available execution providers. Each subgraph is assigned to an execution provider that can handle the operations it contains.
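
The partitioning step can be pictured with a small pure-Python sketch. This is a deliberately simplified, greedy model of the idea and not ONNX Runtime's actual algorithm: the `Subgraph` class, the `partition` function, and the `ToyAccelerator` provider are all hypothetical names invented for the illustration, and the graph is reduced to a topologically ordered list of nodes.

```python
from dataclasses import dataclass, field


@dataclass
class Subgraph:
    provider: str
    nodes: list = field(default_factory=list)


def partition(nodes, providers, default_provider="CPU"):
    """Greedily split an ordered node list into per-provider subgraphs.

    nodes: list of (node_name, op_type) tuples in topological order.
    providers: list of (provider_name, supported_op_types) in priority order.
    Operators that no listed provider claims fall back to default_provider.
    """
    subgraphs = []
    for name, op_type in nodes:
        chosen = default_provider
        for provider_name, supported in providers:
            if op_type in supported:
                chosen = provider_name
                break
        # Extend the current subgraph while the provider stays the same.
        if subgraphs and subgraphs[-1].provider == chosen:
            subgraphs[-1].nodes.append(name)
        else:
            subgraphs.append(Subgraph(chosen, [name]))
    return subgraphs


toy_graph = [
    ("conv1", "Conv"),
    ("relu1", "Relu"),
    ("nms", "NonMaxSuppression"),
    ("fc", "Gemm"),
]
accelerator = ("ToyAccelerator", {"Conv", "Relu", "Gemm"})
for sg in partition(toy_graph, [accelerator]):
    print(sg.provider, sg.nodes)
```

In this toy run the accelerator takes the convolution and activation, the unsupported operator falls back to the default provider, and the final layer returns to the accelerator, giving three subgraphs.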

The design of ONNX Runtime allows multiple threads to invoke the Run() method concurrently on the same inference session, because all kernels are stateless. The design also guarantees that the default execution provider supports every operator. Execution providers may use their own internal tensor representations, but they are responsible for converting these at the boundaries of their subgraphs.

The positive implications for AI practitioners, developers, and businesses are manifold:

- Ability to execute models across various hardware and execution providers.

- Graph partitioning and optimizations improve efficiency and performance.

- Compatibility with multiple custom accelerators and runtimes.

- Supports a wide range of applications and environments, from cloud to edge.

- Multiple threads can run inference on the same session simultaneously.

- Guarantees support for all operators, providing reliability in model execution.

- Execution providers manage their own memory allocators, optimizing resource utilization.

- Easy integration with various frameworks and tools for streamlined AI workflows.

Benefits and Challenges of Adopting the ONNX Model

As with any new technology, ONNX comes with its own challenges and considerations alongside its benefits.

Benefits

- Framework Interoperability. Facilitates the use of models across different ML frameworks, enhancing flexibility.

- Deployment Efficiency. Streamlines the process of deploying models across various platforms and devices.

- Community Support. Benefits from a growing, collaborative community contributing to its development and support.

- Optimization Opportunities. Offers model optimizations for improved performance and efficiency.

- Hardware Agnostic. Compatible with a wide range of hardware, ensuring broad applicability.

- Consistency. Maintains model fidelity across different environments and frameworks.

- Regular Updates. Continuously evolving with the latest advancements in AI and machine learning.

Challenges

- Complexity in Conversion. Converting models to ONNX format can be complex and time-consuming, especially for models using non-standard layers or operations.

- Version Compatibility. Ensuring compatibility with different versions of ONNX and ML frameworks can be challenging.

- Limited Support for Certain Operations. Some advanced or proprietary operations may not be fully supported.

- Performance Overheads. In some cases, there can be performance overheads in converting and running models.

- Learning Curve. Requires understanding of the ONNX format and compatible tools, adding to the learning curve for teams.

- Dependency on Community. Some features may rely on community contributions for updates and fixes, which can vary in timeliness.

- Intermittent Compatibility Issues. Occasional compatibility issues with certain frameworks or tools can arise, requiring troubleshooting.

ONNX – An Open Standard Driven by a Thriving Community

ONNX is a popular open-source framework, and community involvement is a key component of its continued development and success. Its GitHub project has almost 300 contributors, and its current user base is over 19.4k.

With 27 releases and over 3.6k forks at the time of writing, it’s a dynamic and ever-evolving project. There have also been over 3,000 pull requests (with 40 still active) and over 2,300 resolved issues (with 268 active).

The involvement of a diverse range of contributors and users has made it a vibrant and progressive project. Initiatives by ONNX, individual contributors, major partners, and other parties are keeping it that way:

- Broad Industry Adoption. ONNX is popular among both individual developers and major tech companies for various AI and ML applications. Examples include:

  - Microsoft. Uses ONNX in various services, including Azure Machine Learning and Windows ML.

  - Facebook. As a founding member of the ONNX project, Facebook has integrated ONNX support in PyTorch, one of the leading deep learning frameworks.

  - IBM. Uses ONNX in its Watson services, enabling seamless model deployment across diverse platforms.

  - Amazon Web Services (AWS). Supports ONNX models in its machine learning services, such as Amazon SageMaker.

- Active Forum Discussions. The community participates in forums and discussions, providing support, sharing best practices, and guiding the direction of the project.

- Regular Community Meetings. ONNX holds regular community meetings, where members discuss developments and roadmaps and address community questions.

- Educational Resources. The community actively works on developing and sharing educational resources, tutorials, and documentation.

ONNX Case Studies

Numerous case studies have demonstrated ONNX’s effectiveness and impact in various applications. Here are two of the most significant ones from recent years:

Optimizing Deep Learning Model Training

Microsoft’s case study showcases how ONNX Runtime (ORT) can optimize the training of large deep-learning models like BERT. ORT implements memory usage optimizations by reusing buffer segments across a series of operations, such as gradient accumulation and weight update computations.

Among its most important findings was that ORT enabled training BERT with double the batch size compared to PyTorch, leading to more efficient GPU utilization and better performance. Additionally, ORT integrates the Zero Redundancy Optimizer (ZeRO) to reduce GPU memory consumption, further boosting batch size capabilities.

Failures and Risks in Model Converters

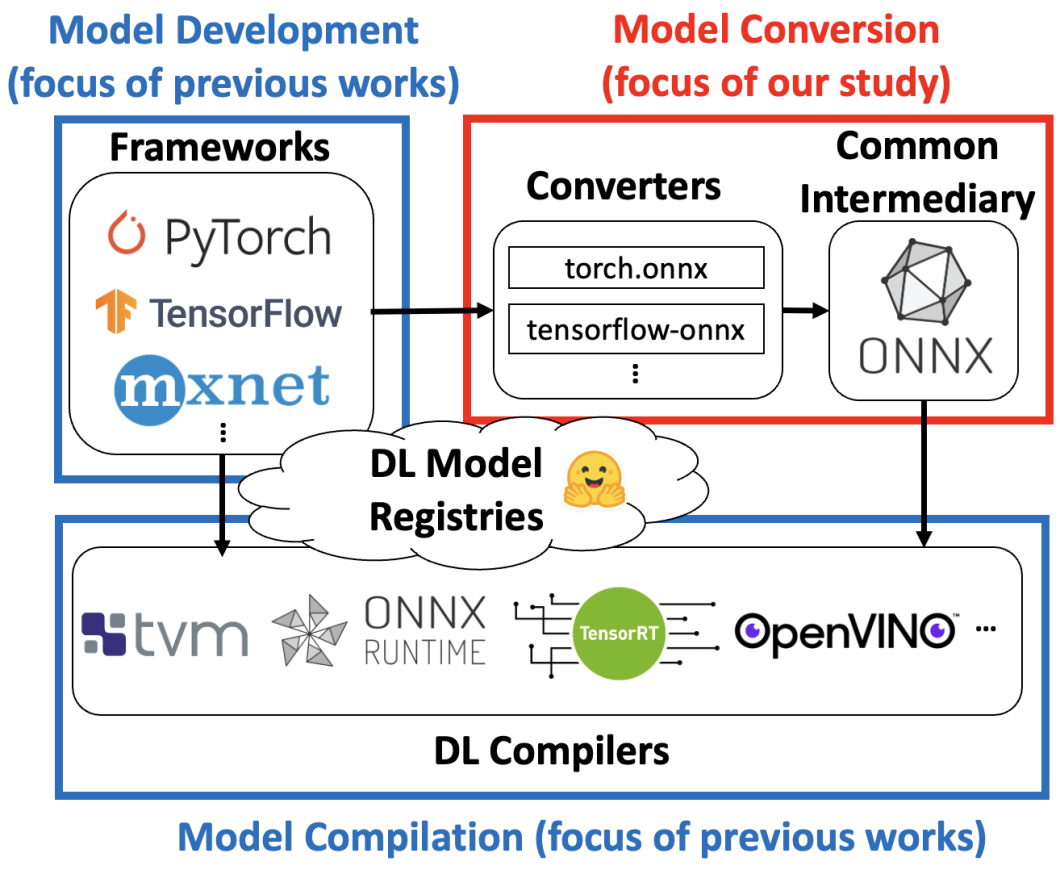

A study in the ONNX ecosystem focused on analyzing the failures and risks in deep learning model converters. This research highlights the growing complexity of the deep learning ecosystem, including the evolution of frameworks, model registries, and compilers.

In the study, the researchers point out the increasing importance of common intermediaries for interoperability as the ecosystem expands. The study addresses the challenges in maintaining compatibility between frameworks and DL compilers, illustrating how ONNX aids in navigating these complexities.

The research also delves into the nature and prevalence of failures in DL model converters, providing insights into the risks and opportunities for improvement in the ecosystem.

Getting Began

ONNX stands as a pivotal framework in open-source machine learning, fostering interoperability and collaboration across various AI platforms. Its versatile structure, supported by an extensive array of frameworks, empowers developers to deploy models efficiently. ONNX offers a standardized approach to bridging the gaps between frameworks and improving usability and model performance.