The human brain has spurred numerous investigations into the basic principles that govern our thoughts, feelings, and actions. At the heart of this exploration lies the concept of neuron activation. This process is fundamental to the transmission of information throughout our extensive neural network.

In this article, we’ll discuss the role that neuron activation plays in artificial intelligence and machine learning:

- What neuron activation is

- The biological concepts of the human brain vs. technical concepts

- Functions and real-world applications of neuron activation

- Current research trends and challenges

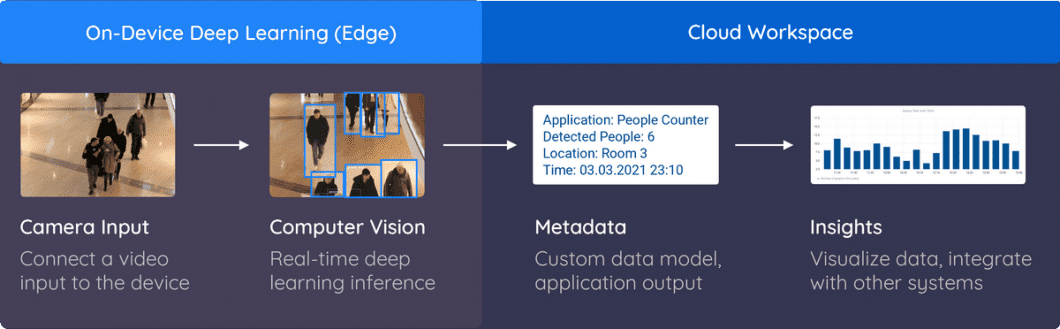


Neuron Activation: Neuronal Firing in the Brain

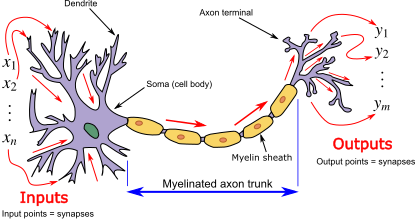

The human brain has roughly 100 billion neurons, each connected to thousands of other neurons through trillions of synapses. This complex network forms the basis for cognitive abilities, sensory perception, and motor functions. At the core of neuron firing is the action potential, an electrochemical signal that travels along the length of a neuron’s axon.

The process begins when a neuron receives excitatory or inhibitory signals from its synaptic connections. If the sum of these signals surpasses a certain threshold, an action potential is initiated. This electrical impulse travels rapidly down the axon, facilitated by the opening and closing of voltage-gated ion channels.

Upon reaching the axon terminals, the action potential triggers the release of neurotransmitters into the synapse. Neurotransmitters are chemical messengers that cross the synaptic gap and bind to receptors on the dendrites of neighboring neurons. This binding can either excite or inhibit the receiving neuron, influencing whether or not it will fire an action potential. The resulting interplay of excitatory and inhibitory signals forms the basis of information processing and transmission within the neural network.

Neuron firing isn’t a uniform process but a nuanced orchestration of electrical and chemical events. The frequency and timing of action potentials contribute to the coding of information in the brain. This firing and communicating is the foundation of our ability to process sensory input, form memories, and make decisions.

Neural Networks Replicate Biological Activation

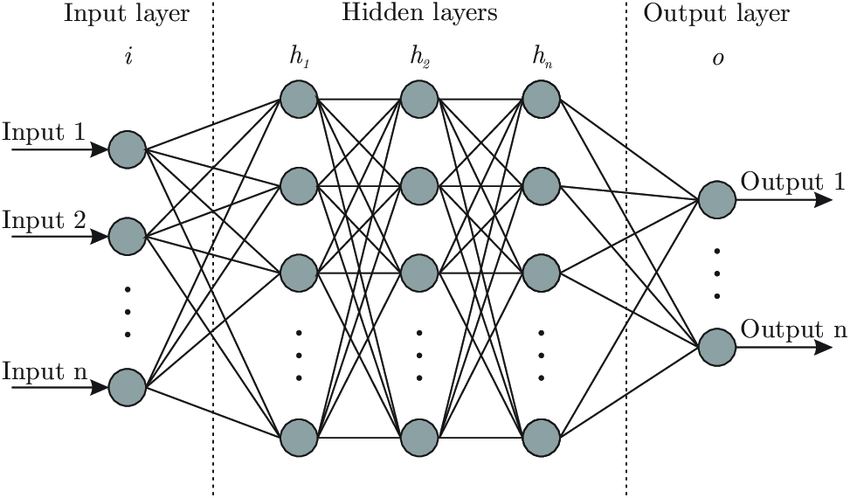

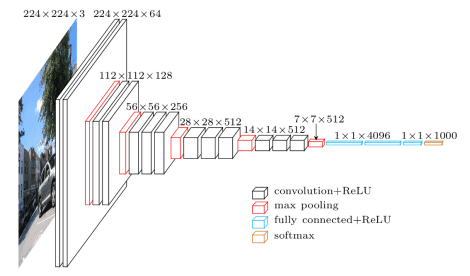

Activation functions play a pivotal role in enabling Artificial Neural Networks (ANNs) to learn from data and adapt to new patterns. By adjusting the weights of connections between neurons, ANNs can refine their responses to inputs. This gradually improves their ability to perform tasks such as image recognition, natural language processing (NLP), and speech recognition.

Inspired by the functioning of the human brain, ANNs leverage neuron activation to process information, make decisions, and learn from data. Activation functions, the mathematical operations applied inside neurons, introduce non-linearities to the network, enabling it to capture intricate patterns and relationships in complex datasets. This non-linearity is crucial for the network’s ability to learn and adapt.
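To make the idea concrete, here is a minimal sketch of a single artificial neuron: it computes a weighted sum of its inputs, adds a bias, and passes the result through an activation function. The function names (`neuron_output`, `identity`) and the example weights are illustrative assumptions, not taken from any particular library.

```python
import math


def sigmoid(z):
    """Squash any real number into the open interval (0, 1)."""
    return 1.0 / (1.0 + math.exp(-z))


def identity(z):
    """No activation: the neuron stays purely linear."""
    return z


def neuron_output(inputs, weights, bias, activation=sigmoid):
    """Weighted sum of the inputs plus a bias, passed through an activation."""
    z = sum(w * x for w, x in zip(weights, inputs)) + bias
    return activation(z)


# Hypothetical inputs and weights, chosen only for illustration.
inputs = [0.5, -1.0, 2.0]
weights = [0.4, 0.3, -0.2]
bias = 0.1

print("pre-activation:", neuron_output(inputs, weights, bias, activation=identity))
print("after sigmoid: ", neuron_output(inputs, weights, bias))
```

Swapping `sigmoid` for `identity` leaves only the weighted sum, which is why a network without non-linear activations can represent nothing more than a linear mapping.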

In a nutshell, neuron activation in machine learning is the fundamental mechanism that allows Artificial Neural Networks to emulate the adaptive and intelligent features observed in human brains.

Activation Synthesis Theory

According to the Activation-Synthesis Theory introduced by Allan Hobson and Robert McCarley in 1977, activation refers to the spontaneous firing of neurons in the brainstem during REM sleep. This early study found that spontaneous firing leads to random neural activity in various brain regions. This randomness is then synthesized by the brain into dream content.

In machine learning, particularly in ANNs, activation functions play an essential role: they determine whether a neuron should fire, and the output then passes to the next layer of neurons.

In both contexts, the connection lies in the idea of using neural activation to interpret signals. In ML, activation functions are designed, and the networks around them trained, to extract patterns and information from input data. Unlike the random firing in the brain during dreaming, the activations in ANNs are purposeful and directed toward specific tasks.

While the Activation-Synthesis Theory itself doesn’t directly inform machine learning practices, the analogy highlights the concept of interpreting neural activations or signals in different contexts. One applies to neuroscience to explain dreaming and the other to the field of AI and ML.

Types of Neural Activation Functions

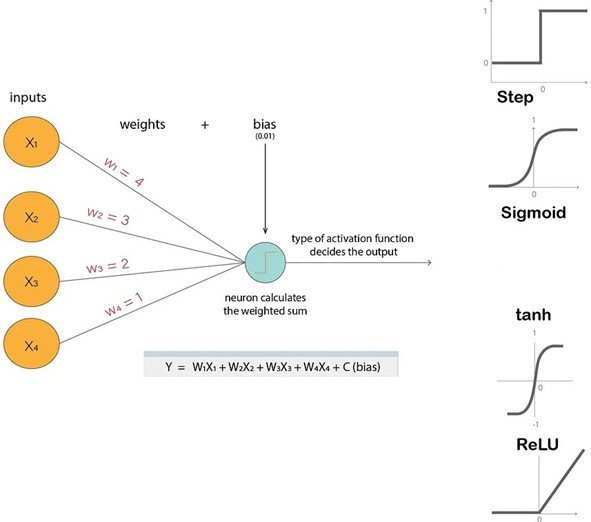

Neural activation functions determine whether a neuron should be activated or not. These functions introduce non-linearity to the network, enabling it to learn and model complex relationships in data. Common types of neural activation functions include:

- Sigmoid Function. A smooth, S-shaped function that outputs values between 0 and 1. It is commonly used in classification tasks, where the output can be read as a probability.

- Hyperbolic Tangent (tanh) Function. Similar to the sigmoid function, but outputs values between -1 and 1. It is often used in recurrent neural networks.

- ReLU (Rectified Linear Unit) Function. A more recent activation function that outputs the input directly if it is positive, and zero otherwise. This helps mitigate vanishing gradients in neural networks.

- Leaky ReLU Function. A variant of ReLU that allows a small, non-zero output for negative inputs (the input scaled by a small slope), addressing the problem of dead neurons.
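The four functions listed above can be sketched in a few lines of plain Python. The `negative_slope` default of 0.01 is a common choice but is an assumption here, not something the article specifies.

```python
import math


def sigmoid(x):
    """S-shaped curve with outputs in (0, 1)."""
    return 1.0 / (1.0 + math.exp(-x))


def tanh(x):
    """S-shaped curve with outputs in (-1, 1), centered on zero."""
    return math.tanh(x)


def relu(x):
    """Pass positive inputs through unchanged; clamp everything else to zero."""
    return max(0.0, x)


def leaky_relu(x, negative_slope=0.01):
    """Like ReLU, but negative inputs are scaled by a small slope instead of zeroed."""
    return x if x > 0 else negative_slope * x


# Compare the four functions on a few sample inputs.
for x in (-2.0, 0.0, 2.0):
    print(f"x={x:+.1f}  sigmoid={sigmoid(x):.4f}  tanh={tanh(x):+.4f}  "
          f"relu={relu(x):.2f}  leaky_relu={leaky_relu(x):+.2f}")
```

Note that `leaky_relu` returns a small negative value for negative inputs, so the neuron keeps a non-zero gradient instead of going "dead".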

Challenges of Neuron Activation

Overfitting Problem

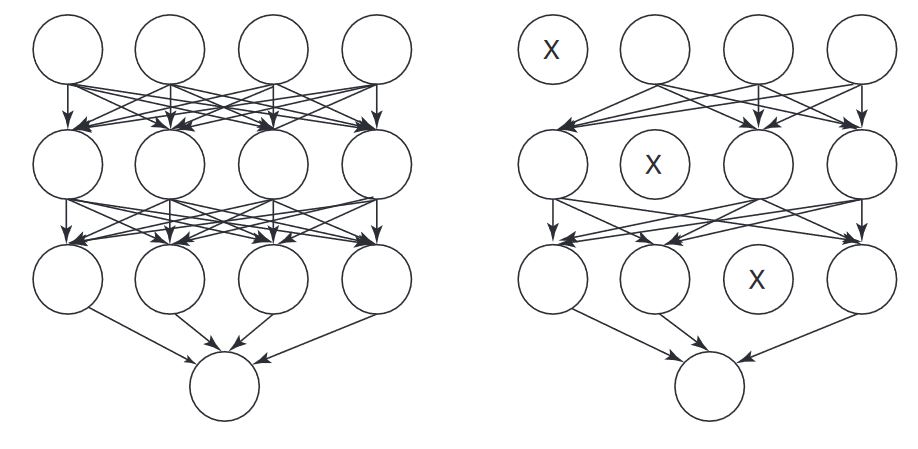

Overfitting occurs when a model learns the training data too well, capturing noise and details specific to that dataset but failing to generalize effectively to new, unseen data. In the context of neuron activation, this can hinder the performance and reliability of ANNs.

When activation functions and the network’s parameters are fine-tuned to fit the training data too closely, the risk of overfitting increases. This is because the network may become overly specialized in the details of the training dataset. In turn, it loses the ability to generalize well to different data distributions.

To reduce overfitting, researchers employ techniques such as regularization and dropout. Regularization introduces penalties for overly complex models, discouraging the network from fitting the noise in the training data. Dropout involves randomly “dropping out” neurons during training, temporarily preventing them from contributing to the learning process (see the example below). These techniques encourage the network to capture essential patterns in the data while avoiding the memorization of noise.
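As a rough illustration of both techniques, the sketch below implements an L2 weight penalty and "inverted" dropout on a list of activations. The function names, the penalty `strength`, and the `drop_prob` default are assumptions chosen for the example; deep learning frameworks ship their own tuned versions.

```python
import random


def l2_penalty(weights, strength=0.01):
    """Regularization term added to the loss: grows with the squared weights."""
    return strength * sum(w * w for w in weights)


def dropout(activations, drop_prob=0.5, training=True, rng=random):
    """Inverted dropout: zero each activation with probability drop_prob.

    Surviving activations are divided by the keep probability so the expected
    value of each output matches its input. At inference time (training=False)
    the activations pass through unchanged.
    """
    if not 0.0 <= drop_prob < 1.0:
        raise ValueError("drop_prob must be in [0, 1)")
    if not training or drop_prob == 0.0:
        return list(activations)
    keep_prob = 1.0 - drop_prob
    return [a / keep_prob if rng.random() < keep_prob else 0.0
            for a in activations]


hidden = [0.2, 1.5, 0.7, 0.9]
print("training: ", dropout(hidden, drop_prob=0.5))
print("inference:", dropout(hidden, training=False))
print("L2 term:  ", l2_penalty([1.0, -2.0], strength=0.1))
```

Because a different random subset of neurons is silenced on every training step, no single neuron can be relied on to memorize a quirk of the training set.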

Increasing Complexity

As ANNs grow in size and depth to handle increasingly complex tasks, the choice and design of activation functions become crucial. Complexity in neuron activation arises from the need to model highly nonlinear relationships present in real-world data. Traditional activation functions like sigmoid and tanh have limitations in capturing complex patterns. This is because of their saturation behavior: for inputs of large magnitude their slope approaches zero, which can lead to the vanishing gradient problem in deep networks.

This limitation has driven the development of more sophisticated activation functions like ReLU and its variants. These can better handle complex, nonlinear mappings.
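The saturation effect can be seen with a small numerical sketch. It multiplies the activation's derivative across several layers, which is a deliberate simplification: it assumes every layer sees the same pre-activation value and ignores the weight matrices that also scale gradients in a real network. The helper name `chained_gradient` is hypothetical.

```python
import math


def sigmoid(x):
    return 1.0 / (1.0 + math.exp(-x))


def sigmoid_grad(x):
    """Derivative of the sigmoid; it peaks at 0.25 when x == 0."""
    s = sigmoid(x)
    return s * (1.0 - s)


def relu_grad(x):
    """Derivative of ReLU: 1 for positive inputs, 0 otherwise."""
    return 1.0 if x > 0 else 0.0


def chained_gradient(grad_fn, pre_activation, depth):
    """Product of activation derivatives across `depth` layers (weights ignored)."""
    g = 1.0
    for _ in range(depth):
        g *= grad_fn(pre_activation)
    return g


for depth in (1, 5, 10):
    print(f"depth={depth:2d}  "
          f"sigmoid={chained_gradient(sigmoid_grad, 0.0, depth):.2e}  "
          f"relu={chained_gradient(relu_grad, 1.0, depth):.2e}")
```

Even in the sigmoid's best case (a derivative of 0.25 at zero), the product shrinks geometrically with depth, while ReLU passes a gradient of 1 through every active unit.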

However, as networks become more complex, the challenge shifts to choosing activation functions that strike a balance between expressiveness and avoiding issues like dead neurons or exploding gradients. Deep neural networks with numerous layers and complex activation functions increase computational demands and may lead to challenges in training, thus requiring careful optimization and architectural considerations.

Real-World Applications of Neuron Activation

The impact of neuron activation extends far beyond machine learning and artificial intelligence. We’ve seen neuron activation applied across various industries, including:

Finance Use Cases

- Fraud Detection. Activation functions can help identify anomalous patterns in financial transactions. By applying activation functions in neural networks, models can learn to discern subtle irregularities that may indicate fraudulent activities.

- Credit Scoring Models. Neuron activation contributes to credit scoring models by processing financial data inputs to assess one’s creditworthiness. It contributes to the complex decision-making process that determines credit scores, impacting lending decisions.

- Market Forecasting. In market forecasting tools, activation functions assist in analyzing historical financial data and identifying trends. Neural networks with appropriate activation functions can capture intricate patterns in market behavior, thus assisting in making more informed investment decisions.

Healthcare Examples

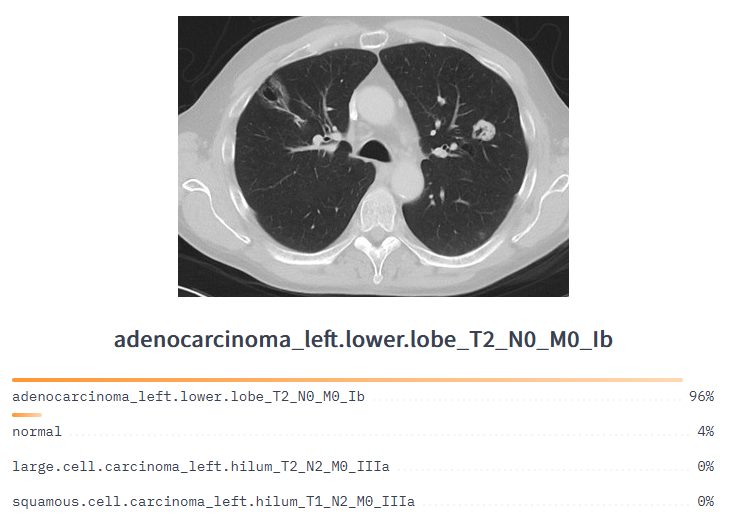

- Medical Imaging Analysis. Medical imaging tasks can apply neuron activation in instances such as abnormality detection in X-rays or MRIs. Activation functions contribute to the model’s ability to recognize patterns associated with different medical conditions.

- Drug Discovery. Neural networks in drug discovery utilize activation functions to predict the potential efficacy of new compounds. By processing molecular data, these networks can assist researchers in identifying promising candidates for further exploration.

- Personalized Medicine. In personalized medicine, activation functions help tailor treatments based on one’s genetic and molecular profile. Neural networks can analyze various data sources to recommend therapeutic approaches.

Robotics

- Decision-Making. Activation functions enable robots to make decisions based on sensory input. By processing data from sensors, robots can react to their environment and make decisions in real time.

- Navigation. Neural networks with activation functions help the robot understand its surroundings and move safely by learning from sensory data.

- Human Interaction. Activation functions allow robots to respond to human gestures, expressions, or commands. The robot processes these inputs through neural networks.

Autonomous Vehicles

- Perception. Neuron activation is fundamental to the perception capabilities of self-driving vehicles. Neural networks use activation functions to process data from various sensors, including cameras and LiDAR, to recognize objects, pedestrians, and obstacles in the vehicle’s environment.

- Decision-Making. Activation functions contribute to the decision-making process in self-driving cars. They help interpret the perceived environment, assess potential risks, and make vehicle control and navigation decisions.

- Control. Activation functions assist in controlling the vehicle’s actions, like steering, acceleration, and braking. They contribute to the system’s overall ability to respond to changing road conditions.

Personalized Recommendations

- Product Suggestions. Recommender systems can process user behavior data and generate personalized product suggestions. By understanding user preferences, these systems improve the accuracy of product recommendations.

- Movie Recommendations. In entertainment, activation functions contribute to recommender systems that suggest movies based on individual viewing history and preferences. They help tailor recommendations to match users’ tastes.

- Content Personalization. Activation functions work in various content recommendation engines, providing personalized suggestions for articles, music, or other forms of content. This enhances user engagement and satisfaction by delivering content aligned with individual interests.

Research Trends in Neuron Activation

We’ve seen an emphasis on developing more expressive activation functions that are able to capture complex relationships between inputs and outputs, thereby enhancing the overall capabilities of ANNs. The exploration of non-linear activation functions, addressing challenges related to overfitting and model complexity, remains a focal point.

Additionally, researchers are delving into adaptive activation functions, contributing to the flexibility and generalizability of ANNs. These trends underscore the continuous evolution of neuron activation research, with a focus on advancing the capabilities and understanding of artificial neural networks.

- Integrating Biological Insights. Using neuroscientific knowledge in the design of activation functions, researchers aim to develop models that more closely resemble the brain’s neural circuitry.

- Developing More Expressive Activation Functions. Researchers are investigating activation functions that can capture more complex relationships between inputs and outputs, thus enhancing the capabilities of ANNs in tasks such as image generation and natural language understanding.

- Exploring Non-Linear Activation Functions. Traditional activation functions such as sigmoid, tanh, and ReLU are already non-linear, but they transform the input signal in a fixed, predictable way. Researchers are exploring activation functions that exhibit richer non-linear behavior. These could potentially enable ANNs to learn more complex patterns and solve more challenging problems.

- Adaptive Activation Functions. Some activation functions are being developed to adapt their behavior based on the input data, further enhancing the flexibility and generalizability of ANNs.
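One well-known example of an adaptive activation is a parametric ReLU, where the slope for negative inputs is a parameter learned from data rather than a fixed constant. The sketch below fits that slope with plain gradient descent on a squared-error loss. The helper `fit_alpha`, the learning rate, and the toy samples are assumptions made for illustration; this is not a description of any specific research method.

```python
def prelu(x, alpha):
    """ReLU whose negative-side slope `alpha` is a learnable parameter."""
    return x if x > 0 else alpha * x


def prelu_grad_alpha(x):
    """Derivative of prelu(x, alpha) with respect to alpha."""
    return 0.0 if x > 0 else x


def fit_alpha(samples, alpha=0.0, lr=0.1, epochs=200):
    """Learn alpha by gradient descent on the mean squared error."""
    for _ in range(epochs):
        grad = 0.0
        for x, target in samples:
            error = prelu(x, alpha) - target
            grad += 2.0 * error * prelu_grad_alpha(x)
        alpha -= lr * grad / len(samples)
    return alpha


# Toy data generated with a negative-side slope of 0.2 (an arbitrary choice).
samples = [(-2.0, -0.4), (-1.0, -0.2), (1.0, 1.0), (2.0, 2.0)]
print("learned alpha:", round(fit_alpha(samples), 4))
```

Only the negative inputs contribute to the gradient for `alpha`, so the activation's shape on that side is determined entirely by the data it sees.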

Ethical Considerations and Challenges

The use of ANNs raises concerns related to data privacy, algorithmic bias, and the societal impacts of intelligent systems. Privacy issues arise because ANNs often require vast datasets, leading to concerns about the confidentiality of sensitive information. Additionally, algorithmic bias can perpetuate and amplify societal inequalities if training data reflects existing biases.

Deploying ANNs in critical applications, such as medicine or finance, poses challenges in accountability, transparency, and ensuring fair and unbiased decision-making. Striking a balance between technological innovation and ethical responsibility is essential to navigate these challenges and ensure responsible development and deployment.

- Privacy Concerns. Neural activation often involves handling sensitive data. Ensuring robust data protection measures is crucial to prevent unauthorized access and potential misuse.

- Bias and Fairness. Neural networks trained on biased datasets can amplify existing social biases. Ethical considerations involve addressing bias in training data and algorithms to ensure fair and equitable outcomes.

- Transparency and Explainability. Model complexity makes decision-making processes harder to understand. Ethical considerations call for efforts to make models more transparent and interpretable to build trust among users.

- Informed Consent. In applications with personal data, obtaining informed consent from individuals becomes a critical ethical consideration. Users should understand how their data is used, particularly in areas like personalized medicine.

- Accountability and Responsibility. Determining responsibility for the actions of neural networks poses challenges. Ethical considerations involve establishing accountability frameworks and making sure that developers, organizations, and users understand their roles and responsibilities.

- Regulatory Frameworks. Establishing comprehensive legal and ethical frameworks for neural activation technologies is essential. Ethical considerations include advocating for regulations that balance innovation with protection against potential harm.

Implementing Neuron Activation

As research advances, we can expect to see more powerful ANNs that tackle real-world challenges. A deeper understanding of neuron activation will help unlock the full potential of both human and artificial intelligence.

To get started with computer vision and machine learning, check out Viso Suite. Viso Suite is our end-to-end no-code enterprise platform. Book a demo to learn more.