
We have been living in a Golden Age of text-to-image generation for the past few years. Since the initial release of Stable Diffusion to the open source community, the capability of the technology has exploded as it has been integrated into a wider and wider variety of pipelines that take advantage of the innovative computer vision model. From ControlNets to LoRAs to Gaussian Splatting to instant style capture, it is evident that this innovation is only going to continue to grow in scope.

In this article, we are going to look at the exciting new project "Improving Diffusion Models for Authentic Virtual Try-on", or IDM-VTON. This project is one of the latest and greatest Stable Diffusion based pipelines to create a real world utility for the creative model: trying on outfits. With this pipeline, it's now possible to adorn almost any human figure with nearly any piece of clothing imaginable. In the near future, we can expect to see this technology on retail websites everywhere as AI changes how we shop.

Going a bit further, after we introduce the pipeline in broad strokes, we also want to introduce a novel improvement we have made to the pipeline by adding Grounded Segment Anything to the masking pipeline. Follow along to the end of the article for the demo explanation, along with links to run the application in a Paperspace Notebook.

What is IDM-VTON?

At its core, IDM-VTON is a pipeline for virtually clothing a figure in a garment using two images. In the authors' own words, the virtual try-on "renders an image of a person wearing a curated garment, given a pair of images depicting the person and the garment, respectively" (Source).

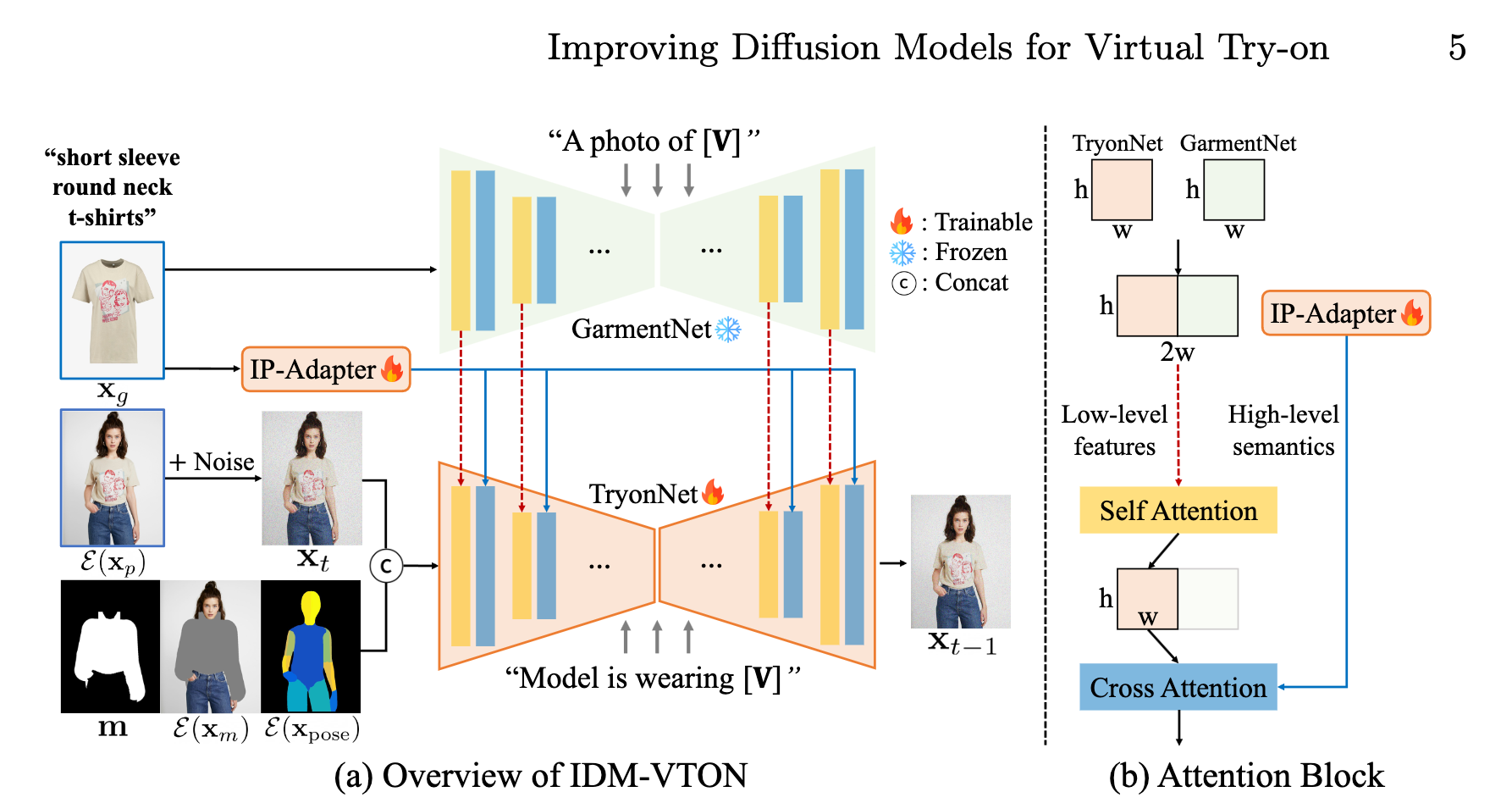

We can see the model architecture in the figure above. It consists of a parallel pipeline of two customized Diffusion UNets, TryonNet and GarmentNet, and an Image Prompt Adapter (IP-Adapter) module. The TryonNet is the main UNet that processes the person image. Meanwhile, the IP-Adapter encodes the high-level semantics of the garment image, to be used later with the TryonNet. At the same time, the GarmentNet encodes the low-level features of the garment image.

As the input for the TryonNet UNet, the model concatenates the noised latents for the human model with a mask extracted from their clothing and a DensePose representation. The TryonNet takes these concatenated latents together with the user-provided, detailed garment caption [V] as its input. In parallel, the GarmentNet, which encodes the garment image, takes the detailed caption as its text input.
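The concatenation described above can be pictured as stacking tensors along the channel axis. The following is a minimal sketch in plain Python rather than the project's actual code: the helper names (`make_tensor`, `concat_channels`), the channel counts, and the 8x6 grid are all assumptions chosen only to make the shapes easy to follow.

```python
from typing import List

Tensor3D = List[List[List[float]]]  # layout: [channels][height][width]


def make_tensor(channels: int, height: int, width: int, fill: float = 0.0) -> Tensor3D:
    """Build a dummy [C][H][W] tensor filled with a constant value."""
    return [[[fill] * width for _ in range(height)] for _ in range(channels)]


def concat_channels(*tensors: Tensor3D) -> Tensor3D:
    """Stack tensors along the channel axis after checking spatial sizes match."""
    height, width = len(tensors[0][0]), len(tensors[0][0][0])
    stacked: Tensor3D = []
    for tensor in tensors:
        for channel in tensor:
            if len(channel) != height or any(len(row) != width for row in channel):
                raise ValueError("all inputs must share the same height and width")
        stacked.extend(tensor)
    return stacked


# Assumed sizes for illustration only: 4-channel latents on an 8x6 grid.
noised_latents = make_tensor(4, 8, 6, fill=0.5)     # noised person latents
clothing_mask = make_tensor(1, 8, 6, fill=1.0)      # single-channel clothing mask
densepose_latents = make_tensor(4, 8, 6, fill=0.1)  # DensePose representation

tryon_input = concat_channels(noised_latents, clothing_mask, densepose_latents)
print(len(tryon_input), len(tryon_input[0]), len(tryon_input[0][0]))  # 9 8 6
```

The spatial size stays the same while the channel count grows, which is why every input has to be brought to the same height and width before it is stacked.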

To achieve the final output, halfway through the diffusion steps in TryonNet, the pipeline concatenates the intermediate features of TryonNet and GarmentNet and passes them to the self-attention layer. The final output is then obtained after fusing these features with those from the text encoder and IP-Adapter in the cross-attention layer.
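To make the self-attention fusion step more concrete, here is a toy sketch in plain Python. It is not the IDM-VTON implementation: it uses a single attention head with identity projections (queries, keys, and values are the tokens themselves), and the function names are hypothetical. Keeping only the TryonNet half of the attended tokens is our reading of how the fused features flow onward, so treat it as an assumption.

```python
import math
from typing import List

Matrix = List[List[float]]  # layout: [tokens][features]


def softmax(scores: List[float]) -> List[float]:
    """Numerically stable softmax over a list of scores."""
    peak = max(scores)
    exps = [math.exp(s - peak) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def self_attention(tokens: Matrix) -> Matrix:
    """Single-head self-attention with identity projections (a simplification)."""
    dim = len(tokens[0])
    scale = 1.0 / math.sqrt(dim)
    output: Matrix = []
    for query in tokens:
        scores = [scale * sum(q * k for q, k in zip(query, key)) for key in tokens]
        weights = softmax(scores)
        output.append(
            [sum(w * value[i] for w, value in zip(weights, tokens)) for i in range(dim)]
        )
    return output


def fuse_garment_features(tryon_tokens: Matrix, garment_tokens: Matrix) -> Matrix:
    """Attend over both feature sets, then return the TryonNet positions only."""
    joint = tryon_tokens + garment_tokens   # concatenate along the token axis
    attended = self_attention(joint)
    return attended[: len(tryon_tokens)]    # assumption: drop the garment half


# Toy features: two person tokens and one garment token, two features each.
tryon_tokens = [[1.0, 0.0], [0.0, 1.0]]
garment_tokens = [[1.0, 1.0]]
fused = fuse_garment_features(tryon_tokens, garment_tokens)
print(fused)
```

Because every person token can attend to the garment tokens, the garment's low-level detail is mixed into the person features without changing how many person tokens come out.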

What does IDM-VTON allow us to do?

In short, IDM-VTON lets us virtually try on clothes. This process is robust and versatile, and is able to apply essentially any upper-torso clothing (shirts, blouses, etc.) to any figure. Thanks to the intricate pipeline we described above, the original pose and general features of the input subject are retained beneath the new clothing. While this process is still quite slow because of the computational requirements of diffusion modeling, it still offers an impressive alternative to physically trying clothes on. We can expect to see this technology proliferate in retail culture as the run cost goes down over time.

Improving IDM-VTON

In this demo, we want to showcase some small improvements we have added to the IDM-VTON Gradio application. Specifically, we have extended the model's capability to clothe the actors beyond the upper body to the entire body, barring shoes and hats.

To make this possible, we have integrated IDM-VTON with the Grounded Segment Anything project. This project uses GroundingDINO with Segment Anything to make it possible to segment, mask, and detect anything in any image using just text prompts.

In practice, Grounded Segment Anything lets us automatically clothe people's lower bodies by extending the coverage of the automatic masking to all clothing on the body. The original masking method used in IDM-VTON only masks the upper body, and is fairly lossy with regard to how closely it matches the outline of the figure. Grounded Segment Anything masking has significantly higher fidelity and follows the body more accurately.

In the demo, we have added Grounded Segment Anything to work with the original masking method. Use the Grounded Segment Anything toggle at the bottom left of the application to turn it on when running the demo.
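One simple way to picture two masking methods working together is a pixel-wise union of their masks. The sketch below is illustrative only: the demo may combine the masks differently, the function names are hypothetical, and the tiny 4x3 masks are made-up toy data.

```python
from typing import List

Mask = List[List[bool]]  # layout: [height][width], True marks pixels to repaint


def union_masks(*masks: Mask) -> Mask:
    """Return a mask that is True wherever any of the input masks is True."""
    height, width = len(masks[0]), len(masks[0][0])
    for mask in masks:
        if len(mask) != height or any(len(row) != width for row in mask):
            raise ValueError("masks must share the same shape")
    return [[any(m[y][x] for m in masks) for x in range(width)] for y in range(height)]


def coverage(mask: Mask) -> float:
    """Fraction of pixels that are marked for repainting."""
    total = sum(len(row) for row in mask)
    return sum(cell for row in mask for cell in row) / total


# Toy masks: rows run from the shoulders (top) to the ankles (bottom).
upper_body_mask = [
    [True, True, True],
    [True, True, True],
    [False, False, False],
    [False, False, False],
]
grounded_sam_mask = [
    [False, False, False],
    [False, True, False],
    [True, True, True],
    [True, False, True],
]

combined = union_masks(upper_body_mask, grounded_sam_mask)
print(coverage(upper_body_mask), coverage(combined))
```

The union keeps everything the original method already covered and adds the lower-body regions found from the text prompt, which matches the idea of extending coverage rather than replacing the original mask.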

IDM-VTON Demo


To run the IDM-VTON Demo with our Grounded Segment Anything updates, all we need to do is click the link here or use the Run on Paperspace buttons above or at the top of the article. Once you have clicked the link, start the machine to begin the demo. It defaults to an A100-80G GPU, but you can manually change the machine code to any of the other available GPU or CPU machines.

Setup

Once your machine is spun up, we can begin setting up the environment. First, copy and paste each line individually from the following cell into your terminal. This is necessary to set the environment variables.

export AM_I_DOCKER=False

export BUILD_WITH_CUDA=True

export CUDA_HOME=/usr/local/cuda-11.6/

Afterwards, we can copy the entire following code block and paste it into the terminal. This will install all the libraries the application needs to run, and download some of the necessary checkpoints.

## Install packages

pip uninstall -y jax jaxlib tensorflow

git clone https://github.com/IDEA-Research/Grounded-Segment-Anything

cp -r Grounded-Segment-Anything/segment_anything ./

cp -r Grounded-Segment-Anything/GroundingDINO ./

python -m pip install -e segment_anything

pip install --no-build-isolation -e GroundingDINO

pip install -r requirements.txt

## Get models

wget https://huggingface.co/spaces/abhishek/StableSAM/resolve/main/sam_vit_h_4b8939.pth

wget -qq -O ckpt/densepose/model_final_162be9.pkl https://huggingface.co/spaces/yisol/IDM-VTON/resolve/main/ckpt/densepose/model_final_162be9.pkl

wget -qq -O ckpt/humanparsing/parsing_atr.onnx https://huggingface.co/spaces/yisol/IDM-VTON/resolve/main/ckpt/humanparsing/parsing_atr.onnx

wget -qq -O ckpt/humanparsing/parsing_lip.onnx https://huggingface.co/spaces/yisol/IDM-VTON/resolve/main/ckpt/humanparsing/parsing_lip.onnx

wget -O ckpt/openpose/ckpts/body_pose_model.pth https://huggingface.co/spaces/yisol/IDM-VTON/resolve/main/ckpt/openpose/ckpts/body_pose_model.pth

Once these have finished running, we can begin running the application.
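Before launching, it can be worth confirming that the downloads actually landed, since a failed `wget` can leave a missing or empty file behind. The following is an optional sketch, not part of the original notebook: the function name `missing_checkpoints` is hypothetical, and the file list simply mirrors the output paths in the download commands above, assuming they were run from the project's working directory.

```python
from pathlib import Path
from typing import Iterable, List

# Paths taken from the download commands, relative to the working directory.
EXPECTED_CHECKPOINTS = [
    "sam_vit_h_4b8939.pth",
    "ckpt/densepose/model_final_162be9.pkl",
    "ckpt/humanparsing/parsing_atr.onnx",
    "ckpt/humanparsing/parsing_lip.onnx",
    "ckpt/openpose/ckpts/body_pose_model.pth",
]


def missing_checkpoints(root: Path, relative_paths: Iterable[str]) -> List[str]:
    """Return the relative paths that are absent or empty under root."""
    missing = []
    for rel in relative_paths:
        candidate = root / rel
        if not candidate.is_file() or candidate.stat().st_size == 0:
            missing.append(rel)
    return missing


if __name__ == "__main__":
    problems = missing_checkpoints(Path.cwd(), EXPECTED_CHECKPOINTS)
    if problems:
        print("Missing or empty checkpoint files:")
        for rel in problems:
            print(f"  {rel}")
    else:
        print("All expected checkpoint files are present.")
```

If anything is reported as missing, re-running the matching `wget` line is usually enough.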

IDM-VTON Application demo

The demo can be run using the following call in either a code cell or the same terminal we have been using. The code cell in the notebook is filled in for us already, so we can run it to continue.

!python app.py

Click the shared Gradio link to open the application in a web page. From here, we can upload our garment and human figure images to the page to run IDM-VTON! One thing to note is that we have changed the default settings a bit from the original release, notably lowering the inference steps and adding options for Grounded Segment Anything and for looking for additional locations on the body to draw on. Grounded Segment Anything extends the capability of the model to the entire body of the subject, and allows us to dress them in a wider variety of clothing. Here is an example we made using the sample images provided by the original demo and, in an effort to find an absurd outfit choice, a clown costume:

Be sure to try it out on a wide variety of poses and body types! It's incredibly versatile.

Closing thoughts

The tremendous potential of IDM-VTON is immediately apparent. The days when we can virtually try on any outfit before purchase are rapidly approaching, and this technology represents a notable step towards that development. We look forward to seeing more work done on similar projects going forward!