The European Union Artificial Intelligence Act (EU AI Act) is the first comprehensive legal framework to regulate the design, development, deployment, and use of AI systems within the European Union. The primary goals of this legislation are to:

- Ensure the safe and ethical use of AI

- Protect fundamental rights

- Foster innovation by setting clear rules, most importantly for high-risk AI applications

The AI Act brings structure to the legal landscape for companies that rely directly or indirectly on AI-driven solutions. It is a comprehensive approach to AI regulation whose impact will reach businesses and developers far beyond the European Union’s borders.

In this article, we take a deep look at the EU AI Act: its guidelines, what may be expected of companies, and the broader implications the Act will have on the business ecosystem.

About us: Viso Suite provides an all-in-one platform for companies to perform computer vision tasks in a business setting. From people tracking to inventory management, Viso Suite helps solve challenges across industries. To learn more about Viso Suite’s enterprise capabilities, book a demo with our team of experts.

What Is the EU AI Act? A High-Level Overview

The European Commission published a regulatory proposal in April 2021 to create a uniform legislative framework for the regulation of AI applications among its member states. After more than three years of negotiation, the regulation was published on 12 July 2024 and entered into force on 1 August 2024.

The following is a four-point summary of the Act:

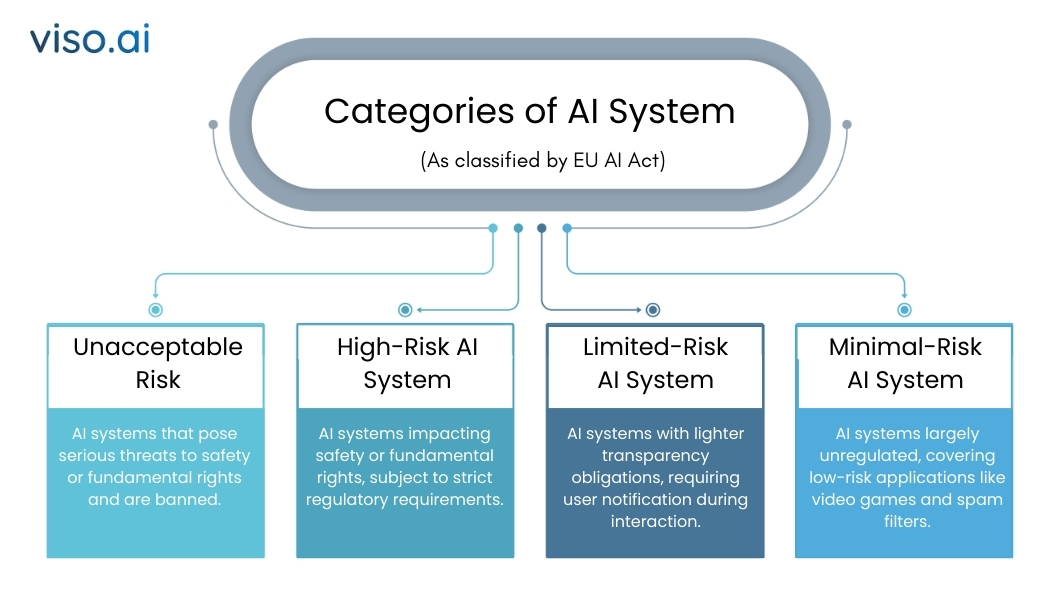

Risk-Based Classification of AI Systems

The risk-based approach classifies AI systems into one of four risk categories:

Unacceptable Risk:

AI systems that pose a grave danger to safety and fundamental rights. This includes any system that uses social scoring or manipulative AI practices.

High-Risk AI Systems:

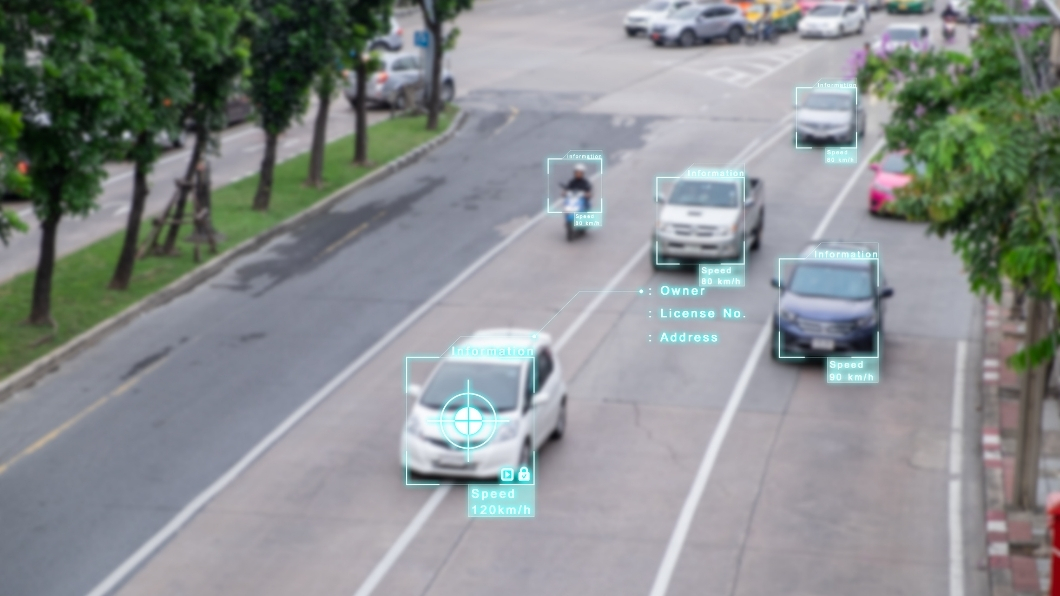

This covers AI systems with a direct impact on safety or fundamental rights. Examples include systems in the healthcare, law enforcement, and transportation sectors, along with other critical areas. These systems will be subject to the most rigorous regulatory requirements, which may include conformity assessments, mandatory human oversight, and the adoption of robust risk management systems.

Limited Risk:

Limited-risk systems face lighter transparency requirements; nevertheless, developers and deployers must make sure end users are informed that they are interacting with AI, for instance in the case of chatbots and deepfakes.

Minimal-Risk AI Systems:

Most of these systems, such as AI in video games or spam filters, are currently unregulated. However, as generative AI matures, changes to the regulatory regime for such systems are not precluded.
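The four tiers can be pictured as a simple lookup. The following is a minimal sketch, not a legal classification tool: the names `RiskTier`, `EXAMPLES`, `OBLIGATIONS`, and `triage` are hypothetical, and the mapping only restates the example use cases named in this article.

```python
from enum import Enum
from typing import Optional


class RiskTier(Enum):
    UNACCEPTABLE = "unacceptable"
    HIGH = "high"
    LIMITED = "limited"
    MINIMAL = "minimal"


# Example use cases named in this article, mapped to the tier it assigns them.
# This is illustrative only; a real assessment needs legal review.
EXAMPLES = {
    "social scoring": RiskTier.UNACCEPTABLE,
    "healthcare": RiskTier.HIGH,
    "law enforcement": RiskTier.HIGH,
    "transportation": RiskTier.HIGH,
    "chatbot": RiskTier.LIMITED,
    "deepfake": RiskTier.LIMITED,
    "video game": RiskTier.MINIMAL,
    "spam filter": RiskTier.MINIMAL,
}

# Short summary of what the article says each tier entails.
OBLIGATIONS = {
    RiskTier.UNACCEPTABLE: "prohibited",
    RiskTier.HIGH: "conformity assessment, human oversight, risk management",
    RiskTier.LIMITED: "disclose AI use to end users",
    RiskTier.MINIMAL: "largely unregulated",
}


def triage(use_case: str) -> Optional[RiskTier]:
    """Return the tier for a known example use case, or None if unknown."""
    return EXAMPLES.get(use_case.strip().lower())
```

For instance, `triage("Chatbot")` returns `RiskTier.LIMITED`, and an unlisted use case returns `None`, which is a prompt to seek a proper assessment rather than assume minimal risk.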

Obligations on Providers of High-Risk AI:

Most of the compliance burden falls on developers (providers). Whether they are based inside or outside the EU, these obligations apply to any developer that markets or operates high-risk AI models within or into the European Union.

Compliance with these regulations also extends to high-risk AI systems supplied from third countries whose output is used within the Union.

User Responsibilities (Deployers):

Users are any natural or legal persons deploying an AI system in a professional context. Deployers have less stringent obligations than developers. They must, however, meet those obligations when deploying high-risk AI systems in the Union or when the output of their system is used in the Union.

These obligations apply to users based both in the EU and in third countries.

General-Purpose AI (GPAI):

Developers of general-purpose AI models must provide technical documentation and instructions for use, and must comply with copyright law. Their AI model should not create a systemic risk.

Providers of free and open-license GPAI models need only comply with the copyright and training-data publication requirements, unless their AI model creates a systemic risk.

Whether licensed or not, GPAI models that present systemic risks must undergo the same model evaluation, adversarial testing, incident tracking and monitoring, and cybersecurity practices.

What Can Be Expected From Companies?

Organizations using or developing AI technologies should expect significant changes in compliance, transparency, and operational oversight. They can prepare for the following:

High-Risk AI Control Requirements:

Companies deploying high-risk AI systems will be responsible for strict documentation, testing, and reporting. They will be expected to adopt ongoing risk assessment, quality management systems, and human oversight. This will, in turn, require proper documentation of the system’s functionality, safety, and compliance. Non-compliance could attract heavy fines, similar to those imposed under the GDPR.

Transparency Requirements:

Companies must clearly tell users when they are dealing with an AI system, including in the case of limited-risk AI. This strengthens user autonomy and compliance with the EU’s principles of transparency and fairness. The rule also covers content such as deepfakes: companies must disclose when something is AI-generated or AI-modified.

Data Governance and AI Training Data:

AI systems must be trained, validated, and tested with diverse, representative, and unbiased datasets. This requires businesses to examine their data sources more carefully and move toward far more rigorous data governance so that AI models yield non-discriminatory outcomes.

Impact on Product Development and Innovation:

The Act subjects AI developers to more extensive testing and validation procedures, which may slow the pace of development. Companies that incorporate compliance measures early in the lifecycle of their AI products will have key differentiators in the long run. Strict regulation may curtail the pace of AI innovation at first, but businesses able to adapt quickly to such standards will find themselves well-positioned to expand confidently into the EU market.

Guidelines to Know About

Companies have to adhere to the following key guidelines to comply with the EU Artificial Intelligence Act:

Timeline for Enforcement

The EU AI Act sets out a phased enforcement schedule to give organizations time to adapt to the new requirements.

- 1 August 2024: The Act officially enters into force.

- 2 February 2025: AI systems falling under the “unacceptable risk” category will be banned.

- 2 May 2025: Codes of conduct apply. These codes give AI developers guidance on best practices for complying with the Act and aligning their operations with EU principles.

- 2 August 2025: Governance rules and obligations for general-purpose AI (GPAI) come into force. GPAI systems, including large language models and generative AI, face specific requirements on transparency and safety. At this stage, those requirements are not yet enforced; providers are instead given time to prepare.

- 2 August 2026: Full implementation of GPAI commitments begins.

- 2 August 2027: Requirements for high-risk AI systems will fully apply, giving companies more time to align with the most demanding parts of the regulation.
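The schedule above lends itself to a small date lookup. The following is a minimal sketch, assuming the milestone dates listed in this article, with entry into force taken as 1 August 2024 as stated in the overview; `MILESTONES`, `milestones_in_effect`, and `next_milestone` are hypothetical names.

```python
from datetime import date
from typing import List, Optional, Tuple

# Milestones as summarized in this article (labels are paraphrased).
MILESTONES: List[Tuple[date, str]] = [
    (date(2024, 8, 1), "Act enters into force"),
    (date(2025, 2, 2), "Unacceptable-risk AI systems banned"),
    (date(2025, 5, 2), "Codes of conduct apply"),
    (date(2025, 8, 2), "Governance rules and GPAI obligations in force"),
    (date(2026, 8, 2), "Full implementation of GPAI commitments"),
    (date(2027, 8, 2), "High-risk AI requirements fully apply"),
]


def milestones_in_effect(on: date) -> List[str]:
    """Return the labels of all milestones that have started by `on`."""
    return [label for start, label in MILESTONES if start <= on]


def next_milestone(on: date) -> Optional[Tuple[date, str]]:
    """Return the first milestone strictly after `on`, or None if all passed."""
    for start, label in MILESTONES:
        if start > on:
            return (start, label)
    return None
```

A compliance team could call `next_milestone(date.today())` to see which deadline is coming up next.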

Risk Management Systems

Providers of high-risk AI must establish a risk management system that provides for constant monitoring of AI performance, periodic compliance assessments, and fallback plans in case an AI system operates incorrectly or malfunctions.
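One way to picture monitoring combined with a fallback plan is a wrapper that tracks recent failures and routes requests to a safe alternative once too many occur. This is only an illustrative sketch under our own assumptions; the Act does not prescribe this design, and `MonitoredModel`, its thresholds, and its method names are hypothetical.

```python
from collections import deque
from typing import Any, Callable


class MonitoredModel:
    """Wraps a model, records recent failures, and falls back when they pile up."""

    def __init__(
        self,
        model: Callable[[Any], Any],
        fallback: Callable[[Any], Any],
        window: int = 100,
        max_error_rate: float = 0.2,
        min_samples: int = 10,
    ) -> None:
        self.model = model
        self.fallback = fallback
        self.outcomes = deque(maxlen=window)  # True means the call failed
        self.max_error_rate = max_error_rate
        self.min_samples = min_samples

    def tripped(self) -> bool:
        """True once enough samples exist and the failure rate is too high."""
        n = len(self.outcomes)
        return n >= self.min_samples and sum(self.outcomes) / n > self.max_error_rate

    def reset(self) -> None:
        """Clear the history, for example after a human has investigated."""
        self.outcomes.clear()

    def predict(self, x: Any) -> Any:
        if self.tripped():
            # Stay on the fallback until someone calls reset().
            return self.fallback(x)
        try:
            result = self.model(x)
        except Exception:
            self.outcomes.append(True)
            return self.fallback(x)
        self.outcomes.append(False)
        return result
```

The wrapper deliberately stays on the fallback until `reset()` is called, so that a person has to look at the malfunction before the model is trusted again.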

Post-Market Surveillance

Companies will be required to maintain post-market monitoring programs for as long as the AI system is in use. This is to ensure ongoing compliance with the requirements outlined in their applications. It would include activities such as soliciting feedback, analyzing operational data, and routine auditing.

Human Oversight

The Act requires high-risk AI systems to provide for human oversight. That is, humans need to be able to intervene in or override AI decisions where necessary. In healthcare, for instance, an AI diagnosis or treatment recommendation has to be checked by a healthcare professional before it is applied.
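In software terms, this kind of oversight is often implemented as a review gate: the AI output cannot be acted on until a person has approved or overridden it. The sketch below is one hypothetical way to express that idea; `Recommendation`, `review`, and `apply_decision` are illustrative names, not part of any regulation or library.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class Recommendation:
    case_id: str
    ai_suggestion: str
    reviewer: Optional[str] = None  # who signed off, if anyone
    final: Optional[str] = None     # decision after human review


def review(
    rec: Recommendation, reviewer: str, override: Optional[str] = None
) -> Recommendation:
    """Record a human review; the reviewer may replace the AI suggestion."""
    rec.reviewer = reviewer
    rec.final = override if override is not None else rec.ai_suggestion
    return rec


def apply_decision(rec: Recommendation) -> str:
    """Return the decision to act on, refusing anything not yet reviewed."""
    if rec.reviewer is None:
        raise PermissionError(f"{rec.case_id}: human review required before use")
    return rec.final
```

Calling `apply_decision` on an unreviewed recommendation raises an error, which makes skipping the human check a visible failure rather than a silent default.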

Registration of High-Risk AI Systems

High-risk AI systems must be registered in the EU database, which gives authorities and the public access to relevant information about the deployment and operation of that AI system.

Third-Party Assessment

Third-party assessments of some AI systems may be needed before deployment, depending on the risk involved. Audits, certification, and other forms of evaluation would confirm their conformity with EU regulations.

Impact on the Business Landscape

The introduction of the EU AI Act is expected to have far-reaching effects on the business landscape.

Leveling the Playing Field

The Act will level the playing field by imposing the same safety and transparency rules on companies of all sizes. This could also be a significant advantage for smaller AI-driven businesses.

Building Trust in AI

The new EU AI Act is likely to build consumer confidence in AI technologies by embedding the values of transparency and safety in its provisions. Companies that follow these regulations can use this trust as a differentiator, marketing their services as ethical and responsible AI providers.

Potential Compliance Costs

For some businesses, especially smaller ones, the cost of compliance may be prohibitive. Conforming to the new regulatory environment may well require heavy investment in compliance infrastructure, data governance, and human oversight. Fines for non-conformity could go as high as 7% of global revenue, a financial risk companies cannot afford to overlook.

Increased Accountability in Cases of AI Failure

Businesses will be held more accountable when an AI system fails or is misused in a way that harms people or a community. Companies may also face greater legal liability if they do not test and monitor AI applications appropriately.

Geopolitical Implications

The EU AI Act could ultimately set a leading global example for regulating AI. Non-EU companies operating in the EU market are subject to the same rules, which fosters international cooperation and alignment on AI standards. It may also prompt other jurisdictions, such as the United States, to take similar regulatory steps.

Frequently Asked Questions

Q1. According to the EU AI Act, which are the high-risk AI systems?

A: High-risk AI systems are applications in fields that directly affect an individual citizen’s safety, rights, and freedoms. This includes AI in critical infrastructure, such as transport; in healthcare, such as diagnosis; in law enforcement, such as biometrics; in employment processes; and in education. These systems are subject to strong compliance requirements, such as risk assessment, transparency, and continuous monitoring.

Q2. Does every business developing AI have to follow the EU AI Act?

A: Not all AI systems are regulated uniformly. The Act classifies AI systems into categories according to their potential for risk: unacceptable, high, limited, and minimal risk. The legislation imposes high levels of compliance only on high-risk AI systems and basic levels of transparency on limited-risk systems, while minimal-risk AI systems, which include trivial applications such as video games and spam filters, remain largely unregulated.

Businesses developing high-risk AI must comply if their AI is deployed in the EU market, whether they are based inside or outside the EU.

Q3. How does the EU AI Act affect companies outside the EU?

A: The EU AI Act applies to companies established outside the Union when their AI systems are deployed or used within the Union. For instance, if an AI system developed in a third country produces outputs used within the Union, it must comply with the requirements of the Act. In this way, all AI systems affecting EU citizens must meet the same regulatory bar, no matter where they are built.

Q4. What are the penalties for non-compliance with the EU AI Act?

A: The EU AI Act punishes non-compliance with significant fines. For severe infringements, such as the use of prohibited AI systems and non-compliance with obligations for high-risk AI, fines of up to 7% of the company’s total worldwide annual turnover or €35 million apply.
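The ceiling in the answer above is simple arithmetic. The following is a minimal sketch, assuming the higher of the two amounts is the cap, which is our reading of the Act for its most severe tier and not something the answer itself spells out. `max_fine_eur` and the constant names are hypothetical.

```python
FIXED_CAP_EUR = 35_000_000  # fixed ceiling quoted above
TURNOVER_PERCENT = 7        # percentage of worldwide annual turnover quoted above


def max_fine_eur(worldwide_annual_turnover_eur: int) -> int:
    """Upper bound of the fine, assuming the higher of the two caps applies."""
    if worldwide_annual_turnover_eur < 0:
        raise ValueError("turnover cannot be negative")
    # Integer arithmetic avoids floating-point rounding on large amounts.
    turnover_cap = worldwide_annual_turnover_eur * TURNOVER_PERCENT // 100
    return max(FIXED_CAP_EUR, turnover_cap)
```

Under this assumption, a company with €1 billion in turnover faces a ceiling of €70 million, while one with €100 million in turnover faces the fixed €35 million ceiling, because 7% of its turnover is only €7 million.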
