by Rick Korzekwa, 2 February, 2022

In this post I outline apparent regularities in how major new technological capabilities and methods come about. I have not rigorously checked to see how broadly they hold, but it seems likely to me that they generalize enough to be useful. For each pattern, I give examples, then some relatively speculative explanations for why they happen. I finish the post with an outline of how AI progress will look if it sticks to these patterns. The patterns are:

- The first version of a new technology is almost always of very low practical value

- After the first version comes out, things improve very quickly

- Many big, famous, impressive, and important advances are preceded by lesser-known, but still notable advances. These lesser-known advances often follow a long period of stagnation

- Major advances tend to emerge while relevant experts are still in broad disagreement about which things are likely to work

While investigating major technological advancements, I have noticed patterns among the technologies that have been brought to our attention at AI Impacts. I have not rigorously investigated these patterns to see if they generalize well, but I think it is worthwhile to write about them for a few reasons. First, I hope that others can share examples, counterexamples, or intuitions that will help make it clearer whether these patterns are “real” and whether they generalize. Second, supposing they do generalize, these patterns might help us develop a view of the default way in which major technological advancements happen. AI is unlikely to conform to this default, but it should help inform our priors and serve as a reference for how AI differs from other technologies. Finally, I think there is value in discussing and creating common knowledge around which aspects of past technological progress are most informative and decision-relevant to mitigating AI risk.

What I mean by ‘major technological advancements’

Throughout this post I am referring to a somewhat narrow class of advances in technological progress. I won’t try to rigorously define the boundaries for classification, but the kinds of technologies I’m writing about are associated with important new capabilities or clear turning points in how civilization solves problems. This includes, among many others, flight, the telegraph, nuclear weapons, the laser, penicillin, and the transistor. It does not include achievements that are not primarily driven by improvements in our technological capabilities. For example, many of the structure height or ship size advances included in our discontinuities investigation seem to have occurred mainly because someone had the means and desire to build a large ship or structure and the materials and methods were available, not because of a substantial change in known methods or available materials. It also does not include technologies granting impressive new capabilities that have not yet been useful outside of scientific research, such as gravitational wave interferometers.

I’ve tried to be consistent with terminology, but I’ve probably been a bit sloppy in places. I use ‘advancement’ to mean any discrete event in which a new capability is demonstrated or a device is created that demonstrates a new principle of operation. In some places, I use ‘achievement’ to mean things like flying across the ocean for the first time, and ‘new method’ to mean things like using nuclear reactions to release energy instead of chemical reactions.

Things to keep in mind while reading this:

- The primary purpose of this post is to present claims, not to justify them. I give examples and reasons why we might expect the pattern to exist, but my goal is not to make the strongest case that the patterns are real.

- I make these claims based on my overall impression of how things go, having spent part of the past few years doing research related to technological progress. I’ve done some searching for counterexamples, but I’ve not made a serious effort to analyze them statistically.

- The proposed explanations for why the patterns exist (if they do indeed exist) are largely speculative, though they are partially based on well-accepted phenomena like experience curves.

- There are some obvious sources of bias in the sample of technologies I’ve looked at. They’re mostly things that started out as candidates for discontinuities, which were crowdsourced and then examined in a way that strongly favored giving attention to advancements with clear metrics for progress.

- I’m not sure how to precisely state my credence on these claims, but I estimate roughly 50% that at least one is mostly wrong. My best guess for how I will update on new information is by narrowing the relevant reference class of advancements.

- Although I did notice these patterns at least somewhat on my own, I don’t claim these are original to me. I’ve seen related things in a variety of places, particularly the book Patterns of Technological Innovation by Devendra Sahal.

The first version of a new device or capability is almost always terrible

Most of the time when a major milestone is crossed, it is done in a minimal way that is so bad it has little or no practical value. Examples include:

- The first ship to cross the Atlantic using steam power was slower than a fast ship with sails.

- The first transatlantic telegraph cable took an entire day to send a message the length of a couple of Tweets, would stop working for long periods of time, and failed completely after three weeks.

- The first flight across the Atlantic took the shortest reasonable path and crash-landed in Ireland.

- The first apparatus for purifying penicillin did not produce enough to save the first patient.

- The first laser did not produce a bright enough spot to photograph for publication.

Although I can’t say I predicted this would be the case before learning about it, in retrospect I don’t think it’s surprising. As we work toward an invention or achievement, we improve things along a limited number of axes with each design modification, oftentimes at the expense of other axes. In the case of improving an existing technology until it is fit for some new task, this means optimizing heavily on whichever axes are most important for accomplishing that task. For example, to modify a World War I bomber to cross the ocean, you need to optimize heavily for range, even if it requires giving up other features of practical value, like the ability to carry ordnance. Increasing range without making such tradeoffs makes the problem much harder. Not only does this make it less likely you will beat competitors to the achievement, it means you need to do design work in a way that is less iterative and requires more inference.

This also applies to building a device that demonstrates a new principle of operation, like the laser or transistor. The easiest path to making the device work at all will, by default, ignore many practical considerations that are not strictly necessary. Skipping straight past the dinky minimal version to the practical version is difficult, not only because designing something is harder with more constraints, but because we can learn a lot from the dinky minimal version on our way to the more practical version. This appears related to why Theodore Maiman won the race to build a laser. His competitors were pursuing designs that had clearer practical value but were substantially more difficult to get right.

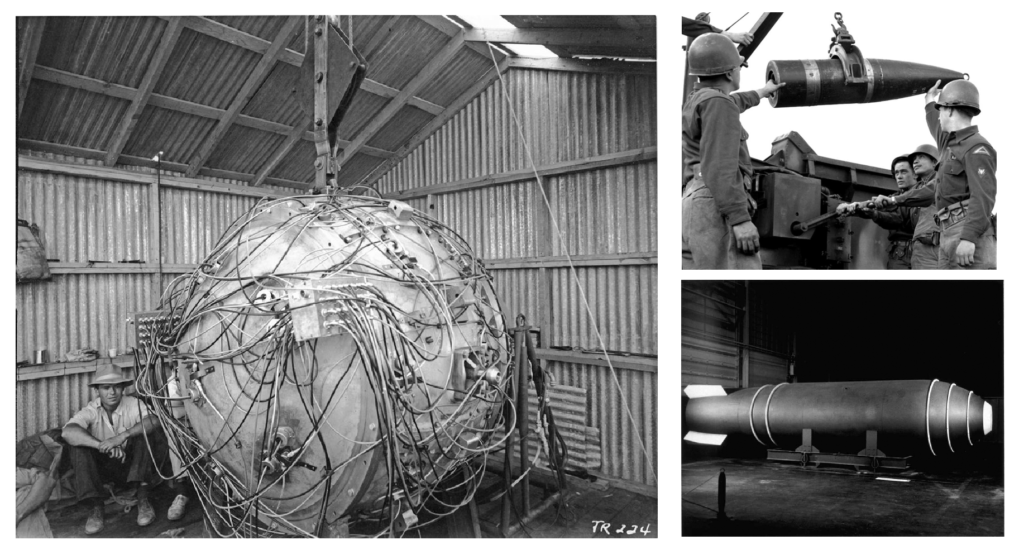

The clearest example I’m aware of for a technology that does not fit this pattern is the first nuclear weapon. The need to create a weapon that was useful in the real world quickly and in secret using extremely scarce materials drove the scientists and engineers of the Manhattan Project to solve many practical problems before building and testing a device that demonstrated the basic principles. Absent these constraints, it would likely have been easier to build at least one test device along the way, which would most likely have been useless as a weapon.

Progress often happens more quickly following a major advancement

New technologies may start out terrible, but they tend to improve quickly. This is not just a matter of applying a constant rate of fractional improvement per year to an increase in performance: the doubling time for performance itself decreases. Examples:

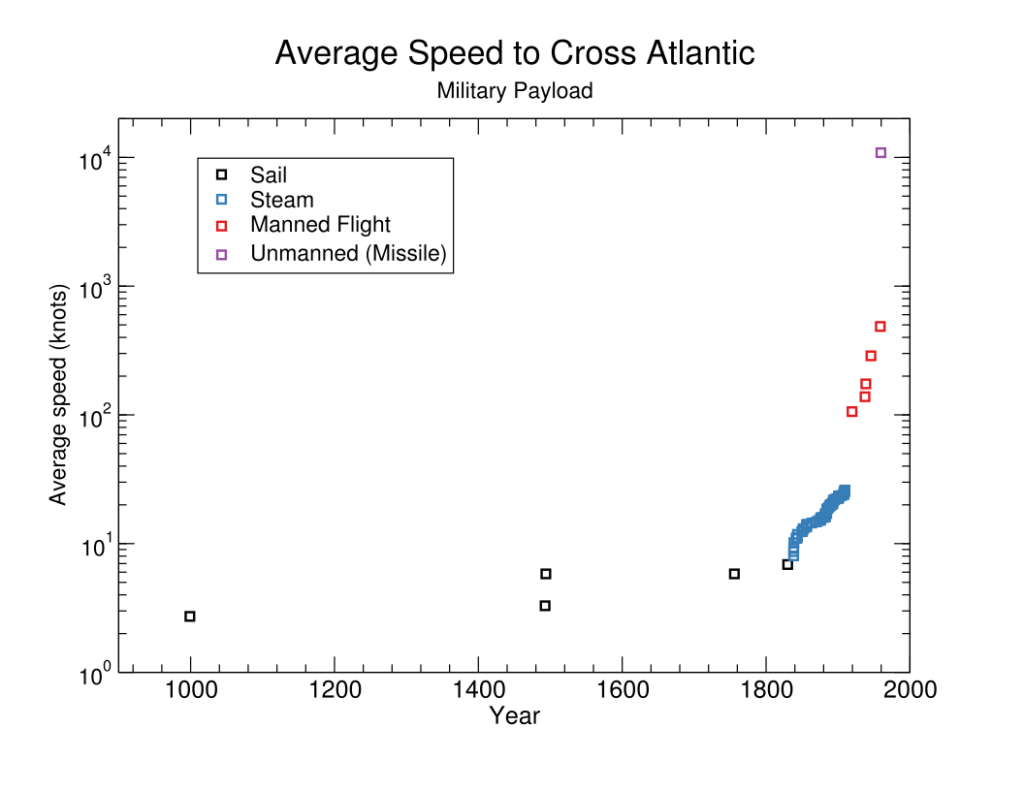

- Average speed to cross the Atlantic in wind-powered ships doubled over roughly 300 years, and then crossing speed for steam-powered ships doubled in about 50 years, and crossing speed for aircraft doubled twice in less than 40 years.

- Telecommunications performance, measured as the product of bandwidth and distance, doubled every 6 years before the introduction of fiber optics and doubled every 2 years after.

- The energy released per mass of conventional explosive increased by 20% during the 100 years leading up to the first nuclear weapon in 1945, and by 1960 there were devices with 1000x the energy density of the first nuclear weapon.

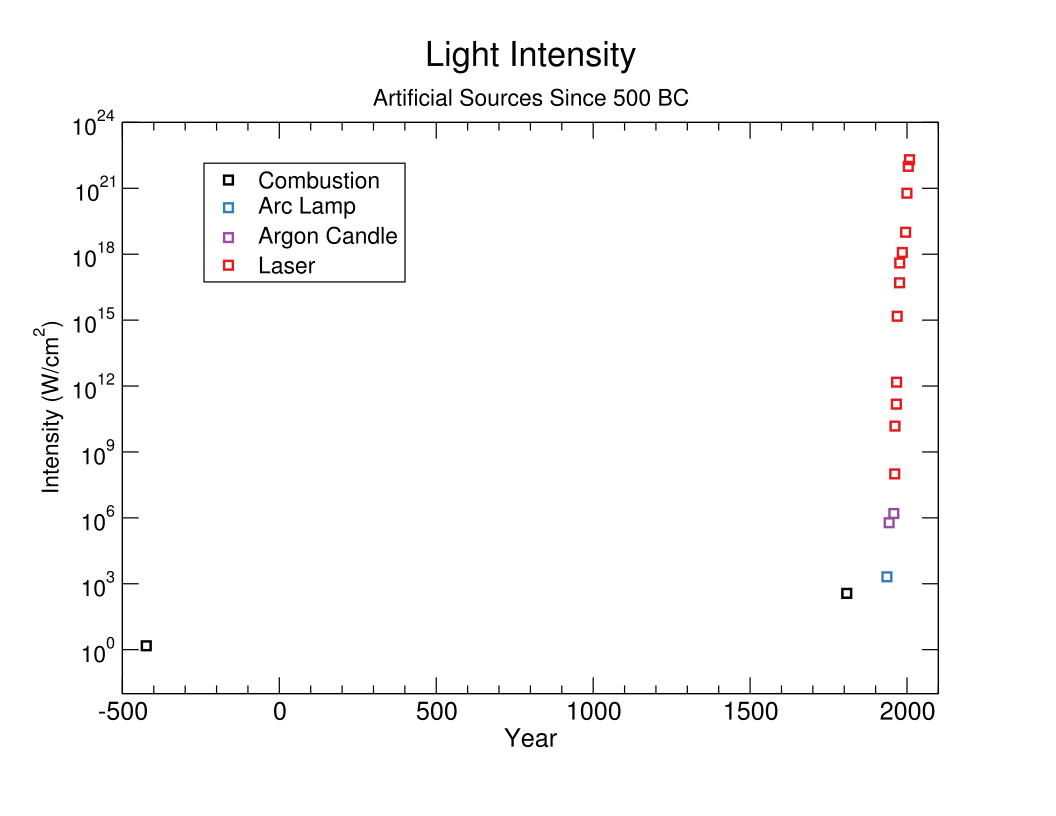

- The highest intensity available from artificial light sources had an average doubling time of about 10 years from 1800 to 1945. Following the invention of the laser in 1960, the doubling time averaged just six months for over 15 years. Lasers also found practical applications very quickly, with successful laser eye surgery within 18 months and laser rangefinders a few years after that.
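To make the comparison of doubling times concrete, here is a minimal sketch that converts the explosives figures quoted above into doubling times. It assumes steady exponential growth within each interval and treats the 1000x improvement as spanning exactly 1945 to 1960; both are simplifying assumptions of this sketch rather than claims from the underlying data, and the function name `doubling_time` is purely illustrative.

```python
import math


def doubling_time(growth_factor, years):
    """Years per doubling, assuming steady exponential growth over the interval."""
    return years * math.log(2) / math.log(growth_factor)


# Figures quoted in the list above; the 15-year span is an assumption.
conventional = doubling_time(1.2, 100)       # +20% over 100 years
nuclear = doubling_time(1000, 1960 - 1945)   # 1000x between 1945 and 1960

print(f"conventional explosives: ~{conventional:.0f} years per doubling")
print(f"nuclear weapons: ~{nuclear:.1f} years per doubling")
```

Under these assumptions the doubling time falls from several centuries to under two years, which is the sense in which the rate of progress, and not only the level of performance, changes after the advancement.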

This pattern is less robust than the others. An obvious counterexample is Moore’s Law and related trajectories for progress in computer hardware, which remain remarkably smooth across major changes in underlying technology. Still, even if major technological advances do not always accelerate progress, they seem to be one of the major causes of accelerated progress.

Short-term increases in the rate of progress may be explained by the wealth of potential improvements to a new technology. The first version will almost always neglect numerous improvements that are easy to find. Additionally, once the technology is out in the world, it becomes much easier to learn how to improve it. This can be seen in experience curves for manufacturing, which typically show large improvements per unit produced in manufacturing time and cost when a new technology is introduced.

I’m not sure if this can explain long-running increases in the rate of progress, such as those for telecommunications and transatlantic travel by steamship. Maybe these technologies benefited from a large space of design improvements and ample, sustained growth in adoption to push down the experience curve quickly. It may be related to external factors, like the advent of steam power increasing general economic and technological progress enough to maintain the strong trend in advancements for crossing the ocean.

Major advancements are usually preceded by rapid or accelerating progress

Major new technological advances are often preceded by lesser-known, but substantial advancements. While we were investigating candidate technologies for the discontinuities project, I was often surprised to find that this preceding progress was rapid enough to eliminate or drastically reduce the calculated size of the candidate discontinuity. For example:

- The invention of the laser in 1960 was preceded by other sources of intense light, including the argon flash in the early 1940s and various electrical discharge lamps by the 1930s. Progress was fairly slow from ~1800 to the 1930s, and more-or-less nonexistent for centuries before that.

- Penicillin was discovered during a period of major improvements in public health and treatments for bacterial infections, including a drug that was such an improvement over existing treatments for infection it was called the “magic bullet”.

- The Haber Process for fixing nitrogen, often given substantial credit for improvements in farming output that enabled the huge population growth of the past century, was invented in 1920. It was preceded by the invention of two other processes, in 1910 and 1912, the latter of which was more than a factor of two improvement over the former.

- The telegraph and flight both crossed the Atlantic for the first time during a roughly 100 year period of rapid progress in steamships, during which crossing times decreased by a factor of four.

Progress in intense light sources since 1800: light intensity for artificial sources exploded in the mid-1900s. Most progress is from improvements in lasers, but the Manhattan Project led to the development of explosively-driven flashes 15-20 years prior.

This pattern seems less robust than the previous one and it is, to me, less striking. The accelerated progress preceding a major advancement varies widely in terms of overall impressiveness, and there is not a clear cutoff for what should qualify as fitting the pattern. Still, when I tried to come up with a clear example of something that fails to match the pattern, I had some difficulty. Even nuclear weapons were preceded by at least one notable advance in conventional explosives a few years earlier, following what seems to have been decades of relatively slow progress.

An obvious and perhaps boring explanation for this is that progress on many metrics was accelerating during the roughly 200-300 year period when most of these took place. Technological progress exploded between 1700 and 2000, in a way that seems to have been accelerating pretty rapidly until around 1950. Every point on an aggressively accelerating performance trend follows a period of unprecedented progress. It is plausible to me that this fully explains the pattern, but I’m not entirely convinced.

An additional contributor may be that the drivers for research and innovation that result in major advances tend to cause other advancements along the way. For example, wars increase interest in explosives, so maybe it’s not surprising that nuclear weapons were developed around the same time as some advances in conventional explosives. Another potential driver for such innovation is a disruption to incremental progress via existing methods that requires exploration of a broader solution space. For example, the invention of the argon flash would not have been necessary if it had been possible to improve the light output of arc lamps.

Major advancements are produced by uncertain scientific communities

Nearly every time I look into the details of the work that went into producing a major technological advancement, I find that the relevant experts had substantial disagreements about which methods were likely to work, right up until the advancement took place, sometimes about fairly basic things. For example:

- The team responsible for designing and operating the first transatlantic telegraph cable had disagreements about basic properties of signal propagation on very long cables, which eventually led to the cable’s failure.

- The scientists of the Manhattan Project had widely varying estimates for the weapon’s yield, including scientists who expected the device to fail entirely.

- Widespread skepticism, misunderstanding, and disagreement about penicillin’s chemical and therapeutic properties were largely responsible for a ten year delay in its development.

- Few researchers involved in the race to build a functioning laser expected the design that ultimately prevailed to be viable.

The default explanation for this seems pretty clear to me: empirical work is an important part of clearing up scientific uncertainty, so we should expect successful demonstrations of novel, impactful technologies to eliminate a lot of uncertainty. Still, it did not have to be the case that the eliminated uncertainty is substantial and related to basic facts about the functioning of the technology. For example, the teams competing for the first flight across the Atlantic did not seem to have anything major to disagree about, though they may have made different predictions about the success of various designs.

There is a distinction to be made here, between high levels of uncertainty on the one hand, and low levels of understanding on the other. The communities of researchers and engineers involved in these projects may have collectively assigned substantial credence to various mistaken ideas about how things work, but my impression is that they did at least agree on the basic outlines of the problems they were trying to solve. They mostly knew what they didn’t know. For example, most of the disagreement about penicillin was about the details of things like its solubility, stability, and therapeutic efficacy. There wasn’t a disagreement about, for example, whether the penicillium fungus was inhibiting bacterial growth by producing an antibacterial substance or by some other mechanism. I’m not sure how to operationalize this distinction more precisely.

Relevance to AI risk

As I explained at the beginning of this post, one of my goals is to develop a typical case for the development of major technological advancements, so we can inform our priors about AI progress and think about the ways in which it may or may not differ from past progress. I have my own views on the ways in which it is likely to differ, but I don’t want to get into them here. To that end, here is what I expect AI progress will look like if it fits the patterns of past progress.

- Major new methods or capabilities for AI will be demonstrated in ways that are generally quite poor.

- Under the right conditions, such as a multi-billion-dollar effort by a state actor, the first version of an important new AI capability or method may be sufficiently advanced to be a major global risk or of very large strategic value.

- An early system with poor practical performance is likely to be followed by very rapid progress toward a system that is more valuable or dangerous.

- Progress leading up to an important new method or capability in AI is more likely to be accelerating than it is to be stagnant. Notable advances preceding a new capability may not be direct ancestors to it.

- Although high-risk and transformative AI capabilities are likely to emerge in an environment of less uncertainty than today, the feasibility of such capabilities and which methods can produce them are likely to be contentious issues within the AI community right up until those capabilities are demonstrated.

Thanks to Katja Grace, Daniel Kokotajlo, Asya Bergal, and others for the mountains of data and analysis that most of this article is based on. Thanks to Jalex Stark, Eli Tyre, and Richard Ngo for their helpful comments. All views and errors are my own.

All charts in this post are original to AI Impacts. All photos are in the public domain.