Deep learning models are often described as ‘black boxes’: they produce ‘magical’ results that make sense to us humans, yet it is hard to explain how they arrive at them. Today’s image generation models are deep learning models trained on thousands, if not millions, of images, and it is impossible to detail everything they learn from the training data and later use to generate photorealistic images. What we do know for sure is that images from the training data are encoded into an intermediate form that the model uses to capture the most important features of the input data. The hyperspace in which these intermediate representations (vectors) live is known as the latent space.

In this article, I’ll review some features of the latent space in general and then focus on a specific latent space known as Style Space. Style Space is the hyperspace of the channel-wise style parameters, $S$, which can be analyzed to determine which style codes correspond to specific attributes.

How Have Previous Models Used The Latent Space?

Generative Adversarial Networks (GANs) learn a generator that maps latent vectors, typically sampled from a simple distribution such as a Gaussian, to images. The latent vector is decoded and upsampled into a plausible image by learned (transposed) convolutional layers. Encoders that map real images back into the latent space are a separate component, used when an existing image needs to be edited.

StyleGAN, arguably the most iconic GAN, is best known for its generator, which converts latent vectors into an intermediate latent space using a learned mapping network. The purpose of the intermediate latent space is to encourage disentanglement. The generator takes vectors from this intermediate space and uses them to inject style into the synthesis network by modulating normalized feature maps. Separately injected noise adds stochastic detail.

After these operations, the generator decodes and upsamples the style-modulated features into new artificial images that carry the visual characteristics of the style. Although this article isn’t meant to focus solely on StyleGAN, it helps to understand how the model works, because its architecture paved the way for extensive research on image representation in the latent space.

What Is ‘Disentanglement’? What Does It Mean To Be ‘Entangled’?

Training images are transformed from their high-dimensional tensor structure into latent vectors that represent the most descriptive features of the data. These features are often referred to as the ‘high-variance’ features. In this new representation, the encoding of one attribute, $a$, may also carry information about a different attribute, $b$. An image generation model that alters attribute $a$ will then inadvertently alter attribute $b$ as well. Such representations of $a$ and $b$ are called entangled, and the hyperspace these vector representations live in is an entangled latent space. Representations (vectors) are said to be disentangled if each latent dimension controls a single visual attribute of the image. If, in addition, each attribute is controlled by a single latent dimension, the representations are called complete.
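To make the distinction concrete, here is a toy sketch (an illustration under simple assumptions, not code from any of the papers). Each attribute is read out as a weighted sum of a 2-D latent vector, using hypothetical readout weights. Shifting latent dimension 0 changes only attribute $a$ in the disentangled case, but changes both $a$ and $b$ in the entangled case.

```python
# Toy illustration of entangled vs. disentangled latent codes.
# Each attribute is "read out" as a weighted sum of latent dimensions (hypothetical weights).

def attribute_values(latent, readouts):
    """Return one scalar per attribute: the dot product of the latent with each readout."""
    return [sum(w * x for w, x in zip(weights, latent)) for weights in readouts]


def edit_dimension(latent, dim, delta):
    """Return a copy of `latent` with a single dimension shifted by `delta`."""
    edited = list(latent)
    edited[dim] += delta
    return edited


def attribute_change(latent, readouts, dim, delta):
    """How much each attribute moves when one latent dimension is edited."""
    before = attribute_values(latent, readouts)
    after = attribute_values(edit_dimension(latent, dim, delta), readouts)
    return [round(a - b, 6) for a, b in zip(after, before)]


entangled = [[1.0, 0.0], [0.5, 1.0]]     # dimension 0 feeds both attribute a and b
disentangled = [[1.0, 0.0], [0.0, 1.0]]  # each dimension feeds exactly one attribute

z = [0.2, -0.3]
print("entangled", attribute_change(z, entangled, dim=0, delta=1.0))
print("disentangled", attribute_change(z, disentangled, dim=0, delta=1.0))
```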

What Does StyleGAN Teach Us About The Latent Space?

Inverting an image into a GAN’s latent space means finding a compact, lower-dimensional latent representation that the generator maps back to that image. One way to do this is to train an encoder that maps input images to their representations in the latent space, using an auto-encoder-style setup. The encoder compresses the input image into a low-dimensional vector, and the decoder upsamples that vector back into a full high-dimensional image. The loss function compares the generated image with the input image, and the encoder and decoder parameters are adjusted accordingly.

The original StyleGAN model introduced the idea of an intermediate latent space $W$. This space is created by passing latent vectors from the original latent space $Z$ through a learned mapping network. The reason for transforming the original vectors $z \in Z$ into intermediate representations $w \in W$ is to obtain a more disentangled latent space.
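A minimal sketch of a mapping network, assuming a StyleGAN-like design in which $z$ is first normalized and then passed through 8 fully connected layers with leaky ReLU activations. The weights are random and untrained, the width is shrunk from 512 to 16 so the example runs quickly, and all helper names are hypothetical.

```python
import math
import random


def pixel_norm(z, eps=1e-8):
    """Rescale z to unit root-mean-square before it enters the mapping network."""
    rms = math.sqrt(sum(v * v for v in z) / len(z) + eps)
    return [v / rms for v in z]


def leaky_relu(v, slope=0.2):
    return v if v >= 0 else slope * v


def make_layer(dim_in, dim_out, rng):
    """Random (untrained) weights and zero biases for one fully connected layer."""
    scale = 1.0 / math.sqrt(dim_in)
    weights = [[rng.gauss(0.0, scale) for _ in range(dim_in)] for _ in range(dim_out)]
    return weights, [0.0] * dim_out


def mapping_network(z, layers):
    """Map z in Z to w in W: normalize, then apply each fully connected layer + leaky ReLU."""
    h = pixel_norm(z)
    for weights, biases in layers:
        h = [leaky_relu(sum(w_ij * x for w_ij, x in zip(row, h)) + b)
             for row, b in zip(weights, biases)]
    return h


rng = random.Random(0)
dim = 16        # StyleGAN uses 512
num_layers = 8  # depth assumed for the mapping network
layers = [make_layer(dim, dim, rng) for _ in range(num_layers)]
z = [rng.gauss(0.0, 1.0) for _ in range(dim)]
w = mapping_network(z, layers)
print(len(w))
```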

Manipulating an image in the latent space requires first finding a matching latent code $w$ for it. As the StyleGAN 2 authors point out, results improve when a different $w$ is used for each layer of the generator. Extending the latent space this way finds an image closer to the given input image, but it also makes it possible to project arbitrary images that should have no latent representation at all. To avoid this, they concentrate on finding latent codes in the original, unextended latent space, which correspond to images the generator could actually have produced.
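Finding a matching latent code is an optimization problem. The sketch below illustrates the idea with a hypothetical toy linear "generator" ($\text{image} = A\,w$) and plain gradient descent on squared pixel error. Real projectors work with a deep generator and usually a perceptual loss, so treat this as an illustration only. An image the generator can produce is recovered exactly. An arbitrary image outside the generator’s range still leaves a residual error, which is the situation the unextended latent space is meant to expose.

```python
def generate(A, w):
    """Toy linear 'generator' (hypothetical stand-in for the synthesis network): image = A @ w."""
    return [sum(a * x for a, x in zip(row, w)) for row in A]


def squared_error(a, b):
    return sum((x - y) ** 2 for x, y in zip(a, b))


def project(A, target, steps=500, lr=0.1):
    """Search for a latent w whose generated image matches `target` (gradient descent)."""
    w = [0.0] * len(A[0])
    for _ in range(steps):
        residual = [g - t for g, t in zip(generate(A, w), target)]
        # Gradient of sum(residual**2) with respect to w is 2 * A^T @ residual.
        grad = [2 * sum(A[i][j] * residual[i] for i in range(len(A))) for j in range(len(w))]
        w = [x - lr * g for x, g in zip(w, grad)]
    return w


A = [[1.0, 0.2], [0.1, 0.8], [0.3, 0.4]]  # 3 "pixels" generated from a 2-D latent

# An image the generator can produce: projection recovers its latent code.
w_true = [0.5, -1.0]
w_found = project(A, generate(A, w_true))

# An arbitrary image outside the generator's range: the closest match still has error.
arbitrary = [1.0, 1.0, -1.0]
w_closest = project(A, arbitrary)
print(w_found, squared_error(generate(A, w_closest), arbitrary))
```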

StyleGAN-XL tackles image generation on a large-scale, multi-class dataset (ImageNet). In addition to encoding image attributes, the latent vectors must then also encode the class information of the data. StyleGAN works with a 512-dimensional latent code by default. That is high compared with the intrinsic dimension of natural image datasets: ImageNet’s intrinsic dimension is estimated at around 40. A 512-dimensional latent code is therefore highly redundant and makes the mapping network’s task harder at the beginning of training. To address this, the authors reduce StyleGAN’s latent code $z$ to 64 dimensions, which gives stable training and lower FID values. Conditioning the model on class information is essential for controlling the sample class, and it improves overall performance. In a class-conditional StyleGAN, a one-hot encoded label is embedded into a 512-dimensional vector and concatenated with $z$. For the discriminator, class information is projected onto its last layer.
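The conditioning step can be sketched in a few lines, assuming a learned embedding matrix (random here) and a toy 10-class label set. Multiplying a one-hot label by the embedding matrix simply selects one of its columns. The result is concatenated with the 64-dimensional $z$, giving a 576-dimensional input to the mapping network. Names and sizes other than 64 and 512 are hypothetical.

```python
import random


def one_hot(label, num_classes):
    vec = [0.0] * num_classes
    vec[label] = 1.0
    return vec


def embed(one_hot_vec, embedding):
    """Linear embedding: multiplying a one-hot vector by the matrix selects one column."""
    return [sum(row[k] * one_hot_vec[k] for k in range(len(one_hot_vec))) for row in embedding]


def conditional_input(z, label, embedding, num_classes):
    """Concatenate the latent code z with the embedded class label."""
    return z + embed(one_hot(label, num_classes), embedding)


rng = random.Random(0)
num_classes = 10  # toy label set; ImageNet has 1000 classes
z_dim = 64        # reduced latent size described in the text
embed_dim = 512   # class embedding size described in the text
embedding = [[rng.gauss(0.0, 1.0) for _ in range(num_classes)] for _ in range(embed_dim)]
z = [rng.gauss(0.0, 1.0) for _ in range(z_dim)]
x = conditional_input(z, label=3, embedding=embedding, num_classes=num_classes)
print(len(x))
```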

How Does StyleGAN Style The Images?

Once an image has been inverted into the latent space ($z \in Z$) and then mapped into the intermediate latent space ($w \in W$), “learned affine transformations then specialize $w$ into styles that control Adaptive Instance Normalization (AdaIN) operations after each convolution layer of the synthesis network $G$. The AdaIN operations normalize each feature map of the input data separately, thereafter the normalized vectors are scaled and biased by the corresponding style vector that is injected into the operation.” (Oanda 2023) Different style vectors encode different changes to different features of the image, e.g. hairstyle, skin tone, or illumination. To tell apart the style vectors that affect specific image attributes, we need to turn our attention to the style space.
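The AdaIN operation from the StyleGAN paper is $\mathrm{AdaIN}(x_i, y) = y_{s,i}\,\frac{x_i - \mu(x_i)}{\sigma(x_i)} + y_{b,i}$. Each feature map $x_i$ is normalized on its own and then scaled and shifted by the style values for channel $i$. A minimal pure-Python sketch follows, with feature maps flattened to lists and a small `eps` added for numerical safety (an implementation choice assumed here).

```python
import math


def adain(feature_maps, style_scale, style_bias, eps=1e-5):
    """AdaIN(x_i, y) = y_s_i * (x_i - mean(x_i)) / std(x_i) + y_b_i, applied per channel i.

    feature_maps: one flat list of activations per channel.
    style_scale, style_bias: one value per channel, derived from w by a learned affine map.
    """
    out = []
    for channel, y_s, y_b in zip(feature_maps, style_scale, style_bias):
        mean = sum(channel) / len(channel)
        std = math.sqrt(sum((v - mean) ** 2 for v in channel) / len(channel) + eps)
        # Each feature map is normalized on its own, then scaled and shifted by its style.
        out.append([y_s * (v - mean) / std + y_b for v in channel])
    return out


features = [[1.0, 2.0, 3.0, 4.0], [10.0, 10.5, 9.5, 10.0]]
styled = adain(features, style_scale=[2.0, 0.5], style_bias=[0.5, -1.0])
print(styled)
```

After AdaIN, each channel’s mean equals its style bias and its standard deviation (approximately) equals its style scale, whatever statistics the input feature map had.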

Is There A More Disentangled Space Than The Latent Space $W$? Yes: Style Space

Style Space is the hyperspace of the channel-wise style parameters, $S$, and it is significantly more disentangled than the other intermediate latent spaces explored by earlier models. The style vectors (a.k.a. style codes) referred to in the previous section contain numerous style channels that affect different local regions of an image. My understanding of the difference between style vectors and style channels can be illustrated with the following example: “A style vector that edits a person’s lip makeup can contain style channels that determine the boundaries of the lips in the edited generated image.” The authors who discovered Style Space describe a method for finding a large collection of style channels, each of which is shown to control a distinct visual attribute in a highly localized and disentangled manner. They also propose a simple method for identifying style channels that control a specific attribute, using a pretrained classifier or a small number of example images. In addition to these methods for identifying style channels, the authors introduce a new metric, the Attribute Dependency metric, to measure disentanglement in generated and edited images.

After the network processes the intermediate latent vector $w$, the latent code is used to alter the channel-wise activation statistics at each of the generator’s convolution layers. StyleGAN modulates these channel-wise means and variances with the AdaIN operations described above. Some control over the generated results can be obtained through conditioning, but that requires training the model with annotated data. The style-based design instead makes it possible to discover a variety of interpretable generator controls after the generator has been trained. However, most methods for discovering such controls require pretrained classifiers, a large set of paired examples, or manual examination of many candidate control directions, which limits their versatility. Furthermore, the individual controls they discover tend to be entangled and non-local.

Proving the Validity of Style Space, $S$

The first latent space, $Z$, is typically normally distributed. Random noise vectors $z \in Z$ are transformed into an intermediate latent space $W$ through a sequence of fully connected layers. With the introduction of StyleGAN, the $W$ space was found to better reflect the disentangled nature of the learned distribution. To create the style space, each $w \in W$ is further transformed into channel-wise style parameters $s$, using a different learned affine transformation for each layer of the generator. These channel-wise parameters live in a new space known as Style Space, or $S$.
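The step from $W$ to $S$ can be sketched as follows, under stated assumptions. Every layer has its own affine map that turns the same $w$ into one style value per channel of that layer, and concatenating the per-layer outputs gives one point $s \in S$. The layer widths, the random weights, and the bias of 1 are hypothetical choices for illustration.

```python
import random


def affine(w, weights, biases):
    """One learned affine transform: s_l = weights @ w + biases."""
    return [sum(a * x for a, x in zip(row, w)) + b for row, b in zip(weights, biases)]


def to_style_space(w, affines):
    """Map one w to per-layer style vectors; concatenated, they form one point s in S."""
    per_layer = [affine(w, weights, biases) for weights, biases in affines]
    s = [value for layer in per_layer for value in layer]
    return per_layer, s


rng = random.Random(0)
w_dim = 8
channels_per_layer = [8, 8, 4]  # hypothetical layer widths; one style value per channel
affines = [
    ([[rng.gauss(0.0, 1.0) for _ in range(w_dim)] for _ in range(c)], [1.0] * c)
    for c in channels_per_layer
]
w = [rng.gauss(0.0, 1.0) for _ in range(w_dim)]  # the same w feeds every layer
per_layer, s = to_style_space(w, affines)
print([len(layer) for layer in per_layer], len(s))
```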

After the introduction of the intermediate space $W$, other researchers introduced another latent space, $W+$, because they noticed it was difficult to reliably embed images into the $Z$ and $W$ spaces. $W+$ is a concatenation of 18 different 512-dimensional $w$ vectors, one for each layer of the StyleGAN architecture that receives input via AdaIN. The $Z$ space has 512 dimensions, the $W$ space has 512 dimensions, the $W+$ space has 9216 dimensions, and the $S$ space has 9088 dimensions. Within the $S$ space, 6048 dimensions are applied to feature maps and 3040 dimensions are applied to tRGB blocks. The Style Space authors then set out to determine which of these latent spaces provides the most disentangled representation, based on the disentanglement, completeness, and informativeness (DCI) metrics.
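The dimension counts above can be reproduced with a short calculation under two assumptions. The first is the per-resolution channel schedule below, which we believe matches the 1024×1024 StyleGAN2 generator. The second is that every modulated layer receives one style value per input channel. Under these assumptions the arithmetic gives the 6048/3040 split, the 9088-dimensional $S$, and $18 \times 512 = 9216$ dimensions for $W+$.

```python
import math

# Assumed feature-map channels at each resolution of the 1024x1024 generator.
CHANNELS = {4: 512, 8: 512, 16: 512, 32: 512, 64: 512,
            128: 256, 256: 128, 512: 64, 1024: 32}


def latent_dims(channels=CHANNELS, w_dim=512):
    resolutions = sorted(channels)
    # Each modulated conv receives one style value per *input* channel.
    conv = channels[resolutions[0]]  # single conv at 4x4
    for prev, res in zip(resolutions, resolutions[1:]):
        conv += channels[prev]  # upsampling conv: input has the previous resolution's channels
        conv += channels[res]   # second conv at this resolution
    # One tRGB layer per resolution, also one style value per input channel.
    trgb = sum(channels[res] for res in resolutions)
    num_ws = 2 * int(math.log2(resolutions[-1])) - 2  # 18 for 1024x1024
    return {"Z": w_dim, "W": w_dim, "W+": num_ws * w_dim,
            "S": conv + trgb, "S_conv": conv, "S_tRGB": trgb}


print(latent_dims())
```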

Disentanglement measures the degree to which each latent dimension captures at most one attribute. Completeness measures the degree to which each attribute is controlled by at most one latent dimension. Informativeness measures how accurately the attributes can be classified given the latent representation.
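Disentanglement and completeness are usually computed from an importance matrix $R$, following the DCI framework of Eastwood and Williams. Entry $R_{ij}$ says how much latent dimension $i$ matters for predicting attribute $j$, for example the feature importances of a regressor trained per attribute. The sketch below assumes $R$ is already given and weights each per-dimension and per-attribute score by its share of total importance (one common convention). Informativeness, the prediction error itself, is left out.

```python
import math


def _entropy(probs, base):
    """Entropy with logarithm base `base`; terms with p = 0 contribute nothing."""
    return -sum(p * math.log(p, base) for p in probs if p > 0)


def dci_disentanglement_completeness(R):
    """R[i][j] >= 0: importance of latent dimension i for predicting attribute j.

    Needs at least 2 latent dimensions and 2 attributes (entropy bases must exceed 1).
    """
    n_dims, n_attrs = len(R), len(R[0])
    total = sum(sum(row) for row in R)

    # Disentanglement: 1 - entropy of each dimension's importance over the attributes.
    disentanglement = 0.0
    for row in R:
        row_sum = sum(row)
        if row_sum > 0:
            d_i = 1.0 - _entropy([r / row_sum for r in row], base=n_attrs)
            disentanglement += (row_sum / total) * d_i

    # Completeness: 1 - entropy of each attribute's importance over the dimensions.
    completeness = 0.0
    for j in range(n_attrs):
        column = [R[i][j] for i in range(n_dims)]
        col_sum = sum(column)
        if col_sum > 0:
            c_j = 1.0 - _entropy([r / col_sum for r in column], base=n_dims)
            completeness += (col_sum / total) * c_j
    return disentanglement, completeness


print(dci_disentanglement_completeness([[1.0, 0.0], [0.0, 1.0]]))
```

A one-to-one importance matrix scores 1 on both measures. If two dimensions both control the same attribute, disentanglement stays at 1 but completeness drops to 0.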

To compare the DCI of the three latent spaces, the authors trained 40 binary attribute classifiers on the 40 CelebA attributes, but ended up basing their experiments on the ~30 best-represented attributes. Next, they randomly sampled 500K latent vectors $z \in Z$ and computed the corresponding $w$ and $s$ vectors, as well as the images these vectors generate. They then annotated each image with each of the ~30 classifiers, recording the logit rather than the binary outcome. This turns each attribute into a continuous score that can be predicted from the latent dimensions when computing DCI. The informativeness of $W$ and $S$ is high and comparable, while $S$ scores much higher in terms of disentanglement and completeness.

There are two main questions that Style Space analysis tries to answer:

- Which style channels control the visual appearance of local semantic regions? This question can take the form, “Which style channel(s) control a specific region of the image, even if that region does not match a conventional class label such as ‘nose’?”

- Which style channels control specific attributes specified by a set of positive examples? This question can take the form, “Which style channel(s) control the generation of images of a person with red hair, given a few images of people with red hair?”

Identifying the Channels that Control Specific Local Semantic Regions

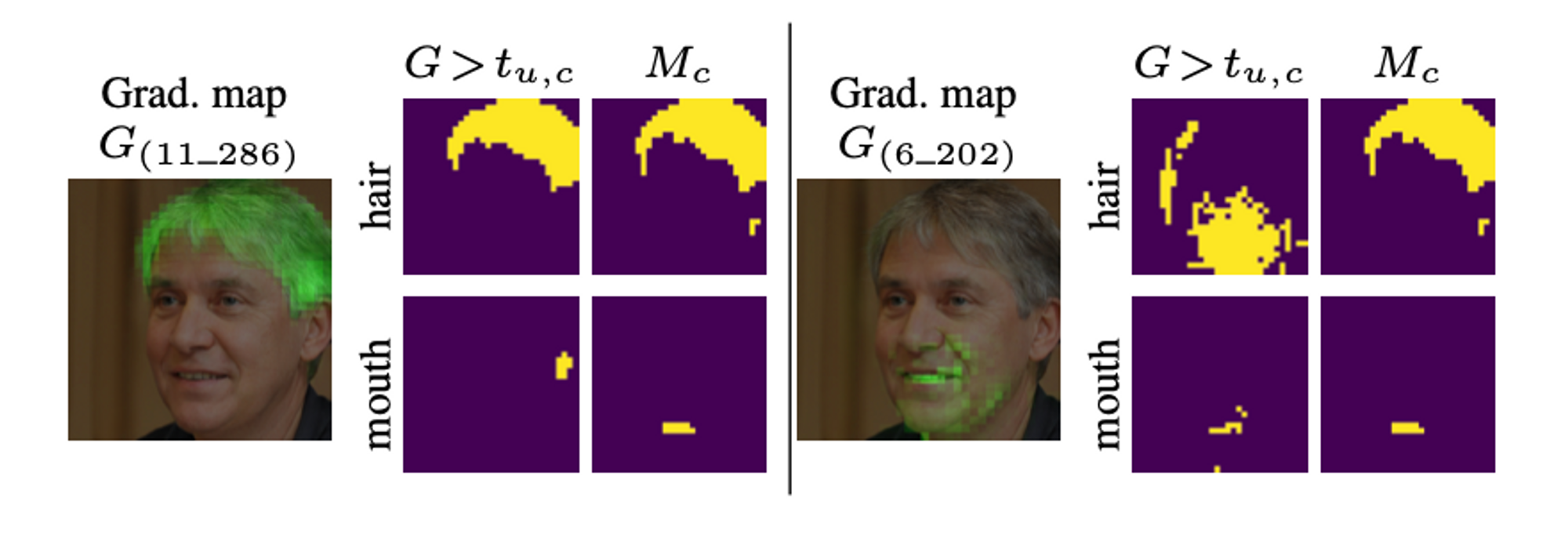

After showing that Style Space, $S$, is the best suited to represent styles in the latent space, the authors proposed a method for detecting the $S$ channels that control the visual appearance of local semantic regions. To do this, they examine the gradient maps of generated images with respect to different channels and measure their overlap with specific semantic regions. With this information, they can identify the channels that are consistently active in each region.

How do they get the gradient map?

For each image generated with a style code $s \in S$, back-propagation is applied to compute the gradient map of the image with respect to each channel of $s$. The gradient map $G_u^s$ for channel $u$ highlights the pixels that change when the value of channel $u$ changes, which tells us which region of the image that channel affects. For implementation purposes, note that the gradient maps are computed at a reduced spatial resolution $r \times r$ to save computation.
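The text computes these maps with back-propagation. To keep the sketch dependency-free, it instead estimates the same quantity, the per-pixel magnitude $|\partial\,\text{image} / \partial s_u|$, with central finite differences on a hypothetical 4×4 toy generator. It then average-pools the result to $r \times r$. The toy generator and all function names are assumptions for illustration.

```python
def toy_generator(s, size=4):
    """Hypothetical generator producing a size x size grayscale image from a 3-channel style code.
    Channel 0 brightens the top-left quadrant, channel 1 the bottom half (nonlinearly),
    and channel 2 every pixel slightly."""
    half = size // 2
    img = [[0.0] * size for _ in range(size)]
    for y in range(size):
        for x in range(size):
            if y < half and x < half:
                img[y][x] += s[0]
            if y >= half:
                img[y][x] += s[1] ** 2
            img[y][x] += 0.1 * s[2]
    return img


def gradient_map(generator, s, u, eps=1e-4):
    """Per-pixel |d image / d s_u|, estimated with central finite differences
    (a stand-in for the back-propagated gradient described in the text)."""
    plus, minus = list(s), list(s)
    plus[u] += eps
    minus[u] -= eps
    img_plus, img_minus = generator(plus), generator(minus)
    return [[abs(p - m) / (2 * eps) for p, m in zip(row_p, row_m)]
            for row_p, row_m in zip(img_plus, img_minus)]


def downsample(grid, r):
    """Average-pool a square grid to r x r (assumes the grid size is a multiple of r)."""
    k = len(grid) // r
    return [[sum(grid[y * k + dy][x * k + dx] for dy in range(k) for dx in range(k)) / (k * k)
             for x in range(r)] for y in range(r)]


s = [0.5, 1.0, 0.0]
g0 = downsample(gradient_map(toy_generator, s, u=0), r=2)  # should light up top-left only
g1 = downsample(gradient_map(toy_generator, s, u=1), r=2)  # should light up the bottom half
print(g0, g1)
```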

A pretrained image segmentation network is used to obtain the semantic map $M^s$ of the generated image ($M^s$ is also resized to $r \times r$). For each semantic category $c$ produced by the segmentation model (e.g. hair) and each channel $u$ under evaluation, they measure the overlap between the semantic region $M_c^s$ and the gradient map $G_u^s$. In other words, the goal is to identify distinct semantic regions in a generated image, determine the regions that change in response to a style channel $u$, and then measure the overlap between the two. To ensure consistency across a variety of images, they sample 1K different style codes and compute, for each code $s$ and each channel $u$, the semantic category with the highest overlap. The goal is to detect channels for which the highest-overlap category is the same for the majority of the sampled images.
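A sketch of the overlap-and-vote step, under stated assumptions. Overlap is taken as the fraction of a channel’s gradient magnitude that falls inside a binary category mask, which is one plausible normalization; the paper’s exact formula and threshold may differ. A channel is assigned to a category only if that category wins in more than half of the sampled style codes. The masks, maps, and function names are hypothetical.

```python
from collections import Counter


def overlap(grad_map, mask):
    """Fraction of a channel's gradient magnitude that falls inside a binary semantic mask."""
    total = sum(sum(row) for row in grad_map)
    if total == 0:
        return 0.0
    inside = sum(g * m for g_row, m_row in zip(grad_map, mask) for g, m in zip(g_row, m_row))
    return inside / total


def best_category(grad_map, masks):
    """Semantic category whose mask overlaps most with the gradient map."""
    return max(masks, key=lambda c: overlap(grad_map, masks[c]))


def consistent_category(per_sample, min_fraction=0.5):
    """per_sample: (grad_map, masks) pairs for ONE channel, one pair per sampled style code.
    Return the category that wins in more than `min_fraction` of the samples, else None."""
    votes = Counter(best_category(g, m) for g, m in per_sample)
    category, count = votes.most_common(1)[0]
    return category if count / len(per_sample) > min_fraction else None


masks = {"hair": [[1, 1], [0, 0]], "face": [[0, 0], [1, 1]]}
top_heavy = [[0.9, 0.8], [0.1, 0.0]]
bottom_heavy = [[0.0, 0.1], [0.7, 0.9]]
samples = [(top_heavy, masks), (top_heavy, masks), (bottom_heavy, masks)]
print(consistent_category(samples))
```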

In the image above, style channels that affect parts of the hair region have been identified, and each channel’s index is shown in the lower-left corner of the image. For each channel $x$, there is a pair of images in which the value of channel $x$ has been shifted in the positive ($+x$) and negative ($-x$) directions. The difference between these two images shows the specific region that channel $x$ alters.
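Producing such a $+x$/$-x$ pair only requires editing one coordinate of the style code before it is fed to the generator. The sketch below assumes the shift is measured in units of the channel’s standard deviation over generated samples. That scaling is an assumption made so that channels with different ranges can be edited comparably, and `alpha` and `channel_std` are hypothetical names.

```python
def edit_channel(s, u, alpha, channel_std):
    """Return a copy of style code s with channel u shifted by alpha standard deviations."""
    edited = list(s)
    edited[u] += alpha * channel_std[u]
    return edited


def plus_minus_pair(s, u, alpha, channel_std):
    """Style codes for the '+x' and '-x' images of channel u; all other channels are untouched."""
    return edit_channel(s, u, +alpha, channel_std), edit_channel(s, u, -alpha, channel_std)


s = [0.0, 1.0, 2.0]
channel_std = [1.0, 0.5, 2.0]
s_plus, s_minus = plus_minus_pair(s, u=1, alpha=3.0, channel_std=channel_std)
print(s_plus, s_minus)
```

Because only channel $u$ differs between the two codes, any difference between the resulting images can be attributed to that channel alone.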

Detecting the Channels that Control Attributes Specified by Positive Examples

To determine the channels that control specific attributes, we need a few positive image samples (images that exhibit the attribute) to compare against the rest of the generated distribution. The Style Space authors argue that this inference can be made with only 30 samples. Differences between the style vectors of the positive examples and the mean style vector of the entire generated distribution reveal which channels are most relevant for the target attribute. In their experiments, the authors obtained a set of positive images, i.e. images whose visual attributes match the attribute’s definition, and set out to determine the style channels responsible for that styling. They computed the style vectors of the positive images and compared them with the style vectors of the entire dataset to confirm which channels are distinctive for the target attribute. We know from earlier paragraphs that the style channel vector is obtained by applying affine transformations to the intermediate latent vector $w$. Once the style vectors are available, the relevance of each channel $u$ to the desired attribute is measured with the ratio $\theta_u$.
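A minimal sketch of the relevance ratio, assuming $\theta_u = |\mu_u^p - \mu_u| / \sigma_u^p$. Here $\mu_u$ is channel $u$’s mean over all generated style vectors, and $\mu_u^p$, $\sigma_u^p$ are its mean and standard deviation over the positive examples. This is our reading of the Style Space paper rather than a verified reproduction, so check the paper for its exact normalization. A channel scores highly when the positives shift it consistently (large mean offset, small spread).

```python
import statistics


def relevance_scores(all_styles, positive_styles):
    """theta_u = |mean over positives - mean over all samples| / std over positives."""
    thetas = []
    for u in range(len(all_styles[0])):
        mu = statistics.fmean(s[u] for s in all_styles)
        pos = [s[u] for s in positive_styles]
        pos_mean, pos_std = statistics.fmean(pos), statistics.pstdev(pos)
        thetas.append(abs(pos_mean - mu) / pos_std if pos_std > 0 else float("inf"))
    return thetas


def rank_channels(thetas):
    """Channel indices sorted from most to least relevant."""
    return sorted(range(len(thetas)), key=lambda u: thetas[u], reverse=True)


all_styles = [[0, 0, 0], [1, 1, 1], [-1, -1, -1], [2, -2, 0], [-2, 2, 0]]
positives = [[1, 3, 0.5], [-1, 3.2, -0.5], [0.5, 2.8, 0]]  # channel 1 is consistently high
thetas = relevance_scores(all_styles, positives)
print(rank_channels(thetas))
```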

How do they determine the channels relevant to a specific attribute?

The first step is to use a set of pretrained classifiers to identify the top 1K positive image examples for each of the selected attributes. For each attribute, they then rank all style channels by their relevance, using the relevance ratio $\theta_u$.

In the research paper, the authors manually examined the top 30 channels to verify that they indeed control the target attribute. They found that 16 CelebA attributes can be controlled by at least one style channel. For well-distinguished visual attributes (gender, black hair, etc.), the method was able to find only one controlling channel. The more specific the attribute, the fewer channels are needed to control it.

Most of the detected attribute-specific control channels were ranked highly by the proposed importance score $\theta_u$. This suggests that a small number of positive examples provided by a user may be sufficient for identifying such channels. The authors note that with only 30 positive samples you can identify the channels required to produce the given style changes. Increasing the number of positive examples, and only considering channels that are locally active in regions related to the target attribute, improves detection accuracy.

Summary

The work on Style Space established a way for us to understand and identify style channels that control both local semantic regions and specific attributes defined by positive samples. The benefit of identifying style channels that affect specific local regions is that the researcher doesn’t need to have a specific attribute in mind. It’s an open-ended mapping that lets the researcher focus on regions of interest that aren’t restricted to conventional labels of face regions, e.g. nose, lips, and eyes. A gradient map can spread across several conventional regions or cluster around a small portion of one. Having an open-ended mapping allows the researcher to identify style changes without bias, e.g. the hair-editing channel (above) that changes the sparsity of hair in someone’s bangs. The method based on positive attribute examples allows the researcher to identify the style channels that control specific attributes without the need for a gradient map or any image segmentation technique. This method could be very helpful if you had a few positive examples of a rare attribute and wanted to find the corresponding style channels. Once you find the style channels that control the given attribute, you could deliberately generate more images with the rare attribute to augment a training dataset. There is a lot more ground to cover in terms of exploring and exploiting the latent space, but this is an overview of the tools we gain by focusing on Style Space, $S$.

Citations:

- Karras, Tero, Samuli Laine, and Timo Aila. “A style-based generator architecture for generative adversarial networks.” Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition. 2019.

- Karras, Tero, et al. “Analyzing and improving the image quality of StyleGAN.” Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition. 2020.

- Karras, Tero, et al. “Alias-free generative adversarial networks.” Advances in Neural Information Processing Systems 34 (2021): 852-863.

- Abdal, Rameen, Yipeng Qin, and Peter Wonka. “Image2StyleGAN: How to embed images into the StyleGAN latent space?” Proceedings of the IEEE/CVF International Conference on Computer Vision. 2019.

- Wu, Zongze, Dani Lischinski, and Eli Shechtman. “StyleSpace analysis: Disentangled controls for StyleGAN image generation.” Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition. 2021.