At a virtual event this morning, Meta lifted the curtains on its efforts to develop in-house infrastructure for AI workloads, including generative AI like the type that underpins its recently launched ad design and creation tools.

It was an attempt at a projection of strength from Meta, which historically has been slow to adopt AI-friendly hardware systems, hobbling its ability to keep pace with rivals such as Google and Microsoft.

“Building our own [hardware] capabilities gives us control at every layer of the stack, from datacenter design to training frameworks,” Alexis Bjorlin, VP of Infrastructure at Meta, told TechCrunch. “This level of vertical integration is needed to push the boundaries of AI research at scale.”

Over the past decade or so, Meta has spent billions of dollars recruiting top data scientists and building new kinds of AI, including AI that now powers the discovery engines, moderation filters and ad recommenders found throughout its apps and services. But the company has struggled to turn many of its more ambitious AI research innovations into products, particularly on the generative AI front.

Until 2022, Meta largely ran its AI workloads using a combination of CPUs (which tend to be less efficient for those sorts of tasks than GPUs) and a custom chip designed for accelerating AI algorithms. Meta pulled the plug on a large-scale rollout of the custom chip, which was planned for 2022, and instead placed orders for billions of dollars’ worth of Nvidia GPUs that required major redesigns of several of its data centers.

In an effort to turn things around, Meta made plans to start developing a more ambitious in-house chip, due out in 2025, capable of both training AI models and running them. And that was the main topic of today’s presentation.

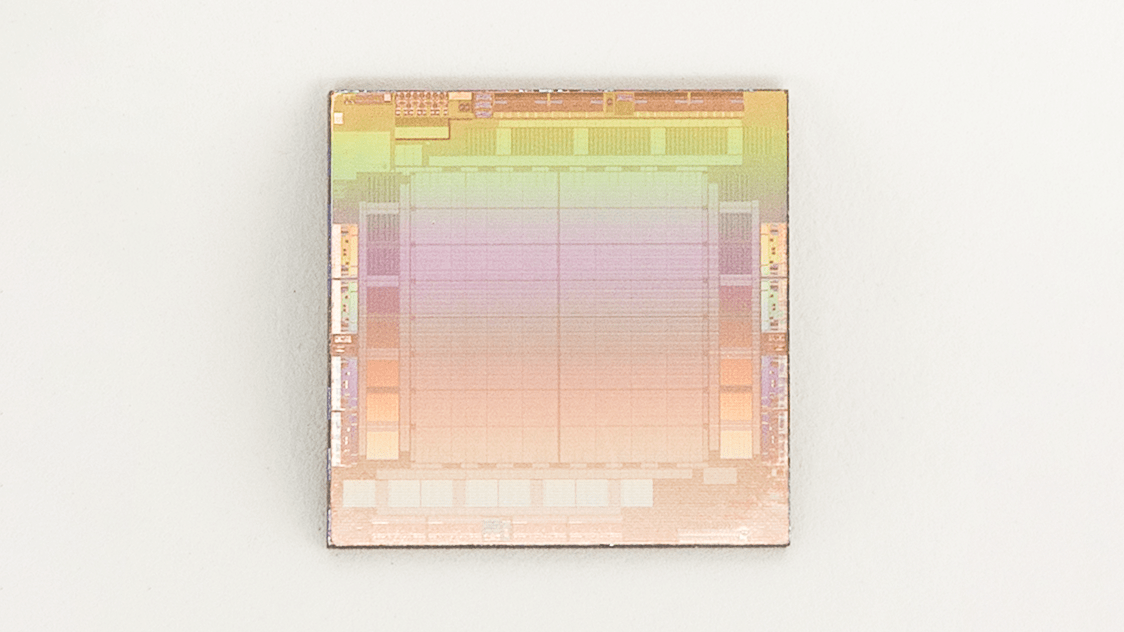

Meta calls the new chip the Meta Training and Inference Accelerator, or MTIA for short, and describes it as part of a “family” of chips for accelerating AI training and inferencing workloads. (“Inferencing” refers to running a trained model.) The MTIA is an ASIC (application-specific integrated circuit), a kind of chip that combines different circuits on one board, allowing it to be programmed to carry out one or many tasks in parallel.

An AI chip Meta custom-designed for AI workloads. Image Credits: Meta

“To gain better levels of efficiency and performance across our important workloads, we needed a tailored solution that’s co-designed with the model, software stack and the system hardware,” Bjorlin continued. “This provides a better experience for our users across a variety of services.”

Custom AI chips are increasingly the name of the game among the Big Tech players. Google created a processor, the TPU (short for “tensor processing unit”), to train large generative AI systems like PaLM-2 and Imagen. Amazon offers proprietary chips to AWS customers both for training (Trainium) and inferencing (Inferentia). And Microsoft, reportedly, is working with AMD to develop an in-house AI chip called Athena.

Meta says that it created the first generation of the MTIA, MTIA v1, in 2020, built on a 7-nanometer process. It can scale beyond its internal 128 MB of memory to up to 128 GB, and in a Meta-designed benchmark test (which, of course, has to be taken with a grain of salt) Meta claims that the MTIA handled “low-complexity” and “medium-complexity” AI models more efficiently than a GPU.

Work remains to be done in the memory and networking areas of the chip, Meta says, which present bottlenecks as the size of AI models grows, requiring workloads to be split up across several chips. (Not coincidentally, Meta recently acquired an Oslo-based team building AI networking tech at British chip unicorn Graphcore.) And for now, the MTIA’s focus is strictly on inference, not training, for “recommendation workloads” across Meta’s app family.

But Meta stressed that the MTIA, which it continues to refine, “greatly” increases the company’s efficiency in terms of performance per watt when running recommendation workloads, in turn allowing Meta to run “more enhanced” and “cutting-edge” (ostensibly) AI workloads.

A supercomputer for AI

Perhaps one day, Meta will relegate the bulk of its AI workloads to banks of MTIAs. But for now, the social network is relying on the GPUs in its research-focused supercomputer, the Research SuperCluster (RSC).

First unveiled in January 2022, the RSC, assembled in partnership with Penguin Computing, Nvidia and Pure Storage, has completed its second-phase buildout. Meta says that it now contains a total of 2,000 Nvidia DGX A100 systems sporting 16,000 Nvidia A100 GPUs.

So why build an in-house supercomputer? Well, for one, there’s peer pressure. Several years ago, Microsoft made a big to-do about its AI supercomputer built in partnership with OpenAI, and more recently said that it would team up with Nvidia to build a new AI supercomputer in the Azure cloud. Elsewhere, Google’s been touting its own AI-focused supercomputer, which has 26,000 Nvidia H100 GPUs, putting it ahead of Meta’s.

Meta’s supercomputer for AI research. Image Credits: Meta

But beyond keeping up with the Joneses, Meta says that the RSC confers the benefit of allowing its researchers to train models using real-world examples from Meta’s production systems. That’s unlike the company’s previous AI infrastructure, which leveraged only open source and publicly available datasets.

“The RSC AI supercomputer is used for pushing the boundaries of AI research in several domains, including generative AI,” a Meta spokesperson said. “It’s really about AI research productivity. We wanted to provide AI researchers with a state-of-the-art infrastructure for them to be able to develop models and empower them with a training platform to advance AI.”

At its peak, the RSC can reach nearly 5 exaflops of computing power, which the company claims makes it among the world’s fastest. (Lest that impress, it’s worth noting that some experts view the exaflops performance metric with a pinch of salt and that the RSC is far outgunned by many of the world’s fastest supercomputers.)

Meta says that it used the RSC to train LLaMA, a tortured acronym for “Large Language Model Meta AI,” a large language model that the company shared as a “gated release” to researchers earlier in the year (and which subsequently leaked in various internet communities). The largest LLaMA model was trained on 2,048 A100 GPUs, Meta says, which took 21 days.
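To put those training figures in perspective, here is a rough back-of-the-envelope sketch in Python. It uses only the numbers quoted above (2,048 GPUs, 21 days, and the RSC’s 16,000 GPUs) and assumes, purely for illustration, that every GPU was busy around the clock for the full run; the variable and function names are hypothetical, and Meta has not described its accounting this way.

```python
# Figures quoted in the article.
GPUS_USED = 2_048        # A100 GPUs used for the largest LLaMA model
TRAINING_DAYS = 21       # reported training duration
RSC_TOTAL_GPUS = 16_000  # A100 GPUs in the RSC after its second-phase buildout


def gpu_hours(num_gpus: int, days: int) -> int:
    """GPU-hours consumed, ASSUMING every GPU runs 24 hours a day for the whole period."""
    return num_gpus * days * 24


total_gpu_hours = gpu_hours(GPUS_USED, TRAINING_DAYS)
share_of_rsc = GPUS_USED / RSC_TOTAL_GPUS

print(f"Approximate GPU-hours: {total_gpu_hours:,}")
print(f"Share of the RSC's GPUs: {share_of_rsc:.1%}")
```

Under that assumption, the run works out to roughly a million GPU-hours on about an eighth of the RSC’s GPUs.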

“Building our own supercomputing capabilities gives us control at every layer of the stack; from datacenter design to training frameworks,” the spokesperson added. “RSC will help Meta’s AI researchers build new and better AI models that can learn from trillions of examples; work across hundreds of different languages; seamlessly analyze text, images, and video together; develop new augmented reality tools; and much more.”

Video transcoder

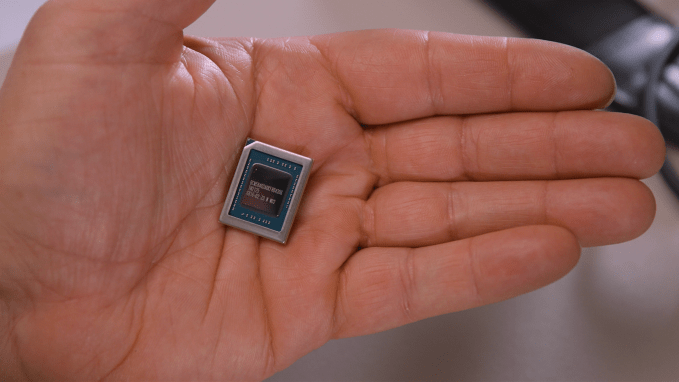

In addition to MTIA, Meta is developing another chip to handle particular types of computing workloads, the company revealed at today’s event. Called the Meta Scalable Video Processor, or MSVP, the chip is Meta’s first in-house-developed ASIC solution designed for the processing needs of video on demand and live streaming.

Meta began ideating custom server-side video chips years ago, readers might recall, announcing an ASIC for video transcoding and inferencing work in 2019. This is the fruit of some of those efforts, as well as a renewed push for a competitive advantage in the area of live video specifically.

“On Facebook alone, people spend 50% of their time on the app watching video,” Meta technical lead managers Harikrishna Reddy and Yunqing Chen wrote in a co-authored blog post published this morning. “To serve the wide variety of devices all over the world (mobile devices, laptops, TVs, etc.), videos uploaded to Facebook or Instagram, for example, are transcoded into multiple bitstreams, with different encoding formats, resolutions and quality … MSVP is programmable and scalable, and can be configured to efficiently support both the high-quality transcoding needed for VOD as well as the low latency and faster processing times that live streaming requires.”

Meta’s custom chip designed to accelerate video workloads, like streaming and transcoding. Image Credits: Meta

Meta says that its plan is to eventually offload the majority of its “stable and mature” video processing workloads to the MSVP and use software video encoding only for workloads that require specific customization and “significantly” higher quality. Work continues on improving video quality with MSVP using preprocessing methods like smart denoising and image enhancement, Meta says, as well as post-processing methods such as artifact removal and super-resolution.

“In the future, MSVP will allow us to support even more of Meta’s most important use cases and needs, including short-form videos, enabling efficient delivery of generative AI, AR/VR and other metaverse content,” Reddy and Chen said.

AI focus

If there’s a common thread in today’s hardware announcements, it’s that Meta is attempting desperately to pick up the pace where it concerns AI, specifically generative AI.

As much had been telegraphed prior. In February, CEO Mark Zuckerberg, who has reportedly made upping Meta’s compute capacity for AI a top priority, announced a new top-level generative AI team to, in his words, “turbocharge” the company’s R&D. CTO Andrew Bosworth likewise said recently that generative AI was the area where he and Zuckerberg were spending the most time. And chief scientist Yann LeCun has said that Meta plans to deploy generative AI tools to create items in virtual reality.

“We’re exploring chat experiences in WhatsApp and Messenger, visual creation tools for posts in Facebook and Instagram and ads, and over time video and multi-modal experiences as well,” Zuckerberg said during Meta’s Q1 earnings call in April. “I expect that these tools will be valuable for everyone from regular people to creators to businesses. For example, I expect that a lot of interest in AI agents for business messaging and customer support will come once we nail that experience. Over time, this will extend to our work on the metaverse, too, where people will much more easily be able to create avatars, objects, worlds, and code to tie all of them together.”

In part, Meta is feeling increasing pressure from investors concerned that the company’s not moving fast enough to capture the (potentially large) market for generative AI. It has no answer yet to chatbots like Bard, Bing Chat or ChatGPT. Nor has it made much progress on image generation, another key segment that’s seen explosive growth.

If the predictions are right, the total addressable market for generative AI software could be $150 billion. Goldman Sachs predicts that it’ll raise GDP by 7%.

Even a small slice of that could erase the billions Meta has lost in investments in “metaverse” technologies like augmented reality headsets, meetings software and VR playgrounds like Horizon Worlds. Reality Labs, Meta’s division responsible for augmented reality tech, reported a net loss of $4 billion last quarter, and the company said during its Q1 call that it expects “operating losses to increase year over year in 2023.”