Keeping up with an industry as fast-moving as AI is a tall order. So until an AI can do it for you, here’s a handy roundup of recent stories in the world of machine learning, along with notable research and experiments we didn’t cover on their own.

This week, SpeedyBrand, a company using generative AI to create SEO-optimized content, emerged from stealth with backing from Y Combinator. It hasn’t attracted a lot of funding yet ($2.5 million), and its customer base is relatively small (about 50 brands). But it got me thinking about how generative AI is beginning to change the makeup of the web.

As The Verge’s James Vincent wrote in a recent piece, generative AI models are making it cheaper and easier to generate lower-quality content. Newsguard, a company that provides tools for vetting news sources, has exposed hundreds of ad-supported sites with generic-sounding names featuring misinformation created with generative AI.

It’s causing a problem for advertisers. Many of the sites spotlighted by Newsguard seem built exclusively to abuse programmatic advertising, or the automated systems for putting ads on pages. In its report, Newsguard found close to 400 instances of ads from 141 major brands that appeared on 55 of the junk news sites.

It’s not just advertisers who should be worried. As Gizmodo’s Kyle Barr points out, it might just take one AI-generated article to drive mountains of engagement. And even if each AI-generated article only generates a few dollars, that’s less than the cost of generating the text in the first place, and potential advertising money not being sent to legitimate sites.

So what’s the solution? Is there one? It’s a pair of questions that’s increasingly keeping me up at night. Barr suggests it’s incumbent on search engines and ad platforms to exercise a tighter grip and punish the bad actors embracing generative AI. But given how fast the field is moving, and the infinitely scalable nature of generative AI, I’m not convinced that they can keep up.

Of course, spammy content isn’t a new phenomenon, and there have been waves before. The web has adapted. What’s different this time is that the barrier to entry is dramatically low, both in terms of the cost and the time that has to be invested.

Vincent strikes an optimistic tone, implying that if the web is eventually overrun with AI junk, it could spur the development of better-funded platforms. I’m not so sure. What’s not in doubt, though, is that we’re at an inflection point, and that the decisions made now around generative AI and its outputs will impact the function of the web for some time to come.

Here are other AI stories of note from the past few days:

OpenAI officially launches GPT-4: OpenAI this week announced the general availability of GPT-4, its latest text-generating model, through its paid API. GPT-4 can generate text (including code) and accept image and text inputs, an improvement over GPT-3.5, its predecessor, which only accepted text. It performs at “human level” on various professional and academic benchmarks. But it’s not perfect, as we note in our previous coverage. (Meanwhile, ChatGPT adoption is reported to be down, but we’ll see.)

Bringing ‘superintelligent’ AI under control: In other OpenAI news, the company is forming a new team led by Ilya Sutskever, its chief scientist and one of OpenAI’s co-founders, to develop ways to steer and control “superintelligent” AI systems.

Anti-bias law for NYC: After months of delays, New York City this week began enforcing a law that requires employers using algorithms to recruit, hire or promote employees to submit those algorithms for an independent audit, and to make the results public.

Valve tacitly greenlights AI-generated games: Valve issued a rare statement after claims it was rejecting games with AI-generated assets from its Steam games store. The notoriously close-lipped developer said its policy was evolving and not a stand against AI.

Humane unveils the Ai Pin: Humane, the startup launched by ex-Apple design and engineering duo Imran Chaudhri and Bethany Bongiorno, this week revealed details about its first product: the Ai Pin. As it turns out, Humane’s product is a wearable gadget with a projected display and AI-powered features, like a futuristic smartphone but in a vastly different form factor.

Warnings over EU AI regulation: Major tech founders, CEOs, VCs and industry giants across Europe signed an open letter to the EU Commission this week, warning that Europe could miss out on the generative AI revolution if the EU passes laws stifling innovation.

Deepfake scam makes the rounds: Check out this clip of U.K. consumer finance champion Martin Lewis apparently shilling an investment opportunity backed by Elon Musk. Seems normal, right? Not exactly. It’s an AI-generated deepfake, and potentially a glimpse of the AI-generated misery fast accelerating onto our screens.

AI-powered sex toys: Lovense, perhaps best known for its remote-controllable sex toys, this week announced its ChatGPT Pleasure Companion. Launched in beta in the company’s remote control app, the “Advanced Lovense ChatGPT Pleasure Companion” invites you to indulge in juicy and erotic stories that the Companion creates based on your selected topic.

Other machine learnings

Our research roundup commences with two very different projects from ETH Zurich. First is aiEndoscopic, a smart intubation spinoff. Intubation is necessary for a patient’s survival in many circumstances, but it’s a tricky manual procedure usually performed by specialists. The intuBot uses computer vision to recognize and respond to a live feed from the mouth and throat, guiding and correcting the position of the endoscope. This could allow people to safely intubate when needed rather than waiting on the specialist, potentially saving lives.

Here’s the team explaining it in a little more detail:

In a totally different domain, ETH Zurich researchers also contributed second-hand to a Pixar movie by pioneering the technology needed to animate smoke and fire without falling prey to the fractal complexity of fluid dynamics. Their approach was noticed and built on by Disney and Pixar for the film Elemental. Interestingly, it’s not so much a simulation solution as a style transfer one, a clever and apparently quite valuable shortcut. (Image up top is from this.)

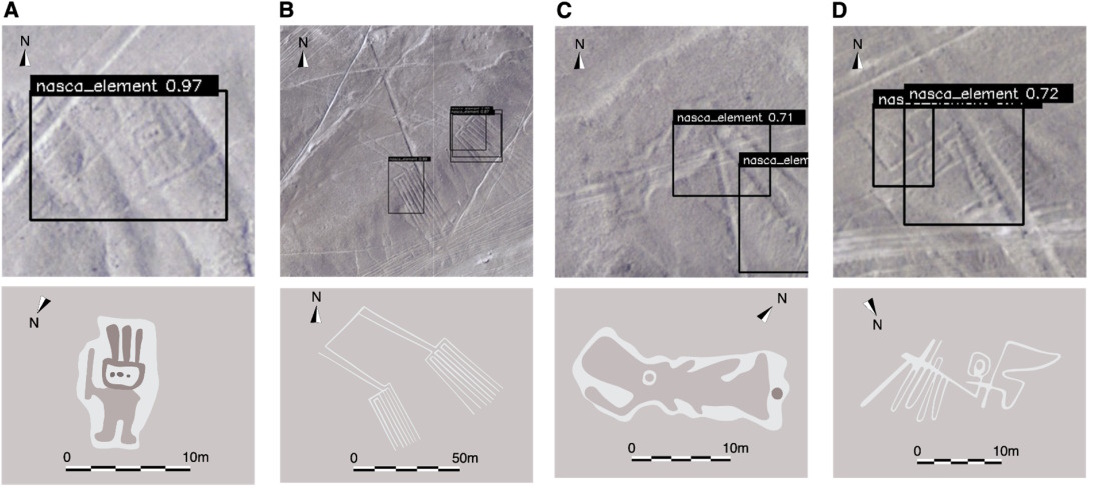

AI in nature is always interesting, but nature AI as applied to archaeology is even more so. Research led by Yamagata University aimed to identify new Nasca lines, the enormous “geoglyphs” in Peru. You might think that, being visible from orbit, they’d be pretty obvious, but erosion and tree cover from the millennia since these mysterious formations were created mean there are an unknown number hiding just out of sight. After being trained on aerial imagery of known and obscured geoglyphs, a deep learning model was set free on other views, and amazingly it detected at least four new ones, as you can see below. Pretty exciting!

Four Nasca geoglyphs newly discovered by an AI agent.

In a more immediately relevant sense, AI-adjacent tech is always finding new work detecting and predicting natural disasters. Stanford engineers are putting together data to train future wildfire prediction models by performing simulations of heated air above a forest canopy in a 30-foot water tank. If we’re to model the physics of flames and embers traveling outside the bounds of a wildfire, we’ll need to understand them better, and this team is doing what they can to approximate that.

At UCLA they’re looking into how to predict landslides, which are more common as fires and other environmental factors change. But while AI has already been used to predict them with some success, it doesn’t “show its work,” meaning a prediction doesn’t explain whether it’s because of erosion, or a water table shifting, or tectonic activity. A new “superposable neural network” approach has the layers of the network using different data but running in parallel rather than all together, letting the output be a little more specific about which variables led to increased risk. It’s also far more efficient.
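For the curious, here’s a rough idea of what “running in parallel” can buy you. This is a minimal sketch of an additive model, assuming each input variable gets its own tiny sub-network and the outputs are simply summed. It is not the UCLA team’s code, the weights are random rather than trained, and the feature names (`slope`, `rainfall`, `fire_severity`) and class names are made up for illustration.

```python
import math
import random


class FeatureSubnet:
    """Tiny one-hidden-layer network that sees exactly one input variable."""

    def __init__(self, hidden, rng):
        # Random, untrained weights: this only illustrates the structure.
        self.w1 = [rng.uniform(-1, 1) for _ in range(hidden)]
        self.b1 = [rng.uniform(-1, 1) for _ in range(hidden)]
        self.w2 = [rng.uniform(-1, 1) for _ in range(hidden)]

    def __call__(self, x):
        return sum(
            w2 * math.tanh(w1 * x + b1)
            for w1, b1, w2 in zip(self.w1, self.b1, self.w2)
        )


class AdditiveRiskModel:
    """Sums independent per-variable sub-networks into one risk score."""

    def __init__(self, feature_names, hidden=4, seed=0):
        rng = random.Random(seed)
        self.subnets = {name: FeatureSubnet(hidden, rng) for name in feature_names}

    def contributions(self, sample):
        # Each sub-network's output is that variable's share of the score.
        return {name: net(sample[name]) for name, net in self.subnets.items()}

    def predict(self, sample):
        score = sum(self.contributions(sample).values())
        return 1.0 / (1.0 + math.exp(-score))  # squash to a 0..1 risk


# Hypothetical, already-normalized inputs for one hillside.
model = AdditiveRiskModel(["slope", "rainfall", "fire_severity"])
sample = {"slope": 0.8, "rainfall": 0.3, "fire_severity": 0.6}
parts = model.contributions(sample)
risk = model.predict(sample)

for name, value in parts.items():
    print(f"{name}: {value:+.3f}")
print(f"risk: {risk:.3f}")
```

Because the sub-networks never mix their inputs, the per-variable numbers add up exactly to the pre-squash score, which is what lets you say how much each variable pushed the prediction up or down.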

Google is looking at an interesting challenge: how do you get a machine learning system to learn from dangerous knowledge yet not propagate it? For instance, if its training set includes the recipe for napalm, you don’t want it to repeat it. But in order to know not to repeat it, it needs to know what it’s not repeating. A paradox! So the tech giant is looking for a method of “machine unlearning” that lets this sort of balancing act occur safely and reliably.

If you’re looking for a deeper look at why people seem to trust AI models for no good reason, look no further than this Science editorial by Celeste Kidd (UC Berkeley) and Abeba Birhane (Mozilla). It gets into the psychological underpinnings of trust and authority and shows how current AI agents basically use these as springboards to escalate their own worth. It’s a really interesting article if you want to sound smart this weekend.

Though we often hear about the infamous Mechanical Turk fake chess-playing machine, that charade did inspire people to create what it pretended to be. IEEE Spectrum has a fascinating story about the Spanish physicist and engineer Torres Quevedo, who created an actual mechanical chess player. Its capabilities were limited, but that’s how you know it was real. Some even propose that his chess machine was the first “computer game.” Food for thought.