DynamoFL, which offers software to bring large language models (LLMs) to enterprises and fine-tune those models on sensitive data, today announced that it raised $15.1 million in a Series A funding round co-led by Canapi Ventures and Nexus Venture Partners.

The tranche, which had participation from Formus Capital and Soma Capital, brings DynamoFL's total raised to $19.3 million. Co-founder and CEO Vaikkunth Mugunthan says the proceeds will be put toward expanding DynamoFL's product offerings and growing its team of privacy researchers.

“Taken together, DynamoFL's product offering allows enterprises to develop private and compliant LLM solutions without compromising on performance,” Mugunthan told TechCrunch in an email interview.

San Francisco-based DynamoFL was founded in 2021 by Mugunthan and Christian Lau, both graduates of MIT's Department of Electrical Engineering and Computer Science. Mugunthan says they were motivated to launch the company by a shared desire to address “critical” data security vulnerabilities in AI models.

“Generative AI has brought to the fore new risks, including the ability for LLMs to ‘memorize’ sensitive training data and leak this data to malicious actors,” Mugunthan said. “Enterprises have been ill-equipped to address these risks, as properly addressing these LLM vulnerabilities would require recruiting teams of highly specialized privacy machine learning researchers to create a streamlined infrastructure for continuously testing their LLMs against emerging data security vulnerabilities.”

Enterprises are certainly encountering challenges, mainly compliance-related ones, in adopting LLMs for their purposes. Companies are worried about their confidential data ending up with developers who trained the models on user data; in recent months, major corporations including Apple, Walmart and Verizon have banned employees from using tools like OpenAI's ChatGPT.

In a recent report, Gartner identified six legal and compliance risks that organizations need to evaluate for “responsible” LLM use, including LLMs' potential to answer questions inaccurately (a phenomenon known as hallucination), data privacy and confidentiality, and model bias (for example, when a model stereotypically associates certain genders with certain professions). The report notes that these requirements can vary by state and country, complicating matters; California, for example, mandates that organizations disclose when a customer is communicating with a bot.

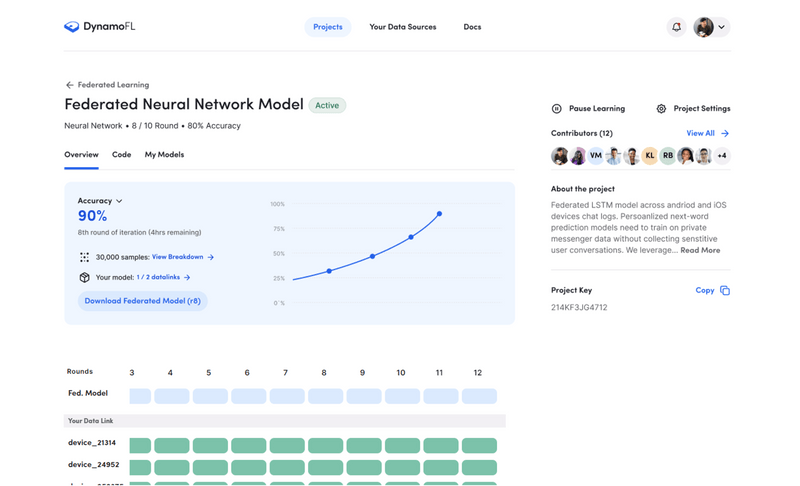

Image Credits: DynamoFL

DynamoFL, which is deployed in a customer's virtual private cloud or on-premises, attempts to solve these problems in a number of ways, including with an LLM penetration testing tool that detects and documents LLM data security risks, such as whether an LLM has memorized or could leak sensitive data. Several studies have shown that LLMs, depending on how they're trained and prompted, can expose personal information, which is anathema to large companies working with proprietary data.

In addition, DynamoFL provides an LLM development platform that incorporates techniques aimed at mitigating model data leakage risks and security vulnerabilities. Using the platform, devs can also integrate various optimizations into models, enabling them to run in hardware-constrained environments such as mobile devices and edge servers.

These capabilities aren't particularly unique, to be clear, at least not on their face. Startups like OctoML, Seldon and Deci provide tools to optimize AI models to run more efficiently on a variety of hardware. Others, like LlamaIndex and Contextual AI, are focused on privacy and compliance, providing privacy-preserving ways to train LLMs on first-party data.

So what's DynamoFL's differentiator? The “thoroughness” of its solutions, Mugunthan argues. That includes working with legal experts to draft guidelines on how to use DynamoFL to develop LLMs in compliance with U.S., European and Asian privacy laws.

The approach has attracted several Fortune 500 customers, particularly in the finance, electronics, insurance and automotive sectors.

“While products exist today to redact personally identifiable information from queries sent to LLM services, these don't meet strict regulatory requirements in sectors like financial services and insurance, where redacted personally identifiable information is commonly re-identified through sophisticated malicious attacks,” he said. “DynamoFL has drawn upon its team's expertise in AI privacy vulnerabilities to build the most comprehensive solution for enterprises seeking to meet regulatory requirements for LLM data security.”

DynamoFL doesn't address one of the stickier issues with today's LLMs: IP and copyright risks. Commercial LLMs are trained on a large amount of internet data, and sometimes they regurgitate this data, putting any company that uses them at risk of violating copyright.

But Mugunthan hinted at an expanded set of tools and solutions to come, fueled by DynamoFL's recent funding.

“Addressing regulator demands is a critical responsibility for C-suite-level managers in the IT department, particularly in sectors like financial services and insurance,” he said. “Regulatory non-compliance can result in irreparable damage to customer trust if sensitive information is leaked, carries severe fines and can cause major disruptions to an enterprise's operations. DynamoFL's privacy evaluation suite provides out-of-the-box testing for data extraction vulnerabilities and the automated documentation required to meet security and compliance requirements.”

DynamoFL, which currently employs a team of around 17, expects to have 35 staffers by the end of the year.