Jeffrey Heninger, 22 November 2022

I was recently reading the results of a survey asking climate experts about their opinions on geoengineering. The results surprised me: “We find that respondents who expect severe global climate change damages and who have little confidence in current mitigation efforts are more opposed to geoengineering than respondents who are less pessimistic about global damages and mitigation efforts.” This seems backwards. Shouldn’t people who think that climate change will be bad and that our current efforts are insufficient be more willing to discuss and research other strategies, including intentionally cooling the planet?

I do not know what they are thinking, but I can make a guess that would explain the result: people are responding using a ‘general factor of doom’ instead of considering the questions independently. Each climate expert has a p(Doom) for climate change, or perhaps a vaguer feeling of doominess. Their stated beliefs on specific questions are mostly just expressions of their p(Doom).

If my guess is correct, then people first decide how doomy climate change is, and then they use this general factor of doom to answer the questions about severity, mitigation efforts, and geoengineering. I don’t know how people establish their doominess: it might come from thinking about one specific question, or it might be based on whether they are more optimistic or pessimistic overall, or it might be something else. Once they have a general factor of doom, it determines how they respond to the specific questions they subsequently encounter. I think that people should instead decide their answers to specific questions independently, combine them to form several plausible future pathways, and then use these to determine p(Doom). Using a more detailed model is harder than using a general factor of doom, so it would not be surprising if few people did it.

To distinguish between these two possibilities, we could ask people a set of specific questions that are all doom-related but are not obviously connected to each other. For example:

- How much would the Asian monsoon weaken with 1°C of warming?

- How many people would be displaced by a 50 cm rise in sea levels?

- How much carbon dioxide will the US emit in 2040?

- How would plant growth be different if 2% of incoming sunlight were scattered by stratospheric aerosols?

If the answers to all of these questions were correlated, that would be evidence that people are using a general factor of doom to answer them instead of a more detailed model of the world.
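The test described in the paragraph above can be sketched as a small simulation. This is a hypothetical model, not data from any survey. Each simulated respondent has a latent doom score, and their answer to each question is that score times a `loading` plus independent noise. Setting `loading` to 0 models people who answer every question independently. The names `simulate_answers` and `mean_pairwise_correlation` are made up for illustration.

```python
import random
import statistics
from itertools import combinations


def pearson(xs, ys):
    """Pearson correlation coefficient of two equal-length samples."""
    mx, my = statistics.fmean(xs), statistics.fmean(ys)
    cov = sum((x - mx) * (y - my) for x, y in zip(xs, ys))
    sx = sum((x - mx) ** 2 for x in xs) ** 0.5
    sy = sum((y - my) ** 2 for y in ys) ** 0.5
    return cov / (sx * sy)


def simulate_answers(n_people, n_questions, loading, rng):
    """Hypothetical model: answer = loading * doom + independent noise.

    loading=0 means every question is answered independently of the others.
    Returns one list of answers per question.
    """
    answers = [[] for _ in range(n_questions)]
    for _ in range(n_people):
        doom = rng.gauss(0, 1)  # this person's latent 'general factor of doom'
        for q in range(n_questions):
            answers[q].append(loading * doom + rng.gauss(0, 1))
    return answers


def mean_pairwise_correlation(answers):
    """Average Pearson correlation over every pair of questions."""
    return statistics.fmean(pearson(a, b) for a, b in combinations(answers, 2))


rng = random.Random(0)
general = simulate_answers(2000, 4, loading=1.0, rng=rng)
independent = simulate_answers(2000, 4, loading=0.0, rng=rng)
print(f"general factor: {mean_pairwise_correlation(general):.2f}")
print(f"independent:    {mean_pairwise_correlation(independent):.2f}")
```

With `loading=1.0` and unit-variance noise, the expected correlation between any two answers is 1 / (1 + 1) = 0.5. The first line should therefore print a value near 0.50, and the second a value near 0.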

I wonder whether a similar phenomenon could be happening in AI Alignment research.

We can construct a list of specific questions that are relevant to AI doom:

- How long are the timelines until someone develops AGI?

- How hard of a takeoff will we see after AGI is developed?

- How fragile are good values? Are two similar ethical systems similarly good?

- How hard is it for people to teach a value system to an AI?

- How hard is it to make an AGI corrigible?

- Should we expect simple alignment failures to occur before catastrophic alignment failures?

- How likely is human extinction if we don’t find a solution to the Alignment Problem?

- How hard is it to design a good governance mechanism for AI capabilities research?

- How hard is it to implement and enforce a good governance mechanism for AI capabilities research?

I don’t have any good evidence for this, but my vague impression is that many people’s answers to these questions are correlated.

It would not be too surprising if some pairs of these questions should be correlated. Different people would likely disagree on which ones should be correlated. For example, Paul Christiano seems to think that short timelines and fast takeoff speeds are anti-correlated. Someone else might categorize these questions as ‘AGI is easy’ vs. ‘Aligning things is hard’ and expect correlations within but not between these categories. People might also disagree on whether people and AGI will be similar (so aligning AGI and aligning governance are similarly hard) or very different (so teaching AGI good values is much harder than teaching people good values). With all of these different arguments, it would be surprising if beliefs across all of these questions were correlated. If they were, it would suggest that a general factor of doom is driving people’s beliefs.
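The competing structures in the paragraph above predict different correlation patterns. Another hypothetical simulation can illustrate the difference. Here, as an assumption, four of the questions are assigned to an ‘AGI is easy’ cluster and an ‘Aligning things is hard’ cluster. The `shared_weight` parameter is also made up for illustration and mixes in a common doom factor. At 0 the two clusters are independent, and at 1 a single general factor drives every answer. No real survey data is used.

```python
import random
import statistics
from itertools import combinations


def pearson(xs, ys):
    """Pearson correlation coefficient of two equal-length samples."""
    mx, my = statistics.fmean(xs), statistics.fmean(ys)
    cov = sum((x - mx) * (y - my) for x, y in zip(xs, ys))
    sx = sum((x - mx) ** 2 for x in xs) ** 0.5
    sy = sum((y - my) ** 2 for y in ys) ** 0.5
    return cov / (sx * sy)


# Hypothetical assignment of four questions to two belief clusters.
CLUSTERS = {
    "timelines": "agi_easy",
    "takeoff": "agi_easy",
    "value_fragility": "alignment_hard",
    "corrigibility": "alignment_hard",
}


def simulate(n_people, shared_weight, rng):
    """Each person holds one latent view per cluster plus per-question noise.

    shared_weight=0: the two cluster views are independent.
    shared_weight=1: both views equal a single general doom factor.
    """
    answers = {q: [] for q in CLUSTERS}
    for _ in range(n_people):
        doom = rng.gauss(0, 1)
        views = {
            c: shared_weight * doom + (1 - shared_weight) * rng.gauss(0, 1)
            for c in ("agi_easy", "alignment_hard")
        }
        for q, c in CLUSTERS.items():
            answers[q].append(views[c] + rng.gauss(0, 1))
    return answers


def within_between(answers):
    """Mean correlation for question pairs in the same cluster vs. different clusters."""
    within, between = [], []
    for a, b in combinations(answers, 2):
        r = pearson(answers[a], answers[b])
        (within if CLUSTERS[a] == CLUSTERS[b] else between).append(r)
    return statistics.fmean(within), statistics.fmean(between)


rng = random.Random(1)
for weight in (0.0, 1.0):
    within, between = within_between(simulate(2000, weight, rng))
    print(f"shared_weight={weight}: within={within:.2f}, between={between:.2f}")
```

Under the two-cluster model, the within-cluster correlation stays near 0.5 and the between-cluster correlation drops to about 0. Under a single general factor, the two numbers come out roughly equal. That second pattern, with strong correlations even between questions that no one’s argument links, is what a general factor of doom would predict.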

There are several biases which seem to be related to the general factor of doom. The halo effect (or horns effect) is when a single good (or bad) belief about a person or brand causes someone to believe that the person or brand is good (or bad) in many other ways. The fallacy of mood affiliation is when someone’s response to an argument is based on how the argument affects the mood surrounding the issue, instead of on the argument itself. The general factor of doom is a more specific bias, and feels less like an emotional response: people have detailed arguments describing why the future will be maximally or minimally doomy. The futures described are plausible, but considering how much disagreement there is, it would be surprising if people focused on only a few plausible futures and if those futures made similarly doomy predictions for many specific questions. I’m also reminded of Beware Surprising and Suspicious Convergence, although it focuses more on beliefs that aren’t updated when someone’s worldview changes than on beliefs within a worldview that are surprisingly correlated.

The AI Impacts survey is probably not relevant to determining whether AI safety researchers have a general factor of doom. The survey was of machine learning researchers, not AI safety researchers. I spot-checked a few random pairs of doom-related questions anyway, and they didn’t look correlated. I’m not sure whether to interpret this to mean that respondents are using several detailed models or that they don’t even have a simple model.

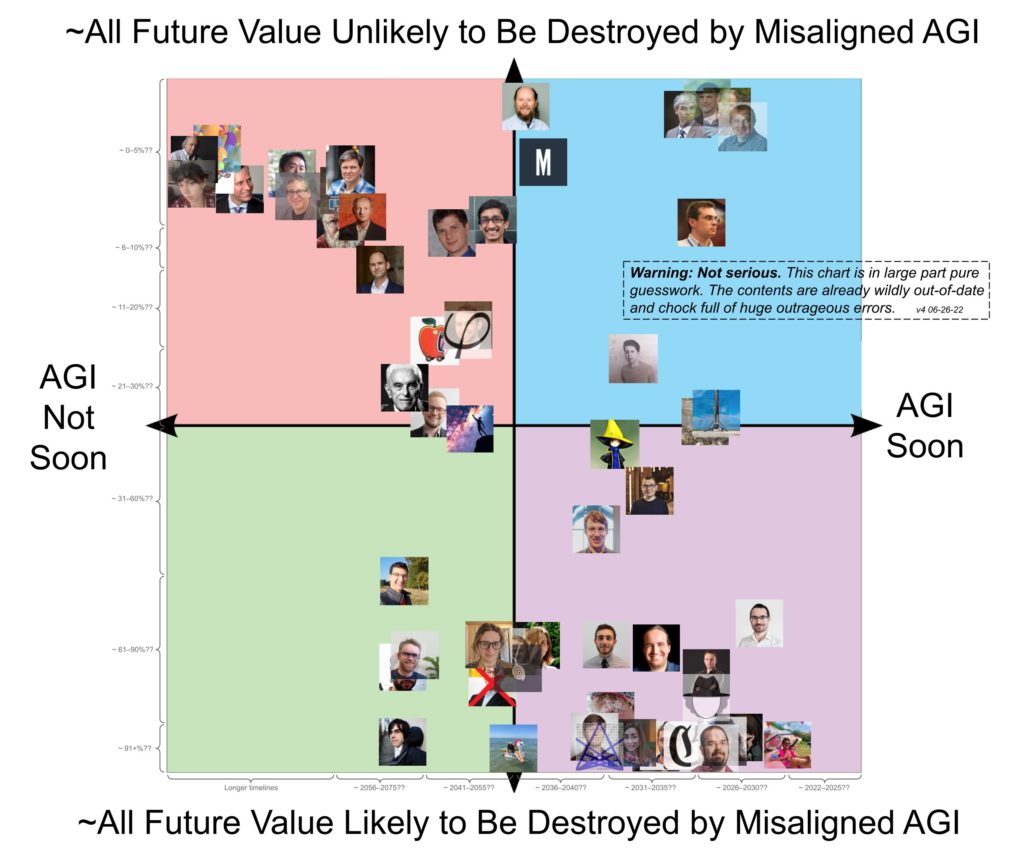

There is also this graph, which claims to be “wildly out-of-date and chock full of giant outrageous errors.” The graph seems to suggest some correlation between two different doom-related questions, and that the distribution is surprisingly bimodal. If we were to take it more seriously than we probably should, we could use it as evidence for a general factor of doom, and as evidence that most people’s p(Doom) is close to 0 or 1. I don’t think this graph is particularly strong evidence even if it is accurate, but it does gesture in the same direction that I am pointing.

It would be interesting to do an actual survey of AI safety researchers, with more than just two questions, to see how closely all of the responses are correlated with each other. It would also be interesting to see whether doominess in one field is correlated with doominess in other fields. I don’t know whether such a survey would show evidence for a general factor of doom among AI safety researchers, but it seems plausible that it would.
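One way to analyze such a survey is to compute the correlation matrix of the responses and measure how much of the total variance its first principal component captures. This method is an assumption and is not proposed in the post. It is also a rough heuristic, not a full factor analysis. For k questions with no shared factor, the share is about 1/k. If every pair of questions has correlation ρ, the largest eigenvalue is 1 + (k − 1)ρ. The sketch below uses power iteration on made-up equicorrelation matrices for the nine questions listed above. It contains no survey results, and the helper names are hypothetical.

```python
def top_eigenvalue(matrix, iterations=500):
    """Largest eigenvalue of a symmetric positive semidefinite matrix via power iteration."""
    k = len(matrix)
    v = [1.0 + 0.1 * i for i in range(k)]  # arbitrary, non-uniform starting vector
    for _ in range(iterations):
        w = [sum(matrix[i][j] * v[j] for j in range(k)) for i in range(k)]
        norm = sum(x * x for x in w) ** 0.5
        v = [x / norm for x in w]  # renormalize to avoid overflow
    # Rayleigh quotient of the converged unit vector gives the eigenvalue.
    mv = [sum(matrix[i][j] * v[j] for j in range(k)) for i in range(k)]
    return sum(a * b for a, b in zip(v, mv))


def first_factor_share(corr):
    """Share of standardized variance on the first principal component (trace of corr = k)."""
    return top_eigenvalue(corr) / len(corr)


def equicorrelation(k, rho):
    """Hypothetical k x k correlation matrix with every off-diagonal entry equal to rho."""
    return [[1.0 if i == j else rho for j in range(k)] for i in range(k)]


k = 9  # one row/column per question in the AI doom list
print(f"no shared factor:     {first_factor_share(equicorrelation(k, 0.0)):.3f}")  # 1/9
print(f"strong shared factor: {first_factor_share(equicorrelation(k, 0.5)):.3f}")  # (1 + 8*0.5)/9
```

With a real survey, `corr` would be the empirical correlation matrix of the responses. A first-component share well above 1/k is consistent with a general factor of doom. Structured disagreements, such as the two clusters described earlier, would instead spread variance over several components.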