AI is appearing in seemingly every corner of modern life, from music and media to business and productivity, even dating. There’s so much that it can be hard to keep up, so read on to find out everything from the latest big developments to the terms and companies you need to know in order to stay current in this fast-moving field.

To start with, let’s just make sure we’re all on the same page: what is AI?

Artificial intelligence, also called machine learning, is a kind of software system based on neural networks, a technique that was actually pioneered decades ago but very recently has blossomed thanks to powerful new computing resources. AI has enabled effective voice and image recognition, as well as the ability to generate synthetic imagery and speech. And researchers are hard at work making it possible for an AI to browse the web, book tickets, tweak recipes, and more.

Oh, but if you’re worried about a Matrix-type rise of the machines, don’t be. We’ll talk about that later!

Our guide to AI has three main parts, each of which we update regularly and can be read in any order:

- First, the most fundamental concepts you need to know as well as more recently important ones.

- Next, an overview of the major players in AI and why they matter.

- And last, a curated list of the recent headlines and developments that you should be aware of.

By the end of this article you’ll be about as up to date as anyone can hope to be these days. We will also be updating and expanding it as we press further into the age of AI.

AI 101

Image Credits: Andrii Shyp / Getty Images

One of the wild things about AI is that although the core concepts date back more than 50 years, few of them were familiar to even the tech-savvy before very recently. So if you feel lost, don’t worry: everyone is.

And one thing we want to make clear up front: although it’s called “artificial intelligence,” that term is a little misleading. There’s no one definition of intelligence out there, but what these systems do is definitely closer to calculators than brains. The input and output of this calculator is just a lot more flexible. You might think of artificial intelligence like artificial coconut: it’s imitation intelligence.

With that said, here are the basic terms you’ll find in any discussion of AI.

Neural network

Our brains are largely made of interconnected cells called neurons, which mesh together to form complex networks that perform tasks and store information. Recreating this amazing system in software has been attempted since the ’60s, but the processing power required wasn’t widely available until 15-20 years ago, when GPUs let digitally defined neural networks flourish. At their heart they’re just lots of dots and lines: the dots are data and the lines are statistical relationships between those values. As in the brain, this can create a versatile system that quickly takes an input, passes it through the network, and produces an output. This system is called a model.
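To make the dots-and-lines picture a little more concrete, here is a minimal sketch in Python of an input passing through a tiny network. Everything in it is an assumption made for illustration: the layer sizes, the hand-picked weights, and the function names are hypothetical, and real networks learn their weights during training and are vastly larger.

```python
import math


def sigmoid(x: float) -> float:
    """Squash any real number into the range (0, 1)."""
    return 1.0 / (1.0 + math.exp(-x))


def layer(inputs, weights, biases):
    """One layer: each output dot is a weighted sum of the input dots, then squashed."""
    return [
        sigmoid(sum(w * x for w, x in zip(row, inputs)) + b)
        for row, b in zip(weights, biases)
    ]


# Hypothetical, hand-picked weights (the "lines"); a real network learns these.
HIDDEN_WEIGHTS = [[0.5, -0.6], [0.3, 0.8], [-0.7, 0.2]]  # 2 inputs -> 3 hidden dots
HIDDEN_BIASES = [0.0, 0.1, -0.1]
OUTPUT_WEIGHTS = [[1.0, -1.0, 0.5]]  # 3 hidden dots -> 1 output
OUTPUT_BIASES = [0.0]


def forward(inputs):
    """Take an input, pass it through the network, and return the output."""
    hidden = layer(inputs, HIDDEN_WEIGHTS, HIDDEN_BIASES)
    return layer(hidden, OUTPUT_WEIGHTS, OUTPUT_BIASES)


result = forward([1.0, 2.0])
print(result)  # a single number between 0 and 1
```

The output here means nothing in particular, since the weights were made up. The point is only the shape of the computation: values flow along weighted connections from input to output.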

Model

The model is the actual collection of code that accepts inputs and returns outputs. The similarity in terminology to a statistical model or a modeling system that simulates a complex natural process is not accidental. In AI, model can refer to a complete system like ChatGPT, or pretty much any AI or machine learning construct, whatever it does or produces. Models come in various sizes, meaning both how much storage space they take up and how much computational power they take to run. And these depend on how the model is trained.

Training

To create an AI model, the neural networks making up the base of the system are exposed to a bunch of information in what’s called a dataset or corpus. In doing so, these giant networks create a statistical representation of that data. This training process is the most computation-intensive part, meaning it takes weeks or months (you can kind of go as long as you want) on huge banks of high-powered computers. The reason for this is that not only are the networks complex, but datasets can be extremely large: billions of words or images that must be analyzed and given representation in the giant statistical model. On the other hand, once the model is done cooking it can be much smaller and less demanding when it’s being used, a process called inference.

Image Credits: Google

Inference

When the model is actually doing its job, we call that inference, very much the traditional sense of the word: stating a conclusion by reasoning about available evidence. Of course it is not exactly “reasoning,” but statistically connecting the dots in the data it has ingested and, in effect, predicting the next dot. For instance, given the prompt “Complete the following sequence: red, orange, yellow…” it would find that these words correspond to the beginning of a list it has ingested, the colors of the rainbow, and infer the next item until it has produced the rest of that list. Inference is generally much less computationally costly than training: think of it like looking through a card catalog rather than assembling it. Big models still have to run on supercomputers and GPUs, but smaller ones can be run on a smartphone or something even simpler.
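The rainbow example can be imitated with a toy that is far cruder than a neural network but follows the same spirit. The sketch below is an assumption-laden illustration, not how any real model works internally: it “trains” by counting which word follows which in a tiny made-up corpus, then “infers” by repeatedly picking the most common next word. The corpus and function names are invented for this example.

```python
from collections import Counter, defaultdict

# Toy stand-in for ingested text (an assumption for illustration only).
CORPUS = [
    "red orange yellow green blue indigo violet",
    "red orange yellow green blue indigo violet",
    "red orange juice",
]


def build_table(lines):
    """The 'training' step: count which word follows which."""
    table = defaultdict(Counter)
    for line in lines:
        words = line.split()
        for current, following in zip(words, words[1:]):
            table[current][following] += 1
    return table


def complete(table, prompt, max_words=10):
    """The 'inference' step: repeatedly append the most likely next word."""
    words = prompt.split()
    for _ in range(max_words):
        candidates = table.get(words[-1])
        if not candidates:
            break  # nothing ever followed this word in the corpus
        words.append(candidates.most_common(1)[0][0])
    return " ".join(words)


table = build_table(CORPUS)
completion = complete(table, "red orange yellow")
print(completion)  # red orange yellow green blue indigo violet
```

Notice that building the table touches every word in the corpus, while completing a prompt only needs a few lookups. That is the card catalog comparison in miniature.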

Generative AI

Everyone is talking about generative AI, and this broad term just means an AI model that produces an original output, like an image or text. Some AIs summarize, some reorganize, some identify, and so on, but an AI that actually generates something (whether or not it “creates” is arguable) is especially popular right now. Just remember that just because an AI generated something, that doesn’t mean it is correct, or even that it reflects reality at all! Only that it didn’t exist before you asked for it, like a story or painting.

Today’s top terms

Beyond the basics, here are the AI terms that are most relevant here in mid-2023.

Large language model

The most influential and versatile form of AI available today, large language models are trained on pretty much all the text making up the web and much of English literature. Ingesting all this results in a foundation model (read on) of enormous size. LLMs are able to converse and answer questions in natural language and imitate a variety of styles and types of written documents, as demonstrated by the likes of ChatGPT, Claude, and LLaMa. While these models are undeniably impressive, it must be kept in mind that they are still pattern recognition engines, and when they answer it is an attempt to complete a pattern they have identified, whether or not that pattern reflects reality. LLMs frequently hallucinate in their answers, which we will come to shortly.

If you want to learn more about LLMs and ChatGPT, we have a whole separate article on those!

Foundation model

Training a huge model from scratch on huge datasets is costly and complex, and so you don’t want to have to do it any more than you have to. Foundation models are the big from-scratch ones that need supercomputers to run, but they can be trimmed down to fit in smaller containers, usually by reducing the number of parameters. You can think of those as the total dots the model has to work with, and these days it can be in the millions, billions, or even trillions.
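For a rough feel of how parameter counts add up, here is a small arithmetic sketch. It assumes a plain fully connected network, where every unit in one layer connects to every unit in the next; the layer sizes are hypothetical, and real foundation models use other architectures with far larger counts.

```python
def count_parameters(layer_sizes):
    """Count weights and biases in a fully connected network.

    layer_sizes lists the number of units per layer, input layer first.
    """
    total = 0
    for n_in, n_out in zip(layer_sizes, layer_sizes[1:]):
        total += n_in * n_out  # one weight per connection
        total += n_out         # one bias per output unit
    return total


# Hypothetical layer sizes, chosen only to show the effect of trimming.
full = count_parameters([784, 512, 512, 10])
trimmed = count_parameters([784, 128, 128, 10])
print(full, trimmed)  # 669706 118282
```

Shrinking the two middle layers to a quarter of their width cuts the parameter count by more than five times in this toy case, which is why reducing parameters is such an effective way to make a model fit on smaller hardware.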

Fine tuning

A foundation model like GPT-4 is smart, but it’s also a generalist by design: it absorbed everything from Dickens to Wittgenstein to the rules of Dungeons & Dragons, but none of that is helpful if you want it to help you write a cover letter for your resumé. Fortunately, models can be fine tuned by giving them a bit of extra training using a specialized dataset, for instance a few thousand job applications that happen to be laying around. This gives the model a much better sense of how to help you in that domain without throwing away the general knowledge it has collected from the rest of its training data.

Reinforcement learning from human feedback, or RLHF, is a special kind of fine tuning you’ll hear about a lot: it uses data from humans interacting with the LLM to improve its communication skills.

Diffusion

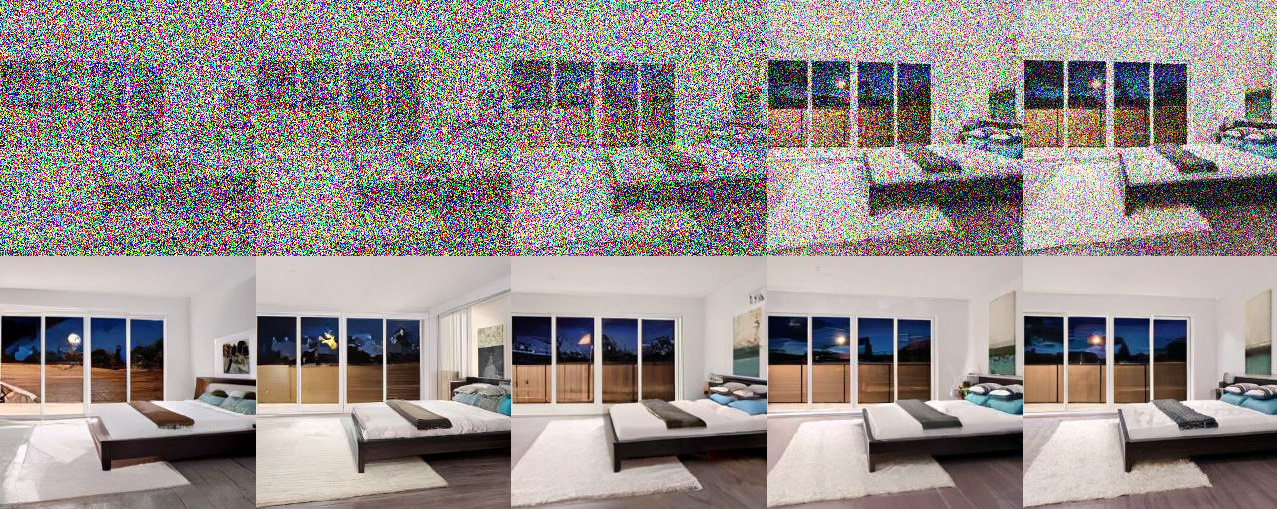

From a paper on an advanced post-diffusion technique, you can see how an image can be reproduced from even very noisy data.

Image generation can be done in numerous ways, but by far the most successful as of today is diffusion, which is the technique at the heart of Stable Diffusion, Midjourney, and other popular generative AIs. Diffusion models are trained by showing them images that are gradually degraded by adding digital noise until there is nothing left of the original. By observing this, diffusion models learn to do the process in reverse as well, gradually adding detail to pure noise in order to form an arbitrarily defined image. We’re already starting to move beyond this for images, but the technique is reliable and relatively well understood, so don’t expect it to disappear any time soon.
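The degrading half of that process is simple enough to sketch. The Python below is a simplified illustration under stated assumptions: the “image” is a single row of five brightness values, the noise schedule is a straight line chosen for simplicity, and the function names are made up. It covers only the forward, noise-adding direction; the hard part, training a neural network to undo each step, is not shown.

```python
import random


def add_noise(pixels, keep, rng):
    """Blend each pixel with Gaussian noise.

    keep=1.0 leaves the image intact; keep=0.0 replaces it with pure noise.
    """
    noise_scale = (1.0 - keep * keep) ** 0.5
    return [keep * p + noise_scale * rng.gauss(0.0, 1.0) for p in pixels]


def degrade(pixels, steps, seed=0):
    """Return progressively noisier copies of the image, ending in pure noise."""
    rng = random.Random(seed)
    frames = []
    for step in range(steps + 1):
        keep = 1.0 - step / steps  # linear schedule, chosen for simplicity
        frames.append(add_noise(pixels, keep, rng))
    return frames


image = [0.0, 0.5, 1.0, 0.5, 0.0]  # a tiny one-row "image"
frames = degrade(image, steps=4)
for frame in frames:
    print([round(v, 2) for v in frame])
```

The first frame printed is the untouched image and the last contains no trace of it. A diffusion model sees many such sequences during training and learns to predict the cleaner frame from the noisier one.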

Hallucination

Originally this was a problem of certain imagery in training slipping into unrelated output, such as buildings that seemed to be made of dogs due to an over-prevalence of dogs in the training set. Now an AI is said to be hallucinating when, because it has insufficient or conflicting data in its training set, it just makes something up.

This can be either an asset or a liability; an AI asked to create original or even derivative art is hallucinating its output; an LLM can be told to write a love poem in the style of Yogi Berra, and it will happily do so, despite such a thing not existing anywhere in its dataset. But it can be an issue when a factual answer is desired; models will confidently present a response that is half real, half hallucination. At present there is no easy way to tell which is which except checking for yourself, because the model itself doesn’t actually know what is “true” or “false,” it is only trying to complete a pattern as best it can.

AGI or strong AI

Artificial General Intelligence, or strong AI, is not really a well-defined concept, but the simplest explanation is that it is an intelligence that is powerful enough not just to do what people do, but learn and improve itself like we do. Some worry that this cycle of learning, integrating those ideas, and then learning and growing faster will be a self-perpetuating one that results in a super-intelligent system that is impossible to restrain or control. Some have even proposed delaying or limiting research to prevent this possibility.

It’s a scary idea, sure, and movies like The Matrix and Terminator have explored what might happen if AI spirals out of control and attempts to eliminate or enslave humanity. But these stories are not grounded in reality. The appearance of intelligence we see in things like ChatGPT is an impressive act, but has little in common with the abstract reasoning and dynamic multi-domain activity that we associate with “real” intelligence. While it’s near-impossible to predict how things will progress, it may be helpful to think of AGI as something like interstellar space travel: we all understand the concept and are seemingly working toward it, but at the same time we’re incredibly far from achieving anything like it. And due to the immense resources and fundamental scientific advances required, no one is going to just suddenly accomplish it by accident!

AGI is interesting to think about, but there’s no sense borrowing trouble when, as commentators point out, AI is already presenting real and consequential threats today despite, and in fact largely due to, its limitations. No one wants Skynet, but you don’t need a superintelligence armed with nukes to cause real harm: people are losing jobs and falling for hoaxes today. If we can’t solve those problems, what chance do we have against a T-1000?

Top players in AI

OpenAI

Image Credits: Leon Neal / Getty Images

If there’s a household name in AI, it’s this one. OpenAI began as its name suggests, an organization intending to perform research and provide the results more or less openly. It has since restructured as a more traditional for-profit company providing access to its advanced language models like ChatGPT through APIs and apps. It’s headed by Sam Altman, a technotopian billionaire who nonetheless has warned of the risks AI could present. OpenAI is the acknowledged leader in LLMs but also performs research in other areas.

Microsoft

As you might expect, Microsoft has done its fair share of work in AI research, but like other companies has more or less failed to turn its experiments into major products. Its smartest move was to invest early in OpenAI, which scored it an exclusive long-term partnership with the company, which now powers its Bing conversational agent. Though its own contributions are smaller and less immediately applicable, the company does have a considerable research presence.

Google

Known for its moonshots, Google somehow missed the boat on AI despite its researchers literally inventing the technique that led directly to today’s AI explosion: the transformer. Now it’s working hard on its own LLMs and other agents, but is clearly playing catch-up after spending most of its time and money over the last decade boosting the outdated “virtual assistant” concept of AI. CEO Sundar Pichai has repeatedly said that the company is aligning itself firmly behind AI in search and productivity.

Anthropic

After OpenAI pivoted away from openness, siblings Dario and Daniela Amodei left it to start Anthropic, intended to fill the role of an open and ethically considerate AI research organization. With the amount of cash they have on hand, they’re a serious rival to OpenAI even if their models, like Claude, aren’t as popular or well-known yet.

Stability

Image Credits: Bryce Durbin / TechCrunch

Controversial but inevitable, Stability represents the “do what thou wilt” open source school of AI implementation, hoovering up everything on the internet and making the generative AI models it trains freely available if you have the hardware to run them. This is very in line with the “information wants to be free” philosophy but has also accelerated ethically dubious projects like generating pornographic imagery and using intellectual property without consent (sometimes at the same time).

Elon Musk

Not one to be left out, Musk has been outspoken about his fears regarding out-of-control AI, as well as some sour grapes after he contributed to OpenAI early on and it went in a direction he didn’t like. While Musk is not an expert on this topic, as usual his antics and commentary do provoke widespread responses (he was a signatory on the above-mentioned “AI pause” letter) and he is attempting to start a research outfit of his own.

Latest stories in AI

Nvidia becomes a trillion-dollar company

GPU maker Nvidia was doing fine selling to gamers and cryptocurrency miners, but the AI industry put demand for its hardware into overdrive. The company has cleverly capitalized on this and the other day broke the symbolic (but intensely so) trillion-dollar market cap when its stock hit $413. They show no sign of slowing down, as they showed recently at Computex…

At Computex, Nvidia redoubles commitment to AI

Among a dozen or two announcements at Computex in Taipei, Nvidia CEO Jensen Huang talked up the company’s Grace Hopper superchip for accelerated computing (their terminology) and demoed generative AI that it claimed could turn anyone into a developer.

OpenAI’s Sam Altman lobbies the world on AI’s behalf

Altman was recently advising the U.S. government on AI policy, though some saw this as letting the fox set the rules of the henhouse. The E.U.’s various rulemaking bodies are also looking for input and Altman has been doing a grand tour, warning simultaneously against excessive regulation and the dangers of unfettered AI. If these views seem opposed to you… don’t worry, you’re not the only one.

Anthropic raises $450 million for its new generation of AI models

We kind of spoiled this news for them when we published details of this fundraise and plan ahead of them, but Anthropic is now officially $450 million richer and hard at work on the successor to Claude and its other models. It’s clear the AI market is big enough that there’s room at the top for a few major providers, if they have the capital to get there.

TikTok is testing its own in-app AI called Tako

Video social networking platform TikTok is testing a new conversational AI that you can ask about whatever you want, including what you’re watching. The idea is instead of just searching for more “husky howling” videos, you could ask Tako “why do huskies howl so much?” and it will give a useful answer as well as point you towards more content to watch.

Microsoft is baking ChatGPT into Windows 11

After investing hundreds of millions into OpenAI, Microsoft is determined to get its money’s worth. It has already integrated GPT-4 into its Bing search platform, but now that Bing chat experience will be available (indeed, probably unavoidable) on every Windows 11 machine via a right-side bar across the OS.

Google adds a sprinkle of AI to just about everything it does

Google is playing catch-up in the AI world, and although it is dedicating considerable resources to doing so, its strategy is still a little murky. Case in point: its I/O 2023 event was full of experimental features that may or may not ever make it to a broad audience. But they’re definitely doing a full court press to get back in the game.