AI emotion recognition is a very active field of current computer vision research. It involves facial emotion detection and the automatic analysis of sentiment from visual data and text. Human-machine interaction is an important area of research in which machine learning algorithms with visual perception aim to gain an understanding of human interaction.

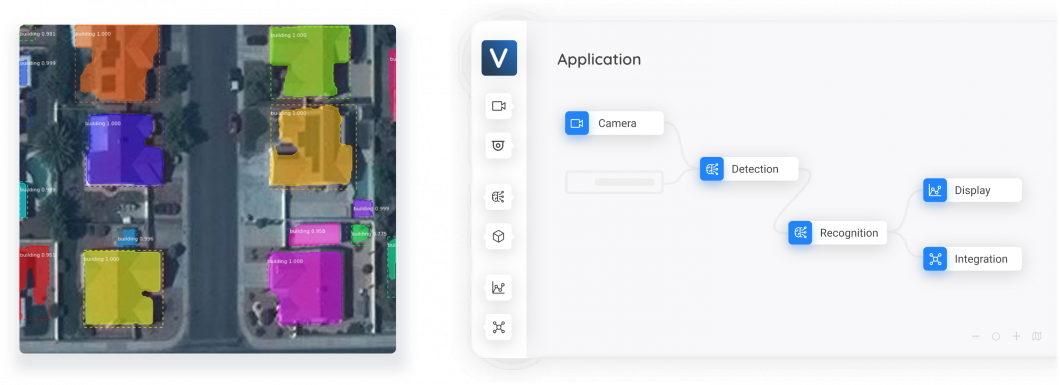

About us: Viso.ai provides the end-to-end computer vision platform Viso Suite. This solution enables leading companies to build, deploy, and scale their AI vision applications, including AI emotion analysis. Get a personalized demo for your team.

We provide an overview of Emotion AI technology, trends, examples, and applications:

- What Is Emotion AI?

- How Does Visual AI Emotion Recognition Work?

- Facial Emotion Recognition Datasets

- What Emotions Can AI Detect?

- State-of-the-Art Emotion AI Algorithms

- Outlook, Current Research, and Applications

What Is AI Emotion Recognition?

What’s Emotion AI?

Emotion AI, also called Affective Computing, is a rapidly growing branch of Artificial Intelligence. It allows computers to analyze and understand human language and nonverbal signals such as facial expressions, body language, gestures, and tone of voice in order to assess a person’s emotional state. Visual Emotion AI in particular uses computer vision and deep neural networks to analyze facial appearance in images and videos and infer an individual’s emotional state.

Visual AI Emotion Recognition

Emotion recognition is the task of having machines analyze, interpret, and classify human emotion through the analysis of facial features.

Among all high-level vision tasks, Visual Emotion Analysis (VEA) is one of the most challenging. The difficulty comes from the affective gap between low-level pixels and high-level emotions. Despite this difficulty, visual emotion analysis remains promising, because understanding human emotions is a crucial step towards strong artificial intelligence. With the rapid development of Convolutional Neural Networks (CNNs), deep learning has become the method of choice for emotion analysis tasks.

How AI Emotion Recognition and Analysis Works

At a high level, an AI emotion application or vision system consists of the following steps:

- Step #1: Acquire the image frame from a camera feed (IP, CCTV, or USB camera).

- Step #2: Preprocess the image (cropping, resizing, rotating, color correction).

- Step #3: Extract the important features with a CNN model.

- Step #4: Perform emotion classification.
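The four steps above can be sketched as a chain of functions. This is a minimal illustration, not Viso Suite’s implementation. The function names, the seven-class label list, and the stand-in lambdas in the usage lines are hypothetical. A real system would plug in a camera reader, a face detector, and a trained CNN at each step.

```python
from typing import Callable, List, Sequence

# A toy grayscale frame: a 2D list of pixel intensities (hypothetical representation).
Frame = List[List[float]]

# Hypothetical class list, matching the seven emotions discussed in this article.
EMOTIONS = ["anger", "disgust", "fear", "happiness", "sadness", "surprise", "neutral"]


def run_pipeline(
    acquire: Callable[[], Frame],
    preprocess: Callable[[Frame], Frame],
    extract_features: Callable[[Frame], Sequence[float]],
    classify: Callable[[Sequence[float]], List[float]],
) -> str:
    """Chain the four steps and return the label with the highest score."""
    frame = acquire()                      # Step 1: grab a frame from the camera feed
    prepared = preprocess(frame)           # Step 2: crop, resize, normalize
    features = extract_features(prepared)  # Step 3: e.g. a CNN embedding
    scores = classify(features)            # Step 4: one score per emotion class
    best = max(range(len(scores)), key=lambda i: scores[i])
    return EMOTIONS[best]


# Usage with dummy stand-ins for each step (all hypothetical).
label = run_pipeline(
    acquire=lambda: [[10, 20], [30, 40]],
    preprocess=lambda f: [[p / 255 for p in row] for row in f],
    extract_features=lambda f: [sum(map(sum, f))],
    classify=lambda feats: [0.0, 0.0, 0.0, feats[0], 0.0, 0.0, 0.1],
)
print(label)  # happiness
```

Passing each step in as a function keeps the pipeline independent of any particular camera API or model framework.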

AI emotion recognition is based on three sequential steps:

1. Face Detection in Images and Video Frames

In the first step, the camera video is used to detect and localize the human face. Bounding box coordinates indicate the exact face location in real time. Face detection is still challenging, and there is no guarantee that all faces in a given input image will be detected. This is especially true in uncontrolled environments with difficult lighting conditions, different head poses, large distances, or occlusion.
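Face detectors typically return one bounding box per face. The sketch below assumes each box is given as (x, y, width, height) in pixel coordinates, which is a common convention but is assumed here. It shows how to clip a box that extends past the frame before cropping the face. The detector itself is out of scope, and the frame is a toy grayscale image stored as nested lists.

```python
from typing import List, Tuple

Frame = List[List[int]]
BBox = Tuple[int, int, int, int]  # assumed convention: (x, y, width, height) in pixels


def clamp_bbox(bbox: BBox, img_w: int, img_h: int) -> BBox:
    """Clip a detector's box so that it lies fully inside the image."""
    x, y, w, h = bbox
    x0, y0 = max(0, x), max(0, y)
    x1, y1 = min(img_w, x + w), min(img_h, y + h)
    return x0, y0, max(0, x1 - x0), max(0, y1 - y0)


def crop_face(frame: Frame, bbox: BBox) -> Frame:
    """Return the pixels inside the (clamped) bounding box."""
    img_h, img_w = len(frame), len(frame[0])
    x, y, w, h = clamp_bbox(bbox, img_w, img_h)
    return [row[x:x + w] for row in frame[y:y + h]]


frame = [[r * 10 + c for c in range(6)] for r in range(4)]  # 4x6 dummy image
face = crop_face(frame, (4, 2, 5, 5))  # box extends past the right and bottom edges
print(face)  # [[24, 25], [34, 35]]
```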

2. Image Preprocessing

Once the faces are detected, the image data is optimized before it is fed into the emotion classifier. This step greatly improves detection accuracy. Image preprocessing usually consists of several substeps:

- normalizing the image for illumination changes
- reducing noise and smoothing the image
- correcting image rotation
- resizing and cropping the image
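Two of the preprocessing substeps, illumination normalization and resizing, can be sketched in plain Python. Min-max scaling and nearest-neighbour resizing are simple stand-ins chosen for illustration. Production pipelines usually rely on an image library and may use histogram equalization or interpolating resizers instead. Classifiers expect a fixed input size (FER-2013 images, for example, are 48×48 grayscale). The 4×4 target here only keeps the example small.

```python
from typing import List

Image = List[List[float]]


def min_max_normalize(img: Image) -> Image:
    """Stretch intensities to [0, 1] to reduce global illumination differences."""
    lo = min(min(row) for row in img)
    hi = max(max(row) for row in img)
    if hi == lo:  # flat image: avoid division by zero
        return [[0.0 for _ in row] for row in img]
    return [[(p - lo) / (hi - lo) for p in row] for row in img]


def resize_nearest(img: Image, out_h: int, out_w: int) -> Image:
    """Nearest-neighbour resize to the classifier's fixed input size."""
    in_h, in_w = len(img), len(img[0])
    return [
        [img[r * in_h // out_h][c * in_w // out_w] for c in range(out_w)]
        for r in range(out_h)
    ]


face = [[50, 100], [150, 250]]  # toy 2x2 face crop
prepared = resize_nearest(min_max_normalize(face), 4, 4)
print(prepared[0])  # [0.0, 0.0, 0.25, 0.25]
```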

3. Emotion Classification AI Model

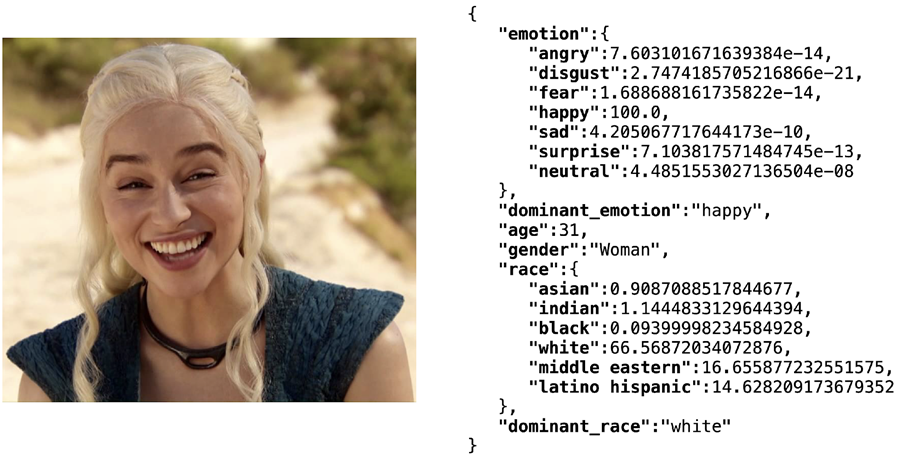

After preprocessing, the relevant features are extracted from the preprocessed data containing the detected faces. There are different methods to detect facial features. Examples include Action Units (AU), the motion of facial landmarks, distances between facial landmarks, gradient features, and facial texture.
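Distances between facial landmarks are one of the feature types mentioned above. The sketch below rests on two assumptions. First, the landmark coordinates come from some upstream landmark detector. Second, dividing by the distance between the two eye landmarks is an acceptable way to make the features independent of face size. The landmark indices used here are hypothetical.

```python
import math
from itertools import combinations
from typing import List, Tuple

Point = Tuple[float, float]


def landmark_distance_features(landmarks: List[Point], left_eye: int, right_eye: int) -> List[float]:
    """Pairwise Euclidean distances between landmarks, divided by the
    inter-ocular distance so the features do not depend on face size."""
    scale = math.dist(landmarks[left_eye], landmarks[right_eye])
    return [math.dist(a, b) / scale for a, b in combinations(landmarks, 2)]


# Toy landmarks: left eye, right eye, and one mouth point (hypothetical layout).
features = landmark_distance_features([(0, 0), (4, 0), (4, 3)], left_eye=0, right_eye=1)
print(features)  # [1.0, 1.25, 0.75]
```

The same face at twice the size yields the same feature vector, which is the point of the normalization.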

The classifiers used for AI emotion recognition are generally based on Support Vector Machines (SVM) or Convolutional Neural Networks (CNN). Finally, the classifier labels the detected face according to its facial expression by assigning a predefined class (label) such as “happy” or “neutral.”
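In a CNN classifier, the final layer typically outputs one raw score (logit) per class. A softmax turns these scores into probabilities, and the predicted label is the class with the highest probability. The sketch assumes the seven emotion classes listed in this article and uses made-up logits.

```python
import math
from typing import List, Tuple

EMOTIONS = ["anger", "disgust", "fear", "happiness", "sadness", "surprise", "neutral"]


def softmax(scores: List[float]) -> List[float]:
    """Convert raw class scores (logits) into probabilities that sum to 1."""
    m = max(scores)  # subtracting the max avoids overflow in exp()
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def predict_emotion(logits: List[float]) -> Tuple[str, float]:
    """Return the most probable emotion label and its probability."""
    probs = softmax(logits)
    best = max(range(len(probs)), key=lambda i: probs[i])
    return EMOTIONS[best], probs[best]


logits = [0.1, -1.0, 0.0, 2.5, 0.3, 0.2, 1.0]  # made-up scores for one face
label, confidence = predict_emotion(logits)
print(label)  # happiness
```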

Facial Emotion Recognition Datasets

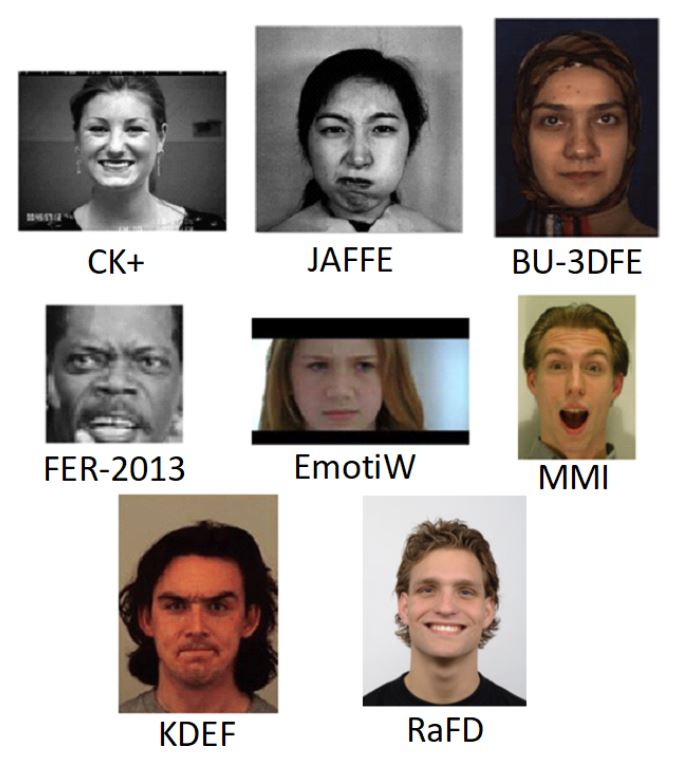

Most emotion image databases consist of 2D static images or 2D video sequences, and some contain 3D images. Most 2D databases contain only frontal faces. As a result, algorithms trained only on these databases perform poorly on other head poses.

The most important databases for visual emotion recognition include:

- Extended Cohn–Kanade database (CK+): 593 videos, Posed Emotion, Controlled Environment

- Japanese Female Facial Expression Database (JAFFE): 213 images, Posed Emotion, Controlled Environment

- Binghamton University 3D Facial Expression database (BU-3DFE): 606 videos, Posed and Spontaneous Emotion, Controlled Environment

- Facial Expression Recognition 2013 database (FER-2013): 35,887 images, Posed and Spontaneous Emotion, Uncontrolled Environment

- Emotion Recognition in the Wild database (EmotiW): 1,268 videos and 700 images, Spontaneous Emotion, Uncontrolled Environment

- MMI database: 2,900 videos, Posed Emotion, Controlled Environment

- eNTERFACE’05 Audiovisual Emotion database: 1,166 videos, Spontaneous Emotion, Controlled Environment

- Karolinska Directed Emotional Faces database (KDEF): 4,900 images, Posed Emotion, Controlled Environment

- Radboud Faces Database (RaFD): 8,040 images, Posed Emotion, Controlled Environment

What Emotions Can AI Detect?

The emotions or sentiment expressions an AI model can detect depend on the classes it was trained on. Most emotion or sentiment databases are labeled with the following emotions:

- Emotion #1: Anger

- Emotion #2: Disgust

- Emotion #3: Fear

- Emotion #4: Happiness

- Emotion #5: Sadness

- Emotion #6: Surprise

- Emotion #7: Neutral expression
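To train a classifier, these class names are usually mapped to integer IDs and one-hot target vectors. The sketch below uses the order of the list above. This order matches FER-2013’s 0 to 6 label encoding (0 = anger, 6 = neutral). Other datasets may order or name their classes differently.

```python
from typing import Dict, List

# Class order follows the list above (the same order as FER-2013's label IDs).
EMOTIONS = ["anger", "disgust", "fear", "happiness", "sadness", "surprise", "neutral"]
LABEL_TO_ID: Dict[str, int] = {name: i for i, name in enumerate(EMOTIONS)}


def one_hot(label: str) -> List[int]:
    """Encode an emotion label as a one-hot target vector for training."""
    vec = [0] * len(EMOTIONS)
    vec[LABEL_TO_ID[label]] = 1
    return vec


print(LABEL_TO_ID["surprise"])  # 5
print(one_hot("fear"))          # [0, 0, 1, 0, 0, 0, 0]
```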

State of the Art in AI Emotion Recognition and Analysis Technology

Interest in facial emotion recognition is growing steadily, and new algorithms and methods are continually being introduced. Recent advances in supervised and unsupervised machine learning have brought breakthroughs to the field, and increasingly accurate systems emerge every year. Even so, despite considerable progress, emotion detection remains a major challenge.

Before 2014 – Traditional Computer Vision

Several methods have been applied to this challenging yet important problem. Early traditional methods relied on manually designed, hand-crafted features inspired by psychological and neurological theories. These features included color, texture, composition, emphasis, balance, and more.
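A normalized intensity histogram is one example of a hand-crafted feature. It summarizes the tonal distribution of an image as a fixed-length vector. This is a simplified grayscale stand-in for the color features mentioned above, not the feature set of any specific early method. The bin count is an arbitrary choice.

```python
from typing import List


def intensity_histogram(img: List[List[int]], bins: int = 4) -> List[float]:
    """Hand-crafted global feature: normalized histogram of 8-bit intensities."""
    counts = [0] * bins
    for row in img:
        for p in row:
            # Map 0..255 onto bin indices 0..bins-1.
            counts[min(p * bins // 256, bins - 1)] += 1
    total = sum(counts)
    return [c / total for c in counts]


print(intensity_histogram([[0, 64], [128, 255]]))  # [0.25, 0.25, 0.25, 0.25]
```

For color images, the same histogram would typically be computed per channel and concatenated.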

These early attempts focused on a limited set of specific features. They did not cover all important emotional factors and did not achieve satisfactory results on large-scale datasets. Unsurprisingly, modern deep learning methods outperform traditional computer vision methods.

After 2014 – Deep Learning Methods for AI Emotion Analysis

Deep learning algorithms are based on neural network models in which connected layers of artificial neurons process data, loosely inspired by the human brain. Deep neural networks stack multiple hidden layers, and each layer builds on the representations computed by the previous one. In this way, the network learns a hierarchy of features. Convolutional neural networks (CNNs) are the most popular form of artificial neural network for image processing tasks.
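The core operation of a CNN layer is a small kernel slid across the image, followed by a non-linearity such as ReLU. The pure-Python sketch below shows a single "valid" convolution with a hand-picked edge kernel. In a real CNN, the kernel weights are learned, many kernels run in parallel, and frameworks implement the operation far more efficiently.

```python
from typing import List

Matrix = List[List[float]]


def conv2d_valid(img: Matrix, kernel: Matrix) -> Matrix:
    """'Valid' 2D cross-correlation, which deep learning frameworks call convolution."""
    kh, kw = len(kernel), len(kernel[0])
    out_h, out_w = len(img) - kh + 1, len(img[0]) - kw + 1
    return [
        [
            sum(img[r + i][c + j] * kernel[i][j] for i in range(kh) for j in range(kw))
            for c in range(out_w)
        ]
        for r in range(out_h)
    ]


def relu(x: Matrix) -> Matrix:
    """Element-wise ReLU: negative activations become 0."""
    return [[max(0.0, v) for v in row] for row in x]


# A vertical-edge kernel responds where intensity rises from left to right.
img = [[0, 0, 1, 1] for _ in range(3)]
edge = [[-1.0, 1.0], [-1.0, 1.0]]
feature_map = relu(conv2d_valid(img, edge))
print(feature_map)  # [[0.0, 2.0, 0.0], [0.0, 2.0, 0.0]]
```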

CNNs achieve good overall results in AI emotion recognition tasks. Widely used CNN backbones for emotion recognition include AlexNet, VGG-16, and ResNet-50. These backbones are initialized with parameters pre-trained on ImageNet and then fine-tuned on the FI dataset.

Since 2020 – Specialized Neural Networks for Visual Emotion Analysis

Most methods are based on convolutional neural networks that learn sentiment representations from entire images. However, different image regions and image contexts can affect the evoked sentiment differently.

Researchers have therefore developed specialized neural networks for visual emotion analysis built on CNN backbones, notably MldrNet and WSCNet.

WSCNet, short for “Weakly Supervised Coupled Convolutional Network”, was developed in mid-2020. The method automatically selects relevant soft proposals given only weak annotations such as global image labels. In the classification branch, the model couples a sentiment-specific soft map with the deep features to form a semantic vector. WSCNet outperforms previous state-of-the-art results on various benchmark datasets.
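The coupling idea can be illustrated with a simplified sketch. Each feature map is weighted by the sentiment map, the original and weighted maps are both pooled, and the results are concatenated into one vector for the classifier. This is a loose reading of the description above, not the published WSCNet architecture. The function names are hypothetical, and in WSCNet the sentiment map is learned rather than given.

```python
from typing import List

Matrix = List[List[float]]


def global_avg_pool(fmap: Matrix) -> float:
    """Average all activations of one feature map into a single number."""
    return sum(map(sum, fmap)) / (len(fmap) * len(fmap[0]))


def couple_features(feature_maps: List[Matrix], sentiment_map: Matrix) -> List[float]:
    """Simplified coupling (hypothetical): pool each channel globally, pool it
    again after weighting it by the sentiment map, and concatenate both vectors."""
    global_vec = [global_avg_pool(f) for f in feature_maps]
    local_vec = [
        global_avg_pool([[v * w for v, w in zip(frow, mrow)] for frow, mrow in zip(f, sentiment_map)])
        for f in feature_maps
    ]
    return global_vec + local_vec


channels = [
    [[4.0, 0.0], [0.0, 0.0]],  # activates in the top-left region
    [[0.0, 0.0], [0.0, 4.0]],  # activates in the bottom-right region
]
sentiment_map = [[1.0, 0.0], [0.0, 0.0]]  # toy map emphasizing the top-left region
vec = couple_features(channels, sentiment_map)
print(vec)  # [1.0, 1.0, 1.0, 0.0]
```

The global half of the vector treats both channels equally. The coupled half keeps only the channel that fires where the sentiment map points.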

Comparison of State-of-the-Art Methods for AI Emotion Analysis

Accuracy on controlled-environment databases often differs greatly from accuracy on in-the-wild databases. As a result, good results in controlled environments (CK+, JAFFE, etc.) are difficult to transfer to uncontrolled environments (SFEW, FER-2013, etc.). For example, a model that obtains 98.9% accuracy on the CK+ database achieves only 55.27% on the SFEW database. This is mainly due to head pose variation and lighting conditions in real-world scenarios.

The classification accuracy of different emotion analysis methods can be compared and benchmarked on a large-scale dataset such as FI, which was built from over 3 million weakly labeled images:

- Algorithm #1: SentiBank (Hand-crafted), 49.23%

- Algorithm #2: Zhao et al. (Hand-crafted), 49.13%

- Algorithm #3: AlexNet (CNN, fine-tuned), 59.85%

- Algorithm #4: VGG-16 (CNN, fine-tuned), 65.52%

- Algorithm #5: ResNet-50 (CNN, fine-tuned), 67.53%

- Algorithm #6: MldrNet, 65.23%

- Algorithm #7: WILDCAT, 67.03%

- Algorithm #8: WSCNet, 70.07%
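The gaps between these results can be computed directly. The snippet below copies the accuracies from the list. It reports how many percentage points WSCNet gains over the strongest fine-tuned backbone (ResNet-50) and over the best hand-crafted method.

```python
# Accuracies (%) on FI, copied from the list above.
results = {
    "SentiBank": 49.23,
    "Zhao et al.": 49.13,
    "AlexNet": 59.85,
    "VGG-16": 65.52,
    "ResNet-50": 67.53,
    "MldrNet": 65.23,
    "WILDCAT": 67.03,
    "WSCNet": 70.07,
}

ranking = sorted(results.items(), key=lambda kv: kv[1], reverse=True)
best_name, best_acc = ranking[0]
best_handcrafted = max(results["SentiBank"], results["Zhao et al."])

print(best_name)                                  # WSCNet
print(round(best_acc - results["ResNet-50"], 2))  # 2.54
print(round(best_acc - best_handcrafted, 2))      # 20.84
```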

AI Emotion Recognition on Edge Devices

Deploying emotion recognition models on resource-constrained edge devices is a major challenge, mainly because of their computational cost. Edge AI moves machine learning onto edge devices. These devices produce large amounts of data that cannot be processed efficiently with server-based solutions.

Highly optimized models make it possible to run AI emotion analysis on various types of edge devices, especially edge accelerators (such as an Nvidia Jetson device) and even smartphones. Real-time inference with scalable Edge Intelligence is feasible but challenging, and several factors shape the trade-offs involved:

- Pre-training on different emotion recognition datasets can improve performance at no additional cost after deployment.

- Dimensionality reduction trades off performance against computational requirements.

- Model shrinking with pruning and model compression techniques are promising solutions. Even so, deploying trained models on embedded systems remains a challenging task. Large pre-trained models cannot be deployed and customized because of their high computational requirements and large model size.
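Two of the compression techniques mentioned above, magnitude pruning and 8-bit quantization, can be sketched on a flat list of weights. The sketch makes simplifying assumptions: a single weight tensor and symmetric per-tensor quantization. Edge toolchains such as TensorFlow Lite or TensorRT provide production implementations with their own APIs.

```python
from typing import List, Tuple


def magnitude_prune(weights: List[float], sparsity: float) -> List[float]:
    """Zero out the given fraction of weights with the smallest magnitude."""
    k = int(len(weights) * sparsity)  # number of weights to remove
    order = sorted(range(len(weights)), key=lambda i: abs(weights[i]))
    pruned = list(weights)
    for i in order[:k]:
        pruned[i] = 0.0
    return pruned


def quantize_int8(weights: List[float]) -> Tuple[List[int], float]:
    """Symmetric per-tensor int8 quantization: w is approximated by q * scale, q in [-127, 127]."""
    max_abs = max(abs(w) for w in weights)
    scale = max_abs / 127 if max_abs > 0 else 1.0
    q = [max(-127, min(127, round(w / scale))) for w in weights]
    return q, scale


w = [0.64, -0.02, 0.01, -1.27, 0.3, 0.05]  # toy weights
pruned = magnitude_prune(w, sparsity=0.5)
print(pruned)  # [0.64, 0.0, 0.0, -1.27, 0.3, 0.0]
q, scale = quantize_int8(pruned)
print(q)       # [64, 0, 0, -127, 30, 0]
```

Pruning shrinks the model only if the runtime exploits sparsity. Quantization reduces storage per weight from 32 bits to 8 bits at the cost of a small rounding error.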

Decentralized, edge-based sentiment analysis and emotion recognition enable private data processing, because visual data does not need to be offloaded. However, privacy concerns still arise when emotion analysis is used for user profiling.

Outlook and Current Research in Emotion Recognition

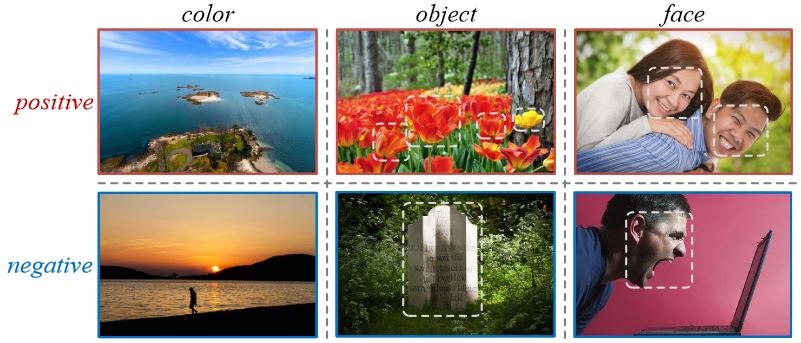

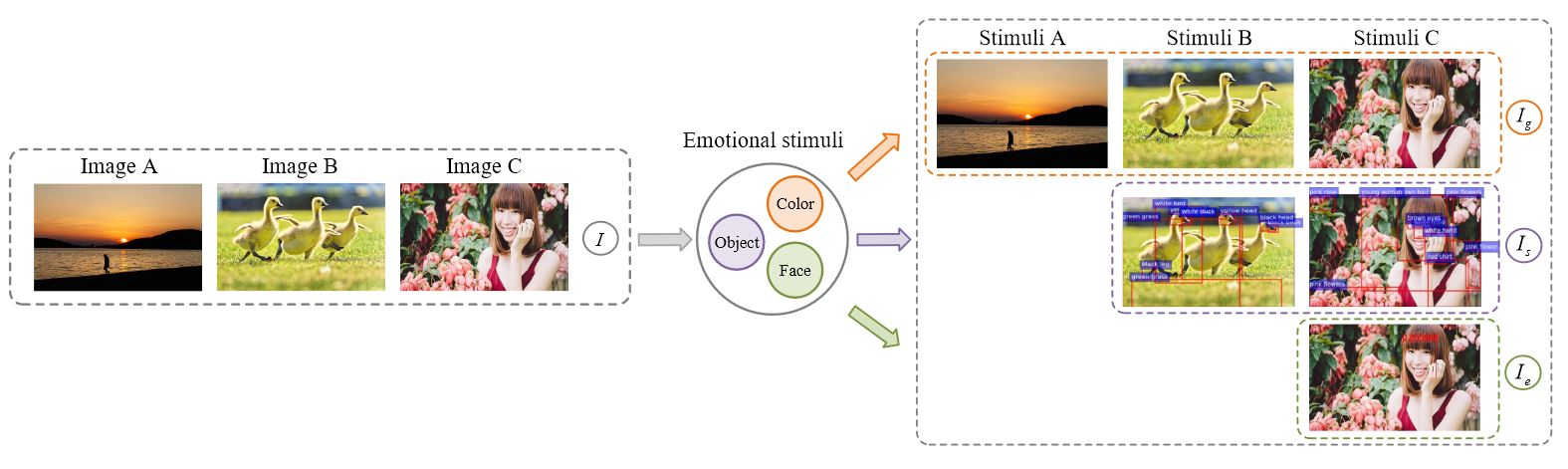

Research on Visual Emotion Analysis from 2021 introduced stimuli-aware emotion recognition, which outperforms state-of-the-art methods on visual emotion datasets. The method detects a comprehensive set of emotional stimuli (such as color, objects, or faces) that can evoke different emotions (positive, negative, or neutral).

Although the method is comparatively complex and computationally resource-intensive, it achieves slightly higher accuracy on the FI dataset (72%) than WSCNet (70.07%).

Drawing on psychological theory, the approach detects external factors and stimuli by analyzing color, detected objects, and facial emotion in images. As a result, an affective image is represented as a set of emotional stimuli that can then be used for emotion prediction.
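A simple way to combine predictions from several stimuli is a weighted late fusion of their class probabilities. The sketch below is hypothetical and much simpler than the stimuli-aware method described above. The per-stimulus probabilities and the weights are invented inputs. The published approach learns how to use the stimuli rather than averaging fixed scores.

```python
from typing import Dict, List

CLASSES = ["positive", "negative", "neutral"]


def fuse_stimuli(per_stimulus: Dict[str, List[float]], weights: Dict[str, float]) -> List[float]:
    """Weighted average of per-stimulus class probabilities (hypothetical late fusion)."""
    total_w = sum(weights[name] for name in per_stimulus)
    fused = [0.0] * len(CLASSES)
    for name, probs in per_stimulus.items():
        for i, p in enumerate(probs):
            fused[i] += weights[name] * p / total_w
    return fused


per_stimulus = {  # invented outputs of three stimulus-specific predictors
    "color": [0.6, 0.2, 0.2],
    "object": [0.5, 0.3, 0.2],
    "face": [0.1, 0.8, 0.1],
}
weights = {"color": 1.0, "object": 1.0, "face": 2.0}  # invented weights
fused = fuse_stimuli(per_stimulus, weights)
print(CLASSES[fused.index(max(fused))])  # negative
```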

Applications of AI Emotion Recognition and Sentiment Analysis

There is growing demand for various types of sentiment analysis in the AI and computer vision market. Visual emotion analysis is not yet widely used at scale, but solving its tasks is expected to have a major impact on real-world applications.

Opinion Mining and Customer Service Analysis

Opinion mining, or sentiment analysis, aims to extract people’s opinions, attitudes, and specific emotions from data. Conventional sentiment analysis concentrates primarily on text data or context, for example customers’ online reviews or news articles. However, visual content such as images and videos has become popular for self-expression and product reviews on social networks, so visual sentiment analysis is starting to receive attention. Opinion mining is an important tool for smart advertising, because it helps companies measure their brand reputation and analyze how their products or services are received.

Customer service can also be improved in two ways. First, the computer vision system can analyze the emotions of the service representative and provide feedback on how they can improve their interactions. Second, customers can be analyzed in stores or during other interactions with staff. This shows whether their shopping experience was positive or negative overall and whether they experienced happiness or disappointment. Customer sentiment can thus be turned into actionable recommendations for retailers on how to improve the customer experience.
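Per-frame emotion predictions can be summarized for a customer interaction by mapping each emotion to a valence and counting the share of frames per valence. The valence mapping below is an assumption made for illustration. Surprise, for instance, is ambiguous and is treated as neutral here. The frame labels are made up.

```python
from collections import Counter
from typing import Dict, Iterable

# Hypothetical valence mapping; surprise is ambiguous and treated as neutral here.
VALENCE = {
    "happiness": "positive",
    "anger": "negative", "disgust": "negative", "fear": "negative", "sadness": "negative",
    "surprise": "neutral", "neutral": "neutral",
}


def summarize_visit(frame_labels: Iterable[str]) -> Dict[str, float]:
    """Share of frames per valence over one customer interaction."""
    counts = Counter(VALENCE[label] for label in frame_labels)
    total = sum(counts.values())
    if total == 0:  # no frames: report zeros instead of dividing by zero
        return {"positive": 0.0, "negative": 0.0, "neutral": 0.0}
    return {v: counts[v] / total for v in ("positive", "negative", "neutral")}


labels = ["neutral", "happiness", "happiness", "surprise", "sadness"]
summary = summarize_visit(labels)
print(summary)  # {'positive': 0.4, 'negative': 0.2, 'neutral': 0.4}
```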

Medical Sentiment Analysis

Medical sentiment classification concerns the patient’s health status, medical conditions, and treatment. Its analysis and extraction have several applications in mental illness treatment, remote medical services, and human-computer interaction.

Emotional Relationship Recognition

Recent research has developed an approach that recognizes people’s emotional states in order to perform pairwise emotional relationship recognition. The challenge is to characterize the emotional relationship between two interacting characters using AI-based video analytics.

What’s Next for AI Emotion Recognition and Sentiment Analysis With Computer Vision?

Sentiment analysis and emotion recognition are key tasks for building empathetic systems and human-computer interaction based on user emotion. Deep learning solutions were originally designed for servers with practically unlimited resources, so real-world deployment to edge devices (Edge AI) is a challenge. However, real-time inference makes large-scale emotion recognition solutions possible.

If you are looking for an enterprise-grade platform to deliver computer vision rapidly with no code and automation, check out Viso Suite. Industry leaders use it to build, deploy, monitor, and maintain their AI applications. Get a demo for your team.

Read More About AI Emotion Recognition

Read more and check out related articles: