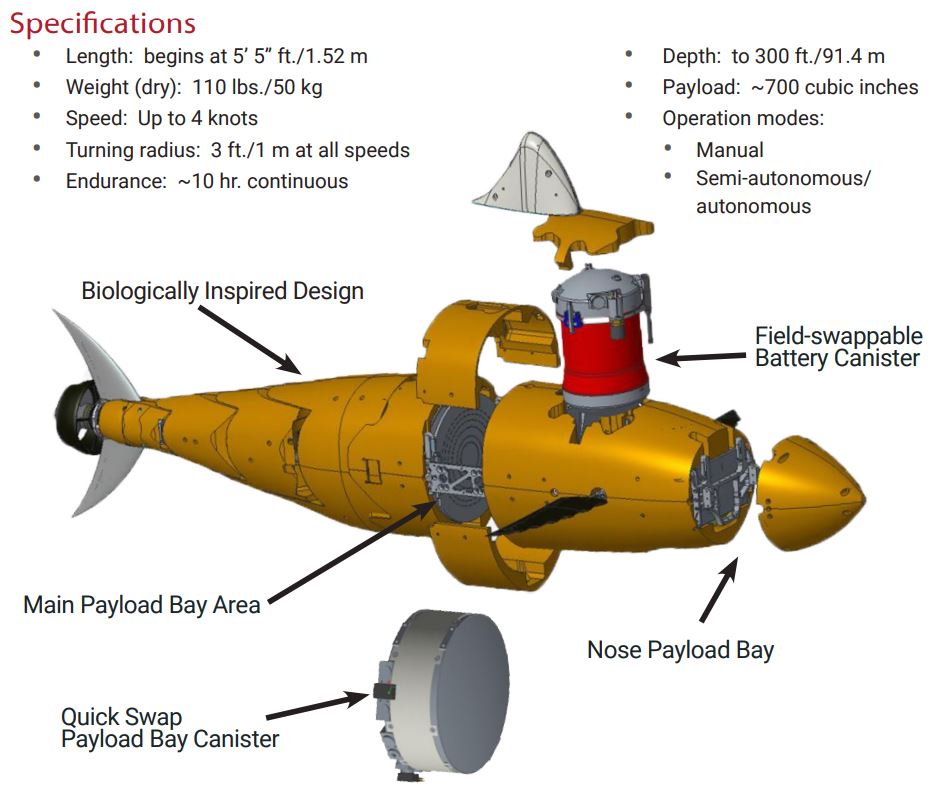

Autonomous underwater vehicles (AUVs) are unmanned underwater robots that are either controlled by an operator or pre-programmed to explore different waters autonomously. These robots are usually equipped with cameras, sonars, and depth sensors, allowing them to navigate autonomously and collect valuable data in challenging underwater environments. Unlike remotely operated vehicles (ROVs), AUVs don't require continuous input from operators, and with the development of AI, these vehicles are more capable than ever. AI has enabled AUVs to navigate complex underwater environments, make intelligent decisions, and perform various tasks with minimal human intervention.

In this article, we'll delve into AI in AUVs. We'll explore the key AI technologies that enable them, examine real-world applications, and walk through a hands-on tutorial for obstacle detection.

About us: Viso Suite is the end-to-end platform for building, deploying, and scaling visual AI. It makes it possible for enterprise teams to implement AI solutions like people tracking, defect detection, and intrusion alerting seamlessly into their business processes. To learn more about Viso Suite, book a demo with our team.

AI Technologies for Autonomous Underwater Vehicles (AUVs)

Artificial intelligence (AI) and machine learning (ML) have been transforming various industries, including autonomous vehicles. Whether it's self-driving cars or AUVs, AI technologies like computer vision (CV) provide the abilities that turn these ideas into reality. CV is a field of AI that enables machines to understand the world through vision. There are several ways a machine can "see", including techniques like depth estimation, object detection, recognition, and scene understanding. This section explores the AI technologies engineers use for autonomous underwater vehicles.

Computer Vision (CV)

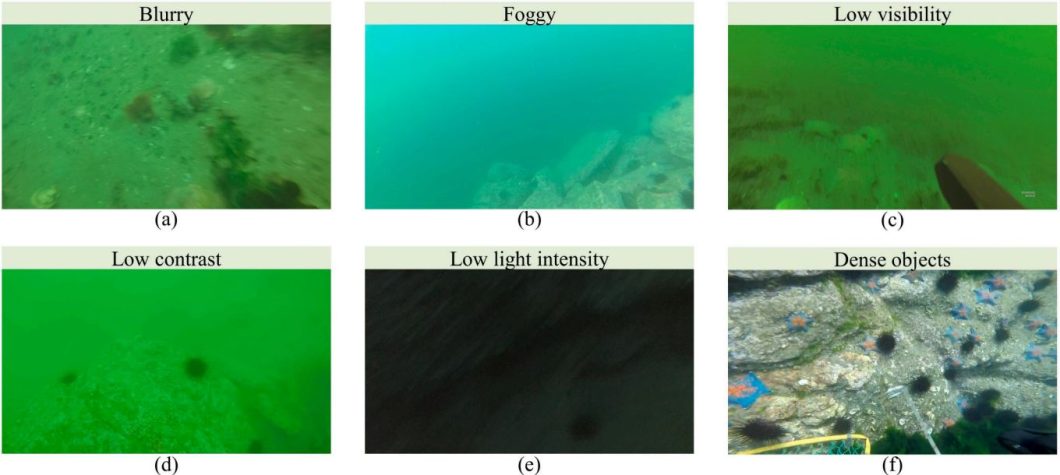

Computer vision is one of the main AI applications in AUVs. Underwater vision is a challenging task, and several factors can affect it. Objects are less visible underwater because water absorbs and scatters light, so natural illumination is lower. High-quality cameras capable of capturing clear images in low-light conditions are therefore a requirement for effective computer vision. Additionally, depth affects not only the vision but also the hardware: deep waters have high pressures, and equipment must be able to withstand them.

With these challenges solved, AI can start analyzing footage and performing a variety of tasks. The following are some computer vision tasks autonomous underwater vehicles perform.

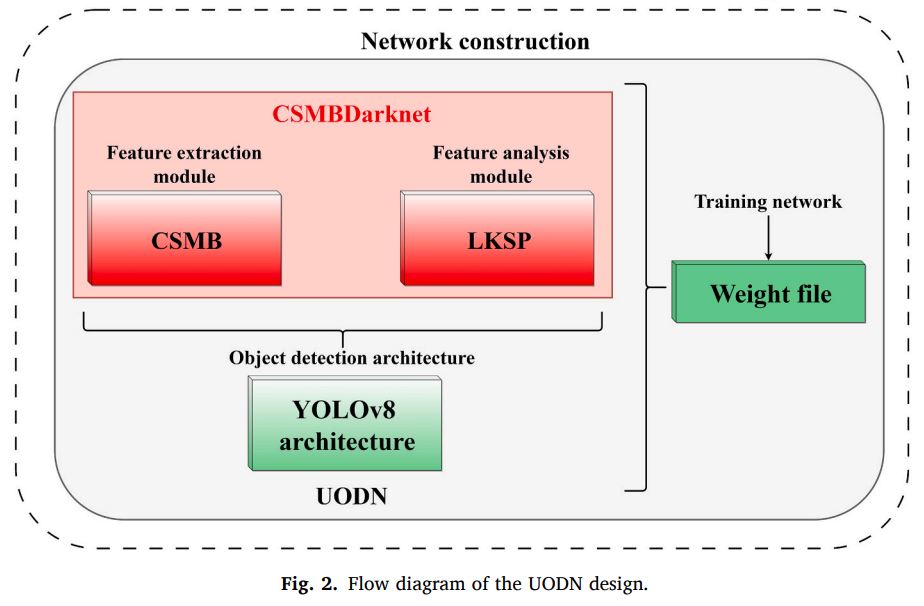

Most popular underwater object detection models build on general-purpose models like YOLOv8. These models are based on convolutional neural networks (CNNs), a popular type of artificial neural network (ANN) that works well for vision tasks like classification and detection. However, researchers fine-tune these machine learning models and come up with variations that work better for underwater object detection tasks. Some modifications include adding a cross-stage multi-branch (CSMB) module and a large kernel spatial pyramid (LKSP) module.

Other tasks include underwater mapping, where AUVs play a vital role. AUVs enable the creation of detailed 3D maps of the ocean floor and underwater structures. This process often involves combining computer vision techniques with other sensor data, such as sonar and depth sensors. Depth estimation can be used to generate depth maps, which can then be used for 3D reconstruction and mapping.
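To make the step from a depth map to 3D reconstruction more concrete, here is a minimal sketch of back-projecting depth pixels into 3D points. It assumes a simple pinhole camera model and ignores refraction at the camera housing, which a real underwater system would need to calibrate for. The function name and the intrinsics (`fx`, `fy`, `cx`, `cy`) are illustrative assumptions, not part of any specific AUV software.

```python
def depth_map_to_points(depth, fx, fy, cx, cy):
    """Back-project a depth map (rows of depths in metres) into 3D points.

    Assumes a pinhole camera: fx, fy are focal lengths in pixels and
    (cx, cy) is the principal point. All values here are hypothetical.
    """
    points = []
    for v, row in enumerate(depth):          # v: pixel row
        for u, z in enumerate(row):          # u: pixel column, z: depth
            if z <= 0:
                continue                     # skip pixels with no valid depth
            x = (u - cx) * z / fx            # horizontal offset in metres
            y = (v - cy) * z / fy            # vertical offset in metres
            points.append((x, y, z))
    return points


# A tiny 2x2 depth map; 0.0 marks a pixel without a depth estimate
depth = [[2.0, 2.0],
         [0.0, 4.0]]
points = depth_map_to_points(depth, fx=1.0, fy=1.0, cx=0.5, cy=0.5)
print(points)
```

The resulting point cloud is the kind of intermediate data a mapping pipeline could merge across many frames to build a 3D model.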

Navigation and Path Planning

Artificial intelligence becomes particularly useful for tasks like navigation and path planning. The underwater environment, especially at extreme depths, poses many challenges. These include poor communication, which makes it hard for a surface operator to navigate the waters accurately. Additionally, underwater environments differ from one another, making adaptability key to navigating correctly. This includes always taking the best path with respect to energy consumption and mission goals. AI enables these capabilities by providing algorithms and techniques that allow AUVs to adapt to dynamic conditions and make intelligent decisions.

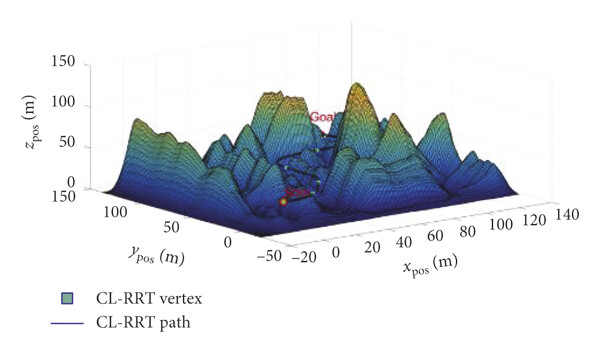

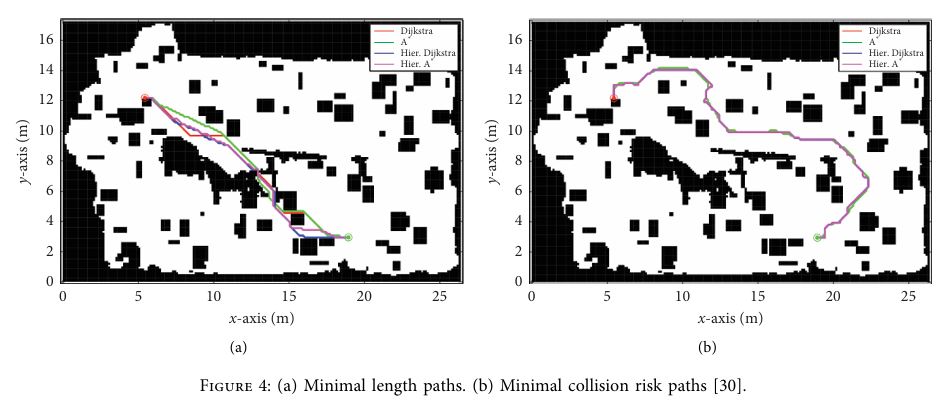

Before an autonomous underwater vehicle can navigate the environment, it needs to understand its surroundings. This is usually done with environment modeling, using techniques like underwater mapping. This model, as seen above, includes obstacles, currents, and other relevant features of the environment. Once the environment is modeled, the AUV needs to plan a path from its starting point to its destination, depending on factors like energy consumption and the mission goal. This requires using a variety of path-planning algorithms to optimize against certain criteria. The following are some of these algorithms.

Each algorithm can benefit path planning differently. For example, neural networks are a great way to optimize for adaptability by learning complex relationships between sensor data and optimal control actions. Swarm intelligence is especially useful when multiple AUVs share data for cooperative tasks. Researchers also use more classical algorithms like A* and Dijkstra's. They work by finding the lowest-cost path for a given goal, which suits environments with well-defined obstacles.
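As an illustration of the classical approach, the following is a minimal sketch of A* on a small 2D grid, where `1` marks an obstacle and `0` marks free water. The grid, the unit step cost, and the 4-connected movement are simplifying assumptions; a real planner would work in 3D and could fold currents or energy use into the cost.

```python
import heapq


def astar(grid, start, goal):
    """Return the shortest 4-connected path from start to goal, or None."""
    rows, cols = len(grid), len(grid[0])

    def heuristic(cell):
        # Manhattan distance never overestimates on a unit-cost grid
        return abs(cell[0] - goal[0]) + abs(cell[1] - goal[1])

    open_heap = [(heuristic(start), 0, start)]  # (estimated total, cost so far, cell)
    came_from = {}
    best_cost = {start: 0}

    while open_heap:
        _, cost, current = heapq.heappop(open_heap)
        if current == goal:
            # Walk back through the parents to rebuild the path
            path = [current]
            while current in came_from:
                current = came_from[current]
                path.append(current)
            return path[::-1]
        if cost > best_cost[current]:
            continue  # a cheaper route to this cell was already expanded
        r, c = current
        for nr, nc in ((r + 1, c), (r - 1, c), (r, c + 1), (r, c - 1)):
            if 0 <= nr < rows and 0 <= nc < cols and grid[nr][nc] == 0:
                new_cost = cost + 1
                if new_cost < best_cost.get((nr, nc), float("inf")):
                    best_cost[(nr, nc)] = new_cost
                    came_from[(nr, nc)] = current
                    heapq.heappush(
                        open_heap,
                        (new_cost + heuristic((nr, nc)), new_cost, (nr, nc)),
                    )
    return None  # goal is unreachable


# A wall of obstacles forces the vehicle to go around the right-hand side
grid = [
    [0, 0, 0, 0],
    [1, 1, 1, 0],
    [0, 0, 0, 0],
]
path = astar(grid, (0, 0), (2, 0))
print(path)
```

Setting the heuristic to zero turns this into Dijkstra's algorithm, which explores more cells but needs no estimate of the remaining distance.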

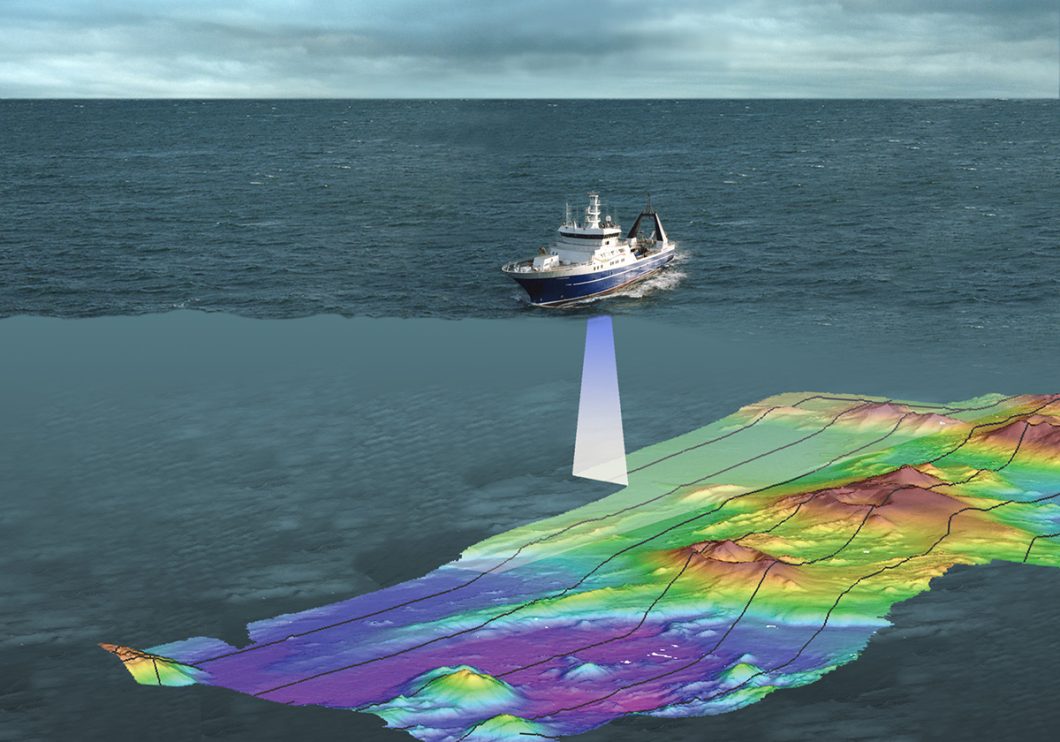

Underwater Mapping

Underwater mapping can be undertaken from different platforms, such as ships, autonomous underwater vehicles, and even low-flying aircraft. The vehicle must be equipped with devices like sonars, sensors, cameras, and more. The data from these devices can then be turned into maps, and AI techniques can be used to enhance the map and accelerate its creation in several ways.

- Occupancy Grids

- Depth Estimation

- Oceanographic Data Integration

As seen in the image above, accurate depth maps can be created using sensor and sonar data. AI algorithms can process this data to update the occupancy grid and provide a representation of the obstacles in the environment. Combined with deep learning techniques like depth estimation and 3D reconstruction, this data can be further used to create detailed maps of the underwater environment. It also makes the map highly customizable and adaptable.
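One common way to update an occupancy grid from range readings is a log-odds update, sketched below. This is an assumption about how such a grid could be maintained, not a description of a specific AUV system. The sensor probabilities (0.7 for a hit, 0.3 for a cell the beam passed through) and the function names are illustrative.

```python
import math

# Assumed sensor model: evidence added per observation, in log-odds
L_OCC = math.log(0.7 / 0.3)    # cell where the sonar beam ended (a hit)
L_FREE = math.log(0.3 / 0.7)   # cell the beam passed through


def update_grid(log_odds, free_cells, hit_cell):
    """Add one sonar reading to a grid of log-odds values (in place)."""
    for r, c in free_cells:
        log_odds[r][c] += L_FREE
    if hit_cell is not None:
        r, c = hit_cell
        log_odds[r][c] += L_OCC
    return log_odds


def probability(log_odds_value):
    """Convert a log-odds value back to an occupancy probability."""
    return 1.0 - 1.0 / (1.0 + math.exp(log_odds_value))


# One row of four cells, all unknown (log-odds 0 means probability 0.5)
grid = [[0.0] * 4]
for _ in range(3):
    # Three identical readings: beam crosses cells 0-2 and ends at cell 3
    update_grid(grid, free_cells=[(0, 0), (0, 1), (0, 2)], hit_cell=(0, 3))

probs = [round(probability(value), 3) for value in grid[0]]
print(probs)
```

Because evidence is added rather than overwritten, repeated consistent readings push a cell toward "occupied" or "free", while a single noisy reading has limited effect.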

For example, researchers could add more data to the mapping process, like current forecasts, water temperatures, and wave speeds and lengths. Underwater mapping is a vital task for understanding the environment under the oceans and seas. It can help with path planning, but it can also help with things like tsunami risk assessments. Let's explore more applications of AUVs in the next section.

Applications of AI-Powered Autonomous Underwater Vehicles

AI-powered AUVs are essential to many applications in the water. This section explores some of the most impactful ways AI AUVs are being used in industry and underwater research.

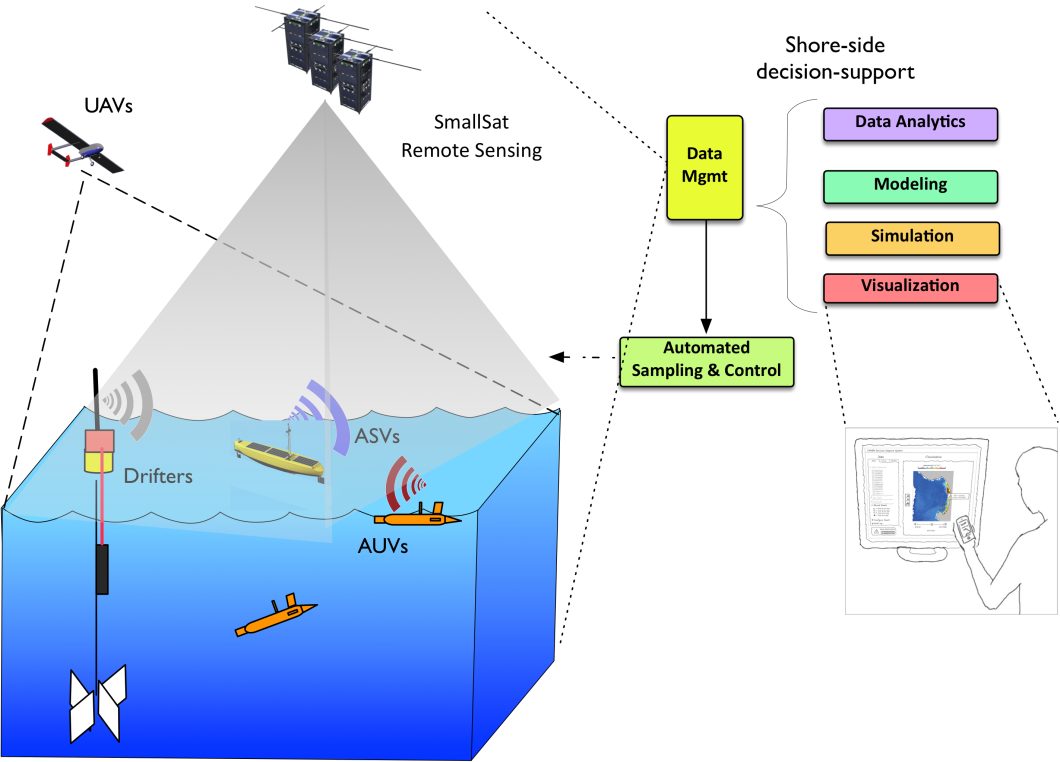

Oceanographic Research

AI-powered AUVs are crucial for oceanographic research. They provide a more autonomous and efficient way to collect and analyze vast amounts of data from the ocean. AI can analyze the data from sensors to measure parameters like temperature, salinity, currents, and even the presence of specific marine organisms. The real transformation is the ability of modern AI algorithms to analyze and provide insights into this data in real time.

The ocean is a vast and complex environment, and research has only discovered and studied 5% of it. However, current developments in AI are enabling capable autonomous underwater vehicles that facilitate and accelerate this discovery and research. Additionally, AI data analysis helps identify subtle changes in ocean currents, track the movement of schools of fish, and even identify potential sites of underwater geological formations.

Environmental Monitoring

Environmental monitoring is another area where AI-powered AUVs are making a significant impact. Researchers are deploying them to monitor the health of underwater ecosystems, assess pollution levels, and even inspect underwater infrastructure. On a coral reef, for example, an AUV can identify signs of coral bleaching, detect the presence of invasive species, and even track changes in water quality that might threaten the reef's health. Engineers can also adapt the vehicle's structure to mimic the biology and physics of fish, so that it lasts longer in the environment and adapts to it while gathering accurate data.

In another scenario, an AI-powered AUV could be used to inspect underwater pipelines or cables, identifying signs of corrosion, damage, or potential leaks. This kind of proactive monitoring can help prevent costly repairs and even environmental disasters.

Underwater Archaeology

Underwater archaeology is an interesting field that often involves exploring and documenting shipwrecks, ancient ruins, or other historical sites hidden under the seas. AI-powered AUVs are providing new tools for archaeologists to investigate these sites without disturbing them. Autonomous underwater vehicles are also used to create 3D models of these sites. AI algorithms can analyze the data collected from images and sensors to identify potential artifacts or digitally reconstruct a ship, allowing us to create interesting simulations.

Light AUVs (LAUVs) are a popular tool archaeologists use to explore and understand historical sites underwater. These sites are usually fragile and can be hard to navigate, but LAUVs provide a non-invasive approach. This approach not only helps preserve delicate underwater sites but also allows for a better and more comprehensive view with techniques like lighting correction.
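The article does not name a specific lighting correction method, so the sketch below uses gray-world white balancing as one simple, assumed example. It rescales each color channel so that the channel averages match, which reduces the blue-green cast typical of underwater images. The function name and the sample pixel values are hypothetical.

```python
def gray_world_balance(pixels):
    """Rescale R, G, B so their averages match (gray-world assumption).

    `pixels` is a list of (r, g, b) tuples with values in 0..255.
    """
    count = len(pixels)
    means = [sum(p[ch] for p in pixels) / count for ch in range(3)]
    gray = sum(means) / 3                      # target average for every channel
    gains = [gray / m if m > 0 else 1.0 for m in means]
    return [
        tuple(min(255, round(p[ch] * gains[ch])) for ch in range(3))
        for p in pixels
    ]


# Two pixels with a strong blue-green cast and very little red
pixels = [(20, 100, 120), (40, 140, 160)]
balanced = gray_world_balance(pixels)
print(balanced)
```

Production pipelines usually combine a step like this with contrast enhancement or learned models, since the gray-world assumption breaks down when a scene is dominated by one color.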

These are just a few interesting applications of AUVs in research and engineering, but there are many more. In the next section, we'll walk through a step-by-step tutorial to build an obstacle detection model.

Hands-on Tutorial: Underwater Object Detection For Autonomous Underwater Vehicles

The autonomous mechanism in AUVs mainly uses reinforcement learning and computer vision, combined with hardware like sensors and cameras. These vehicles are usually sent on missions, which can include looking for something specific, like inspecting submarines for damage or looking for a particular species in the ocean. Almost any mission goal can use object detection capabilities to increase efficiency. This tutorial will use object detection to look for waste plastic underwater.

Collecting The Data

For this tutorial, we'll use Kaggle, Python, and YOLOv5. Kaggle will provide the space to collect data, process it, and train the model. Kaggle also contains a large collection of datasets to use for autonomous underwater vehicles. However, since our mission objective is to detect and find waste, we'll use one specific dataset on underwater plastic pollution detection. Our first step is to start the Kaggle notebook and load the required dataset into it. Then we can import the libraries we need.

    import os
    import yaml
    import matplotlib.pyplot as plt
    import matplotlib.patches as patches
    from PIL import Image

Now let's look at what kind of objects are included in this dataset. These are called classes, and we can find them in the "data.yaml" file. The following Python code defines the path, opens the "data.yaml" file, and prints all the classes.

    dataset_path = "/kaggle/input/underwater-plastic-pollution-detection/underwater_plastics"

    # Read the class names from the dataset configuration file
    with open(os.path.join(dataset_path, "data.yaml"), 'r') as f:
        data = yaml.safe_load(f)

    class_list = data['names']
    print("Classes in the dataset:", class_list)

This dataset includes the following classes: ['Mask', 'can', 'cellphone', 'electronics', 'gbottle', 'glove', 'metal', 'misc', 'net', 'pbag', 'pbottle', 'plastic', 'rod', 'sunglasses', 'tire']. However, we will not need all of them, so in the next section we'll process the dataset and keep only the classes we need. But first, let's look at some samples from this dataset. The following code shows a defined number of samples from the dataset, along with their annotated bounding boxes.

    def visualize_samples(dataset_path, num_samples=10):
        # Load the class names so label IDs can be shown as text
        with open(os.path.join(dataset_path, "data.yaml"), 'r') as f:
            data = yaml.safe_load(f)
        class_list = data['names']

        image_dir = os.path.join(dataset_path, "train", "images")
        label_dir = os.path.join(dataset_path, "train", "labels")

        # List the directory once and take the first num_samples images
        for image_file in sorted(os.listdir(image_dir))[:num_samples]:
            label_file = os.path.splitext(image_file)[0] + ".txt"
            image_path = os.path.join(image_dir, image_file)
            label_path = os.path.join(label_dir, label_file)

            img = Image.open(image_path)
            fig, ax = plt.subplots(1)
            ax.imshow(img)

            with open(label_path, 'r') as f:
                lines = f.readlines()

            for line in lines:
                # YOLO labels: class ID, then box center and size normalized to [0, 1]
                class_id, x_center, y_center, width, height = map(float, line.strip().split())
                class_name = class_list[int(class_id)]

                # Convert to pixel coordinates of the top-left corner
                x_min = (x_center - width / 2) * img.width
                y_min = (y_center - height / 2) * img.height
                bbox_width = width * img.width
                bbox_height = height * img.height

                rect = patches.Rectangle((x_min, y_min), bbox_width, bbox_height, linewidth=1, edgecolor="r", facecolor="none")
                ax.add_patch(rect)
                ax.text(x_min, y_min, class_name, color="r")

            plt.show()

    visualize_samples(dataset_path)
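The bounding-box arithmetic in the visualization code can be checked in isolation. The sketch below converts one YOLO-format box (center and size normalized to the image dimensions) into pixel corner coordinates. The helper name and the sample numbers are made up for illustration.

```python
def yolo_to_pixel_box(x_center, y_center, width, height, img_w, img_h):
    """Convert a normalized YOLO box to (x_min, y_min, x_max, y_max) in pixels."""
    x_min = (x_center - width / 2) * img_w
    y_min = (y_center - height / 2) * img_h
    x_max = (x_center + width / 2) * img_w
    y_max = (y_center + height / 2) * img_h
    return x_min, y_min, x_max, y_max


# A box centered in a 640x480 image, a quarter as wide and half as tall
box = yolo_to_pixel_box(0.5, 0.5, 0.25, 0.5, img_w=640, img_h=480)
print(box)  # → (240.0, 120.0, 400.0, 360.0)
```

Because the labels are normalized, the same label file stays valid when the image is resized, which is convenient when training at a fixed input size.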

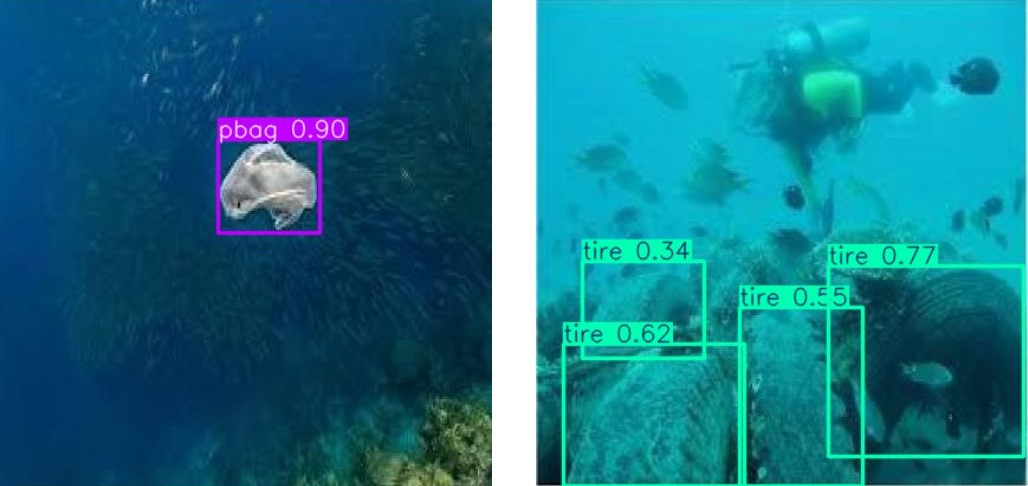

The following are some samples of the classes we're interested in.

As mentioned previously, it's better to take only the needed classes from the dataset. For the mission goal, the following classes seem to be the most relevant: ["can", "cellphone", "net", "pbag", "pbottle", "Mask", "tire"]. Next, let's process this data to extract the classes we need.

Data Processing

In this section, we'll take a list of classes from the dataset to use later in training the YOLOv5 model. The mission goal is to detect trash and waste for removal. The dataset we have has many classes, but we only want a handful of those. With Python, we can extract the needed classes and organize them in a new folder. For this, I've prepared a simple Python function that takes a dataset and extracts the needed classes into a new output folder.

    import os
    import shutil
    import yaml
    from pathlib import Path
    from tqdm import tqdm

    def extract_classes(dataset_path, classes_to_extract, output_dir):
        """
        Extracts specified classes from the dataset into a new dataset.

        Args:
            dataset_path (str): Path to the dataset directory
            classes_to_extract (list): List of class names to extract
            output_dir (str): Path to the output directory for the new dataset
        """
        dataset_path = Path(dataset_path)
        output_dir = Path(output_dir)

        # Read class names from yaml
        try:
            with open(dataset_path / "data.yaml", 'r') as f:
                data = yaml.safe_load(f)
            class_list = data['names']

            # Map each original class ID to its new ID, following the order of
            # classes_to_extract so the IDs match the names written to data.yaml
            kept_classes = [name for name in classes_to_extract if name in class_list]
            class_id_map = {class_list.index(name): new_id
                            for new_id, name in enumerate(kept_classes)}
            if not class_id_map:
                raise ValueError(f"None of the specified classes {classes_to_extract} found in dataset")
        except FileNotFoundError:
            raise FileNotFoundError(f"Could not find data.yaml in {dataset_path}")
        except KeyError:
            raise KeyError("data.yaml does not contain 'names' field")

        # Create output structure
        output_dir.mkdir(parents=True, exist_ok=True)

        # Copy data.yaml with only extracted classes
        new_data = data.copy()
        new_data['names'] = kept_classes
        new_data['nc'] = len(kept_classes)  # keep the class count consistent
        with open(output_dir / "data.yaml", 'w') as f:
            yaml.dump(new_data, f)

        # Process each split
        for split in ['train', 'valid', 'test']:
            split_dir = dataset_path / split
            if not split_dir.exists():
                print(f"Warning: {split} directory not found, skipping...")
                continue

            # Create output directories for this split
            out_split = output_dir / split
            out_images = out_split / 'images'
            out_labels = out_split / 'labels'
            out_images.mkdir(parents=True, exist_ok=True)
            out_labels.mkdir(parents=True, exist_ok=True)

            # Process label files first to identify needed images
            label_files = list((split_dir / 'labels').glob('*.txt'))
            needed_images = set()

            print(f"Processing {split} split...")
            for label_path in tqdm(label_files):
                keep_file = False
                new_lines = []
                try:
                    with open(label_path, 'r') as f:
                        lines = f.readlines()

                    for line in lines:
                        parts = line.strip().split()
                        if not parts:
                            continue
                        class_id = int(parts[0])
                        if class_id in class_id_map:
                            # Remap class ID to new index
                            new_class_id = class_id_map[class_id]
                            new_lines.append(f"{new_class_id} {' '.join(parts[1:])}\n")
                            keep_file = True

                    if keep_file:
                        needed_images.add(label_path.stem)
                        # Write new label file
                        with open(out_labels / label_path.name, 'w') as f:
                            f.writelines(new_lines)
                except Exception as e:
                    print(f"Error processing {label_path}: {str(e)}")
                    continue

            # Copy only the images we need
            image_dir = split_dir / 'images'
            if not image_dir.exists():
                print(f"Warning: images directory not found for {split}")
                continue

            for img_path in image_dir.glob('*'):
                if img_path.stem in needed_images:
                    try:
                        shutil.copy2(img_path, out_images / img_path.name)
                    except Exception as e:
                        print(f"Error copying {img_path}: {str(e)}")

        print("Extraction complete!")

        # Print statistics
        print("\nDataset statistics:")
        for split in ['train', 'valid', 'test']:
            if (output_dir / split).exists():
                n_images = len(list((output_dir / split / 'images').glob('*')))
                print(f"{split}: {n_images} images")

Deep learning datasets usually split the data into three folders: training, validation, and testing. In the code above, we go to each of those folders, find the images folder and the labels folder, and extract the images with the labels we want. Now, we can use this function by calling it as follows.

    classes_to_extract = ["can", "cellphone", "net", "pbag", "pbottle", "Mask", "tire"]
    output_dir = "/kaggle/working/extracted_dataset"
    extract_classes(dataset_path, classes_to_extract, output_dir)
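When only a subset of classes is kept, the class IDs inside the label files have to be renumbered so that they line up with the new list of names. The sketch below shows that renumbering on a toy example. The class names, label lines, and helper names are hypothetical and much smaller than the real dataset.

```python
def build_id_map(all_names, keep_names):
    """Return the kept names and a mapping from old class ID to new class ID."""
    kept = [name for name in keep_names if name in all_names]
    id_map = {all_names.index(name): new_id for new_id, name in enumerate(kept)}
    return kept, id_map


def remap_label_lines(lines, id_map):
    """Drop boxes of unwanted classes and renumber the remaining ones."""
    remapped = []
    for line in lines:
        parts = line.split()
        if not parts:
            continue                      # ignore blank lines
        old_id = int(parts[0])
        if old_id in id_map:
            remapped.append(" ".join([str(id_map[old_id])] + parts[1:]))
    return remapped


# Toy dataset with four classes; we keep "net" and "can", in that order
all_names = ["Mask", "can", "glove", "net"]
kept, id_map = build_id_map(all_names, ["net", "can"])

labels = [
    "3 0.5 0.5 0.2 0.2",   # net   -> becomes class 0
    "2 0.1 0.1 0.1 0.1",   # glove -> dropped
    "1 0.3 0.3 0.1 0.1",   # can   -> becomes class 1
]
new_labels = remap_label_lines(labels, id_map)
print(kept, id_map, new_labels)
```

The key point is that the new IDs follow the order of the kept names, so ID 0 in a label file always refers to the first entry of the new names list.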

Now we're ready to use the extracted dataset to train a YOLOv5 model in the next section.

Train Model

We'll start by downloading the YOLOv5 repository and installing the needed libraries.

    !git clone https://github.com/ultralytics/yolov5
    !pip install -r yolov5/requirements.txt

Now we can import the installed libraries.

    import os
    import yaml
    from pathlib import Path
    import shutil
    import torch
    from PIL import Image
    from tqdm import tqdm

Finally, before starting the training script, let's prepare our data to match the model requirements. This means setting the image size used for resizing, updating the data configuration file "data.yaml", and defining a few important parameters for the YOLOv5 model.

    DATASET_PATH = Path("/kaggle/working/extracted_dataset")
    IMG_SIZE = 640
    BATCH_SIZE = 16
    EPOCHS = 50

    # Read original data.yaml
    with open(DATASET_PATH / 'data.yaml', 'r') as f:
        data = yaml.safe_load(f)

    # Create new YAML configuration
    train_path = str(DATASET_PATH / 'train')
    val_path = str(DATASET_PATH / 'valid')
    nc = len(data['names'])  # number of classes
    names = data['names']  # class names

    yaml_content = {
        'path': str(DATASET_PATH),
        'train': train_path,
        'val': val_path,
        'nc': nc,
        'names': names
    }

    # Save the YAML file
    yaml_path = DATASET_PATH / 'dataset.yaml'
    with open(yaml_path, 'w') as f:
        yaml.dump(yaml_content, f, sort_keys=False)

    print(f"Created dataset config at {yaml_path}")
    print(f"Number of classes: {nc}")
    print(f"Classes: {names}")

Great! Now we can use the train.py script we got by downloading the YOLOv5 repository to train the model. However, this isn't training from scratch, as that would need extensive time and resources. We'll use a pre-trained checkpoint, YOLOv5s (small). This model is efficient and would be practical to install on a trash collection autonomous underwater vehicle. Additionally, we have defined the number of epochs the model will train for. The following is how we would use the defined parameters with the training script.

    !python yolov5/train.py --img {IMG_SIZE} --batch {BATCH_SIZE} --epochs {EPOCHS} --data {yaml_path} --weights yolov5s.pt --workers 4 --cache

This process will take around 30 minutes to complete 50 epochs. The number of epochs can be reduced, but that might give less accurate results. After training, we can run inference with our trained model on a few examples found online, to test the model on images different from those in the dataset. The following code loads the trained model.

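    import torch

    # Load the trained checkpoint through the cloned YOLOv5 repository.
    # torch.hub is used here because weights trained with the YOLOv5 repository
    # are generally not loadable through the ultralytics YOLO class.
    model = torch.hub.load("/kaggle/working/yolov5", "custom", path="/kaggle/working/yolov5/runs/train/exp2/weights/best.pt", source="local")

Next, let's try it out!

    results = model("/kaggle/input/test-AUVs_underwater_pollution/image.jpg")
    results.show()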

The following are some results.

The Future Of Autonomous Underwater Vehicles

The advancements in AI and AUVs have opened up new possibilities for underwater exploration and research. AI algorithms are enabling AUVs to become more intelligent and capable of operating with greater autonomy. This is particularly important in underwater environments, where communication is limited and the ability to adapt to dynamic conditions is crucial. The future of AUVs is promising, with potential applications in various fields.

In oceanographic research, AI-powered AUVs can explore vast and uncharted places, collecting valuable data and providing insights into the mysteries of our oceans. In environmental monitoring, AUVs can play a vital role in assessing pollution levels, monitoring underwater ecosystems, and protecting marine biodiversity. Moreover, AUVs can be used to inspect underwater infrastructure, such as pipelines, cables, and offshore platforms, ensuring its integrity and preventing potential hazards.

As AI technology continues to advance, we can expect AUVs to become even more sophisticated and capable of performing complex tasks with minimal human intervention. This will not only expand their applications in research and industry but also open up new possibilities for underwater exploration and discovery.