AlexNet is an image classification model that transformed deep learning. It was introduced by Alex Krizhevsky, Ilya Sutskever, and Geoffrey Hinton in 2012, and marked a key event in the history of deep learning, showcasing the strengths of CNN architectures and their wide range of applications.

Before AlexNet, people were skeptical about whether deep learning could be applied successfully to very large datasets. However, a team of researchers was driven to prove that deep neural architectures were the future, and succeeded; interest in deep learning exploded after 2012.

The deep learning (DL) model was designed to compete in the ImageNet Large Scale Visual Recognition Challenge (ILSVRC) in 2012. This is an annual competition that benchmarks algorithms for image classification. Before ImageNet, no dataset large enough to train deep neural networks was available. Consequently, ImageNet also played a role in the success of deep learning.

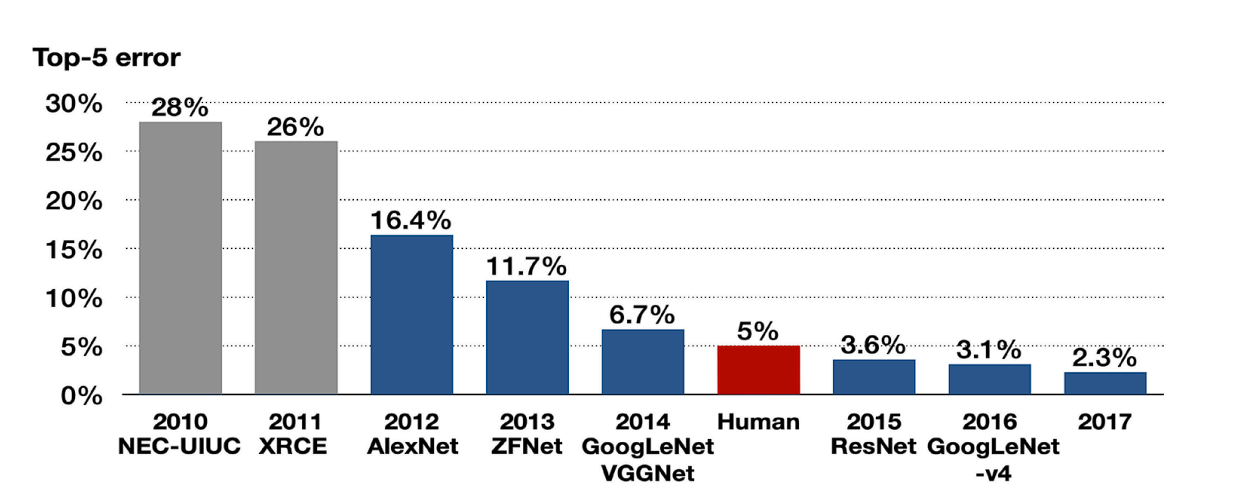

In that competition, AlexNet performed exceptionally well. It significantly outperformed the runner-up model, reducing the top-5 error rate from 26.2% to 15.3%.

Before AlexNet

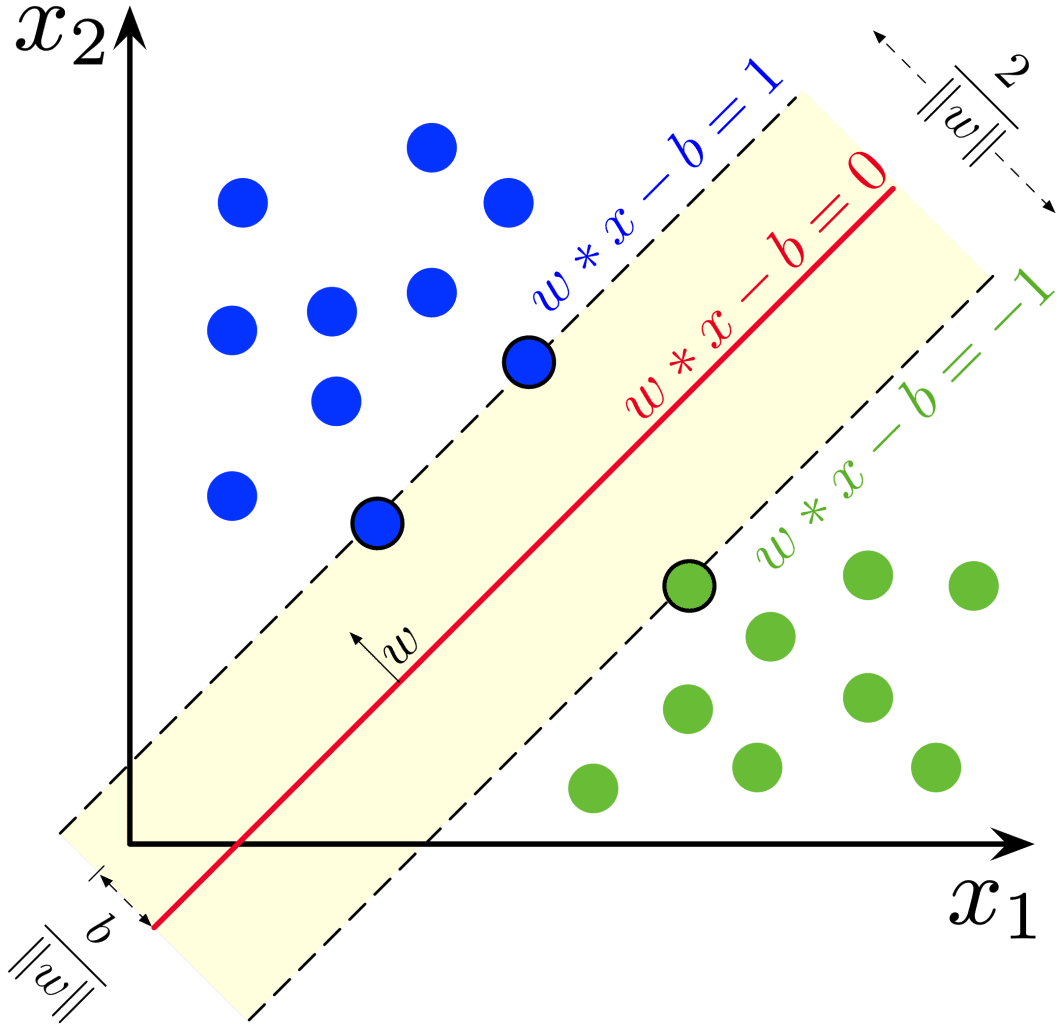

Machine learning models such as Support Vector Machines (SVMs) and shallow neural networks dominated computer vision before the development of AlexNet. Training a deep model with millions of parameters seemed impossible. We will examine why, but first, let’s look at the limitations of the earlier machine learning (ML) models.

- Feature Engineering: SVMs and simple neural networks (NNs) require extensive handcrafted feature engineering, which makes scaling and generalization difficult.

- Vanishing Gradient Problem: Deep networks faced a vanishing gradient problem, in which the gradients become too small during backpropagation or disappear completely.

Due to computational limitations, vanishing gradients, and the lack of large datasets to train on, most neural networks were shallow. These obstacles made it hard for models to generalize.

What is ImageNet?

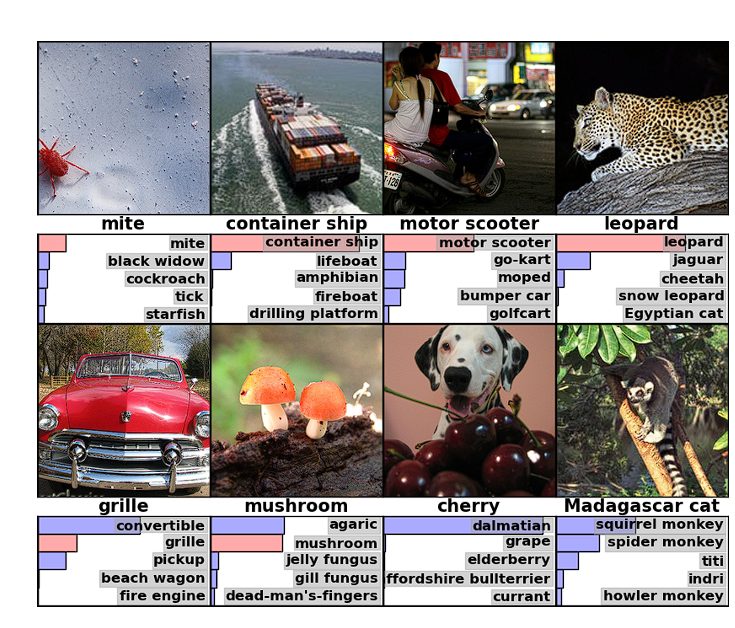

ImageNet is a vast visual database designed for object recognition research. It consists of over 14 million hand-annotated images in over 20,000 categories. This dataset played a major role in the success of AlexNet and the advancements in DL, as previously there was no large dataset on which deep NNs could be trained.

The ImageNet project also conducts the annual ImageNet Large Scale Visual Recognition Challenge (ILSVRC), in which researchers evaluate how accurately their algorithms classify objects and scenes.

The competition is important because it allows researchers to push the limits of existing models and invent new methodologies. Several breakthrough models have been unveiled in this competition, AlexNet being one of them.

Contribution of AlexNet

The research paper on AlexNet, titled “ImageNet Classification with Deep Convolutional Neural Networks”, addressed the problems discussed above.

The paper’s release deeply influenced the trajectory of deep learning. The methods and innovations it introduced became a standard for training deep neural networks. Here are the key innovations:

- Deep Architecture: The model used a deeper architecture than the NN models commonly used before it. It consisted of five convolutional layers followed by three fully connected layers.

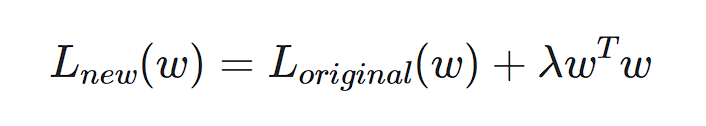

- ReLU Nonlinearity: CNNs at the time used functions such as Tanh or Sigmoid to process information between layers. These functions slowed down training. In contrast, ReLU (Rectified Linear Unit) made the entire process simpler and many times faster. It passes the input through unchanged when the input is positive; otherwise it outputs zero.

- Overlapping Pooling: Overlapping pooling works like a regular max pooling layer, except that as the window moves across the input, it overlaps with the previous window. This reduced AlexNet’s error rate.

- Use of GPU: Before AlexNet, NNs were typically trained on CPUs, which made the process slow. The authors of AlexNet used GPUs, which reduced computation time significantly. This proved that deep NNs could feasibly be trained on GPUs.

- Local Response Normalization (LRN): This normalizes the activity of each neuron using the activity of neurons at the same spatial position in adjacent channels, that is, within a local neighborhood.
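To make the LRN idea concrete, here is a minimal sketch that normalizes a list of channel activations taken at a single spatial position. The function name `local_response_norm` is made up for illustration, and the default constants (`n=5`, `k=2`, `alpha=1e-4`, `beta=0.75`) are assumed to match the values reported in the AlexNet paper; treat it as an illustration rather than the original implementation.

```python
def local_response_norm(channels, n=5, k=2.0, alpha=1e-4, beta=0.75):
    """Normalize each channel by the squared activity of its n nearest channels.

    `channels` holds the activations of every kernel map at one (x, y) position.
    The constants are assumptions taken from commonly cited AlexNet settings.
    """
    num_channels = len(channels)
    half = n // 2
    normalized = []
    for i, activation in enumerate(channels):
        lo = max(0, i - half)                 # clamp the window at the first channel
        hi = min(num_channels - 1, i + half)  # clamp the window at the last channel
        square_sum = sum(channels[j] ** 2 for j in range(lo, hi + 1))
        normalized.append(activation / (k + alpha * square_sum) ** beta)
    return normalized


if __name__ == "__main__":
    print(local_response_norm([1.0, 20.0, 3.0, 0.5, 8.0, 2.0]))
```

A channel surrounded by strongly active neighbors is scaled down more than one surrounded by quiet neighbors, which is the competition effect LRN is meant to create.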

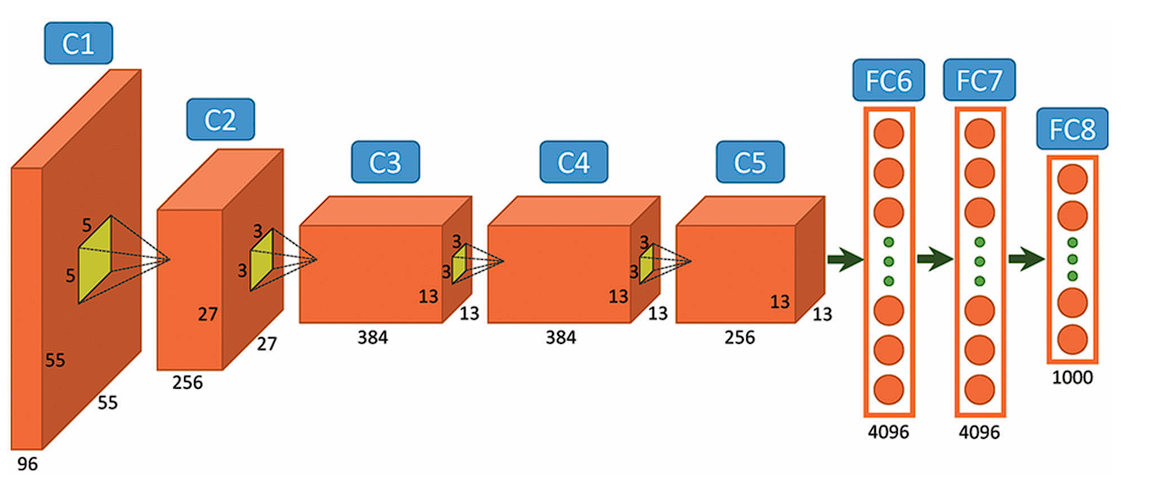

The AlexNet Architecture

AlexNet has a fairly simple architecture compared with the latest deep learning models. It consists of eight layers: five convolutional layers and three fully connected layers.

However, it integrates several key innovations of its time, including the ReLU activation function, Local Response Normalization (LRN), and overlapping max pooling. We will look at each of them below.

Input Layer

AlexNet takes RGB input images of size 227x227x3.

Convolutional Layers

- First Layer: The first layer uses 96 kernels of size 11×11 with a stride of 4, applies the ReLU activation function to their outputs, and then performs a max pooling operation.

- Second Layer: The second layer takes the output of the first layer as its input and filters it with 256 kernels of size 5x5x48.

- Third Layer: 384 kernels of size 3x3x256. The third, fourth, and fifth layers are connected to one another without any intervening pooling or normalization operations.

- Fourth Layer: 384 kernels of dimension 3x3x192.

- Fifth Layer: 256 kernels of dimension 3x3x192.
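The layer sizes above can be checked with a little arithmetic. The sketch below applies the usual output-size formula, `(input + 2 * padding - kernel) // stride + 1`, to trace the spatial size of the feature maps. The padding values and the 3x3, stride-2 pooling windows are assumptions based on common AlexNet implementations and are not stated in the list above; `output_size` and `alexnet_feature_sizes` are illustrative names.

```python
def output_size(input_size, kernel, stride, padding=0):
    """Spatial output size of a convolution or pooling layer."""
    return (input_size + 2 * padding - kernel) // stride + 1


def alexnet_feature_sizes(input_size=227):
    """Trace the feature-map width/height through the convolutional stack."""
    sizes = {}
    size = output_size(input_size, kernel=11, stride=4)      # conv1
    sizes["conv1"] = size
    size = output_size(size, kernel=3, stride=2)              # overlapping max pool
    sizes["pool1"] = size
    size = output_size(size, kernel=5, stride=1, padding=2)   # conv2 (padding assumed)
    sizes["conv2"] = size
    size = output_size(size, kernel=3, stride=2)              # overlapping max pool
    sizes["pool2"] = size
    for name in ("conv3", "conv4", "conv5"):                  # 3x3 kernels (padding 1 assumed)
        size = output_size(size, kernel=3, stride=1, padding=1)
        sizes[name] = size
    size = output_size(size, kernel=3, stride=2)              # final max pool
    sizes["pool5"] = size
    return sizes


if __name__ == "__main__":
    sizes = alexnet_feature_sizes()
    print(sizes)
    # Number of values handed to the first fully connected layer (256 kernels in conv5).
    print(sizes["pool5"] * sizes["pool5"] * 256)
```

Under these assumptions, the 227x227 input shrinks to a 6x6 map with 256 channels before the fully connected layers.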

Fully Connected Layers

The first two fully connected layers have 4096 neurons each; the third produces the 1000 values that are passed to the output layer.

Output Layer

The output layer is a softmax layer that outputs probabilities for the 1000 class labels.
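As a rough illustration of what a softmax layer computes, the sketch below turns a short list of raw scores into probabilities. Three scores stand in for the 1000 class scores, and subtracting the maximum before exponentiating is a standard numerical-stability trick rather than something described in this article.

```python
import math


def softmax(logits):
    """Convert raw class scores into probabilities that sum to 1."""
    largest = max(logits)                              # shift for numerical stability
    exps = [math.exp(z - largest) for z in logits]
    total = sum(exps)
    return [e / total for e in exps]


if __name__ == "__main__":
    probabilities = softmax([2.0, 1.0, 0.1])
    print(probabilities)
    print(sum(probabilities))
```

The class with the highest raw score always receives the highest probability, so the predicted label is the index of the largest output.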

Stochastic Gradient Descent

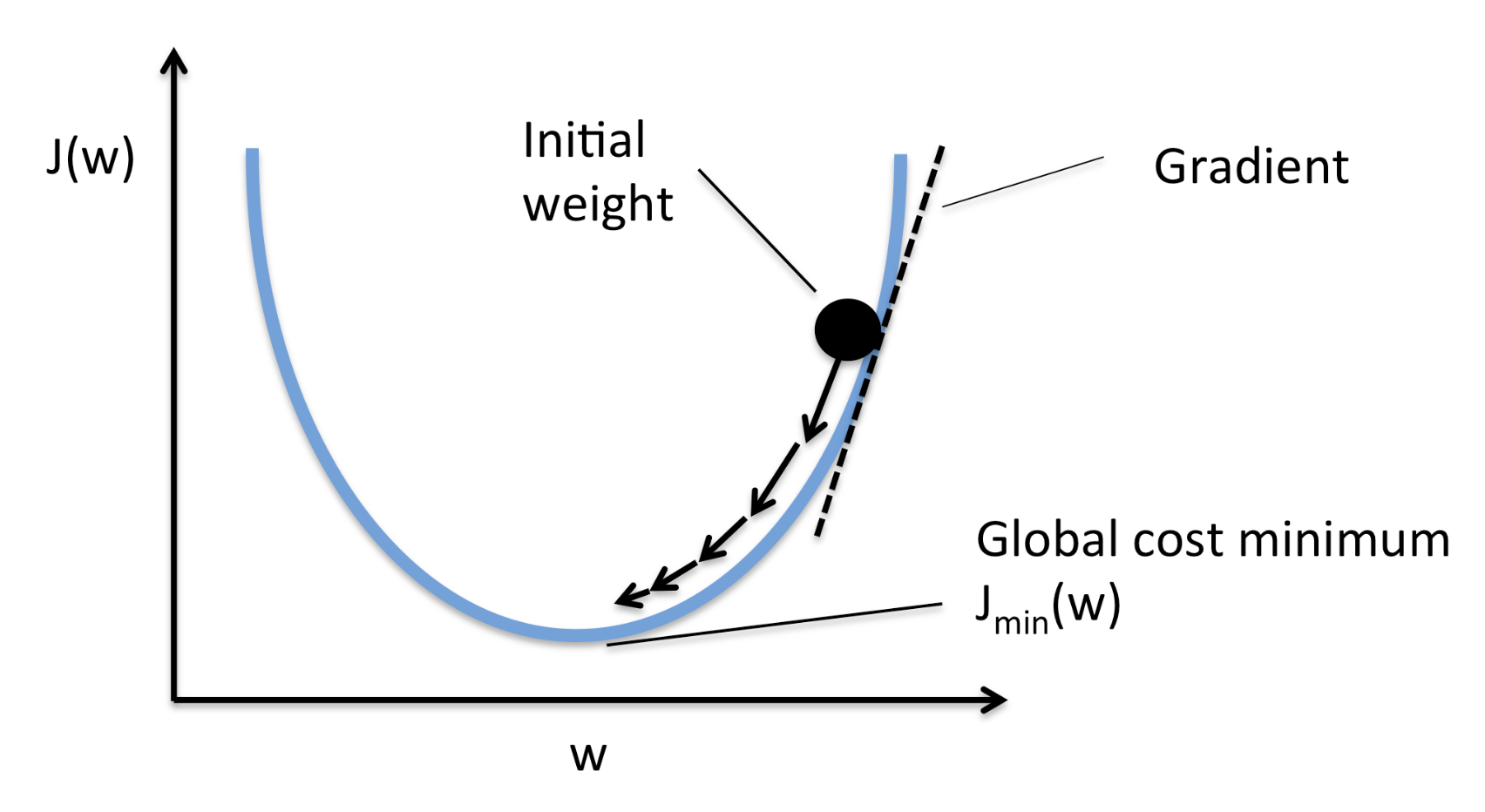

AlexNet uses stochastic gradient descent (SGD) with momentum for optimization. SGD is a variant of gradient descent. Instead of performing calculations on the whole dataset, which is computationally expensive, SGD randomly picks a batch of images, calculates the loss, and updates the weight parameters.

Momentum

Momentum helps accelerate SGD and dampens oscillations. It does this by adding a fraction of the previous update vector to the current update. This prevents sharp changes in direction and helps the model get past saddle points.
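The momentum update can be sketched on a one-parameter toy problem. The code below minimizes the hypothetical loss `(w - 3) ** 2`; the function names, learning rate, and step count are illustrative choices, and the momentum value of 0.9 is assumed from commonly cited AlexNet settings.

```python
def sgd_momentum_step(w, velocity, grad, lr=0.1, momentum=0.9):
    """One SGD step with momentum.

    The new velocity keeps a fraction of the previous update and adds the
    current gradient step; the weight then moves by that velocity.
    """
    new_velocity = momentum * velocity - lr * grad
    return w + new_velocity, new_velocity


def minimize_toy_loss(steps=300, start=0.0):
    """Minimize the toy loss (w - 3)^2, whose gradient is 2 * (w - 3)."""
    w, velocity = start, 0.0
    for _ in range(steps):
        grad = 2.0 * (w - 3.0)
        w, velocity = sgd_momentum_step(w, velocity, grad)
    return w


if __name__ == "__main__":
    print(minimize_toy_loss())
```

In real training the gradient comes from a randomly sampled mini-batch, so it is noisy; the velocity term averages out some of that noise across steps.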

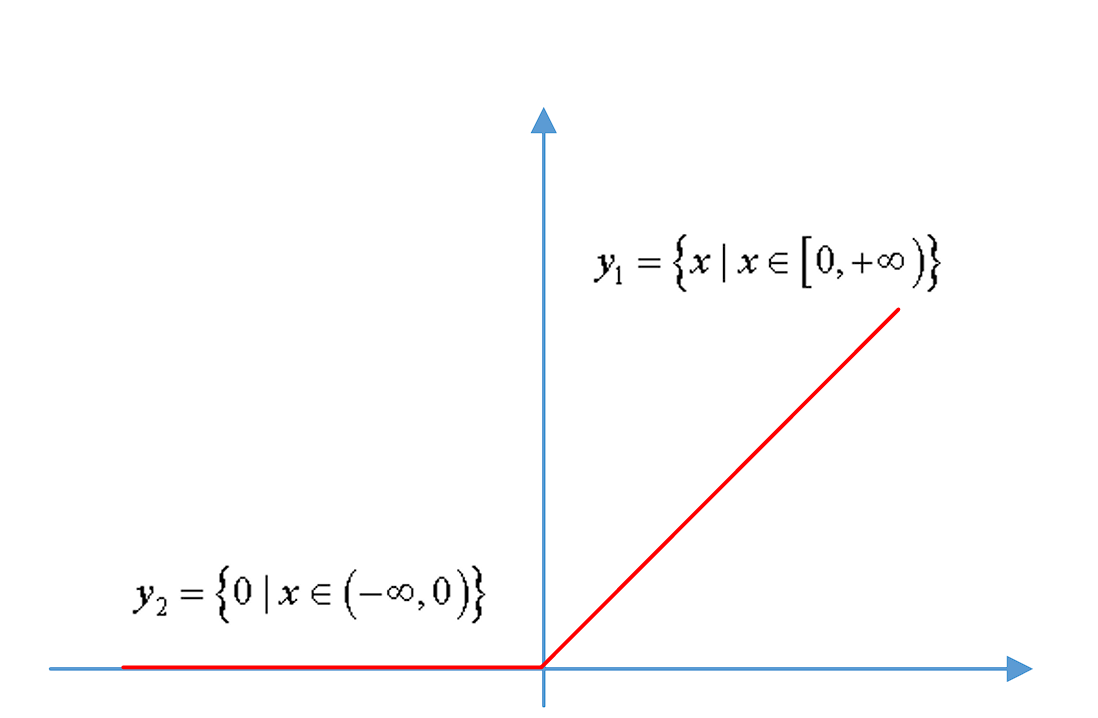

Weight Decay

Weight decay adds a penalty on the size of the weights. By penalizing larger weights, weight decay encourages the model to learn smaller weights that capture the underlying patterns in the data, rather than memorizing specific details, which leads to overfitting.
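One common way to implement this penalty is to add a term proportional to each weight to its gradient, which corresponds to an L2 penalty on the weights. The sketch below shows that formulation on plain lists; the function name is hypothetical, and the decay coefficient of 0.0005 is assumed from commonly cited AlexNet settings.

```python
def sgd_step_with_weight_decay(weights, grads, lr=0.01, weight_decay=0.0005):
    """Plain SGD step where each weight is also pulled toward zero.

    Adding weight_decay * w to the gradient is equivalent to adding
    (weight_decay / 2) * w^2 to the loss for every weight.
    """
    return [w - lr * (g + weight_decay * w) for w, g in zip(weights, grads)]


if __name__ == "__main__":
    weights = [1.0, -2.0, 0.5]
    zero_grads = [0.0, 0.0, 0.0]
    # With no data gradient, every weight shrinks by the factor (1 - lr * weight_decay).
    print(sgd_step_with_weight_decay(weights, zero_grads))
```

When the data gradient is zero, the update only shrinks the weights, so large weights survive only if the data keeps pushing them back up.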

Data Augmentation

Data augmentation artificially increases the size and diversity of the training data using transformations that don’t change the actual object in the image. This forces the model to learn more robust features and improves its ability to handle unseen images.

AlexNet used random cropping, horizontal flipping, and color jitter augmentations.
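A minimal sketch of random cropping and horizontal flipping is shown below, using a nested list as a stand-in for a single-channel image. The function names and the tiny 4x4 image are made up for illustration; a real pipeline would work on full RGB arrays and would also apply the color augmentation, which is omitted here.

```python
import random


def random_crop(image, crop_h, crop_w, rng):
    """Cut a crop_h x crop_w patch from a random location in the image."""
    top = rng.randint(0, len(image) - crop_h)
    left = rng.randint(0, len(image[0]) - crop_w)
    return [row[left:left + crop_w] for row in image[top:top + crop_h]]


def horizontal_flip(image):
    """Mirror the image left to right."""
    return [row[::-1] for row in image]


def augment(image, crop_h, crop_w, rng):
    """Random crop followed by a horizontal flip with probability 0.5."""
    patch = random_crop(image, crop_h, crop_w, rng)
    if rng.random() < 0.5:
        patch = horizontal_flip(patch)
    return patch


if __name__ == "__main__":
    rng = random.Random(0)  # fixed seed so the example is repeatable
    image = [[r * 4 + c for c in range(4)] for r in range(4)]
    for row in augment(image, 3, 3, rng):
        print(row)
```

Each call produces a slightly different view of the same image, so the model rarely sees exactly the same input twice.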

Innovations Introduced

ReLU

ReLU is a non-linear activation function with a simple derivative. It sets negative values to zero and passes positive values through unchanged.

Before ReLU, Sigmoid and Tanh functions were used, but they slowed down training and caused vanishing gradients. ReLU emerged as an alternative because it is faster and requires fewer computational resources during backpropagation: the derivative of Tanh is at most 1 and shrinks toward 0 for large inputs, whereas the derivative of ReLU is either 0 or 1.
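The difference in derivatives can be seen numerically. The sketch below defines ReLU and the derivatives of ReLU and Tanh, then multiplies the derivative across ten layers as a crude stand-in for backpropagation through a deep network. The input value of 2.0 and the depth of 10 are arbitrary illustrative choices.

```python
import math


def relu(x):
    """ReLU: pass positive inputs through, output zero otherwise."""
    return x if x > 0 else 0.0


def relu_grad(x):
    """Derivative of ReLU: 1 for positive inputs, 0 otherwise."""
    return 1.0 if x > 0 else 0.0


def tanh_grad(x):
    """Derivative of tanh: 1 - tanh(x)^2, which is at most 1."""
    return 1.0 - math.tanh(x) ** 2


if __name__ == "__main__":
    x, depth = 2.0, 10
    # Backpropagation multiplies one derivative per layer.
    print("tanh:", tanh_grad(x) ** depth)
    print("relu:", relu_grad(x) ** depth)
```

With Tanh the product shrinks toward zero as depth grows, while with ReLU it stays at 1 for active units, which is why gradients survive better in deep ReLU networks.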

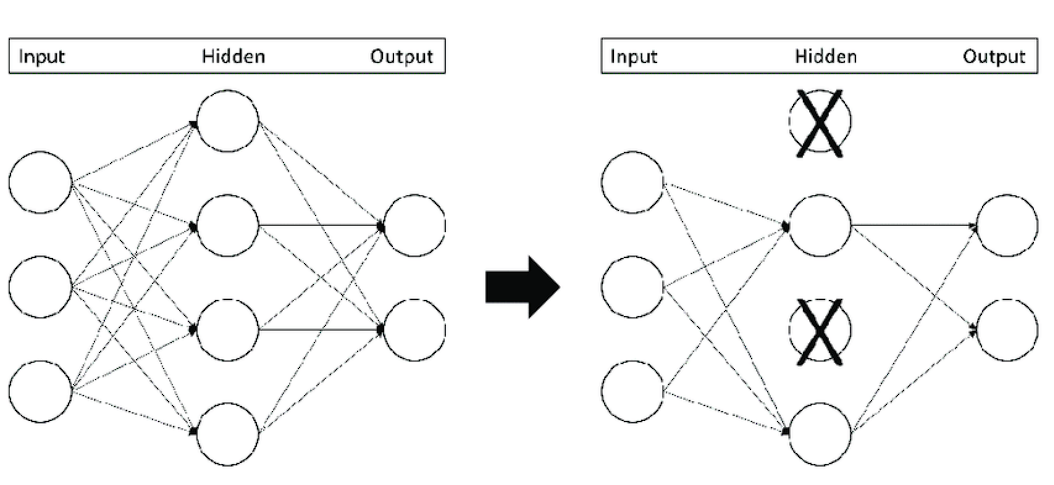

Dropout Layers

Dropout is a regularization technique (a method used to address overfitting) that “drops out”, or turns off, randomly selected neurons during training.

It is a simple method that prevents the model from overfitting. It does this by making the model learn more robust features and preventing it from concentrating features in a single area of the network.

Dropout increases the training time; however, the model learns to generalize better.
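A minimal sketch of dropout on a list of activations follows. It uses the "inverted dropout" variant that is common in current libraries, where surviving activations are scaled up during training so that nothing needs to change at test time; this is an assumption for illustration and not necessarily how the original AlexNet code handled scaling. The function name and the drop probability of 0.5 are also illustrative.

```python
import random


def dropout(activations, p=0.5, training=True, rng=None):
    """Randomly zero each activation with probability p during training.

    Survivors are divided by (1 - p) so the expected activation is unchanged.
    At test time the activations pass through untouched.
    """
    if not training or p == 0.0:
        return list(activations)
    rng = rng or random.Random()
    keep = 1.0 - p
    return [a / keep if rng.random() < keep else 0.0 for a in activations]


if __name__ == "__main__":
    rng = random.Random(42)  # fixed seed so the example is repeatable
    print(dropout([1.0] * 8, p=0.5, rng=rng))
    print(dropout([1.0] * 8, training=False))
```

Because a different random subset of neurons is silenced on every pass, no single neuron can rely on a specific partner being present.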

Overlapping Pooling

Pooling layers in CNNs summarize the outputs of neighboring groups of neurons in the same kernel map. Traditionally, the neighborhoods summarized by adjacent pooling units do not overlap. In overlapping pooling, however, adjacent pooling windows overlap with one another. This approach:

- Reduces the spatial size of the feature maps.

- Provides slight translational invariance.

- Makes it harder for the model to overfit.
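The difference between regular and overlapping pooling is easiest to see in one dimension. In the sketch below, a window of 2 with a stride of 2 gives non-overlapping pooling, while a window of 3 with a stride of 2 makes neighboring windows share one value. The window of 3 and stride of 2 are assumed to match the settings commonly cited for AlexNet; the input values are arbitrary.

```python
def max_pool_1d(values, window, stride):
    """Slide a window over the values and keep the maximum of each window."""
    last_start = len(values) - window
    return [max(values[i:i + window]) for i in range(0, last_start + 1, stride)]


if __name__ == "__main__":
    values = [1, 5, 2, 4, 9, 6, 0]
    print(max_pool_1d(values, window=2, stride=2))  # windows do not overlap
    print(max_pool_1d(values, window=3, stride=2))  # each window shares a value with the next
```

Both settings shrink the input by roughly half, but the overlapping version lets a strong activation on a window boundary influence two outputs instead of one.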

Performance and Impact

The AlexNet architecture dominated ILSVRC 2012 by achieving a top-5 error rate of 15.3%, significantly lower than the runner-up’s 26.2%.

This large reduction in error rate showed researchers the untapped potential of deep neural networks for handling large image datasets, and many deep learning models were developed in the years that followed.
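For readers unfamiliar with the metric, a prediction counts as correct under top-5 error when the true label appears among the five classes the model scores highest. The sketch below computes a top-k error rate on made-up scores; the function name and the toy data are illustrative only, with three classes standing in for the 1000 ImageNet classes.

```python
def top_k_error(scores_per_image, true_labels, k=5):
    """Fraction of images whose true label is not among the k highest scores."""
    errors = 0
    for scores, label in zip(scores_per_image, true_labels):
        ranked = sorted(range(len(scores)), key=lambda c: scores[c], reverse=True)
        if label not in ranked[:k]:
            errors += 1
    return errors / len(true_labels)


if __name__ == "__main__":
    scores = [[0.1, 0.5, 0.4], [0.7, 0.2, 0.1]]
    labels = [2, 1]
    print(top_k_error(scores, labels, k=1))
    print(top_k_error(scores, labels, k=2))
```

Allowing more guesses per image can only lower the error rate, which is why top-5 error is always at most the top-1 error.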

AlexNet-Inspired Networks

The success of AlexNet inspired the design and development of various neural network architectures. These include:

- VGGNet: Developed by K. Simonyan and A. Zisserman at Oxford, VGGNet incorporated ideas from AlexNet and was the next step after it.

- GoogLeNet (Inception): Introduced by Google, it further developed the ideas behind AlexNet’s architecture.

- ResNets: As networks grew deeper than AlexNet, they began to suffer from vanishing gradients. ResNet was developed to overcome this. These networks introduced residual learning, implemented through skip connections. A skip connection connects the activations of lower layers to higher layers by skipping some layers in between.

Applications of AlexNet

AlexNet was created for image classification. However, advances in its architecture and transfer learning (a technique in which a model trained on one task is repurposed for a new, related task) opened up a new set of possibilities for it. Moreover, its convolutional layers form the foundation for object detection models such as Fast R-CNN and Faster R-CNN, which professionals have applied in fields like autonomous driving and surveillance.

- Agriculture: AlexNet analyzes images to recognize the health and condition of plants, enabling farmers to take timely measures that improve crop yield and quality. Researchers have also used AlexNet for plant stress detection and for weed and pest identification.

- Disaster Management: Rescue teams use the model to assess disasters and make emergency relief decisions using images from satellites and drones.

- Medical Images: Doctors use AlexNet to help diagnose various medical conditions. For example, they use it to analyze X-rays, MRIs (particularly brain MRIs), and CT scans for disease detection, including various types of cancers and organ-specific illnesses. AlexNet also assists in diagnosing and monitoring eye diseases by analyzing retinal images.

Conclusion

AlexNet marked a significant milestone in the development of Convolutional Neural Networks (CNNs) by demonstrating their potential to handle large-scale image recognition tasks. Its key innovations include ReLU activation functions for faster convergence, dropout for controlling overfitting, and the use of GPUs to make training feasible. These contributions are still in use today. Moreover, the models developed after AlexNet took it as their groundwork and inspiration.