AI tools have evolved, and today they can generate entirely new text, code, images, and videos. Within a short period, ChatGPT has emerged as a leading example of generative artificial intelligence systems.

The results are quite convincing, as it is often hard to tell whether content was created by a human or a machine. Generative AI is particularly strong and applicable in three main areas: text, image, and video generation.

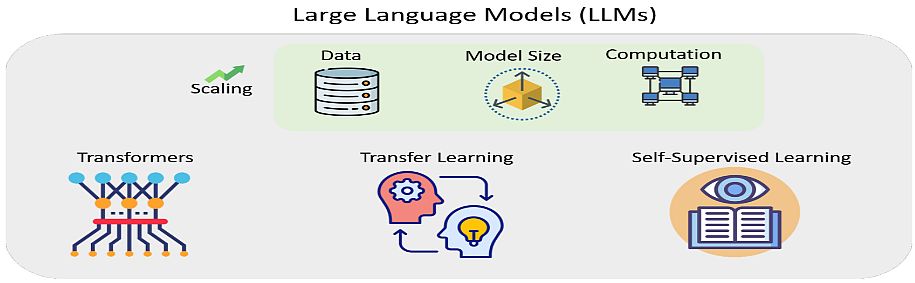

Large Language Models

Text generation as a tool is already being used in journalism (news production), education (production and misuse of materials), law (drafting contracts), medicine (diagnostics), science (search and generation of scientific papers), etc.

In 2018, OpenAI researchers and engineers published an original work on AI-based generative large language models. They pre-trained the models on a large and diverse corpus of text, in a process they call Generative Pre-Training (GPT).

The authors described how to improve language understanding performance in NLP by using GPT. They applied generative pre-training of a language model on a diverse corpus of unlabeled text, followed by discriminative fine-tuning on each specific task. This greatly reduces the need for human supervision and time-intensive hand-labeling.

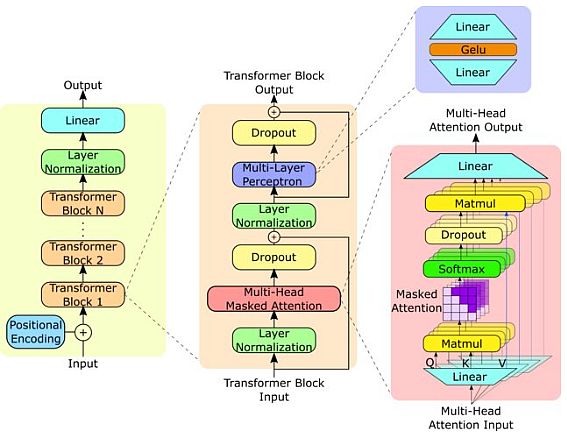

GPT models are based on the transformer deep learning neural network architecture. Their applications include various Natural Language Processing (NLP) tasks, such as question answering, text summarization, and sentiment analysis, without supervised pre-training.

Earlier ChatGPT models

The GPT-1 version was introduced in June 2018 as a method for language understanding using generative pre-training. Building on the success of this model, OpenAI refined it and released GPT-2 in February 2019.

The researchers trained GPT-2 to predict the next word based on 40 GB of text. Unlike other AI models and practices, OpenAI initially did not publish the full version of the model, only a smaller version. In May 2020, they introduced the GPT-3 model as the most advanced language model of its time, with 175 billion parameters.

GPT-2 model

GPT-2 is an unsupervised multi-task learner. Its advantages over GPT-1 were a larger dataset and more parameters, which let it learn a stronger language model. The training objective of the language model was formulated as P(output | input).
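To make this objective a little more concrete, it can be written in standard language-modeling notation. The symbols here ($x$ for a text, $s_i$ for its tokens, $n$ for its length) are notational assumptions chosen for illustration:

$$
p(x) = \prod_{i=1}^{n} p(s_i \mid s_1, \ldots, s_{i-1})
$$

A single task corresponds to estimating $p(\text{output} \mid \text{input})$. A multi-task learner additionally conditions on the task, $p(\text{output} \mid \text{input}, \text{task})$, where the task can itself be described in the text the model reads.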

GPT-2 is a large transformer-based language model, trained to predict the next word in a sentence. The original Transformer provides an encoder-decoder mechanism for detecting input-output dependencies, and GPT-2 uses only its decoder part. Today, the transformer is the gold-standard approach for generating text.

You don’t need to train GPT-2 (it is already pre-trained). GPT-2 is not just a language model like BERT; it can also generate text. Just give it the beginning of a phrase, and it will complete the text word by word.
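The word-by-word completion loop can be illustrated with a toy model. The sketch below is not GPT-2: it swaps the neural network for a simple bigram counter (a table of which word most often follows which), and the function names and the tiny corpus are hypothetical. It only shows the shape of the generation loop: predict the next word, append it, repeat.

```python
from collections import Counter, defaultdict

def train_bigram(text):
    """Count, for each word, which words follow it in the training text."""
    words = text.split()
    following = defaultdict(Counter)
    for current, nxt in zip(words, words[1:]):
        following[current][nxt] += 1
    return following

def complete(model, prompt, max_new_words=5):
    """Greedy completion: repeatedly append the most frequent next word."""
    words = prompt.split()
    if not words:
        return prompt
    for _ in range(max_new_words):
        candidates = model.get(words[-1])
        if not candidates:
            break  # the last word was never followed by anything in training
        words.append(candidates.most_common(1)[0][0])
    return " ".join(words)

# Hypothetical toy corpus; a real model is trained on gigabytes of text.
corpus = "the cat sat on the mat . the cat sat on the sofa ."
model = train_bigram(corpus)
print(complete(model, "the cat", max_new_words=3))  # the cat sat on the
```

A real language model conditions on the whole preceding context rather than only the last word, and it usually samples from the predicted distribution instead of always taking the top word.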

At first, recurrent neural networks (RNNs), in particular LSTMs, were mainstream in this area. But after Google researchers introduced the Transformer architecture in the summer of 2017, transformer-based models such as GPT-2 gradually began to prevail in conversational tasks.

GPT-2 Model Features

To improve performance, in February 2019 OpenAI scaled up its GPT model by 10 times. They trained it on an even larger volume of text: 8 million web pages (a total of 40 GB of text).

The resulting GPT-2 network was one of the largest neural networks at the time, with an unprecedented 1.5 billion parameters. Other features of GPT-2 include:

- GPT-2 had 48 layers and used 1600-dimensional vectors for word embedding.

- Large vocabulary of 50,257 tokens.

- Larger batch size of 512 and a larger context window of 1024 tokens.

- Researchers performed normalization at the input of each sub-block. Moreover, they added an additional normalization layer after the final self-attention block.
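As a rough sanity check, the layer count, embedding width, vocabulary size, and context window listed above approximately account for the 1.5 billion parameter figure. The sketch below is a back-of-the-envelope estimate, not OpenAI's own accounting: the function name is hypothetical, and it assumes a standard transformer block with a feed-forward layer four times the embedding width, ignoring biases and normalization parameters.

```python
def approx_transformer_params(n_layers, d_model, vocab_size, context_len):
    """Approximate parameter count of a decoder-only transformer."""
    # Assumption: each block has about 4*d^2 attention weights
    # (query, key, value, and output projections) plus about 8*d^2
    # feed-forward weights (d -> 4d -> d). Biases and norms are ignored.
    per_block = 12 * d_model ** 2
    # Token embeddings plus learned position embeddings.
    embeddings = vocab_size * d_model + context_len * d_model
    return n_layers * per_block + embeddings

total = approx_transformer_params(
    n_layers=48, d_model=1600, vocab_size=50257, context_len=1024
)
print(f"{total:,}")  # 1,556,609,600
```

The estimate lands at about 1.56 billion, in line with the reported 1.5 billion; almost all of it comes from the 48 transformer blocks rather than from the embeddings.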

As a result, GPT-2 was able to generate whole pages of coherent text. It also reproduced the names of characters over the course of a story, quotes, references to related events, and so on.

Producing coherent text of this quality is impressive in itself, but there is something more interesting here. Without any additional training, GPT-2 immediately showed results close to the state of the art on many conversational tasks.

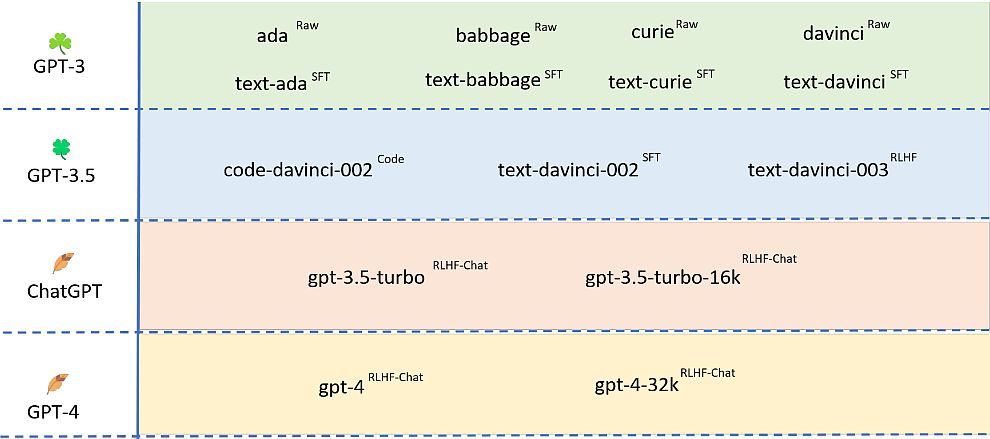

GPT-3

The GPT-3 release took place in May 2020, and beta testing began in July 2020. All three GPT generations use artificial neural networks, which OpenAI trained on raw text.

At the heart of the Transformer is the attention function, which weighs how relevant each word in the context is when the model calculates the probability of the next word. The algorithm learns contextual relationships between words in the texts provided as training examples and then generates new text.
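The attention function can be sketched in a few lines. The example below is a minimal, dependency-free version of scaled dot-product attention, the form described in the original Transformer paper; the vectors are hypothetical toy values, and real models add learned projections, multiple heads, and a causal mask so a word cannot look at later words.

```python
import math

def softmax(scores):
    """Turn raw scores into positive weights that sum to 1."""
    peak = max(scores)  # subtract the max for numerical stability
    exps = [math.exp(s - peak) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]

def attention(queries, keys, values):
    """Scaled dot-product attention over lists of equal-length vectors."""
    scale = math.sqrt(len(keys[0]))
    outputs = []
    for q in queries:
        # Similarity of this query to every key in the context.
        scores = [sum(qi * ki for qi, ki in zip(q, k)) / scale for k in keys]
        weights = softmax(scores)
        # Weighted average of the value vectors.
        mixed = [sum(w * v[j] for w, v in zip(weights, values))
                 for j in range(len(values[0]))]
        outputs.append(mixed)
    return outputs

# Hypothetical 2-dimensional vectors for a three-word context.
keys = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]
values = [[1.0, 0.0], [0.0, 1.0], [0.5, 0.5]]
queries = [[4.0, 0.0]]
result = attention(queries, keys, values)
print(result)
```

The query here points along the first dimension, so the first and third words receive most of the weight and the output leans toward their values.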

- GPT-3 shares the same architecture as the earlier GPT-2 algorithm. The main difference is that the number of parameters was increased to 175 billion. OpenAI trained GPT-3 on 570 gigabytes of text, or 1.5 trillion words.

- The training materials included the entire Wikipedia, two datasets of books, and the second version of the WebText dataset.

- The GPT-3 algorithm can create texts of different forms, styles, and purposes: magazine and book stories (while imitating the style of a particular author), songs and poems, press releases, and technical manuals.

- GPT-3 was tested in practice when it wrote several essays for the UK newspaper The Guardian. The program can also solve anagrams, solve simple arithmetic examples, and generate tablatures and computer code.

ChatGPT – The latest GPT-4 model

OpenAI released its latest version, the GPT-4 model, on March 14, 2023, together with its publicly accessible ChatGPT bot, and sparked an AI revolution.

GPT-4 New Features

Comparing ChatGPT 3 vs 4, the new model processes both images and text as input, whereas earlier versions could only handle text.

The new version has increased the context window available through the API from 4,096 to 32,000 tokens. This is a major improvement, as it enables the creation of increasingly complex and specialized texts and conversations. Also, GPT-4 reportedly has a larger training set than GPT-3, up to 45 TB.

OpenAI trained the model on a large amount of multimodal data, including images and text from multiple domains and sources. They sourced data from various public datasets, and the training objective is to predict the next token in a document, given a sequence of previous tokens and images.

- In addition, GPT-4 improves problem-solving capabilities by offering greater responsiveness, with text generation that imitates the style and tone of the context.

- New knowledge cutoff: the message that the information collected by ChatGPT ends in September 2021 is becoming a thing of the past. The new model includes information up to April 2023, providing a much more current query context.

- Better instruction following: the model works better than previous models on tasks that require carefully following instructions, such as generating specific formats.

- Multiple tools in a chat: the updated GPT-4 chatbot chooses the appropriate tools from the drop-down menu.

ChatGPT Performance

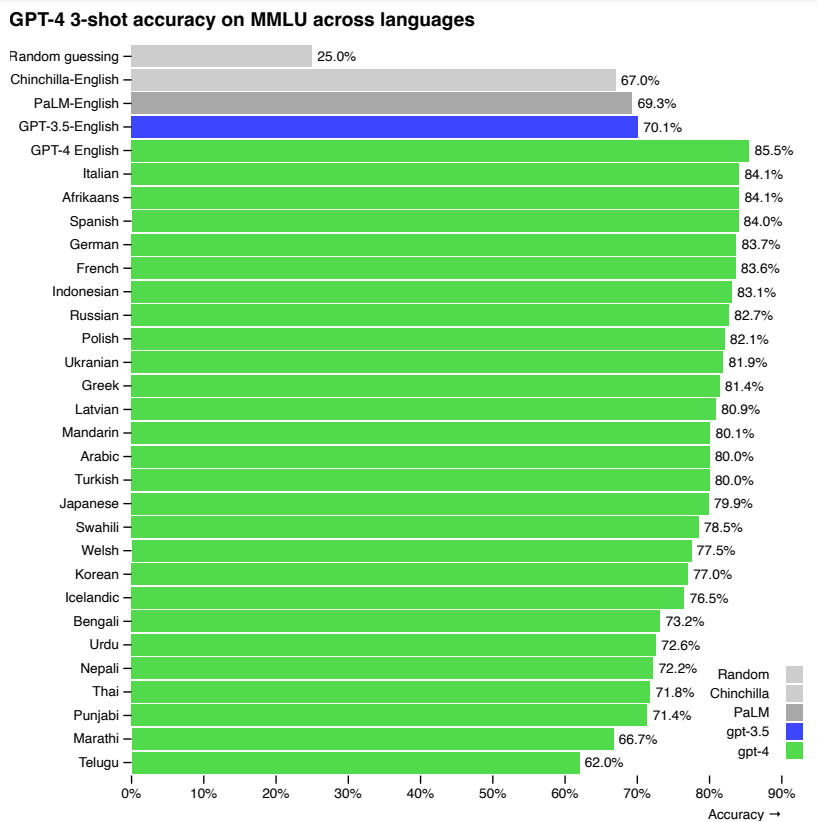

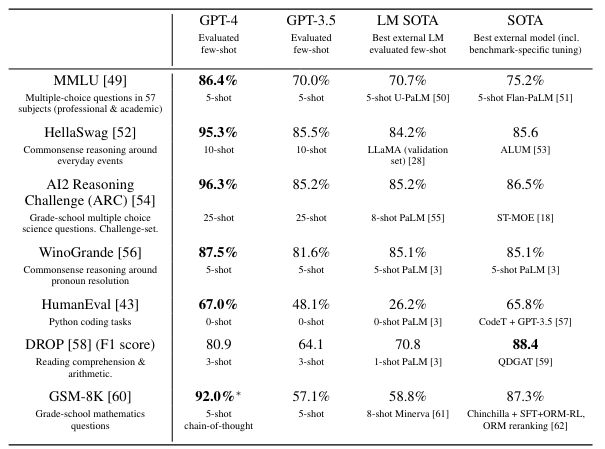

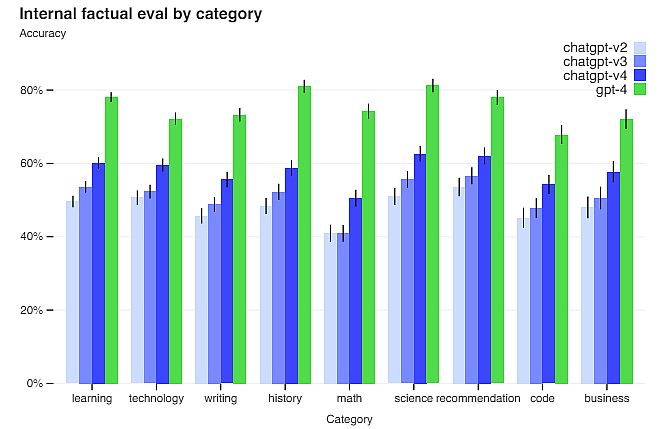

GPT-4 (ChatGPT) exhibits human-level performance on the majority of professional and academic exams. Notably, it passes a simulated version of the Uniform Bar Examination with a score in the top 10% of test takers.

The model’s capabilities on exams originate primarily from the pre-training process and do not depend on RLHF. On multiple-choice questions, both the base GPT-4 model and the RLHF model perform equally well.

On a dataset of 5,214 prompts submitted to ChatGPT and the OpenAI API, the responses generated by GPT-4 were preferred over the GPT-3.5 responses on 70.2% of prompts.

GPT-4 accepts prompts consisting of both images and text, which lets the user specify any vision or language task. Moreover, the model generates text outputs given inputs consisting of arbitrarily interlaced text and images. Over a range of domains (including images), ChatGPT generates content superior to that of its predecessors.

How to use ChatGPT 4?

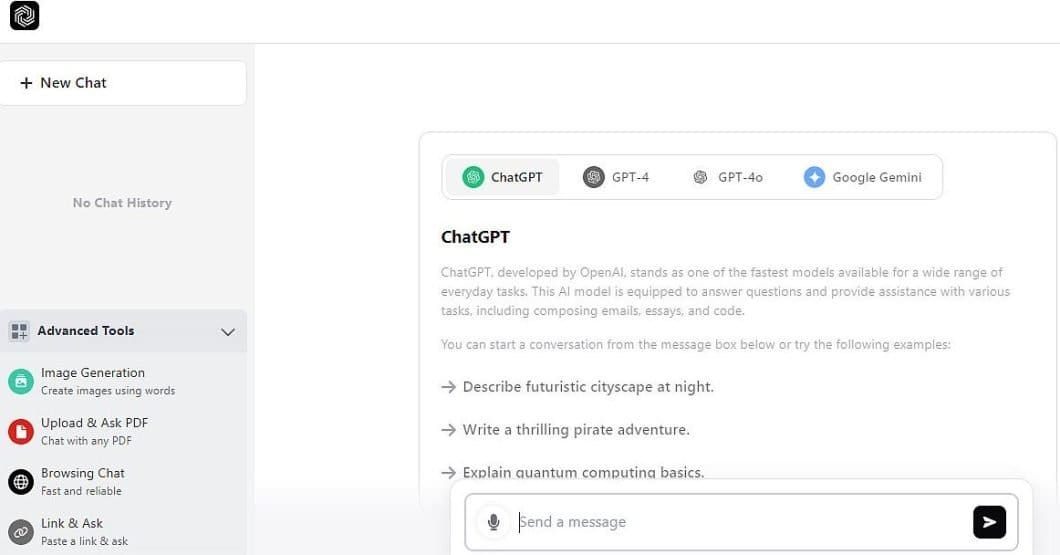

You can access ChatGPT here, and its interface is simple and clear. Basic usage is free, while the Plus plan costs $20 per month. There are also Team and Enterprise plans. For all of them, you need to create an account.

Here are the main ChatGPT 4 options, with the screenshot as an example:

- Chat bar and sidebar: The chat bar with the “Send a message” button is located at the bottom of the screen. Once you register and log in, ChatGPT remembers your previous conversations and responds with context.

- Account (if registered): Clicking on your name in the upper right corner gives you access to your account information, including settings, the option to log out, get help, and customize ChatGPT.

- Chat history: In Advanced tools (left sidebar) you can access your past GPT-4 conversations. You can also share your chat history with others, turn off chat history, delete individual chats, or delete your entire chat history.

- Your prompts: The questions or prompts you send the AI chatbot appear at the bottom of the chat window, with your account details at the top right.

- ChatGPT’s responses: ChatGPT responds to your queries, and the responses appear on the main screen. You can also copy the text to your clipboard to paste it elsewhere and provide feedback on whether the response was accurate.

Limitations of ChatGPT

Despite its capabilities, GPT-4 has similar limitations to earlier GPT models. Most importantly, it is still not fully reliable (it “hallucinates” facts). You should be careful when using ChatGPT outputs, particularly in high-stakes contexts, and follow a protocol suited to the specific application.

GPT-4 significantly reduces hallucinations relative to earlier GPT-3.5 models (which have themselves been improving with continued iteration). GPT-4 scores 19 percentage points higher than the latest GPT-3.5 on OpenAI’s internal factuality evaluations.

GPT-4 as originally released generally lacks knowledge of events that occurred after its pre-training data cuts off in September 2021, and it does not learn from its experience. It can sometimes make simple reasoning errors that do not seem to comport with its competence across so many domains.

Also, GPT-4 can be confidently wrong in its predictions, not double-checking its output when it is likely to make a mistake. GPT-4 has various biases in its outputs that OpenAI is still working to characterize and manage.

OpenAI intends to make GPT-4 have reasonable default behaviors that reflect a wide swath of users’ values. To that end, they will allow the system to be customized within some broad bounds and gather public feedback on improving it.

What’s Next?

GPT-4, the model behind ChatGPT, is a large multimodal model capable of processing image and text inputs and producing text outputs. The model can be used in a wide range of applications, such as dialogue systems, text summarization, and machine translation. As such, it will be the subject of substantial interest and progress in the coming years.
