It doesn’t take much to get ChatGPT to make a factual mistake. My son is doing a report on US presidents, so I figured I’d help him out by looking up a few biographies. I tried asking for a list of books about Abraham Lincoln, and it did a pretty good job:

Number 4 isn’t right. Garry Wills famously wrote “Lincoln at Gettysburg,” and Lincoln himself wrote the Emancipation Proclamation, of course, but it’s not a bad start. Then I tried something harder, asking instead about the much more obscure William Henry Harrison, and it gamely provided a list, nearly all of which was wrong.

Numbers 4 and 5 are correct; the rest don’t exist or are not authored by those people. I repeated the exact same exercise and got slightly different results:

This time numbers 2 and 3 are correct, and the other three are not actual books or were not written by those authors. Number 4, “William Henry Harrison: His Life and Times,” is a real book, but it’s by James A. Green, not by Robert Remini, a well-known historian of the Jacksonian age.

I called out the error, and ChatGPT eagerly corrected itself and then confidently told me the book was in fact written by Gail Collins (who wrote a different Harrison biography), and then went on to say more about the book and about her. I finally revealed the truth, and the machine was happy to run with my correction. Then I lied absurdly, saying that during their first hundred days presidents have to write a biography of some former president, and ChatGPT called me out on it. I then lied subtly, incorrectly attributing authorship of the Harrison biography to historian and writer Paul C. Nagel, and it bought my lie.

When I asked ChatGPT if it was sure I was not lying, it claimed that it’s just an “AI language model” and doesn’t have the ability to verify accuracy. However, it modified that claim by saying, “I can only provide information based on the training data I have been provided, and it appears that the book ‘William Henry Harrison: His Life and Times’ was written by Paul C. Nagel and published in 1977.”

This isn’t true.

Words, Not Facts

It may seem from this interaction that ChatGPT was given a library of facts, including incorrect claims about authors and books. After all, ChatGPT’s maker, OpenAI, claims it trained the chatbot on “vast amounts of data from the internet written by humans.”

However, it was almost certainly not given the names of a bunch of made-up books about one of the most mediocre presidents. In a way, though, this false information is indeed based on its training data.

As a computer scientist, I often field complaints that reveal a common misconception about large language models like ChatGPT and its older brethren GPT3 and GPT2: that they’re some kind of “super Googles,” or digital versions of a reference librarian, looking up answers to questions from some infinitely large library of facts, or smooshing together pastiches of stories and characters. They don’t do any of that; at least, they weren’t explicitly designed to.

Sounds Good

A language model like ChatGPT, which is more formally known as a “generative pre-trained transformer” (that’s what the G, P, and T stand for), takes in the current conversation, forms a probability for all of the words in its vocabulary given that conversation, and then chooses one of them as the likely next word. Then it does that again, and again, and again, until it stops.
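The loop described above can be sketched in a few lines of Python. This is only a toy under loud assumptions: the table `NEXT_WORD_PROBS`, its probabilities, and the function `generate` are invented for illustration, and the toy looks only at the previous word, whereas a real model conditions on the whole conversation and learns its probabilities from training text.

```python
import random

# Hypothetical toy "model": for each previous word, a probability for each
# candidate next word. All numbers here are invented for illustration only.
NEXT_WORD_PROBS = {
    "<start>": {"the": 1.0},
    "the": {"book": 0.6, "biography": 0.4},
    "book": {"was": 1.0},
    "biography": {"was": 1.0},
    "was": {"written": 0.7, "published": 0.3},
    "written": {"by": 1.0},
    "published": {"by": 1.0},
    # Any historian's name is a plausible continuation; nothing checks truth.
    "by": {"Green": 0.4, "Remini": 0.35, "Nagel": 0.25},
    "Green": {"<stop>": 1.0},
    "Remini": {"<stop>": 1.0},
    "Nagel": {"<stop>": 1.0},
}


def generate(rng, max_words=20):
    """Pick one word at a time, weighted by probability, until a stop token."""
    words = []
    previous = "<start>"
    for _ in range(max_words):
        probs = NEXT_WORD_PROBS[previous]
        candidates = list(probs)
        weights = [probs[word] for word in candidates]
        # Sample the next word in proportion to its probability.
        next_word = rng.choices(candidates, weights=weights, k=1)[0]
        if next_word == "<stop>":
            break
        words.append(next_word)
        previous = next_word
    return " ".join(words)


if __name__ == "__main__":
    # Each run may name a different author, much like the repeated queries.
    for _ in range(3):
        print(generate(random.Random()))
```

Every sentence this toy produces is grammatical and plausible, yet the author it names depends only on the weights and the random draw, not on who actually wrote anything.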

So it doesn’t have facts, per se. It just knows what word should come next. Put another way, ChatGPT doesn’t try to write sentences that are true. But it does try to write sentences that are plausible.

When I talk privately with colleagues about ChatGPT, they often point out how many factually untrue statements it produces and dismiss it. To me, the idea that ChatGPT is a flawed data retrieval system is beside the point. People have been using Google for the past two and a half decades, after all. There’s a pretty good fact-finding service out there already.

In fact, the only way I was able to verify whether all those presidential book titles were accurate was by Googling and then verifying the results. My life wouldn’t be that much better if I got those facts in conversation, instead of the way I’ve been getting them for almost half of my life, by retrieving documents and then doing a critical analysis to see if I can trust the contents.

Improv Partner

On the other hand, if I can talk to a bot that will give me plausible responses to things I say, it would be useful in situations where factual accuracy isn’t all that important. A few years ago a student and I tried to create an “improv bot,” one that would respond to whatever you said with a “yes, and” to keep the conversation going. We showed, in a paper, that our bot was better at “yes, and-ing” than other bots at the time, but in AI, two years is ancient history.

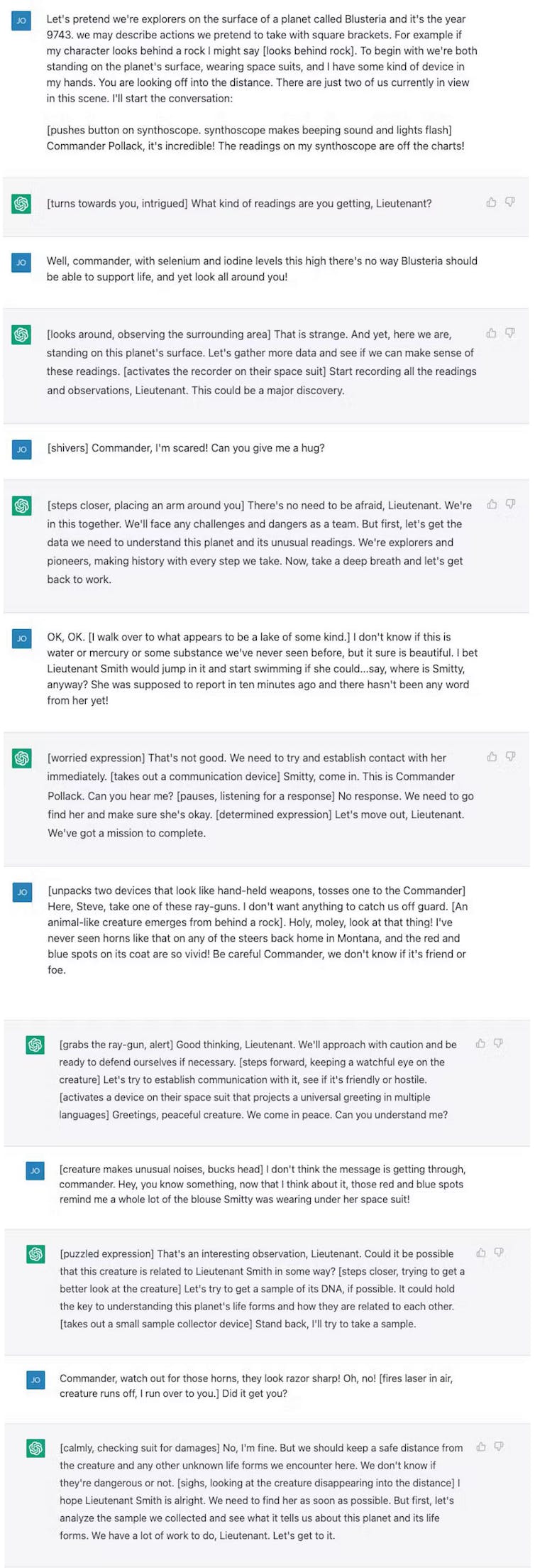

I tried out a dialogue with ChatGPT (a science fiction space explorer scenario) that isn’t unlike what you’d find in a typical improv class. ChatGPT is way better at “yes, and-ing” than what we did, but it didn’t really heighten the drama at all. I felt as if I was doing all the heavy lifting.

After a few tweaks I got it to be a little more involved, and at the end of the day, I felt that it was a pretty good exercise for me, who hasn’t done much improv since I graduated from college over 20 years ago.

Sure, I wouldn’t want ChatGPT to appear on “Whose Line Is It Anyway?” and this isn’t a great “Star Trek” plot (though it’s still less problematic than “Code of Honor”), but how many times have you sat down to write something from scratch and found yourself terrified by the empty page in front of you? Starting with a bad first draft can break through writer’s block and get the creative juices flowing, and ChatGPT and large language models like it seem like the right tools to aid in these exercises.

And for a machine that’s designed to produce strings of words that sound as good as possible in response to the words you give it, and not to provide you with information, that seems like the right use for the tool.

This article is republished from The Conversation under a Creative Commons license.