Laptop imaginative and prescient (CV) is a quickly evolving space in synthetic intelligence (AI), permitting machines to course of advanced real-world visible information in numerous domains like healthcare, transportation, agriculture, and manufacturing. Trendy laptop imaginative and prescient analysis is producing novel algorithms for numerous functions, akin to facial recognition, autonomous driving, annotated surgical movies, and so on.

On this regard, this text will discover the next matters:

- The state of laptop imaginative and prescient in 2024

- What are the preferred laptop imaginative and prescient duties?

- Future tendencies and challenges

About Us: Viso.ai supplies the world’s main end-to-end laptop imaginative and prescient platform Viso Suite. Our answer permits main firms to make use of a wide range of machine studying fashions and duties for his or her laptop imaginative and prescient methods. Get a demo right here.

State of Laptop Imaginative and prescient Duties in 2024

The sphere of laptop imaginative and prescient at the moment includes superior AI algorithms and architectures, akin to convolutional neural networks (CNNs) and imaginative and prescient transformers (ViTs), to course of, analyze, and extract related patterns from visible information.

Nonetheless, a number of rising tendencies are reshaping the CV panorama to make it extra accessible and simpler to implement. The next checklist provides a short overview of those developments.

- Generative AI: Architectures like Generative Adversarial Networks (GANs) and Variational Autoencoders (VAEs) are giving rise to generative fashions that may synthesize new photos primarily based on enter information distributions. The expertise can assist you resolve information annotation points and increase information samples for higher mannequin coaching.

- Edge Computing: With the expansion in information quantity, processing visible information on the edge has change into a vital idea for the adoption of laptop imaginative and prescient. Edge AI includes processing information close to the supply. Subsequently, edge gadgets like servers or computer systems are related to cameras and run AI fashions in real-time functions.

- Actual-Time Laptop Imaginative and prescient: With the assistance of superior AI {hardware}, laptop imaginative and prescient options can analyze real-time video feeds to supply important insights. The commonest instance is safety analytics, the place deep studying fashions analyze CCTV footage to detect theft, visitors violations, or intrusions in real-time.

- Augmented Actuality: As Meta and Apple enter the augmented actuality area, the position of CV fashions in understanding bodily environments will witness breakthrough progress, permitting customers to mix the digital world with their environment.

- 3D-Imaging: Developments in CV modeling are serving to consultants analyze 3D photos by precisely capturing depth and distance data. As an example, CV algorithms can perceive Gentle Detection and Ranging (LIDAR) information for enhanced perceptions of the setting.

- Few-Shot vs. Zero-Shot Studying: Few-shot and zero-shot studying paradigms are revolutionizing machine studying (ML) growth by permitting you to coach CV fashions utilizing only some to no labeled samples.

Let’s now deal with the preferred laptop imaginative and prescient duties you possibly can carry out utilizing the newest CV fashions.

The next sections focus on picture classification, object detection, semantic and occasion segmentation, pose estimation, and picture technology duties. The aim is to present you an concept of contemporary laptop imaginative and prescient algorithms and functions.

Picture Classification

Picture classification duties contain CV fashions categorizing photos into user-defined courses for numerous functions. For instance, a classification mannequin will classify the picture under as a tiger.

The checklist under mentions a few of the greatest picture classification fashions:

BLIP

Bootstrapping Language-Picture Pre-training (BLIP) is a vision-language mannequin that lets you caption photos, retrieve photos, and carry out visual-question answering (VQA).

The mannequin achieves state-of-the-art (SOTA) outcomes utilizing a filter that removes noisy information from artificial captions.

The underlying structure includes an encoder-decoder structure that makes use of a bootstrapping technique to filter out noisy captions.

ResNet

Residual Neural Networks (ResNets) use the CNN structure to be taught advanced visible patterns. Essentially the most vital good thing about utilizing ResNets is that they help you construct dense, deep studying networks with out inflicting vanishing gradient issues.

Normally, deep neural networks with a number of layers fail to replace the weights of the preliminary layers. That is the results of very small gradients throughout backpropagation. ResNets circumvent this challenge by skipping just a few layers and studying a residual perform throughout coaching.

VGGNet

Very Deep Convolutional Networks, additionally known as VGGNet, is a kind of a CNN-based mannequin. VGGNet makes use of 3×3 filters to extract elementary options from picture information.

The mannequin secured first and second positions within the ImageNet Giant Scale Visible Recognition Problem (ILSVRC) 2014.

Actual-Life Purposes of Classification

The classification fashions help you use CV methods in numerous domains, together with:

- Laptop imaginative and prescient in logistics and stock administration to categorise stock objects for detailed evaluation.

- Laptop imaginative and prescient in healthcare to categorise medical photos, akin to X-rays and CT scans, for illness analysis.

- Laptop imaginative and prescient in manufacturing to detect faulty merchandise for high quality management.

Object Detection and Localization

Whereas picture classification categorizes a whole picture, object detection, and localization determine particular objects inside a picture.

For instance, CV fashions can detect a number of objects, akin to a chair and a desk, in a single picture. That is completed by drawing bounding containers or polygons across the object of curiosity.

Widespread object detection fashions embody:

Quicker R-CNN

Quicker R-CNN is a deep studying algorithm that follows a two-stage structure. For stage one, the mannequin makes use of Area Proposal Networks (RPN) primarily based on convolutional layers to determine related object areas for classification.

Within the second stage, Quick R-CNN makes use of the area proposals for detecting objects. As well as, the RPN and Quick R-CNN parts type a single community utilizing the novel consideration mechanism that permits the mannequin to concentrate to important areas for detection.

YOLO v7

You Solely Look As soon as (YOLO) is a well-liked object-detection algorithm that makes use of a deep convolutional community to detect objects in a single go. Not like Quicker R-CNN, it will possibly analyze and predict object areas while not having proposal areas.

YOLOv7 is a latest iteration of the YOLO community. This iteration improves upon all of the earlier variations by giving larger accuracy and quicker outcomes. The machine studying mannequin is useful in real-time functions the place you need immediate outcomes.

SSD

The Single-Shot Detector (SSD) mannequin breaks down bounding containers from ground-truth photos into a number of default containers with completely different facet ratios. The containers seem in a number of areas of a characteristic map having completely different scales.

The structure permits for extra accessible coaching and integration with object detection methods at scale.

Actual-Life Purposes of Object Detection

Actual-world functions for object detection embody:

- Autonomous driving, the place the car should determine completely different objects on the highway for navigation.

- Stock administration on cabinets and in shops to detect shortages.

- Anomaly detection and menace identification in surveillance utilizing detection and localization CV fashions.

Semantic Segmentation

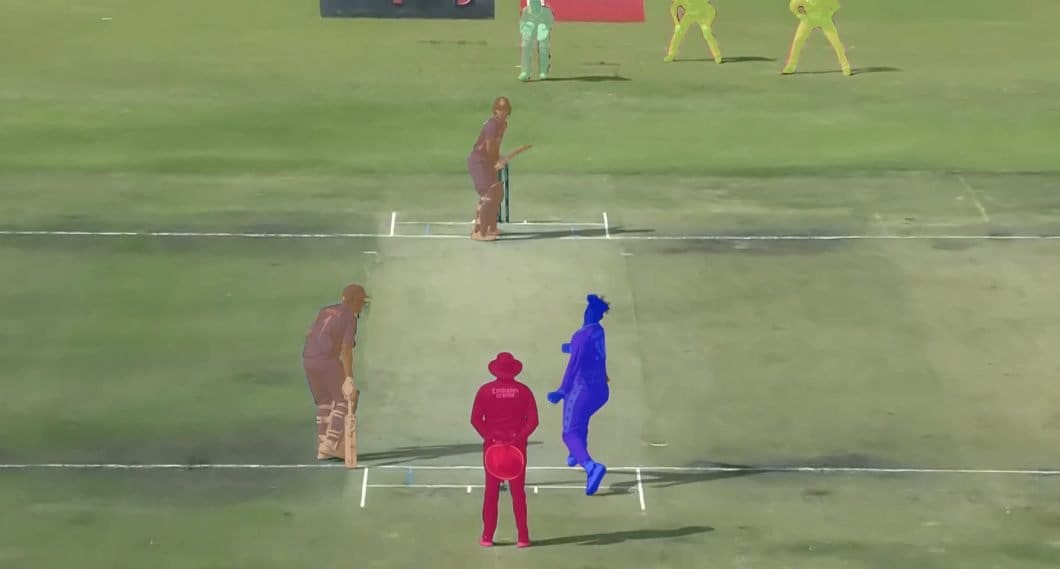

Semantic segmentation goals to determine every pixel inside a picture for a extra detailed categorization. The strategy produces extra exact classification by assigning a label to an object’s particular person pixels.

Widespread semantic segmentation fashions embody:

FastFCN

Quick Totally Convolutional Community (FastFCN) improves upon the earlier FCN structure for semantic segmentation. That is completed by introducing a Joint Pyramid Upsampling (JPU) technique that reduces the computation value of extracting characteristic maps.

DeepLab

The DeepLab system overcomes the challenges of conventional deep convolutional networks (DCNNs). These DCNNs have decrease characteristic resolutions, an lack of ability to seize objects at a number of scales, and inferior localization accuracy.

DeepLab addresses them by means of atrous convolutions, Atrous Spatial Pyramid Pooling (ASPP), and Conditional Random Fields (CRF).

U-Web

The first function of the U-Web structure was to phase biomedical photos, which requires excessive localization accuracy. Additionally, the dearth of annotated information samples is a major problem that forestalls you from efficient mannequin coaching.

U-Web solves these issues by modifying the FCN structure by means of upsampling operators that improve picture decision and mix the upsampled output with high-resolution options for higher localization.

Actual-Life Purposes of Semantic Segmentation

Semantic segmentation finds functions in various fields, akin to:

- In medical picture analysis to help docs in analyzing CT scans in additional element.

- In scene segmentation to determine particular person objects in a selected scene.

- In catastrophe administration to assist satellites detect broken areas ensuing from flooding.

Occasion Segmentation

Occasion segmentation identifies every occasion of the identical object, making it extra granular than semantic segmentation. For instance, if there are three elephants in a picture, occasion segmentation will individually determine and spotlight every elephant, treating them as distinct cases.

The next are just a few widespread occasion segmentation fashions:

SAM

Phase Something Mannequin (SAM) is an occasion segmentation framework by Meta AI that lets you phase any object by means of clickable prompts. The mannequin follows the zero-shot studying paradigm, making it appropriate for classifying novel objects in a picture.

The mannequin makes use of the encoder-decoder structure, the place the first encoder computes picture embeddings, and a immediate encoder takes person prompts as enter. A masks decoder works to grasp the encodings to foretell the ultimate output.

Masks R-CNN

Masks Area-based convolutional neural networks (Masks R-CNNs) lengthen the quicker R-CNN structure. They do that by together with one other department that predicts the segmentation masks of areas of curiosity (ROI).

In quicker R-CNN, one department classifies object areas primarily based on ground-truth bounding containers, and the opposite predicts bounding field offsets. Quicker R-CNN provides these offsets to the categorised areas to make sure predicted bounding containers come nearer to ground-truth bounding containers.

Including the third department improves generalization efficiency and boosts the coaching course of.

Actual-Life Purposes of Occasion Segmentation

Occasion segmentation finds its utilization in numerous laptop imaginative and prescient functions, together with:

- Aerial imaging for geospatial evaluation, to detect transferring objects (automobiles, and so on.) or constructions like streets and buildings.

- Digital try-on in retail, to let clients strive completely different wearables just about.

- Medical analysis, to determine completely different cases of cells for detecting most cancers.

Pose Estimation

Pose estimation identifies key semantic factors on an object to trace orientation. For instance, it helps determine human physique actions by marking key factors akin to shoulders, proper arm, left arm, and so on.

Mainstream fashions for pose estimation duties embody:

OpenPose

OpenPose is a real-time multi-person 2D bottom-up pose detection mannequin that makes use of Half Affinity Fields (PAFs) to narrate physique elements to people. It has higher runtime efficiency and accuracy because it solely makes use of PAF refinements as a substitute of the simultaneous PAF and body-part refinement technique.

MoveNet

MoveNet is a pre-trained high-speed place monitoring mannequin by TensorFlow that captures knee, hip, shoulder, elbow, wrist, ear, eye, and nostril actions, marking a most of 17 key factors.

TensorFlow gives two variants: Lightning and Thunder. The Lightning variant is for low-latency functions, whereas the Thunder variant is appropriate to be used circumstances the place accuracy is important.

PoseNet

PoseNet is a framework primarily based on tensorflow.js that detects poses utilizing a CNN and a pose-decoding algorithm. The algo assigns pose confidence scores, keypoint positions, and corresponding keypoint confidence scores.

The mannequin can detect as much as 17 key factors, together with nostril, ear, left knee, proper foot, and so on. It has two variants. One variant detects just one particular person, whereas the opposite can determine a number of people in a picture or video.

Actual-Life Purposes of Pose Estimation

Pose estimation has many functions, a few of which embody:

- Laptop imaginative and prescient robotics, the place pose estimation fashions can assist practice robotic actions.

- Health and sports activities, the place trainers can monitor physique actions to design higher coaching regimes.

- VR-enabled video games, the place pose estimation can assist detect a gamer’s motion throughout gameplay.

Picture Technology and Synthesis

Picture technology is an evolving area the place AI algorithms generate novel photos, art work, designs, and so on., primarily based on coaching information. This coaching information can embody photos from the online or another user-defined supply.

Under are just a few well-known image-generation fashions:

DALL-E

DALL-E is a zero-shot text-to-image generator created by OpenAI. This device takes user-defined textual prompts as enter to generate life like photos.

A variant of the well-known Generative Pre-Skilled Transformer 3 (GPT-3) mannequin, DALL-E 2 works on the Transformer structure. It additionally makes use of a variational autoencoder (VAE) to cut back the variety of picture tokens for quicker processing.

MidJourney

Like DALL-E, MidJourney can be a text-to-image generator however makes use of the diffusion structure to provide photos.

The diffusion technique successively provides noise to an enter picture after which denoises it to reconstruct the unique picture. As soon as educated, the mannequin can take any random enter to generate photos.

Secure Diffusion

Stable Diffusion by Stability AI additionally makes use of the diffusion framework to generate photo-realistic photos by means of textual person prompts.

Customers can practice the mannequin on restricted computation assets. It is because the framework makes use of pre-trained autoencoders with cross-attention layers to spice up high quality and coaching pace.

Actual-Life Purposes of Picture Technology and Synthesis

Picture technology has a number of use circumstances, together with:

- Content material creation, the place advertisers can use picture turbines to provide art work for branding and digital advertising and marketing.

- Product Ideation, the place it supplies producers and designers with textual prompts describing their desired options to generate appropriate photos.

- Artificial information technology to assist overcome information shortage and privateness issues in laptop imaginative and prescient.

Challenges and Future Instructions in Laptop Imaginative and prescient Duties

As laptop imaginative and prescient functions improve, the variety of challenges additionally rises. These challenges information future analysis to beat probably the most urgent points going through the AI group.

Challenges

- Lack of infrastructure: Laptop imaginative and prescient requires extremely highly effective {hardware} and a set of software program applied sciences. The principle problem is to make laptop imaginative and prescient scalable and cost-efficient, whereas reaching enough accuracy. The dearth of optimized infrastructure is the primary purpose why we don’t see extra laptop imaginative and prescient methods in manufacturing. At viso.ai, we’ve constructed probably the most highly effective end-to-end platform Viso Suite to resolve this problem and allow organizations to implement and scale real-world laptop imaginative and prescient.

- Lack of annotated information: Coaching CV fashions is difficult due to the shortage of related information for coaching. For instance, the dearth of annotated datasets has been a long-standing challenge within the medical area, the place only some photos exist, making AI-based analysis troublesome. Nonetheless, self-supervised studying is a promising growth that helps you develop fashions with restricted labeled information. On the whole, algorithms are inclined to change into dramatically extra environment friendly, and the newest frameworks allow higher AI fashions to be educated with a fraction of beforehand required information.

- Moral points: With ever-evolving information laws, it’s paramount that laptop imaginative and prescient fashions produce unbiased and truthful output. The problem right here is knowing important sources of bias and figuring out strategies to take away them with out compromising efficiency. Learn our article about moral challenges at OpenAI.

Future Instructions

- Explainable AI: Explainable AI (XAI) is one analysis paradigm that may assist you detect biases simply. It is because XAI lets you see how a mannequin works behind the scenes.

- Multimodal studying: As evident from picture generator fashions, combining textual content and picture information is the norm. The long run will possible see extra fashions integrating completely different modalities, akin to audio and video, to make CV fashions extra context-aware.

- Excessive-performance video analytics: As we speak, we’ve solely achieved a fraction of what’s going to be potential when it comes to real-time video understanding. The close to future will convey main breakthroughs in working extra succesful ML fashions extra cost-efficiently on higher-resolution information.

Laptop Imaginative and prescient Duties in 2024: Key Takeaways

Because the analysis group develops extra sturdy architectures, the duties that CV fashions can carry out will possible evolve, giving rise to newer functions in numerous domains.

However the important thing issues to recollect for now embody:

- Widespread laptop imaginative and prescient duties: Picture classification, object detection, pose semantic segmentation, occasion segmentation, pose estimation, and picture technology will stay among the many high laptop imaginative and prescient duties in 2024.

- CNNs and Transformers: Whereas the CNN framework dominates most duties mentioned above, the transformer structure stays essential for generative AI.

- Multimodal studying and XAI: Multimodal studying and explainable AI will revolutionize how people work together with AI fashions and enhance AI’s decision-making course of.

You possibly can discover associated matters within the following articles:

Getting Began With No-Code Laptop Imaginative and prescient

Deploying laptop imaginative and prescient methods may be messy as you require a strong information pipeline to gather, clear, and pre-process unstructured information, an information storage platform, and consultants who perceive modeling procedures.

Utilizing open-source instruments could also be one choice. Nonetheless, they often require familiarity with the back-end code, and integrating them right into a single orchestrated workflow together with your current tech stack is advanced.

Viso Suite is a one-stop, no-code end-to-end answer for all of your laptop imaginative and prescient wants because it helps you:

- Annotate visible information by means of automated instruments

- Construct a whole laptop imaginative and prescient pipeline for growth and deployment

- Monitor efficiency by means of customized dashboards

Wish to see how laptop imaginative and prescient can work in your business? Get began with Viso Suite for no-code machine studying.