Generative AI in the computer vision field has received considerable attention since the emergence of deep learning-based methods. After the success of diffusion-based methods, generating images from text or from random noise has become far more prominent. The images generated by diffusion models are photo-realistic and include details based on the provided conditioning. One downside of diffusion models is that they generate images iteratively using a Markov-chain-based diffusion process. As a result, the time complexity of generating an image has been a constraint for diffusion models.

Consistency Models by OpenAI is a recently released paradigm for generating an image in a single step. The researchers took inspiration from diffusion models, and their main objective is to generate images in a single shot rather than through the iterative noise reduction usually used in diffusion models. Consistency models introduce new learning methodologies to map a noisy image at any timestep of the diffusion process to its initial noiseless form. The methodology is generalizable, and the authors argue that the model can perform image-editing tasks without any retraining.

Consistency Models

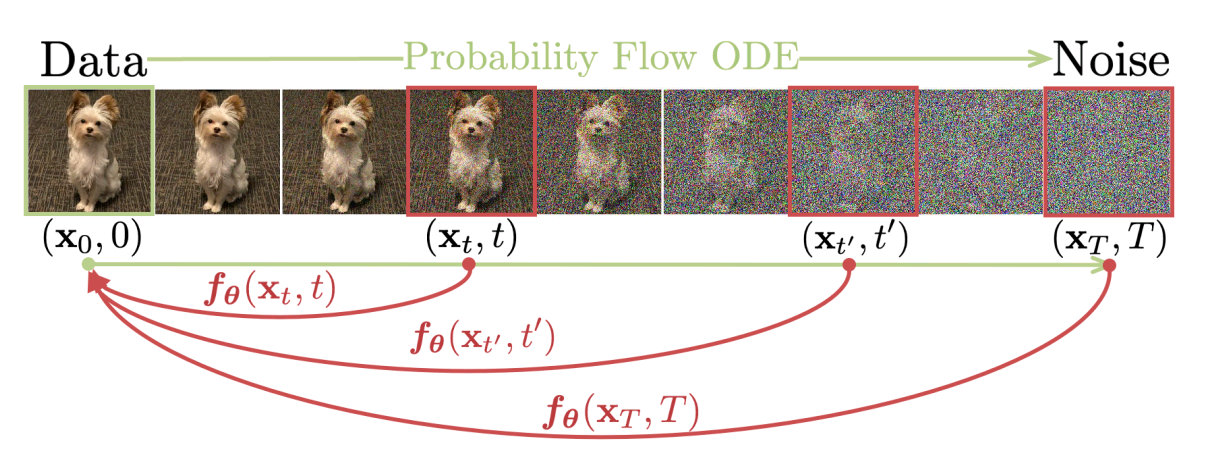

The primary objective of consistency models is to enable single-step image generation without losing the advantages of iterative generation (the diffusion process). Consistency models try to strike a balance between sample quality and computational complexity. The basic idea of the model is to map the latent noise tensor to the noiseless image corresponding to the initial timestep of the diffusion path, as shown in the figure.

Consistency models have the distinctive property of being self-consistent. This means that the model maps all latent image tensors on the same diffusion path trajectory to the same initial noiseless image. Note, however, that you can change the path by conditioning the image generation process for image-editing tasks. Consistency models take a random noise tensor as input and generate the image (the starting point of the diffusion path trajectory). Although this workflow looks a lot like how adversarial models (GANs) learn, consistency models do not employ any form of adversarial training scheme.
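The self-consistency property can be written compactly. The notation below follows our reading of the paper rather than anything defined so far in this post: $f$ is the consistency model, $x_t$ is the noisy image at time $t$ on one trajectory, and $[\epsilon, T]$ is the time range of the diffusion path.

$$
f(x_t, t) = f(x_{t'}, t') \quad \text{for all } t, t' \in [\epsilon, T] \text{ on the same trajectory}, \qquad f(x_\epsilon, \epsilon) = x_\epsilon
$$

The second condition is the boundary condition: at the smallest time $\epsilon$, the model should return its input unchanged.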

To train the consistency model, the basic objective is to enforce the self-consistency property for image generation. The authors present two different methods to train the model.

The first method uses a pre-trained diffusion model to generate pairs of adjacent points on the diffusion path trajectory. It acts as a data generation scheme for training. This method also uses an Ordinary Differential Equation (ODE) solver, together with the score function, to follow the probability flow.

The second method avoids using a pre-trained diffusion model and illustrates how to train the consistency model independently. The training mechanism for this method does not assume anything based on a diffusion learning scheme and looks at the problem of image generation in an independent manner. Let us take a high-level look at the algorithms of both of these methods.

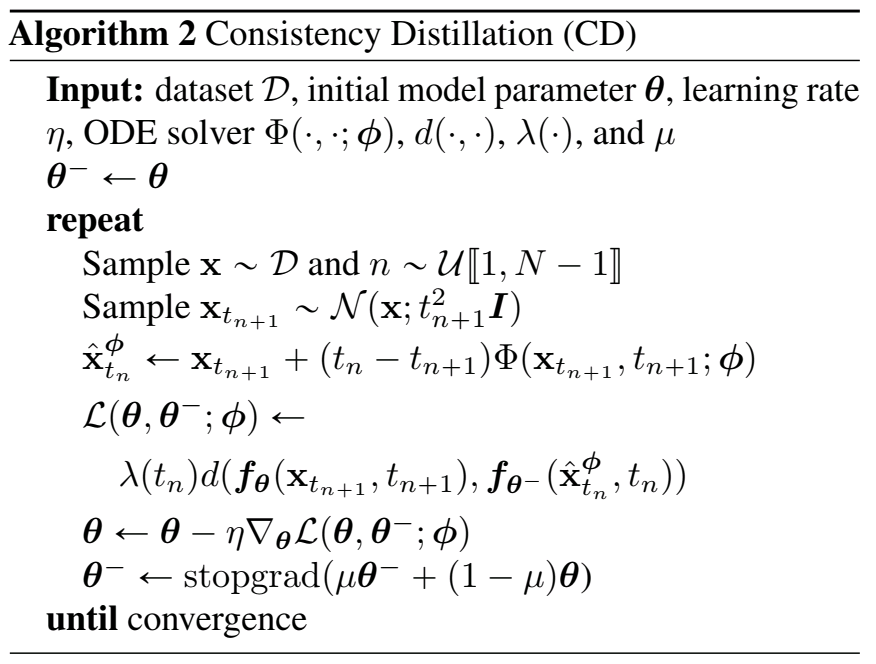

Training Consistency Models via Distillation

This method uses a pre-trained diffusion model to learn self-consistency. The authors refer to this pre-trained model as the score model. The score model helps us estimate where the current noisy image tensor lies on the diffusion path. For this, we need to discretize the time axis into $N-1$ small intervals in the range $[\epsilon, T]$. The boundaries of these intervals can be defined as $t_1 = \epsilon < t_2 < \dots < t_N = T$. The consistency model tries to estimate the intermediate states $x_{t_1}, x_{t_2}, \dots, x_{t_N}$. The larger the value of $N$, the more accurate the output we can get. The image below describes the algorithm to train the consistency model using distillation.
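To make the discretization concrete, here is a minimal sketch of one way to place the boundaries $t_1 = \epsilon < \dots < t_N = T$. The spacing formula and the default values ($\epsilon = 0.002$, $T = 80$, $\rho = 7$) are what we recall from the paper's experimental setup, not something stated in this post, so treat them as assumptions. The function name `karras_timesteps` is our own.

```python
def karras_timesteps(n_steps, eps=0.002, t_max=80.0, rho=7.0):
    """Return n_steps increasing time boundaries from eps to t_max.

    Assumed spacing: t_i = (eps^(1/rho) + (i-1)/(N-1) * (T^(1/rho) - eps^(1/rho)))^rho,
    which places more boundaries near eps than near T.
    """
    if n_steps < 2:
        raise ValueError("n_steps must be at least 2")
    lo = eps ** (1.0 / rho)
    hi = t_max ** (1.0 / rho)
    return [(lo + i / (n_steps - 1) * (hi - lo)) ** rho for i in range(n_steps)]


if __name__ == "__main__":
    for t in karras_timesteps(5):
        print(f"{t:.4f}")
```

A uniform grid would also satisfy the definition above. The non-uniform spacing is a design choice that spends more steps at low noise levels.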

First, the image $x$ is sampled randomly from the data. The number $n$ is selected from a uniform random distribution. Gaussian noise is then added to $x$, corresponding to timestep $t_{n+1}$ on the diffusion path, to get the noisy image tensor $x_{t_{n+1}}$. Using the one-step ODE solver function (and the diffusion model), we can estimate $\hat{x}_{t_n}^{\phi}$, the estimated noisy image tensor at timestep $t_n$. The loss function tries to minimize the distance between the model predictions for the pair $(\hat{x}_{t_n}^{\phi}, x_{t_{n+1}})$. The parameters of the consistency model are updated based on the gradient of the loss function. Note that two networks are employed here: $f_{\theta^-}$ (the target network) and $f_{\theta}$ (the online network). The target network makes the prediction for $\hat{x}_{t_n}^{\phi}$, while the online network makes the prediction for $x_{t_{n+1}}$. The authors argue that this two-network scheme greatly contributes to stabilizing the training process.
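The steps above can be sketched in a few lines of NumPy. This is an illustration under stated assumptions, not the repository's training code: we assume noise is added as $x + t\,z$, that the solver is a single Euler step of the probability flow ODE $dx/dt = -t\,s_\phi(x, t)$, that the distance is a squared L2 distance, and that the target network is an exponential moving average of the online network (as we recall from the paper). All function names are hypothetical.

```python
import numpy as np


def euler_ode_step(x_next, t_next, t_cur, score_fn):
    """One Euler step from t_next back to t_cur along dx/dt = -t * score(x, t)."""
    return x_next - (t_cur - t_next) * t_next * score_fn(x_next, t_next)


def cd_loss(f_online, f_target, x, t_cur, t_next, score_fn, rng):
    """Consistency distillation loss for one batch (squared L2 assumed)."""
    z = rng.standard_normal(x.shape)
    x_next = x + t_next * z                                   # noisy image at t_{n+1}
    x_cur_hat = euler_ode_step(x_next, t_next, t_cur, score_fn)  # estimate at t_n
    pred_online = f_online(x_next, t_next)                    # online network on x_{t_{n+1}}
    pred_target = f_target(x_cur_hat, t_cur)                  # target network on the estimate
    return float(np.mean((pred_online - pred_target) ** 2))


def ema_update(theta_target, theta_online, mu=0.999):
    """Assumed target update: exponential moving average of the online weights."""
    return mu * theta_target + (1.0 - mu) * theta_online
```

In a real training loop, the gradient of this loss would be taken with respect to the online parameters only, with the target network treated as a constant.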

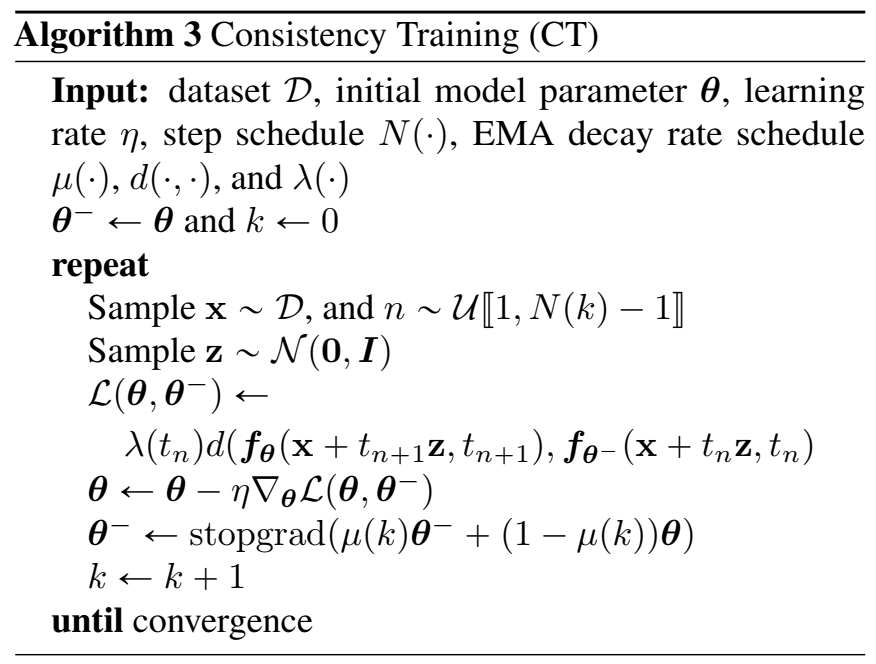

Training Consistency Models Independently

In the previous method, we used a pre-trained score model to estimate the ground truth score function. In this method, we do not use any pre-trained models. To train the model independently, we have to find a way to estimate the underlying score function without a pre-trained model. The authors of the paper argue that a Monte Carlo estimate of the score function, computed from the original and noisy images, is sufficient to replace the pre-trained model in the training objective. The image below describes the algorithm to train the consistency model independently.

First, the image $x$ is sampled randomly from the data. The number $n$ is selected from a uniform random distribution using the scheduled step function. Next, a random noise tensor $z$ is sampled from the normal distribution. The loss minimizes the distance between the model predictions for the pair $(x + t_{n+1}z, x + t_n z)$. The rest of the algorithm stays the same as in the previous method. The authors argue that substituting $x + t_{n+1}z$ in place of $x_{t_{n+1}}$ and $x + t_n z$ in place of $\hat{x}_{t_n}^{\phi}$ suffices. They base this argument on the fact that the loss function here depends only on the model parameters $(\theta, \theta^-)$ and is independent of the diffusion model.
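A minimal sketch of this substitution is shown below. The key detail is that both noisy inputs reuse the same noise tensor $z$. The function names are hypothetical and the squared L2 distance is an assumption (the training scripts later in this post also mention an LPIPS measure).

```python
import numpy as np


def ct_noisy_pair(x, t_cur, t_next, rng):
    """Build (x + t_{n+1} z, x + t_n z) with one shared noise tensor z."""
    z = rng.standard_normal(x.shape)
    return x + t_next * z, x + t_cur * z


def ct_loss(f_online, f_target, x, t_cur, t_next, rng):
    """Consistency training loss for one batch (squared L2 assumed)."""
    x_next, x_cur = ct_noisy_pair(x, t_cur, t_next, rng)
    pred_online = f_online(x_next, t_next)   # online network on x + t_{n+1} z
    pred_target = f_target(x_cur, t_cur)     # target network on x + t_n z
    return float(np.mean((pred_online - pred_target) ** 2))
```

No score model appears anywhere in this sketch, which is the point of the second method.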

Comparison with other models

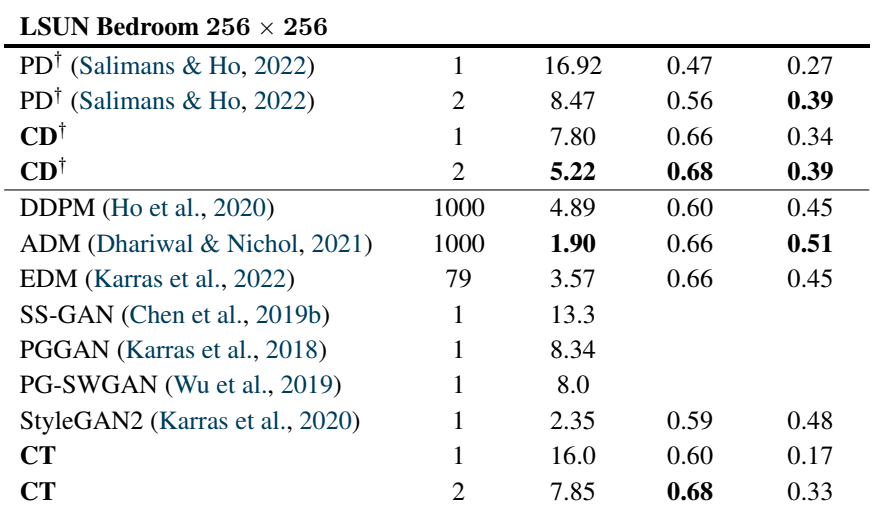

The authors of the paper used the ImageNet (64 x 64), LSUN Bedroom (256 x 256), and LSUN Cat (256 x 256) datasets for evaluation. The model is compared with other existing models using the metrics Fréchet Inception Distance (lower is better), Inception Score (higher is better), and Precision & Recall (higher is better). The comparison for one of the above-mentioned datasets is shown in the table. Please head over to the paper to see the comparison for all datasets.
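For reference, the Fréchet Inception Distance has a closed form. This is the standard definition of the metric rather than anything specific to the paper: $\mu_r, \Sigma_r$ are the mean and covariance of Inception features for real images, and $\mu_g, \Sigma_g$ are the same statistics for generated images.

$$
\mathrm{FID} = \lVert \mu_r - \mu_g \rVert_2^2 + \mathrm{Tr}\left( \Sigma_r + \Sigma_g - 2 \left( \Sigma_r \Sigma_g \right)^{1/2} \right)
$$

A value of zero means the two feature distributions have identical means and covariances, which is why lower is better.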

As shown above, the Consistency Training (CT) model is compared with many popular image generation models such as DDPM, StyleGAN2, and PGGAN. The Consistency Distillation (CD) model is compared with Progressive Distillation (PD), which is the only technique comparable to CD researched so far.

We can see in the comparison table that the consistency models (CD & CT) have comparable and sometimes better accuracy than other models. The authors argue that the intention behind introducing consistency models is not primarily to get better accuracy but to establish a trade-off between image quality and the time complexity of generation.

Try it yourself

Let us now walk through how you can download the dataset & train your own consistency models. For demo purposes, you do not need to train the model. Instead, you can download pre-trained model checkpoints to try. For this task, we will get this running in a Gradient Notebook here on Paperspace. To navigate to the codebase, click on the “Run on Gradient” button above or at the top of this blog.

Setup

The file installations.sh contains all the necessary code to install the required dependencies. Note that your system must have CUDA to train Consistency models. Also, you may require a different version of torch based on your version of CUDA. If you are running this on Paperspace, the default version of CUDA is 11.6, which is compatible with this code. If you are running it somewhere else, please check your CUDA version using nvcc --version. If the version differs from ours, you may want to change the versions of the PyTorch libraries in the first line of installations.sh by looking at the compatibility table.
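If you prefer to check the CUDA version from Python, a small helper like the one below can run nvcc --version and pull out the release number. This is a convenience sketch of our own and not part of the codebase. It assumes the output contains a fragment of the form "release 11.6", and the function names are hypothetical.

```python
import re
import shutil
import subprocess


def parse_cuda_release(nvcc_output):
    """Extract (major, minor) from text containing e.g. 'release 11.6'."""
    match = re.search(r"release\s+(\d+)\.(\d+)", nvcc_output)
    if match is None:
        return None
    return int(match.group(1)), int(match.group(2))


def detect_cuda_release():
    """Return the CUDA release reported by nvcc, or None if nvcc is missing."""
    if shutil.which("nvcc") is None:
        return None
    result = subprocess.run(
        ["nvcc", "--version"], capture_output=True, text=True, check=False
    )
    return parse_cuda_release(result.stdout)


if __name__ == "__main__":
    release = detect_cuda_release()
    if release is None:
        print("nvcc not found or version not recognized")
    elif release != (11, 6):
        print(f"CUDA {release[0]}.{release[1]} detected; you may need to adjust installations.sh")
    else:
        print("CUDA 11.6 detected")
```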

To install all the dependencies, run the command below:

bash installations.sh

The above command also clones the original Consistency-Models repository into the consistency_models directory so that we can use the original model implementation for training & inference.

Downloading datasets & Start training (Optional)

Once we have installed all the dependencies, we can download the datasets and start training the models.

The datasets directory in the codebase contains the necessary scripts to download the data and prepare it for training. Currently, the codebase supports downloading the ImageNet and LSUN Bedroom datasets that the original authors used.

We have already set up bash scripts for you that will automatically download the datasets. The datasets directory contains the code that downloads the training & validation data to the corresponding dataset directory. To download the datasets, you can run the commands below:

# Download the ImageNet dataset (run in a subshell so the working directory stays at the repository root)

(cd datasets/imagenet/ && bash fetch_imagenet.sh)

# Download the LSUN Bedroom dataset

(cd datasets/lsun_bedroom/ && bash fetch_lsun_bedroom.sh)

Moreover, we have provided scripts to train the different types of models that the authors discuss in the paper. The scripts directory contains different bash scripts to train the models. You can run the scripts with the commands below to train different models:

# EDM Model on ImageNet dataset

bash scripts/train_edm/train_imagenet.sh

# EDM Model on LSUN Bedroom dataset

bash scripts/train_edm/train_lsun_bedroom.sh

# Consistency Distillation Model on ImageNet dataset (L2 measure)

bash scripts/train_cd/train_imagenet_l2.sh

# Consistency Distillation Model on ImageNet dataset (LPIPS measure)

bash scripts/train_cd/train_imagenet_lpips.sh

# Consistency Distillation Model on LSUN Bedroom dataset (L2 measure)

bash scripts/train_cd/train_lsun_bedroom_l2.sh

# Consistency Distillation Model on LSUN Bedroom dataset (LPIPS measure)

bash scripts/train_cd/train_lsun_bedroom_lpips.sh

# Consistency Training Model on ImageNet dataset

bash scripts/train_ct/train_imagenet.sh

# Consistency Training Model on LSUN Bedroom dataset

bash scripts/train_ct/train_lsun_bedroom.sh

These bash scripts are compatible with the Paperspace workspace. If you are running them elsewhere, you will need to replace the base path of the paths mentioned in the corresponding training script.

💡

This will take a long time and require significant storage as well as GPU memory, so it is not recommended to run training on Free GPU-powered Paperspace Notebooks.

Note that you will need to move the checkpoint.pt file to the checkpoints directory for inference at the end of training.
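As a small sketch of that last step, the helper below moves a checkpoint file into the checkpoints directory. Where your training run writes checkpoint.pt depends on the training script, so the source path is left as an argument. The function name `stage_checkpoint` is our own and not part of the codebase.

```python
import shutil
from pathlib import Path


def stage_checkpoint(src, checkpoints_dir="checkpoints"):
    """Move a trained checkpoint file into the checkpoints directory.

    `src` is wherever your training run saved checkpoint.pt (an assumption
    you need to fill in). Returns the new path of the file.
    """
    src = Path(src)
    dest_dir = Path(checkpoints_dir)
    dest_dir.mkdir(parents=True, exist_ok=True)  # create the directory if needed
    dest = dest_dir / src.name
    shutil.move(str(src), str(dest))
    return dest
```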

Don't worry if you do not want to train the model. The section below shows how to download the pre-trained checkpoints for inference.

Running Gradio Demo

The Python script app.py contains a Gradio demo that lets you generate images using pre-trained models. Before we do that, we need to download the pre-trained model checkpoints into the checkpoints directory.

To download the existing checkpoints, run the command below:

bash checkpoints/fetch_checkpoints.sh

Note that the latest version of the code has pre-trained model checkpoints for 12 different model types. You can update fetch_checkpoints.sh whenever you have new checkpoints.

Now we are ready to launch the Gradio demo. Run the following command to launch the demo:

gradio app.py

Open the link in the browser, and you can now generate images from any of the available models in the checkpoints directory. Moreover, you can vary the generated images by modifying different parameters such as dropout, generator, and steps.
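To give some intuition for what a step count can mean for a consistency model, here is a minimal sketch of single-step and multistep sampling as we understand it from the paper. It is not the demo's actual code, and we do not know exactly how app.py uses its steps parameter. The function name, the `taus` argument (a decreasing list of noise levels starting at $T$), and the default $\epsilon = 0.002$ are assumptions.

```python
import numpy as np


def consistency_sample(f, shape, taus, eps=0.002, rng=None):
    """Sample with a consistency function f(x, t).

    taus[0] is the maximum noise level T. With a single entry this is
    one-step generation; each extra entry re-noises the current sample to
    that level and denoises it again with f.
    """
    rng = np.random.default_rng() if rng is None else rng
    t_max = taus[0]
    x = f(t_max * rng.standard_normal(shape), t_max)  # one-step generation
    for tau in taus[1:]:
        z = rng.standard_normal(shape)
        x_tau = x + np.sqrt(tau ** 2 - eps ** 2) * z  # add noise back up to level tau
        x = f(x_tau, tau)                             # map back to a clean sample
    return x
```

Extra steps trade compute for sample quality, which is the trade-off the authors set out to expose.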

You should be able to generate images using different pre-trained models, as shown in the video below:

Generating Images Using Consistency Models

Hurray! 🎉🎉🎉 We have created a demo to generate images using different pre-trained consistency models.

Conclusion

Consistency Models is an entirely new technique introduced by researchers at OpenAI. The primary objective of these models is to overcome the time complexity constraint that diffusion models face due to iterative sampling. Consistency Models can be trained either by distilling diffusion models or independently. In this blog, we walked through the motivation behind Consistency Models, the two different methods to train such models, and a comparison of these models with other popular models. We also discussed how to set up the environment, train your own Consistency Models & generate images using the Gradio app in a Gradient Notebook.

Be sure to check out our repo and consider contributing to it!