Experiment tracking, or experiment logging, is a key aspect of MLOps. Tracking experiments is essential for iterative model development, the part of the ML project lifecycle where you try many things to get your model performance to the level you need.

In this article, we will answer the following questions:

- What is experiment tracking in ML?

- How does it work? (tutorials and examples)

- How do you implement experiment logging?

- What are the best practices?

About us: We’re the creators of the enterprise no-code Computer Vision Platform, Viso Suite. Viso Suite allows for the control of the complete ML pipeline, including data collection, training, and model evaluation. Learn about Viso Suite and book a demo.

What is Experiment Tracking for Machine Learning?

Experiment tracking is the discipline of recording relevant metadata while developing a machine learning model. It provides researchers with a method to keep track of important changes during each iteration.

In this context, “experiment” refers to a specific iteration or version of the model. You can think of it in terms of any other scientific experiment:

- You start with a hypothesis, i.e., how new changes will affect the results.

- Then, you adjust the inputs (code, datasets, or hyperparameters) accordingly and run the experiment.

- Finally, you record the outputs. These outputs can be the results of performance benchmarks or an entirely new ML model.

Machine learning experiment tracking makes it possible to trace the exact cause and effect of changes to the model.

The metadata you track typically includes, among others:

- Hyperparameters: Learning rate, batch size, number of layers, neurons per layer, activation functions, dropout rates.

- Model Performance Metrics: Accuracy, precision, recall, F1 score, area under the ROC curve (AUC-ROC).

- Training Parameters: Number of epochs, loss function, optimizer type.

- Hardware Usage: CPU/GPU utilization, memory usage.

- Dataset Metrics: Size of training/validation/test sets, data augmentation techniques used.

- Training Environment: Configuration files of the underlying system or software ecosystem.

- Versioning Information: Model version, dataset version, code version.

- Run Metadata: Timestamp of the run, duration of training, experiment ID.

- Model-specific Data: Model weights or other tuning parameters.

Experiment tracking involves recording all of this information so that results are reproducible across every stage of the ML model development process.
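To make the categories above concrete, here is a minimal sketch of what a single tracked run could look like in Python. The class name `ExperimentRecord`, its field names, and the sample values are assumptions chosen for illustration, not a standard schema or the format of any particular tool.

```python
import json
import time
import uuid
from dataclasses import asdict, dataclass, field
from typing import Any, Dict


@dataclass
class ExperimentRecord:
    """One tracked run; the fields are illustrative, not a standard schema."""
    hyperparameters: Dict[str, Any]
    metrics: Dict[str, float] = field(default_factory=dict)
    dataset_version: str = "unknown"
    code_version: str = "unknown"
    # Run metadata: a unique ID and a start timestamp are generated per record.
    experiment_id: str = field(default_factory=lambda: uuid.uuid4().hex)
    started_at: float = field(default_factory=time.time)

    def to_json(self) -> str:
        """Serialize the record so it can be stored and compared later."""
        return json.dumps(asdict(self), sort_keys=True)


record = ExperimentRecord(
    hyperparameters={"learning_rate": 0.01, "batch_size": 64, "epochs": 10},
    dataset_version="v2",    # placeholder value
    code_version="abc1234",  # placeholder, e.g. a Git commit hash
)
record.metrics["accuracy"] = 0.82  # placeholder result
print(record.to_json())
```

Keeping every run in one structured, serializable shape is what later makes runs comparable, whatever storage you choose.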

Why is ML Experiment Tracking Important?

We derive machine learning models through an iterative process of trial and error. Researchers can adjust any number of parameters in various combinations to produce different results. A model can also go through an immense number of adaptations or versions before it reaches its final form.

Without knowing the what, why, and how, it’s impossible to draw informed conclusions about the model’s progress. Unfortunately, due to the complexity of these models, the causality between inputs and outputs is often non-obvious.

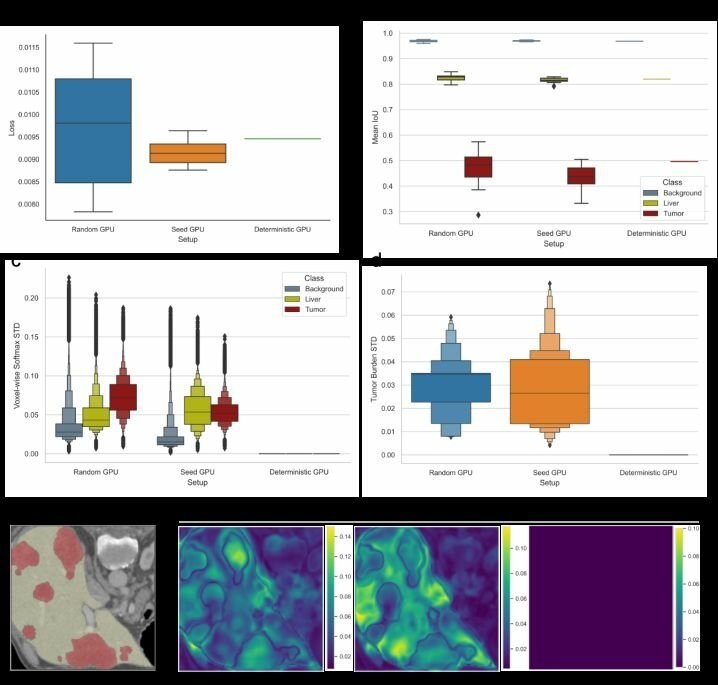


A small change in any of the parameters above can significantly change the output. An ad hoc approach to tracking these changes and their effects on the model simply won’t cut it. This is particularly important for related tasks, such as hyperparameter optimization.

Data scientists need a formal process to track these changes over the lifetime of the development process. Experiment tracking makes it possible to compare and reproduce the results across iterations.

This allows them to understand past results and the cause and effect of changing various parameters. More importantly, it helps steer the training process in the right direction more efficiently.

How does an ML Experiment Tracking System work?

Before we look at different methods to implement experiment tracking, let’s see what a solution should look like.

At the very least, an experiment-tracking solution should provide you with:

- A centralized hub to store, organize, access, and manage experiment records.

- Easy integration with your ML model training framework(s).

- An efficient and accurate way to capture and record essential data.

- An intuitive and accessible way to pull up records and compare them.

- A way to leverage visualizations to represent data in ways that make sense to non-technical stakeholders.
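As a rough illustration of the first and fourth points, a central store can be as small as one database table. The sketch below uses Python’s built-in `sqlite3` module; the table layout, the function names (`open_store`, `log_run`, `best_runs`), and the accuracy values are assumptions made up for this example.

```python
import json
import sqlite3


def open_store(path: str = ":memory:") -> sqlite3.Connection:
    """Create (or open) a single table that holds every run."""
    conn = sqlite3.connect(path)
    conn.execute(
        "CREATE TABLE IF NOT EXISTS runs ("
        "run_id TEXT PRIMARY KEY, params TEXT, accuracy REAL)"
    )
    return conn


def log_run(conn, run_id, params, accuracy):
    """Record one run; hyperparameters are stored as JSON text."""
    conn.execute(
        "INSERT INTO runs VALUES (?, ?, ?)",
        (run_id, json.dumps(params), accuracy),
    )
    conn.commit()


def best_runs(conn, limit=3):
    """Return runs sorted by accuracy, best first, for quick comparison."""
    rows = conn.execute(
        "SELECT run_id, params, accuracy FROM runs "
        "ORDER BY accuracy DESC LIMIT ?",
        (limit,),
    ).fetchall()
    return [(run_id, json.loads(params), acc) for run_id, params, acc in rows]


store = open_store()  # in-memory here; pass a file path to share the store
log_run(store, "run-1", {"learning_rate": 0.1}, 0.74)    # placeholder values
log_run(store, "run-2", {"learning_rate": 0.01}, 0.82)
log_run(store, "run-3", {"learning_rate": 0.001}, 0.79)
for run_id, params, acc in best_runs(store):
    print(run_id, params, acc)
```

A single file-backed database like this only covers one machine; a team setup would need a shared server or one of the dedicated tools discussed later.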

For more advanced ML models or larger machine learning projects, you may also need the following:

- The ability to track and report hardware resource consumption (monitoring of CPU and GPU utilization and memory usage).

- Integration with version control systems to track code, dataset, and ML model changes.

- Collaboration features to facilitate team productivity and communication.

- Custom reporting tools and dashboards.

- The ability to scale with the growing number of experiments and provide robust security.

Experiment tracking should only be as complex as you need it to be. That’s why techniques vary from manually using paper or spreadsheets to fully automated commercial-off-the-shelf tools.

The centralized and collaborative aspect of experiment tracking is particularly important. You may conduct experiments on ML models in a variety of contexts: for example, on your office laptop at your desk, or as an ad hoc hyperparameter tuning job on a dedicated instance in the cloud.

Now, extrapolate this challenge across multiple individuals or teams.

If you don’t properly record or sync experiments, you may need to repeat work. Or, worst case, you may lose the details of a well-performing experiment.

Best Practices in ML Experiment Tracking

So, we know that experiment tracking is vital for accurately reproducing experiments. It enables debugging and understanding ML models at a granular level. We also know the components that an effective experiment tracking solution should consist of.

However, there are also some best practices you should stick to.

- Establish a standardized tracking protocol: You need a consistent practice of experiment tracking across ML projects. This includes standardizing code documentation, version documentation, data sets, parameters (input data), and results (output data).

- Have rigorous version control: Implement both code and data version control. This helps you track changes over time and understand the impact of each modification. You can use tools like Git for code and DVC for data.

- Automate data logging: You should automate experiment logging as much as possible. This includes capturing hyperparameters, model architectures, training procedures, and results. Automation reduces human error and enhances consistency.

- Implement meticulous documentation: Alongside automated logging, explain the rationale behind each experiment, the hypotheses tested, and interpretations of results. Contextual information is invaluable for future reference when working on dynamic ML models.

- Opt for scalable and accessible tracking tools: This will help avoid delays due to operational constraints or the need for training.

- Prioritize reproducibility: Check that you can reproduce the results of individual experiments. You need detailed information about the environment, dependencies, and random seeds to do this accurately.

- Conduct regular reviews and audits: Reviewing experiment processes and logs can help identify gaps in the tracking process. This allows you to refine your tracking system and make better decisions on future experiments.

- Incorporate feedback loops: Similarly, this will help you incorporate learnings from past experiments into new ones. It will also help with team buy-in and address shortcomings in your methodologies.

- Balance detail and overhead: Over-tracking can lead to unnecessary complexity, while insufficient tracking can miss critical insights. It’s important to find a balance depending on the complexity of your ML models and needs.
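The reproducibility practice above (environment, dependencies, and random seeds) can be sketched with the Python standard library alone. The function name `capture_environment` and the keys of the returned dictionary are assumptions for illustration. Note that this only seeds Python’s own `random` module; frameworks such as NumPy or PyTorch need their own seeding calls.

```python
import platform
import random
import sys
from importlib import metadata


def capture_environment(seed: int) -> dict:
    """Seed Python's RNG and snapshot the details needed to rerun a run."""
    random.seed(seed)  # ML frameworks keep separate RNGs that this does not touch
    packages = sorted(
        f"{dist.metadata.get('Name')}=={dist.version}"
        for dist in metadata.distributions()
        if dist.metadata.get("Name")  # skip entries with broken metadata
    )
    return {
        "seed": seed,
        "python_version": sys.version.split()[0],
        "platform": platform.platform(),
        "packages": packages,
    }


env = capture_environment(seed=42)
first = [random.random() for _ in range(3)]

capture_environment(seed=42)  # reseeding restarts the same random sequence
second = [random.random() for _ in range(3)]

print(env["python_version"], len(env["packages"]), first == second)
```

Storing this snapshot next to each run’s metrics is one way to check later whether two results were produced under comparable conditions.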

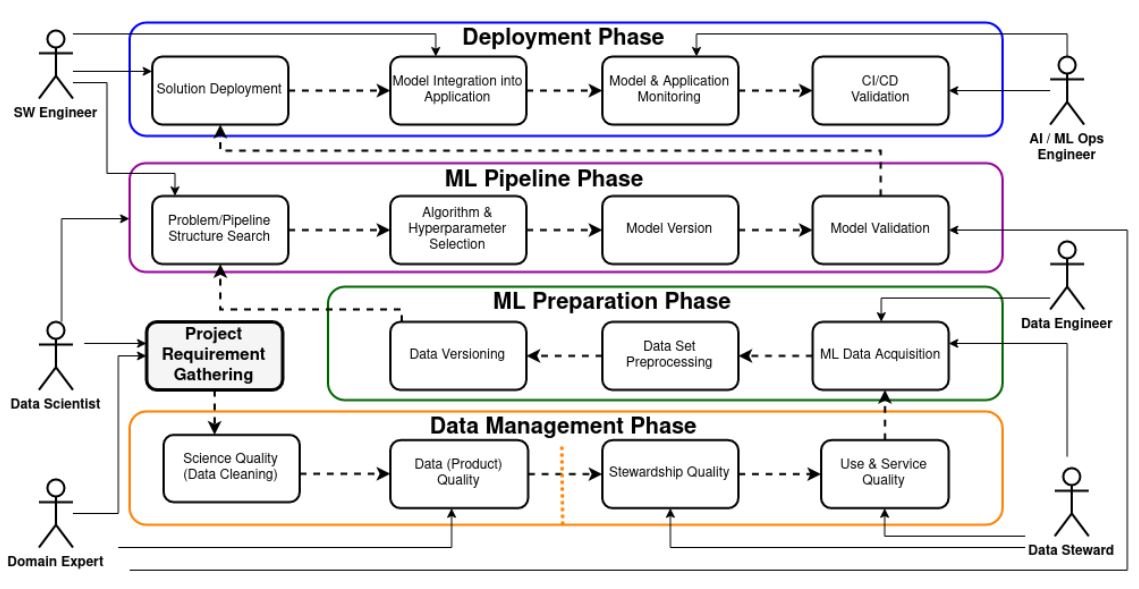

Difference Between Experiment Tracking and MLOps

If you work in an ML team, you’re probably already familiar with MLOps (machine learning operations). MLOps is the process of holistically managing the end-to-end machine learning development lifecycle. It spans everything from:

- Developing and training models,

- Scheduling jobs,

- Model testing,

- Deploying models,

- Model maintenance,

- Managing model serving, to

- Monitoring and retraining models in production

Experiment tracking is a specialized sub-discipline within the MLOps field. Its primary focus is the iterative development phase, which mainly involves training and testing models, as well as experimenting with various models, parameters, and data sets to optimize performance.

More specifically, it’s the process of tracking and using the relevant metadata of each experiment.

Experiment tracking is especially critical for MLOps in research-focused projects. In these projects, models may never even reach production. Instead, experiment tracking offers valuable insights into model performance and the efficacy of different approaches.

This can help inform or direct future ML projects without having an immediate application or end goal. MLOps is of more critical concern in projects that will enter production and deployment.

How to Implement Experiment Tracking

Machine learning projects come in different shapes and sizes. Accordingly, there are a number of ways you can track your experiments.

You should carefully select the best approach depending on:

- The size of your team

- The number of experiments you plan to run

- The complexity of your ML models

- The level of detail you require regarding experiment metadata

- The key goals of your project/research, e.g., improving capabilities in a specific task or optimizing performance

Some of the common methods used today include:

- Manual tracking using spreadsheets and naming conventions

- Using software versioning tools/repositories

- Automated tracking using dedicated ML experiment tracking tools

Let’s do a quick overview of each.

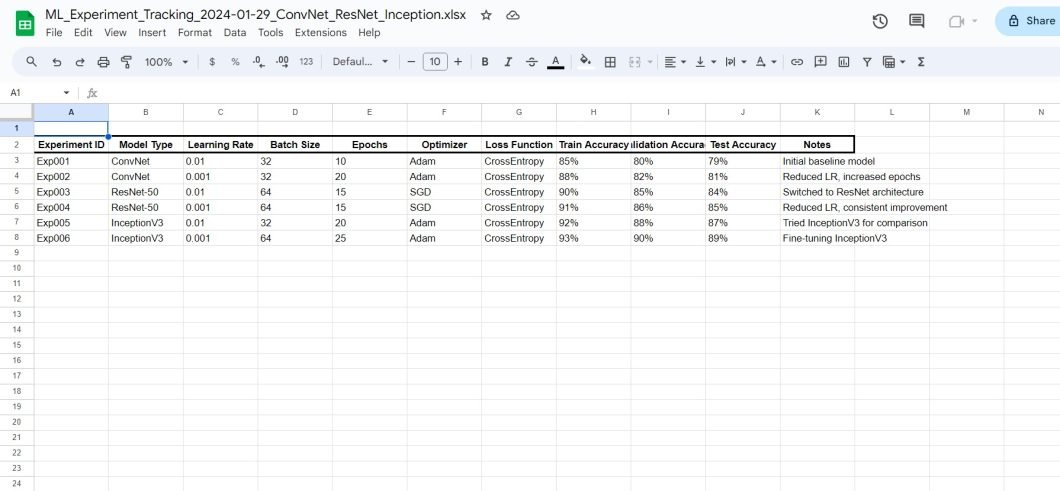

Manual Tracking

This involves using spreadsheets to manually log experiment details. Typically, you’ll use systematic naming conventions to organize files and experiments in directories, for instance by naming them after elements like model version, learning rate, batch size, and main results.

For example, model_v3_lr0.01_bs64_acc0.82.h5 might indicate version 3 of a model with a learning rate of 0.01, a batch size of 64, and an accuracy of 82%.

This method is easy to implement, but it falls apart at scale. Not only is there a risk of logging incorrect information, but also of overwriting others’ work. Plus, manually ensuring that version tracking conventions are being followed can be time-consuming and difficult.

Still, it may be suitable for small-scale or personal research projects using tools like Excel or Google Sheets.
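The naming convention from the example above can be sketched as a pair of helper functions. The names `build_name` and `parse_name` and the exact pattern are assumptions for illustration; any real convention would be whatever your team agrees on.

```python
import re

# Pattern for names such as model_v3_lr0.01_bs64_acc0.82.h5
NAME_PATTERN = re.compile(
    r"model_v(?P<version>\d+)_lr(?P<lr>[\d.]+)_bs(?P<bs>\d+)_acc(?P<acc>[\d.]+)\.h5"
)


def build_name(version: int, lr: float, bs: int, acc: float) -> str:
    """Encode the key run details into a file name."""
    return f"model_v{version}_lr{lr}_bs{bs}_acc{acc:.2f}.h5"


def parse_name(filename: str) -> dict:
    """Recover the run details from a file name, rejecting names that deviate."""
    match = NAME_PATTERN.fullmatch(filename)
    if match is None:
        raise ValueError(f"{filename!r} does not follow the naming convention")
    return {
        "version": int(match["version"]),
        "learning_rate": float(match["lr"]),
        "batch_size": int(match["bs"]),
        "accuracy": float(match["acc"]),
    }


name = build_name(version=3, lr=0.01, bs=64, acc=0.82)
print(name)
print(parse_name(name))
```

Even this small sketch shows how brittle the approach is: a very small learning rate is formatted in scientific notation (for example `1e-05`), which this pattern would reject, and every new detail you want to track means changing the convention for all existing files.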

Automated Versioning in a Git Repository

You can use a version control system, like Git, to track changes in machine learning experiments. Each experiment’s metadata (like hyperparameters, model configurations, and results) is stored as files in a Git repository. These files can include text documents, code, configuration files, and even serialized versions of models.

After each experiment, you commit the changes to the repository, creating a trackable history of the experiment iterations.

While it’s not fully automated, this approach does bring some of the benefits of automation. For example, the system will automatically follow the naming conventions you implement. This reduces the risk of human error when you log metrics or other data.

It’s also much easier to revert to older versions without having to create, organize, and find copies manually. Version control systems also have the built-in ability to branch and run parallel workflows.

These systems also have built-in collaboration, making it easy for team members to track changes and stay in sync. Plus, the approach is relatively technology-agnostic, so you can use it across projects or frameworks.

However, not all of these systems are optimized for large binary files. This matters in ML projects, where large files containing model weights and other metadata are common. They also have limited features for visualizing and comparing experiments, not to mention live monitoring.

This approach is highly useful for projects that require a detailed history of changes. Also, many developers are familiar with platforms like Git, so adoption should be seamless for most teams. However, it still lacks some of the advanced capabilities on offer with dedicated MLOps or experiment tracking software.
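A minimal sketch of this workflow, under a few assumptions: each run gets its own directory, the file names `params.json` and `metrics.json` are made up for this example, and `commit_run` is only meant to be called inside an existing Git repository with Git installed.

```python
import json
import subprocess
import tempfile
from pathlib import Path


def write_run_files(run_dir, params, metrics):
    """Write params and metrics as sorted JSON so Git diffs stay readable."""
    run_dir = Path(run_dir)
    run_dir.mkdir(parents=True, exist_ok=True)
    paths = []
    for name, payload in (("params.json", params), ("metrics.json", metrics)):
        path = run_dir / name
        path.write_text(json.dumps(payload, indent=2, sort_keys=True) + "\n")
        paths.append(path.resolve())
    return paths


def commit_run(repo_dir, paths, message):
    """Stage the given files and commit them; repo_dir must be a Git repository."""
    subprocess.run(["git", "add", "--", *map(str, paths)], cwd=repo_dir, check=True)
    subprocess.run(["git", "commit", "-m", message], cwd=repo_dir, check=True)


# Demonstrate only the file-writing step, in a throwaway directory.
with tempfile.TemporaryDirectory() as tmp:
    written = write_run_files(
        Path(tmp) / "runs" / "run-001",
        params={"learning_rate": 0.01, "batch_size": 64},
        metrics={"accuracy": 0.82},  # placeholder result
    )
    saved_params = json.loads(written[0].read_text())
    print([p.name for p in written], saved_params)

# Inside a real repository you would follow up with something like:
#   commit_run(".", written, "run-001: lr=0.01, bs=64")
```

Writing keys in sorted order keeps the diff between two runs limited to the values that actually changed, which is most of what makes a Git history useful for comparing experiments.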

Using Modern Experiment Tracking Tools

There are specialized software solutions designed to systematically record, organize, and compare data from machine learning experiments. Designed specifically for ML projects, they typically offer seamless integration with common models and frameworks.

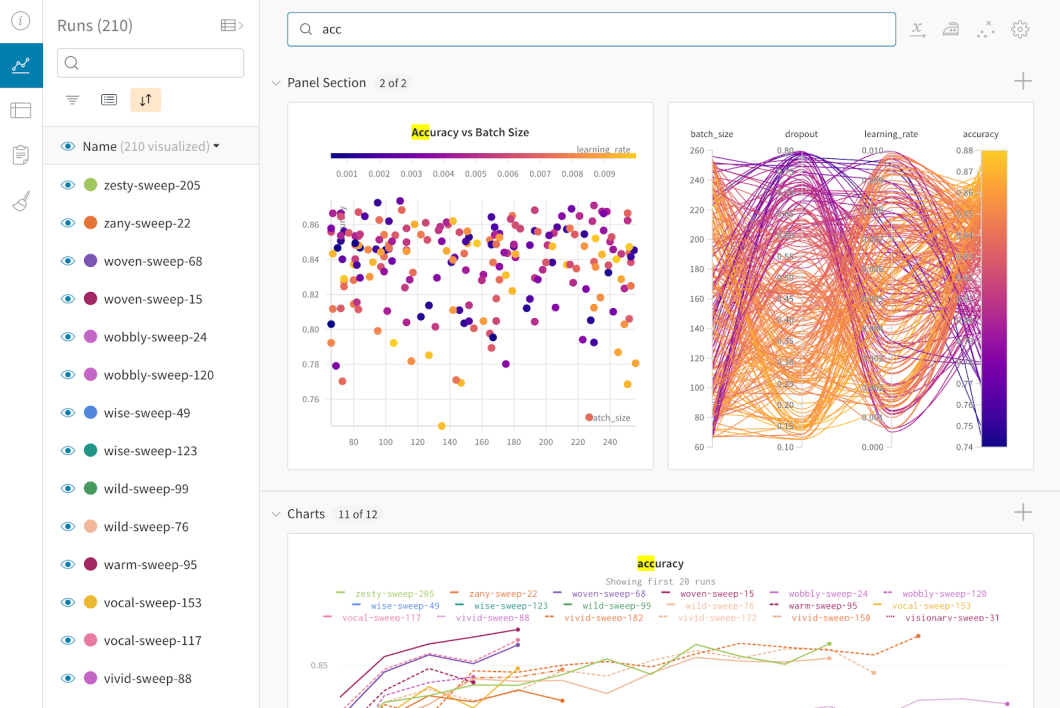

On top of tools to track and store data, they also offer a user interface for viewing and analyzing results. This includes the ability to visualize data and create custom reports. Developers can also typically leverage APIs for logging data to various systems and comparing different runs. Plus, they can monitor experiment progress in real time.

Built for ML models, they excel at tracking hyperparameters, evaluation metrics, model weights, and outputs. Their functionalities are well-suited to typical ML tasks.

Common experiment tracking tools include:

- MLflow

- CometML

- Neptune

- TensorBoard

- Weights & Biases
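To give a feel for how these tools are used from training code, here is a hedged sketch that logs through a small tracker interface. `InMemoryTracker` and `train_and_log` are hypothetical names written for this example, and the loss values are a placeholder formula, not real training output. The method names `log_params` and `log_metric` were chosen to mirror MLflow’s logging functions, so the `mlflow` module itself could be passed in where MLflow is installed.

```python
from typing import Any, Dict, List, Optional, Tuple


class InMemoryTracker:
    """Hypothetical stand-in for a tracking tool; keeps everything in memory."""

    def __init__(self) -> None:
        self.params: Dict[str, Any] = {}
        self.metrics: Dict[str, List[Tuple[Optional[int], float]]] = {}

    def log_params(self, params: Dict[str, Any]) -> None:
        self.params.update(params)

    def log_metric(self, key: str, value: float, step: Optional[int] = None) -> None:
        self.metrics.setdefault(key, []).append((step, value))


def train_and_log(tracker, params: Dict[str, Any], epochs: int) -> None:
    """Log hyperparameters once, then one loss value per epoch."""
    tracker.log_params(params)
    for epoch in range(epochs):
        loss = 1.0 / (epoch + 1)  # placeholder for a real training step
        tracker.log_metric("loss", loss, step=epoch)


tracker = InMemoryTracker()
train_and_log(tracker, {"learning_rate": 0.01, "batch_size": 64}, epochs=3)
print(tracker.params)
print(tracker.metrics["loss"])

# With MLflow installed, the module can act as the tracker:
#   import mlflow
#   with mlflow.start_run():
#       train_and_log(mlflow, {"learning_rate": 0.01, "batch_size": 64}, epochs=3)
```

Keeping the training loop dependent only on two logging calls makes it straightforward to swap one tool for another, or to test the loop without any tracking server running.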

The Viso Suite platform also offers robust experiment tracking through its model evaluation tools. You can gain comprehensive insights into the performance of your computer vision experiments. Its range of functionalities includes regression, classification, and detection analyses, semantic and instance segmentation, and so on.

You can use this information to identify anomalies, mine hard samples, and detect incorrect prediction patterns. Interactive plots and label rendering on images facilitate data understanding, augmenting your MLOps decision-making.

What’s Next With Experiment Tracking?

Experiment tracking is a key component of ML model development, allowing for the recording and analysis of metadata for each iteration. Integration with comprehensive MLOps practices enhances model lifecycle management and operational efficiency, meaning that organizations can drive continuous improvement and innovation in their ML initiatives.

As experiment tracking tools and methodologies evolve, we can expect to see the model development process change and improve as well.