While a medical student at UC San Francisco, Dereck Paul grew concerned that innovation in medical software was lagging behind other sectors, like finance and aerospace. He came to believe that patients are best served when doctors are equipped with software that reflects the cutting edge of tech, and dreamed of starting a company that’d prioritize the needs of patients and doctors over hospital administrators or insurance companies.

So Paul teamed up with Graham Ramsey, his friend and an engineer at Modern Fertility, the women’s health tech company, to launch Glass Health in 2021. Glass Health provides a notebook physicians can use to store, organize and share their approaches for diagnosing and treating conditions throughout their careers; Ramsey describes it as a “personal knowledge management system” for learning and practicing medicine.

“During the pandemic, Ramsey and I witnessed the overwhelming burdens on our healthcare system and the worsening crisis of healthcare provider burnout,” Paul said. “I experienced provider burnout firsthand as a medical student on hospital rotations and later as an internal medicine resident physician at Brigham and Women’s Hospital. Our empathy for frontline providers catalyzed us to create a company committed to fully leveraging technology to improve the practice of medicine.”

Glass Health gained early traction on social media, particularly X (formerly Twitter), among physicians, nurses and physicians-in-training, and this translated to the company’s first funding tranche, a $1.5 million pre-seed round led by Breyer Capital in 2022. Glass Health was then accepted into Y Combinator’s Winter 2023 batch. But early this year, Paul and Ramsey decided to pivot the company to generative AI, embracing the growing trend.

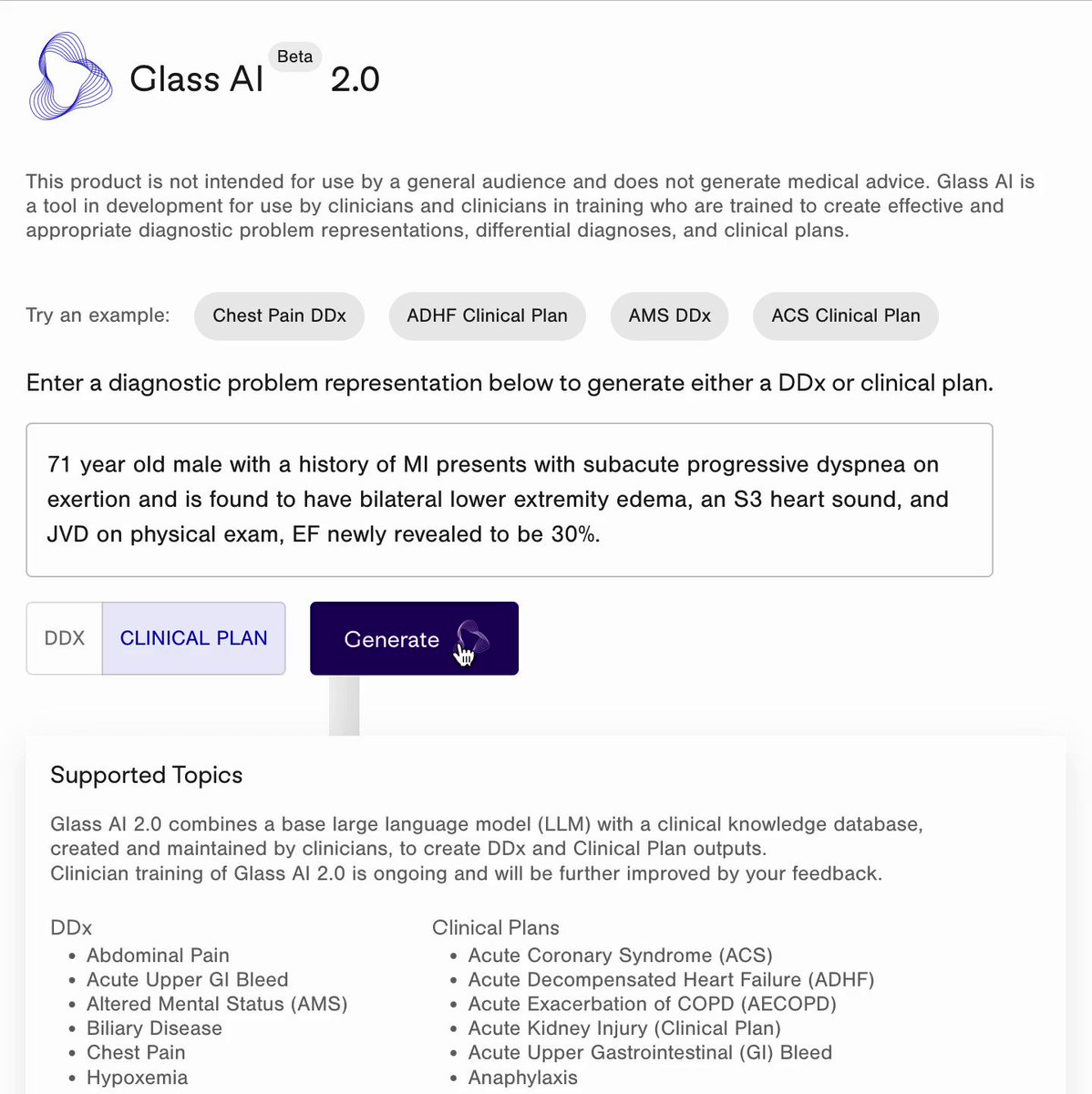

Glass Health now offers an AI tool powered by a large language model (LLM), tech similar to that behind OpenAI’s ChatGPT, to generate diagnoses and “evidence-based” treatment options to consider for patients. Physicians can type in descriptions like “71-year-old male with a history of myocardial infarction presents with subacute progressive dyspnea on exertion” or “65-year-old woman with a history of diabetes and hyperlipidemia presents with acute-onset chest pain and diaphoresis,” and Glass Health’s AI will provide a potential diagnosis and clinical plan.

“Clinicians enter a patient summary, also known as a problem representation, that describes the relevant demographics, past medical history, signs and symptoms and descriptions of laboratory and radiology findings related to a patient’s presentation, the information they might use to present a patient to another clinician,” Paul explained. “Glass analyzes the patient summary and recommends five to 10 diagnoses that the clinician may want to consider and further investigate.”

Glass Health can also draft a case assessment paragraph for clinicians to review, which includes explanations about possibly relevant diagnostic studies. These explanations can be edited and used for clinical notes and records down the line, or shared with the wider Glass Health community.

In theory, Glass Health’s tool seems incredibly useful. But even cutting-edge LLMs have proven to be exceptionally bad at giving health advice.

Babylon Health, an AI startup backed by the U.K.’s National Health Service, has found itself under repeated scrutiny for making claims that its disease-diagnosing tech can perform better than doctors.

In an ill-fated experiment, the National Eating Disorders Association (NEDA) launched a chatbot in partnership with AI startup Cass to provide support to people suffering from eating disorders. As a result of a generative AI systems upgrade, Cass began to parrot harmful “diet culture” suggestions like calorie restriction, leading NEDA to shut down the tool.

Elsewhere, Health News recently recruited a medical professional to evaluate the soundness of ChatGPT’s health advice on a wide range of subjects. The expert found that the chatbot missed recent studies, made misleading statements (like that “wine might prevent cancer” and that prostate cancer screenings should be based on “personal values”) and plagiarized content from several health news sources.

In a more charitable piece in Stat News, a research assistant and two Harvard professors found that ChatGPT listed the correct diagnosis (within the top three options) in 39 of 45 different vignettes. But the researchers caveated that the vignettes were the kind typically used to test medical students and might not reflect how people, particularly those for whom English is a second language, describe their symptoms in the real world.

It hasn’t been studied much. But I wonder if bias, too, could play a role in causing an LLM to incorrectly diagnose a patient. Because medical LLMs like Glass’ are often trained on health records, which only show what doctors and nurses notice (and only in patients who can afford to see them), they could have dangerous blind spots that aren’t immediately apparent. Moreover, doctors may unwittingly encode their own racial, gender or socioeconomic biases in writing the records LLMs train on, leading the models to prioritize certain demographics over others.

Paul seemed well aware of the scrutiny surrounding generative AI in medicine, and asserted that Glass Health’s AI is superior to many of the solutions already on the market.

Image Credits: Glass Health

“Glass connects LLMs with clinical guidelines that are created and peer-reviewed by our academic physician team,” he said. “Our physician team members are from major academic medical centers around the country and work part-time for Glass Health, like they would for a medical journal, creating guidelines for our AI and fine-tuning our AI’s ability to follow them … We ask our clinician users to supervise all of our LLM application’s outputs closely, treating it like an assistant that can offer helpful recommendations and options that they may consider but never directs or replaces their clinical judgment.”

In the course of our interview, Paul repeatedly emphasized that Glass Health’s AI, while focused on providing potential diagnoses, shouldn’t be interpreted as definitive or prescriptive in its answers. Here’s my guess as to the unspoken reason: If it were, Glass Health would be subject to greater legal scrutiny, and potentially even regulation by the FDA.

Paul isn’t the only one hedging. Google, which is testing a medical-focused language model called Med-PaLM 2, has been careful in its marketing materials to avoid suggesting that the model can supplant the experience of health professionals in a clinical setting. So has Hippocratic, a startup building an LLM specially tuned for healthcare applications (but not diagnosing).

Still, Paul argues that Glass Health’s approach gives it “fine control” over its AI’s outputs and guides its AI to “reflect state-of-the-art medical knowledge and guidelines.” Part of that approach involves collecting user data to improve Glass’ underlying LLMs, a move that might not sit well with every patient.

Paul says that users can request the deletion of all of their stored data at any time.

“Our LLM application retrieves physician-validated clinical guidelines as AI context at the time it generates outputs,” he said. “Glass is different from LLM applications like ChatGPT that rely solely on their pre-training to produce outputs, and can more easily produce medical information that is inaccurate or outdated … We have tight control over the information and guidelines used by our AI to create outputs and the ability to apply a rigorous editorial process to our guidelines that aims to address bias and align our recommendations with the goal of achieving health equity.”

We’ll see if that turns out to be the case.

In the meantime, Glass Health isn’t struggling to find early adopters. To date, the platform has signed up more than 59,000 users and already has a “direct-to-clinician” offering for a monthly subscription. This year, Glass will begin to pilot an electronic health record-integrated enterprise offering with HIPAA compliance; 15 unnamed health systems and companies are on the waitlist, Paul claims.

“Institutions like hospitals and health systems will be able to provide an instance of Glass to their doctors to empower their clinicians with AI-powered clinical decision support in the form of recommendations about diagnoses, diagnostic studies and treatment steps they can consider,” Paul said. “We’re also able to customize Glass AI outputs to a health system’s specific clinical guidelines or care delivery practices.”

With a total of $6.5 million in funding, Glass plans to spend on physician creation, review and updating of the clinical guidelines used by the platform, AI fine-tuning and general R&D. Paul claims that Glass has four years of runway.