Computer Vision (CV) models use training data to learn the relationship between input and output data. Training is an optimization process. Gradient descent (GD) is an optimization method driven by a cost function, which measures the difference between the predicted and the actual values.

CV models try to minimize this loss (cost) function, that is, to narrow the gap between the prediction and the actual output. To train a deep learning model, we provide annotated images. In each iteration, GD tries to lower the error and improve the model's accuracy. It repeats this process over many iterations until it reaches the desired target.

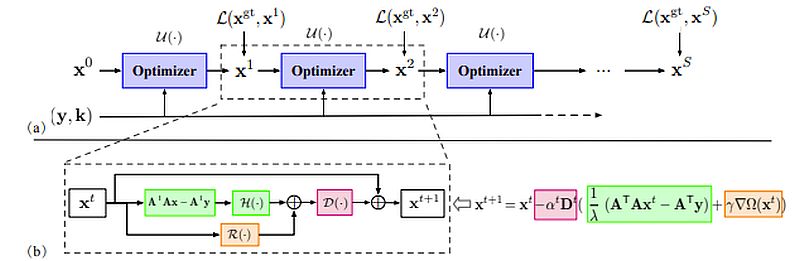

Dynamic Neural Networks use optimization methods to arrive at the target. They need an efficient way to get feedback on their success. Optimization algorithms create that feedback loop to help the model hit the target accurately.

For example, image classification models use an image's RGB values to produce classes with a confidence score. Training that network is about minimizing a loss function. The value of the loss function measures how far a network is from the target performance on a given dataset.

In this article, we elaborate on one of the most popular optimization methods in CV: Gradient Descent (GD).

What is Gradient Descent?

The best-known optimization method for minimizing a function is gradient descent. Like most optimization methods, it applies a gradual, iterative approach to solving the problem. The gradient points in the direction of the steepest ascent, so the negative gradient points in the direction of the steepest descent.

- Gradient descent starts from a randomly chosen point. Then it takes a series of steps in the direction of the negative gradient to get as close as possible to the solution.

- Researchers use gradient descent to update the parameters of computer vision models, e.g. the regression coefficients in linear regression and the weights in a neural network (NN).

- The method defines initial values for the parameters. Then it updates them iteratively in the direction that decreases the objective function. As a result, each update or iteration moves the model toward a lower value of the given cost function.

- Finally, it gradually converges toward a minimum of the given function.
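
The steps in the list above can be sketched in a few lines of Python. This is a minimal illustration rather than a production implementation: the objective f(x) = (x - 3)^2, the starting point, the step size, and the function name `gradient_descent` are all assumptions chosen for the example.

```python
def gradient_descent(grad, start, learning_rate=0.1, n_steps=100):
    """Repeatedly step against the gradient, starting from `start`."""
    x = start
    for _ in range(n_steps):
        # Move in the direction of the negative gradient (steepest descent).
        x = x - learning_rate * grad(x)
    return x


# Illustrative objective f(x) = (x - 3)^2, whose gradient is 2 * (x - 3).
minimum = gradient_descent(lambda x: 2 * (x - 3), start=10.0)
print(minimum)  # very close to 3, the minimizer of f
```

Because this objective is convex with a single minimum, the starting point does not matter here. For the non-convex losses of neural networks, it can.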

We can illustrate this with dog training. The training is gradual, with positive reinforcement whenever the dog reaches a specific goal. We start by getting its attention and giving it a treat when it looks at us.

With that reinforcement (the treat signals that it did the right thing), the dog will continue to follow our instructions. We can then keep rewarding it as it moves toward the desired goal.

How does Gradient Descent Work?

As mentioned above, we can think of the gradient as the slope of a function. It is the set of the function's partial derivatives with respect to all of its variables. It denotes the steepness of the slope and points in the direction in which the function increases fastest. Its negative points in the direction in which the function decreases fastest.
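
To make "a set of partial derivatives" concrete, the sketch below approximates the gradient numerically with central differences. The helper `numerical_gradient` and the test function `bowl` are hypothetical names introduced only for this illustration. In practice, deep learning libraries compute gradients by automatic differentiation instead.

```python
def numerical_gradient(f, point, eps=1e-6):
    """Approximate each partial derivative of f at `point` by central differences."""
    grad = []
    for i in range(len(point)):
        forward = list(point)
        backward = list(point)
        forward[i] += eps   # nudge only the i-th variable up
        backward[i] -= eps  # and down
        grad.append((f(forward) - f(backward)) / (2 * eps))
    return grad


def bowl(p):
    """Illustrative function f(x, y) = x^2 + 3 * y^2."""
    return p[0] ** 2 + 3 * p[1] ** 2


g = numerical_gradient(bowl, [1.0, 2.0])
print(g)  # close to the analytic gradient [2x, 6y] = [2, 12]
```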

We can illustrate the gradient by visualizing a mountain with two peaks and a valley. A blind man stands at one peak and needs to navigate to the bottom. He doesn't know which direction to choose, but he gets some reinforcement whenever he takes a correct path. He moves down and gets reinforcement for every correct step, so he continues to move down until he reaches the bottom.

The learning rate is an important parameter in CV optimization. It determines how far the parameters move in each iteration, that is, how strongly each update adjusts the result of the previous iteration.

In the mountain example, this would be the size of each step the person takes down the mountain. At first, he may take large steps. He would descend quickly but might overshoot and go up the other side of the mountain.

Learning Rate in Gradient Descent

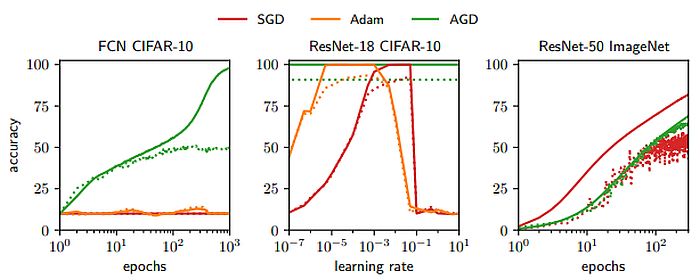

Gradient descent is an iterative optimization algorithm that finds a local minimum of a function. In real-world applications, a relatively low learning rate is usually the safer choice. Ideally, the learning rate decreases as the steps progress downhill.

That way, the person can reach the goal without going back. For this reason, the learning rate should be neither too high nor too low.
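
One way to let the learning rate shrink over time is a decay schedule. The sketch below uses inverse-time decay, which is only one of several common schedules and is an assumption here, not a method prescribed by this article. The function name `decayed_learning_rate`, the constants, and the objective f(x) = x^2 are likewise illustrative.

```python
def decayed_learning_rate(initial_rate, decay, step):
    """Inverse-time decay: large steps at first, smaller steps later."""
    return initial_rate / (1.0 + decay * step)


x = 10.0
for step in range(200):
    lr = decayed_learning_rate(initial_rate=0.4, decay=0.05, step=step)
    x = x - lr * 2 * x  # gradient of f(x) = x^2 is 2x

print(decayed_learning_rate(0.4, 0.05, 0))    # rate at the first step
print(decayed_learning_rate(0.4, 0.05, 199))  # much smaller rate at the last step
print(x)                                      # very close to the minimum at 0
```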

Gradient descent calculates the next position from the gradient at the current position. We scale the current gradient by the learning rate and subtract the result from the current position (taking a step). The learning rate has a strong impact on performance:

- A low learning rate means that GD converges more slowly, or may reach the final iteration before reaching the optimum point.

- A high learning rate means the machine learning algorithm may not converge to the optimal point. It may overshoot the minimum, settle in a poor local minimum, or even diverge completely.
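
The effect of the step size is easy to reproduce on a toy problem. The sketch below runs the same update rule on f(x) = x^2 with three learning rates. The specific values 0.001, 0.1, and 1.1 and the helper name `run` are assumptions chosen to make the three regimes visible.

```python
def run(learning_rate, n_steps=20, start=5.0):
    """Apply x <- x - learning_rate * f'(x) for f(x) = x^2 and return the final x."""
    x = start
    for _ in range(n_steps):
        x = x - learning_rate * 2 * x
    return x


too_low = run(0.001)    # barely moves away from the start within 20 steps
reasonable = run(0.1)   # gets close to the minimum at 0
too_high = run(1.1)     # every step overshoots, so |x| grows: divergence

print(too_low, reasonable, too_high)
```

With the highest rate, each step lands further from the minimum than the previous one, which is the overshooting described in the mountain example.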

Issues with Gradient Descent

Complex structures such as neural networks involve non-linear transformations in the hypothesis function. Their loss function may therefore not be a convex function with a single minimum. The gradient is zero at every local minimum as well as at the global minimum, which is the lowest point across the entire domain.

If gradient descent arrives at a local minimum, it will be difficult for it to escape that point. There are also saddle points, where the function has a minimum in one direction and a local maximum in another. They give the illusion of converging to a minimum.
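
A small example shows why saddle points are deceptive. The function f(x, y) = x^2 - y^2 is a textbook saddle chosen here for illustration, and the names `saddle_gradient` and `descend` are hypothetical. It has a minimum along x and a maximum along y at the origin, where the gradient is zero.

```python
def saddle_gradient(x, y):
    """Gradient of f(x, y) = x^2 - y^2."""
    return 2 * x, -2 * y


def descend(x, y, learning_rate=0.1, n_steps=50):
    for _ in range(n_steps):
        gx, gy = saddle_gradient(x, y)
        x, y = x - learning_rate * gx, y - learning_rate * gy
    return x, y


stuck = descend(1.0, 0.0)      # starts exactly on the x-axis
escaped = descend(1.0, 1e-3)   # same start with a tiny offset in y

print(stuck)    # near (0, 0): looks converged, but this is the saddle point
print(escaped)  # y has grown large: the iterate has left the saddle
```

This toy function is unbounded below, so "escaping" here only means leaving the saddle. In a real loss landscape, the iterate would continue toward a lower region.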

It is important to overcome these gradient descent challenges:

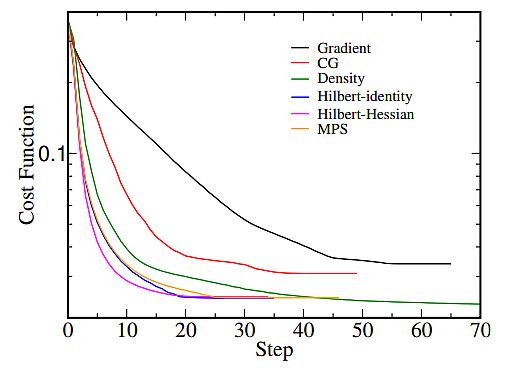

- Make sure that gradient descent runs properly by plotting the cost function during the optimization process. The number of iterations goes on the x-axis, and the value of the cost function goes on the y-axis.

- By plotting the cost function's value after each iteration of gradient descent, you can estimate how good your learning rate is.

- If gradient descent works well, the cost function should decrease after every iteration.

- When gradient descent no longer decreases the cost function (it stays at approximately the same level), it has converged.

- To converge, gradient descent may need 50 iterations, 50,000, or even up to 1,000,000, so the number of iterations to convergence is not easy to estimate.

Monitoring gradient descent on plots lets you determine when it is not working properly, namely when the cost function is increasing. Usually, the reason for an increasing cost function is a learning rate that is too large.
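
The monitoring idea can be sketched without a plotting library by recording the cost at every iteration and inspecting the resulting list. The helper names `cost_history` and `diagnose`, the tolerance, and the toy objective f(x) = x^2 are assumptions made for this example. The same list could be passed to any plotting tool to draw the curve described above.

```python
def cost_history(learning_rate, n_steps=30, start=5.0):
    """Run gradient descent on f(x) = x^2 and record the cost at each iteration."""
    x, history = start, []
    for _ in range(n_steps):
        history.append(x ** 2)
        x = x - learning_rate * 2 * x
    return history


def diagnose(history, tolerance=1e-6):
    """Rough reading of a cost curve: increasing, flat (converged), or still decreasing."""
    if history[-1] > history[0]:
        return "increasing"
    if abs(history[-2] - history[-1]) < tolerance:
        return "converged"
    return "decreasing"


print(diagnose(cost_history(1.1)))  # learning rate too large
print(diagnose(cost_history(0.4)))  # cost has flattened out
print(diagnose(cost_history(0.1)))  # still improving after 30 iterations
```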

Types of Gradient Descent

Based on the amount of data the algorithm uses for each update, there are 3 types of gradient descent:

Stochastic Gradient Descent

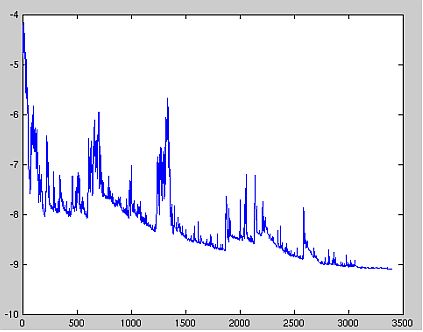

Stochastic gradient descent (SGD) updates the parameters for each training example, one after another. In some scenarios, SGD is faster than the other methods.

An advantage is that the frequent updates provide a rather detailed picture of the rate of improvement. However, performing one update per example can be computationally expensive. Also, the frequency of the updates results in noisy gradients, which can cause the error rate to fluctuate instead of decreasing steadily.

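    import numpy as np

    # Pseudocode: evaluate_gradient, loss_function, data, and params are placeholders
    for i in range(nb_epochs):
        np.random.shuffle(data)  # visit the examples in a new random order every epoch
        for example in data:
            # gradient of the loss for a single training example
            params_grad = evaluate_gradient(loss_function, example, params)
            params = params - learning_rate * params_grad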

Batch Gradient Descent

Batch gradient descent (BGD) calculates the error for each example in the training dataset, but it updates the parameters only once per training epoch, after all examples have been evaluated. It is computationally efficient and produces a stable error gradient and stable convergence. A drawback is that this stable error gradient can converge to a point that isn't the best the model can achieve. It also requires loading the whole training set into memory.

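    # Pseudocode: evaluate_gradient, loss_function, data, and params are placeholders
    for i in range(nb_epochs):
        # gradient of the loss over the entire training set
        params_grad = evaluate_gradient(loss_function, data, params)
        params = params - learning_rate * params_grad  # one update per epoch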

Mini-Batch Gradient Descent

Mini-batch gradient descent is a combination of the SGD and BGD algorithms. It divides the training dataset into small batches and performs an update for each of these batches. This combines the efficiency of BGD with the robustness of SGD.

Typical mini-batch sizes are around 100, but they may vary between applications. It is the preferred algorithm for training a neural network and the most common type of GD in deep learning.

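    import numpy as np

    # Pseudocode: evaluate_gradient, get_batches, loss_function, data, and params are placeholders
    for i in range(nb_epochs):
        np.random.shuffle(data)  # reshuffle so the batches differ between epochs
        for batch in get_batches(data, batch_size=50):
            # gradient of the loss over one mini-batch
            params_grad = evaluate_gradient(loss_function, batch, params)
            params = params - learning_rate * params_grad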
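
The pseudocode above leaves its helpers undefined. As a runnable sketch under stated assumptions, the example below fits a line y = w * x + b with mini-batch gradient descent using only the standard library. The helper `get_batches` is one possible implementation. `mse_gradient` stands in for `evaluate_gradient` with a simplified signature, and the synthetic data, batch size, and learning rate are made up for illustration.

```python
import random


def get_batches(data, batch_size):
    """Yield consecutive slices of `data` with at most `batch_size` items each."""
    for start in range(0, len(data), batch_size):
        yield data[start:start + batch_size]


def mse_gradient(batch, params):
    """Gradient of the mean squared error of y = w * x + b over one batch."""
    w, b = params
    n = len(batch)
    grad_w = sum(2 * (w * x + b - y) * x for x, y in batch) / n
    grad_b = sum(2 * (w * x + b - y) for x, y in batch) / n
    return grad_w, grad_b


random.seed(0)
# Synthetic, noise-free data drawn from y = 2x + 1 (illustrative only).
data = [(i / 100, 2 * (i / 100) + 1) for i in range(100)]
params = (0.0, 0.0)
learning_rate = 0.1

for epoch in range(200):
    random.shuffle(data)
    for batch in get_batches(data, batch_size=10):
        grad_w, grad_b = mse_gradient(batch, params)
        params = (params[0] - learning_rate * grad_w,
                  params[1] - learning_rate * grad_b)

w, b = params
print(w, b)  # close to the true slope 2 and intercept 1
```

Setting `batch_size` to 1 turns this loop into stochastic gradient descent, and setting it to `len(data)` turns it into batch gradient descent.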

What's Next?

Developers rarely need to implement gradient descent themselves. Model libraries such as TensorFlow and PyTorch already implement the gradient descent algorithm. Still, it is helpful to understand the concepts and how they work.

CV platforms such as Viso Suite can simplify this aspect for developers even further. Developers don't have to deal with large amounts of code. They can quickly annotate the data and focus on the real value of their application. CV platforms reduce the complexity of computer vision and perform many of the manual steps that are difficult and time-consuming.