Graph Neural Networks (GNNs) are a type of neural network designed to operate directly on graphs, a data structure consisting of nodes (vertices) and edges connecting them. GNNs have revolutionized how we analyze and use data that is structured in the form of a graph. Whenever you hear about groundbreaking discoveries in fields like drug development, social media analysis, or fraud detection, there’s a good chance that GNNs are playing a part behind the scenes.

In this article, we’ll start with a gentle introduction to Graph Neural Networks and follow with a comprehensive technical deep dive.

Prediction Tasks Performed by GNNs

The primary goal of GNNs is to learn a representation (embedding) of the graph structure, in which the GNN captures both the properties of the nodes (what each node contains) and the topology of the graph (how these nodes are connected).

These representations can then be used for various tasks such as node classification (determining the label of a node), link prediction (predicting the existence of an edge between two nodes), and graph classification (classifying entire graphs).

For example, GNNs are widely used in social network apps such as Facebook. There, GNNs can predict user behavior based not only on a user’s profile but also on their friends’ activities and social circles. Here is what the GNN does:

- Node-level prediction: Predicting the category of a node (e.g., does this person eat burgers? The prediction is based on the kind of friends the person has or the activities the person does).

- Edge-level prediction: Predicting the likelihood of a connection between two nodes (e.g., suggesting new friends in a social network or which Netflix video to play next).

- Graph-level prediction: Classifying the entire graph based on its structure and node properties (e.g., deciding whether a new molecule is a suitable drug, or predicting the aroma of a new molecule).

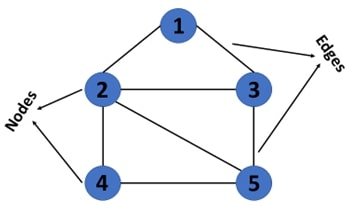

Understanding the Basics of Graphs

To understand Graph Neural Networks (GNNs), we need to learn about their most basic element, the graph. A graph data structure represents and organizes not just the data points but also the relationships between them.

Vertices and Edges

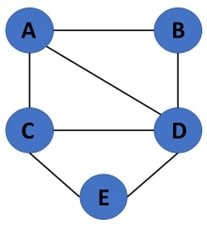

A graph is a set of points (nodes) connected by lines (edges). Vertices represent entities, objects, or concepts, while edges represent relationships or connections between them.

In the context of a social network, a node can be a person, and an edge can be the type of relationship (for example, one person follows another).

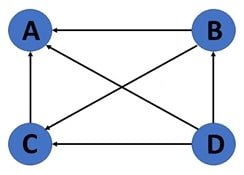

Directed vs Undirected Graphs

In a directed graph, edges have a direction, indicating the flow of the relationship. In the context of a social network, the direction can be who follows whom (you might be following person A, but person A doesn’t follow you back).

In an undirected graph, edges do not have a direction; they simply indicate a connection between two vertices. For example, on Facebook, if you accept a friend request, you can see their posts and they can see yours. The relationship is mutual.

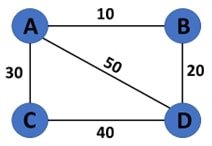

Weighted Graph

In a weighted graph, each edge has a weight associated with it.

What Is Graph Representation?

Graph representation is a way to encode graph structure and features for processing by neural networks. Graphs hold the nodes’ data as well as the relationships between the data points. To represent these connections between the nodes, a graph representation is needed.

Here are some of the most used graph representations for deep learning.

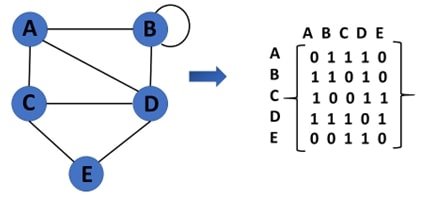

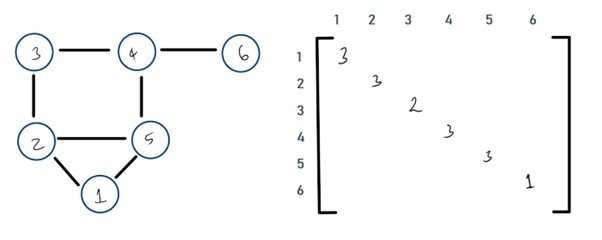

Adjacency Matrix

This is a matrix that records, for each vertex, which other vertices are connected to it. For example, here node A has nodes B and C connected to it, so the corresponding entries in the matrix are 1. When a connection does not exist, the entry is 0.
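
As a minimal sketch of the idea, the snippet below builds an adjacency matrix with NumPy. The four-node graph, the node names, and the helper `adjacency_matrix` are assumptions made for illustration (only the fact that A is connected to B and C comes from the text). It also shows that an undirected graph gives a symmetric matrix, while a directed one generally does not.

```python
import numpy as np

# Hypothetical four-node graph; A is connected to B and C, as in the text.
NODES = ["A", "B", "C", "D"]
EDGES = [("A", "B"), ("A", "C"), ("B", "C"), ("C", "D")]


def adjacency_matrix(nodes, edges, directed=False):
    """Return an N x N matrix with 1 where an edge exists and 0 elsewhere."""
    index = {name: i for i, name in enumerate(nodes)}
    adj = np.zeros((len(nodes), len(nodes)), dtype=int)
    for src, dst in edges:
        adj[index[src], index[dst]] = 1
        if not directed:
            # An undirected edge is visible from both endpoints.
            adj[index[dst], index[src]] = 1
    return adj


undirected = adjacency_matrix(NODES, EDGES)
directed = adjacency_matrix(NODES, EDGES, directed=True)

print(undirected)
print("row for A:", undirected[0])
print("undirected is symmetric:", bool((undirected == undirected.T).all()))
print("directed is symmetric:", bool((directed == directed.T).all()))
```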

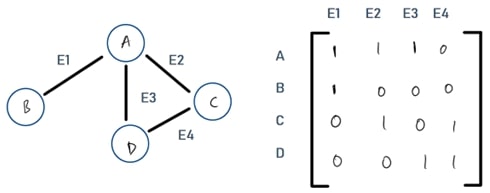

Incidence Matrix

A matrix of size N×M, where N is the number of nodes and M is the number of edges of the graph. In simple terms, it is used to represent the graph in matrix form. The value is 1 if the node has a particular edge, and 0 if it does not. For example, A has edge E1 connected to it, so the corresponding entry in the incidence matrix is 1, whereas node A does not have edge E4 connected to it, so that entry is 0.
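
The same construction can be sketched in a few lines of NumPy. The edge list and the helper `incidence_matrix` are hypothetical, chosen only so that A touches E1 but not E4, as in the example above.

```python
import numpy as np

# Hypothetical graph: edge names map to the pair of nodes they join.
NODES = ["A", "B", "C", "D"]
EDGES = {"E1": ("A", "B"), "E2": ("A", "C"), "E3": ("B", "C"), "E4": ("C", "D")}


def incidence_matrix(nodes, edges):
    """Return an N x M matrix: entry (node, edge) is 1 if the edge touches the node."""
    row = {name: i for i, name in enumerate(nodes)}
    inc = np.zeros((len(nodes), len(edges)), dtype=int)
    for col, (u, v) in enumerate(edges.values()):
        inc[row[u], col] = 1
        inc[row[v], col] = 1
    return inc


incidence = incidence_matrix(NODES, EDGES)
print(incidence)
print("A touches E1:", bool(incidence[0, 0]), "| A touches E4:", bool(incidence[0, 3]))
```

In an undirected graph without self-loops, every column sums to 2 because each edge has exactly two endpoints.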

Degree Matrix

A diagonal matrix that contains the number of edges attached to each node.
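
For an undirected graph, the degree matrix can be derived from the adjacency matrix by summing each row. The sketch below assumes a small hypothetical graph (edges A-B, A-C, B-C, C-D) written out directly as an adjacency matrix.

```python
import numpy as np

# Adjacency matrix of a hypothetical undirected graph with nodes A, B, C, D.
adj = np.array([
    [0, 1, 1, 0],  # A: connected to B, C
    [1, 0, 1, 0],  # B: connected to A, C
    [1, 1, 0, 1],  # C: connected to A, B, D
    [0, 0, 1, 0],  # D: connected to C
])

degrees = adj.sum(axis=1)        # number of edges attached to each node
degree_matrix = np.diag(degrees)  # degrees on the diagonal, zeros elsewhere

print(degree_matrix)
```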

Unique Characteristics of Graph Data

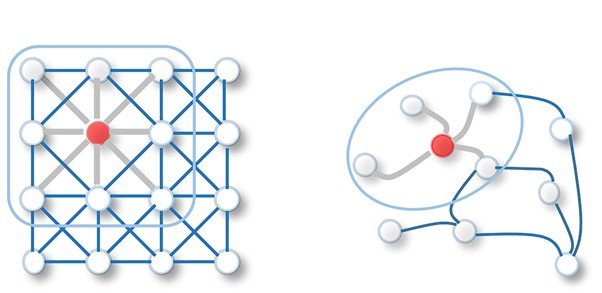

The graph data structure models real-world data efficiently thanks to its special characteristics. This is what makes graphs different from the matrices used in Convolutional Neural Networks (CNNs).

GNNs were developed to work on the graph data structure. Before diving into GNNs, let’s see what these unique characteristics of graphs are:

- Connections: Unlike tabular data, where entities exist in isolation, graph data revolves around connections between entities (nodes). These connections, represented by edges, hold crucial information that makes the data valuable and is central to understanding the system.

- No Structure: Traditional data resides in ordered grids or tables. Graphs, however, have no inherent order, and their structure is dynamic (the nodes are not laid out in any fixed arrangement, and that arrangement can change). This is what makes graphs useful for modeling dynamic real-world data.

- Heterogeneity: In a graph, points (nodes) and connections (edges) can stand for different things like people, proteins, or transactions, each with its own characteristics.

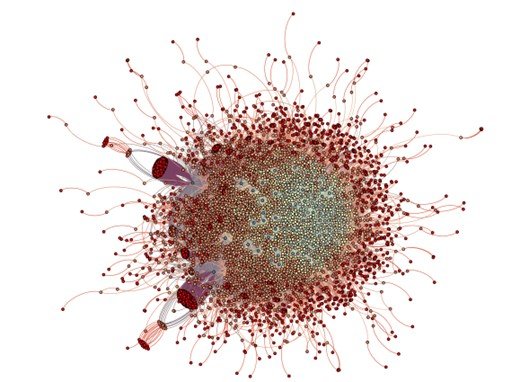

- Scalability: Graphs can become very large, including complex networks with millions of connections and points, which makes them difficult to store and analyze.

Graph Neural Networks vs Neural Networks

To better understand Graph Neural Networks (GNNs), it’s important to first understand how they differ from traditional Neural Networks (NNs). Starting with NNs as a foundation, we can then explore how GNNs build on this to handle graph-structured data, making the concept clearer and more approachable.

Here are some of the differences between NNs and GNNs.

Data Structure

- Neural Networks (NNs): Traditional neural networks, including variants like Convolutional Neural Networks (CNNs) and Recurrent Neural Networks (RNNs), are primarily designed for grid-like data structures. This includes images (2D grids of pixels) for CNNs and sequential data (time series or text) for RNNs.

- Graph Neural Networks (GNNs): GNNs are specifically designed to handle graph data. Graphs store not only data points but also the complex relationships and interconnections between them.

Data Representation

- NNs: Data fed into traditional neural networks needs to be structured, such as vectors for fully connected layers, multi-dimensional arrays for CNNs (e.g., images), or sequences for RNNs.

- GNNs: Input data for GNNs is in the form of graphs, where each node can have its own features (attribute vectors), and edges can also have features representing the relationship between nodes.

Operation

- NNs: Operations in neural networks involve matrix multiplications, convolution operations, and element-wise operations, applied in a structured manner across layers.

- GNNs: GNNs operate by aggregating features from a node’s neighbors through a process called message passing. This involves aggregating the current node’s features with the neighbor nodes’ features (read more on this operation below).

Learning Task

- NNs: Traditional neural networks are well-suited for tasks like image classification, object detection (CNNs), and sequence prediction or language modeling (RNNs).

- GNNs: GNNs excel at tasks that require understanding the relationships and interdependencies between data points, such as node classification, link prediction, and graph classification.

Interpretability

Interpretability is the ability to understand the inner workings of a model and how it makes its decisions or predictions.

- Neural Networks (NNs): While NNs can learn complex patterns in data, interpreting these patterns and how they relate to the structure of the data can be challenging.

- Graph Neural Networks (GNNs): GNNs, by operating directly on graphs, offer insights into how relationships and structure in the data contribute to the learning task. This is extremely valuable in domains such as drug discovery or social network analysis.

Types of GNNs

Graph Neural Networks (GNNs) have evolved into various architectures to address different challenges and applications. Here are a few of them.

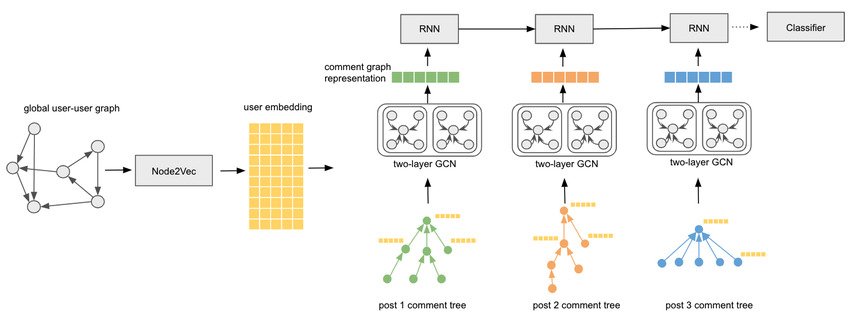

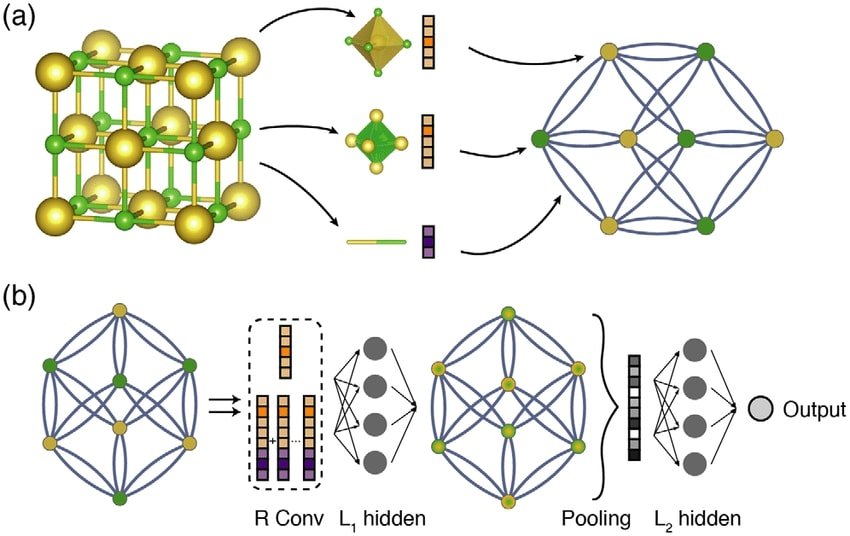

Graph Convolutional Network (GCN)

The most popular and also a foundational type of GNN. The key idea behind GCNs is to update the representation of a node by aggregating and transforming the features of its neighboring nodes and itself (inspired by CNNs). This aggregation mechanism enables GCNs to capture the local graph structure around each node. GCNs are widely used for node classification, graph classification, and other tasks where understanding the local structure is crucial.
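
To make the "aggregate and transform" idea concrete, here is a minimal NumPy sketch of a single graph-convolution step. It assumes one common formulation, the symmetrically normalised rule from Kipf and Welling's GCN paper, `ReLU(D^-1/2 (A + I) D^-1/2 H W)`. The graph, the random features, and the untrained random weights are placeholders, so this illustrates the data flow rather than a trained model.

```python
import numpy as np


def gcn_layer(adj, features, weight):
    """One graph-convolution step: add self-loops, normalise, aggregate, transform."""
    a_hat = adj + np.eye(adj.shape[0])            # each node also keeps its own features
    deg = a_hat.sum(axis=1)                       # degrees including the self-loop
    d_inv_sqrt = np.diag(deg ** -0.5)
    norm_adj = d_inv_sqrt @ a_hat @ d_inv_sqrt    # symmetric normalisation
    return np.maximum(norm_adj @ features @ weight, 0.0)  # ReLU activation


# Hypothetical graph with nodes A, B, C, D (edges A-B, A-C, B-C, C-D).
adj = np.array([
    [0, 1, 1, 0],
    [1, 0, 1, 0],
    [1, 1, 0, 1],
    [0, 0, 1, 0],
], dtype=float)

rng = np.random.default_rng(0)
features = rng.normal(size=(4, 3))   # 3 input features per node (placeholder values)
weight = rng.normal(size=(3, 2))     # untrained weights mapping 3 -> 2 features

hidden = gcn_layer(adj, features, weight)
print(hidden.shape)
```

Each output row mixes a node's own features with those of its direct neighbors, which is why a single layer only captures the local structure around each node.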

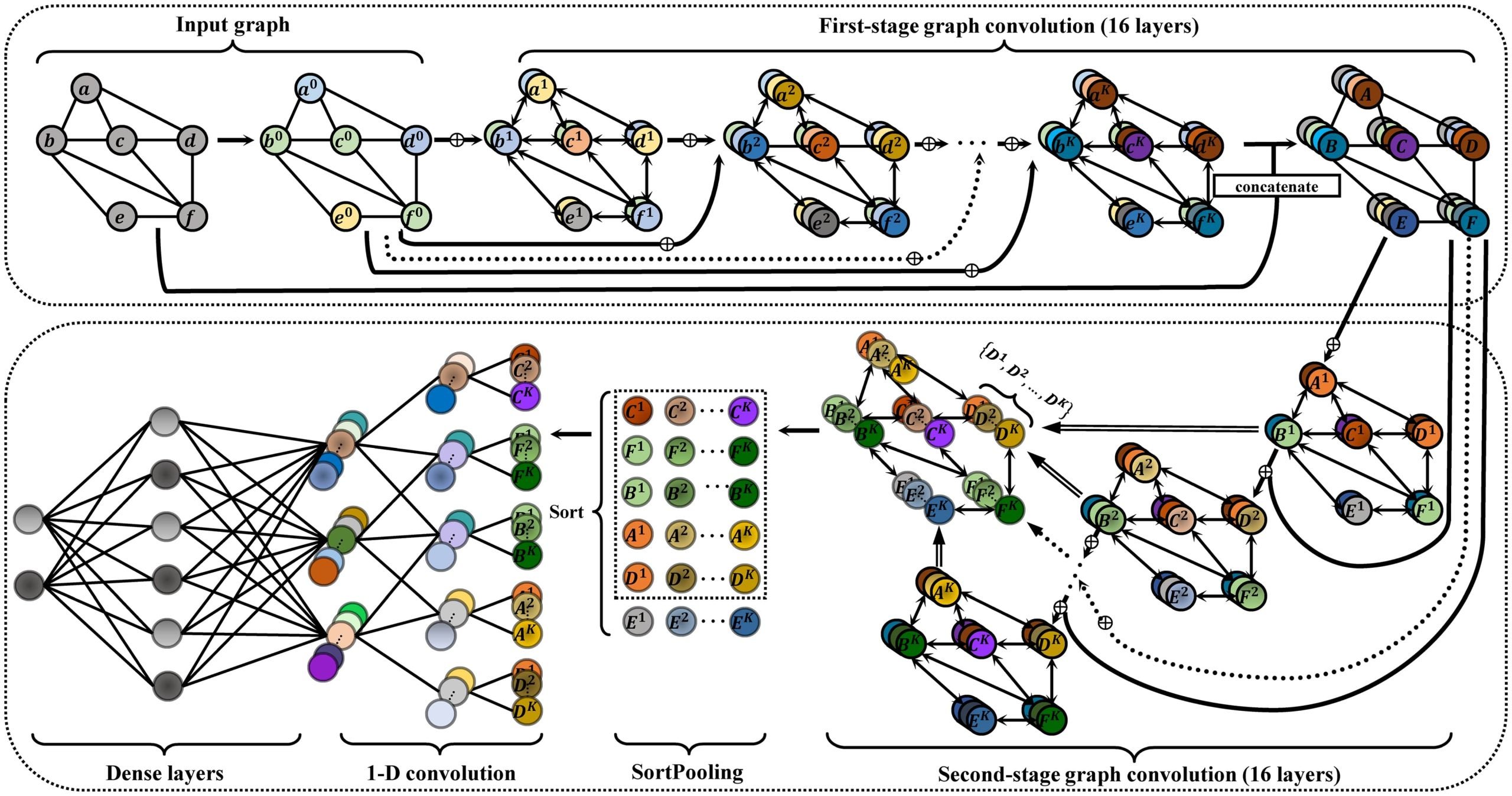

Deep Graph Convolutional Neural Network II (DGCNNII)

This architecture uses a deep graph convolutional neural network for graph classification and improves upon GCN. It is based on a non-local message-passing framework and a spatial graph convolution layer. It has been shown to outperform many other graph neural network methods on graph classification tasks.

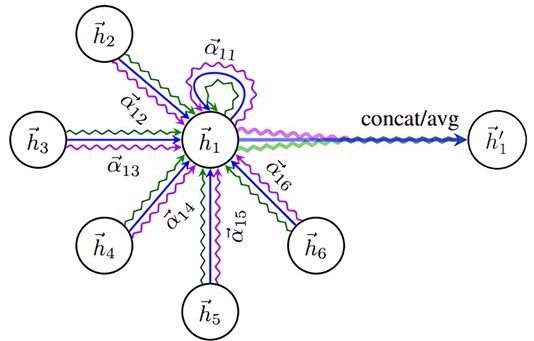

Graph Attention Networks (GATs)

GATs introduce attention mechanisms to the aggregation step in GNNs (adding weights to the aggregation step).

In GATs, the importance of each neighbor’s features is dynamically weighted (the different colors of arrows in the image) during the aggregation process, allowing the model to focus more on relevant neighbors for each node. This technique is helpful in graphs where not all connections are equally important or where some nodes overpower the rest (e.g., an influencer with millions of followers), and it can lead to more expressive node representations. GATs are particularly useful for tasks that benefit from distinguishing the significance of different edges, such as recommendation systems or social network analysis.
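
The weighting described above can be sketched with a single attention head in NumPy. This is a simplified illustration under stated assumptions: scores follow the form `LeakyReLU(a · [W h_i || W h_j])` used in the original GAT formulation, the parameters `weight` and `attn_vec` are random and untrained, and the graph is a hypothetical four-node example.

```python
import numpy as np


def leaky_relu(x, slope=0.2):
    return np.where(x > 0, x, slope * x)


def attention_aggregate(adj, features, weight, attn_vec):
    """Single-head attention aggregation over each node's neighbors (and itself)."""
    n = adj.shape[0]
    h = features @ weight                         # transformed features, shape (N, F_out)
    f_out = h.shape[1]
    src = h @ attn_vec[:f_out]                    # contribution of the receiving node i
    dst = h @ attn_vec[f_out:]                    # contribution of the sending node j
    scores = leaky_relu(src[:, None] + dst[None, :])
    mask = (adj + np.eye(n)) > 0                  # only neighbors and the node itself
    scores = np.where(mask, scores, -np.inf)
    scores = scores - scores.max(axis=1, keepdims=True)  # numerically stable softmax
    exp = np.exp(scores)
    alpha = exp / exp.sum(axis=1, keepdims=True)  # attention weights, rows sum to 1
    return alpha @ h, alpha


adj = np.array([
    [0, 1, 1, 0],
    [1, 0, 1, 0],
    [1, 1, 0, 1],
    [0, 0, 1, 0],
], dtype=float)

rng = np.random.default_rng(0)
features = rng.normal(size=(4, 3))
weight = rng.normal(size=(3, 2))      # untrained placeholder parameters
attn_vec = rng.normal(size=(4,))      # length 2 * F_out

out, alpha = attention_aggregate(adj, features, weight, attn_vec)
print(np.round(alpha, 3))
```

Unlike plain averaging, each row of `alpha` can put very different weights on different neighbors, and non-neighbors always receive a weight of zero.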

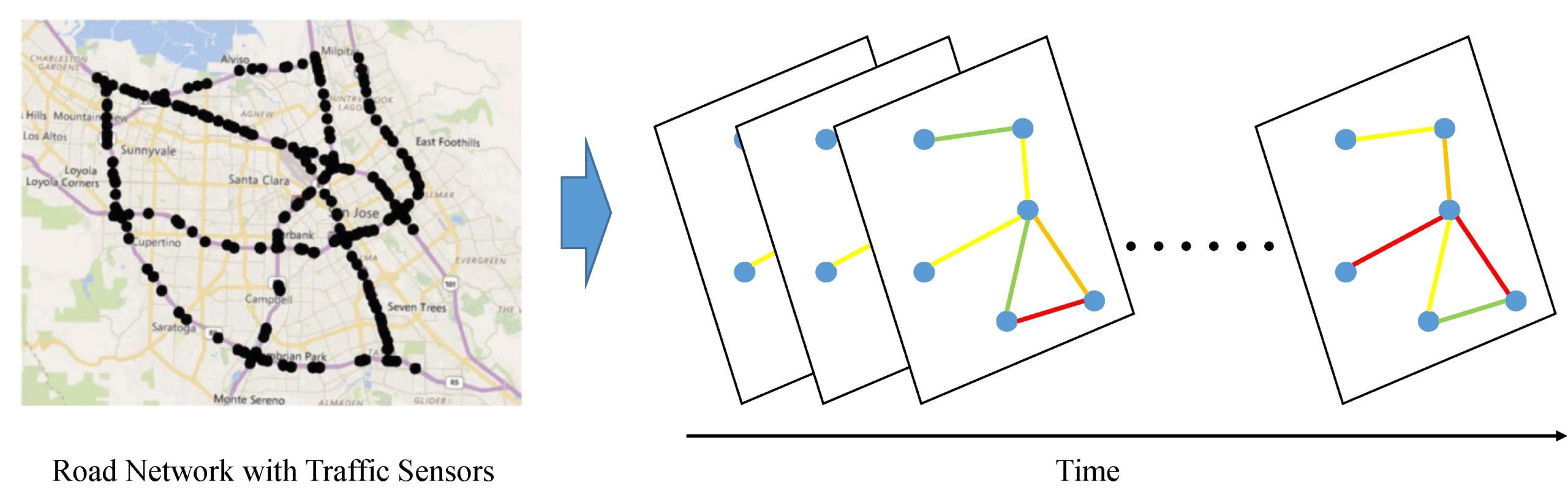

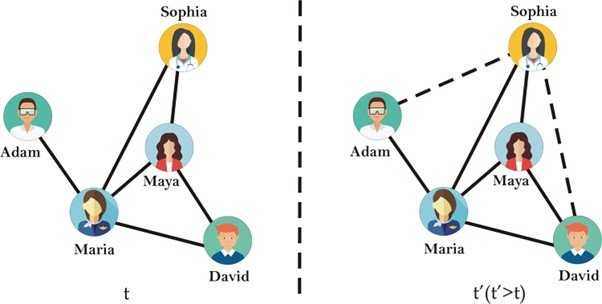

Graph Recurrent Networks (GRNs)

GRNs combine the principles of recurrent neural networks (RNNs) with graph neural networks. In GRNs, node features are updated through recurrent mechanisms, allowing the model to capture dynamic changes in graph-structured data over time. Example applications include traffic prediction in road networks and analyzing time-evolving social networks.

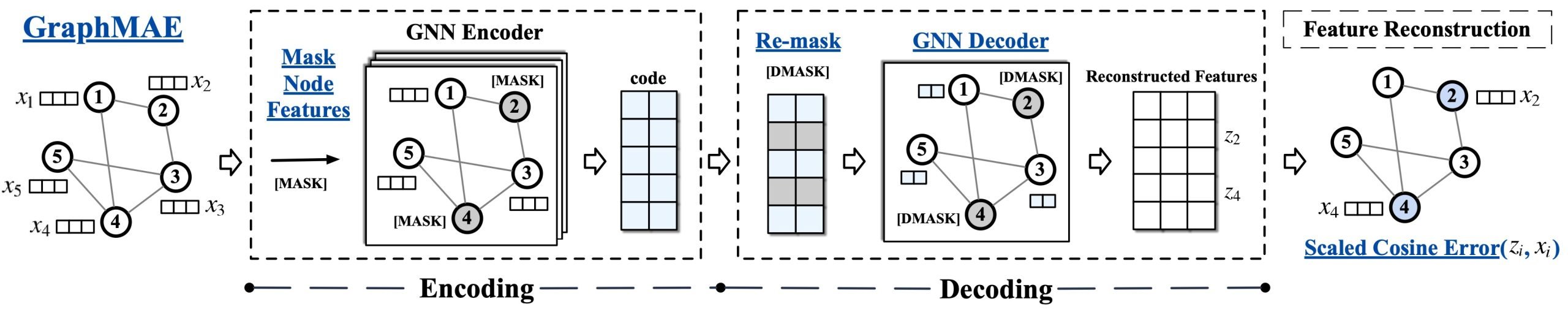

Graph Autoencoders (GAEs)

GAEs are designed for unsupervised learning tasks on graphs. A GAE learns to encode the graph (or subgraphs/nodes) into a lower-dimensional space (embedding) and then reconstructs the graph structure from these embeddings. The goal is to learn representations that capture the essential structural and feature information of the graph. GAEs are particularly useful for tasks such as link prediction, clustering, and anomaly detection in graphs, where explicit labels are not available.
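
The encode-then-reconstruct loop can be sketched as follows. This is a forward pass only, under several assumptions: the encoder is a single mean-aggregation layer with random, untrained weights, the decoder is an inner product followed by a sigmoid (one common choice for graph autoencoders), and the loss is binary cross-entropy against the original adjacency matrix. Training would adjust `weight` to lower that loss.

```python
import numpy as np


def sigmoid(x):
    return 1.0 / (1.0 + np.exp(-x))


def encode(adj, features, weight):
    """Map each node to a low-dimensional embedding using its neighborhood."""
    a_hat = adj + np.eye(adj.shape[0])
    a_norm = a_hat / a_hat.sum(axis=1, keepdims=True)   # mean over self + neighbors
    return a_norm @ features @ weight


def decode(embeddings):
    """Predict an edge probability for every node pair from embedding similarity."""
    return sigmoid(embeddings @ embeddings.T)


def reconstruction_loss(adj, probs, eps=1e-9):
    """Binary cross-entropy between the true and the reconstructed adjacency."""
    return float(-np.mean(adj * np.log(probs + eps)
                          + (1 - adj) * np.log(1 - probs + eps)))


adj = np.array([
    [0, 1, 1, 0],
    [1, 0, 1, 0],
    [1, 1, 0, 1],
    [0, 0, 1, 0],
], dtype=float)

rng = np.random.default_rng(0)
features = rng.normal(size=(4, 3))
weight = rng.normal(size=(3, 2)) * 0.5   # untrained placeholder weights

z = encode(adj, features, weight)        # 2-dimensional embedding per node
probs = decode(z)                        # reconstructed edge probabilities
loss = reconstruction_loss(adj, probs)
print(z.shape, probs.shape)
```

For link prediction, node pairs with a high reconstructed probability but no existing edge are the candidates for new links.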

Graph Generative Networks

Graph generative networks aim to generate new graph structures or augment existing ones. These models learn the distribution of observed graph data and can generate new graphs that resemble the training data. This capability is valuable for drug discovery, where generating novel molecular structures is of interest, and in social network analysis, where simulating realistic network structures can help in understanding network dynamics.

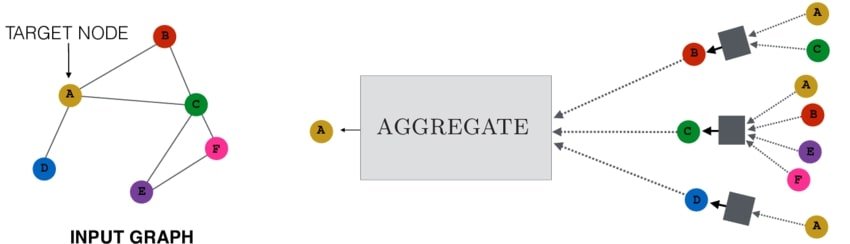

How Do GNNs Work?

GNNs work by leveraging the structure of graph data, which consists of nodes (vertices) and edges (connections between nodes), to learn representations for nodes, edges, or entire graphs. They combine the features of the current node and its neighboring nodes into a new feature matrix.

This new feature matrix is then written back to the current node. When this process is repeated several times across all the nodes in the graph, each node ends up learning something about the other nodes in the graph.

GNN Learning Steps

- Message Passing: In each layer, information is passed between nodes in the graph. A node’s current state is updated based on the information from its neighbors. This is done by aggregating the information from the neighbors and then combining it with the node’s current state. This is also called graph convolution (inspired by CNNs). In CNNs, the information from neighboring pixels is integrated into the central pixel; similarly, in GNNs, the central node is updated with aggregated information about its neighbors.

- Aggregate: There are different ways to aggregate the information from a node’s neighbors. Some common methods include averaging, taking the maximum, or using a more complex function like a recurrent neural network.

- Update: After the information from the neighbors has been aggregated, it is combined with the node’s current state, forming a new state. This new node state contains information about the neighboring nodes. Aggregate and update are the most important steps in GNNs.

- Output: The final node, edge, or graph representations are used for tasks like classification, regression, or link prediction.

- Multiple Layers: The message-passing process can be repeated for multiple layers. This allows nodes to learn information about their neighbors’ neighbors, and so on. The number of layers, also referred to as hops, is a hyperparameter.
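
The steps above can be sketched as a small NumPy loop. This is an illustrative sketch, not a reference implementation: it assumes mean aggregation, a `tanh` update that combines the node's own state with the aggregated messages, and random untrained weights. The function names and the four-node graph are hypothetical.

```python
import numpy as np


def aggregate_mean(adj, states):
    """Aggregate: average the states of each node's neighbors."""
    deg = np.maximum(adj.sum(axis=1, keepdims=True), 1)  # avoid dividing by zero
    return (adj @ states) / deg


def update(states, messages, w_self, w_neigh):
    """Update: combine a node's current state with its aggregated messages."""
    return np.tanh(states @ w_self + messages @ w_neigh)


def message_passing(adj, states, layers):
    """Multiple layers: repeat aggregate + update once per (w_self, w_neigh) pair."""
    for w_self, w_neigh in layers:
        messages = aggregate_mean(adj, states)
        states = update(states, messages, w_self, w_neigh)
    return states


adj = np.array([
    [0, 1, 1, 0],
    [1, 0, 1, 0],
    [1, 1, 0, 1],
    [0, 0, 1, 0],
], dtype=float)

rng = np.random.default_rng(0)
states = rng.normal(size=(4, 3))
layers = [(rng.normal(size=(3, 3)), rng.normal(size=(3, 3))) for _ in range(2)]

# Output: final node representations after two rounds (two hops) of message passing.
node_embeddings = message_passing(adj, states, layers)
print(node_embeddings.shape)
```

Swapping `aggregate_mean` for a maximum or a learned function changes the aggregation step without touching the rest of the loop.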

What Is a Hop?

In GNNs, a hop refers to the number of steps a message can travel from one node to another. For example, here the GNN has 2 layers, and each node incorporates information from its direct neighbors and its neighbors’ neighbors.

However, one needs to be careful when choosing the number of layers or hops, as too many layers can lead to all the nodes having roughly the same values (oversmoothing).
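
One way to see what a hop means is to compute which nodes can influence a given node after k layers. The sketch below assumes a hypothetical five-node path graph (0-1-2-3-4) and a helper named `receptive_field`. It propagates reachability through the adjacency matrix, with self-loops so that a node always keeps its own information.

```python
import numpy as np


def receptive_field(adj, node, hops):
    """Return the set of nodes whose information reaches `node` within `hops` layers."""
    n = adj.shape[0]
    step = ((adj + np.eye(n)) > 0).astype(int)   # one hop, including staying in place
    reach = np.eye(n, dtype=int)                 # zero hops: each node sees only itself
    for _ in range(hops):
        reach = ((reach @ step) > 0).astype(int)
    return set(np.flatnonzero(reach[node]).tolist())


# Hypothetical path graph: 0 - 1 - 2 - 3 - 4
adj = np.zeros((5, 5), dtype=int)
for i in range(4):
    adj[i, i + 1] = adj[i + 1, i] = 1

for hops in (1, 2, 3):
    print(hops, "hop(s) from node 0:", sorted(receptive_field(adj, 0, hops)))
```

With 2 layers, node 0 sees its direct neighbor and its neighbor's neighbor, matching the description above. Each extra layer widens the neighborhood by one more step.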

What Is Oversmoothing?

Oversmoothing is a problem that arises when too many layers of message passing and aggregation are used in a GNN.

As the depth of the network increases, the representations of nodes across different parts of the graph may become indistinguishable. In extreme cases, all node features converge to a similar state, losing their distinctive information.

This happens because, after many iterations of smoothing, the node features have aggregated so much information from their neighborhoods that the unique characteristics of individual nodes are washed out.
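
The effect is easy to reproduce in a stripped-down setting. The sketch below is an illustration under simplifying assumptions: it applies only repeated mean aggregation (with self-loops) and leaves out learned weights and nonlinearities. It measures how far apart the node features remain after each depth. The graph, the feature values, and the `spread` metric (average max-minus-min per feature) are chosen for illustration.

```python
import numpy as np


def smooth(adj, features, num_layers):
    """Apply mean aggregation over self + neighbors `num_layers` times."""
    a_hat = adj + np.eye(adj.shape[0])
    p = a_hat / a_hat.sum(axis=1, keepdims=True)   # row-normalised averaging matrix
    h = features
    for _ in range(num_layers):
        h = p @ h
    return h


def spread(h):
    """Average per-feature gap between the largest and smallest node value."""
    return float((h.max(axis=0) - h.min(axis=0)).mean())


adj = np.array([
    [0, 1, 1, 0],
    [1, 0, 1, 0],
    [1, 1, 0, 1],
    [0, 0, 1, 0],
], dtype=float)

features = np.array([
    [1.0, 0.0],
    [0.0, 1.0],
    [2.0, -1.0],
    [-1.0, 3.0],
])

depths = (0, 2, 10, 50)
spreads = [spread(smooth(adj, features, k)) for k in depths]
for k, s in zip(depths, spreads):
    print(f"{k:>2} layers -> spread {s:.6f}")
```

As depth grows, the spread shrinks toward zero, meaning every node ends up with nearly the same feature vector.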

Smoothing in GNNs

Smoothing in GNNs refers to the process through which node features become more similar to each other. This results from the repeated application of message passing and aggregation steps across the layers of the network.

As this process is applied through multiple layers, information from a node’s wider and wider neighborhood is integrated into its representation.

Addressing Oversmoothing

- Skip Connections (Residual Connections): Similar to their use in CNNs, skip connections in GNNs can help preserve initial node features by bypassing some layers, effectively allowing the model to learn from both local and global graph structures without oversmoothing.

- Attention Mechanisms: By weighting the importance of neighbors’ features dynamically, attention mechanisms can prevent the uniform mixing of features that leads to oversmoothing.

- Depth Control: Carefully design the network depth according to the graph’s structural properties.

- Normalization Techniques: Techniques like Batch Normalization or Layer Normalization can help maintain the distribution of features across GNN layers.
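
As a rough illustration of the first remedy, the sketch below compares plain repeated averaging with a variant that mixes the original node features back in at every layer. This is one simple form of skip connection, assumed here for illustration. The mixing weight `alpha` is a hypothetical hyperparameter, and learned weights and nonlinearities are left out to keep the comparison readable.

```python
import numpy as np


def propagate(adj, features, num_layers, alpha=0.0):
    """Mean aggregation with an optional skip connection back to the input features.

    alpha = 0.0 gives plain smoothing; alpha > 0 keeps a share of the
    original features in every layer.
    """
    a_hat = adj + np.eye(adj.shape[0])
    p = a_hat / a_hat.sum(axis=1, keepdims=True)
    h = features
    for _ in range(num_layers):
        h = (1.0 - alpha) * (p @ h) + alpha * features
    return h


def spread(h):
    """Average per-feature gap between the largest and smallest node value."""
    return float((h.max(axis=0) - h.min(axis=0)).mean())


adj = np.array([
    [0, 1, 1, 0],
    [1, 0, 1, 0],
    [1, 1, 0, 1],
    [0, 0, 1, 0],
], dtype=float)

features = np.array([
    [1.0, 0.0],
    [0.0, 1.0],
    [2.0, -1.0],
    [-1.0, 3.0],
])

plain_spread = spread(propagate(adj, features, num_layers=50))
skip_spread = spread(propagate(adj, features, num_layers=50, alpha=0.2))
print(f"plain: {plain_spread:.6f}  with skip connection: {skip_spread:.6f}")
```

After 50 layers the plain version has collapsed to nearly identical node features, while the version with the skip connection still keeps the nodes distinguishable.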

Applications of GNNs

GNNs have lived up to their theoretical potential and are now actively impacting various real-world domains. So, how powerful are graph neural networks? Here’s a glimpse of their applications:

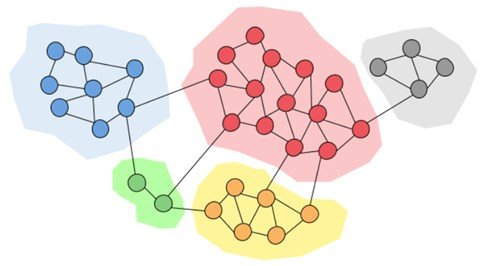

Social Networks

- Community Detection: Identifying communities of users based on their interactions, interests, or affiliations. This can be used for targeted advertising, content recommendation, or anomaly detection.

- Link Prediction: Predicting new connections between users based on existing relationships and network structure. This can be valuable for friend recommendations or identifying potential influencers.

- Sentiment Analysis: Analyzing the sentiment of user posts or conversations by considering the context and relationships within the network. This can help understand public opinion or brand perception.

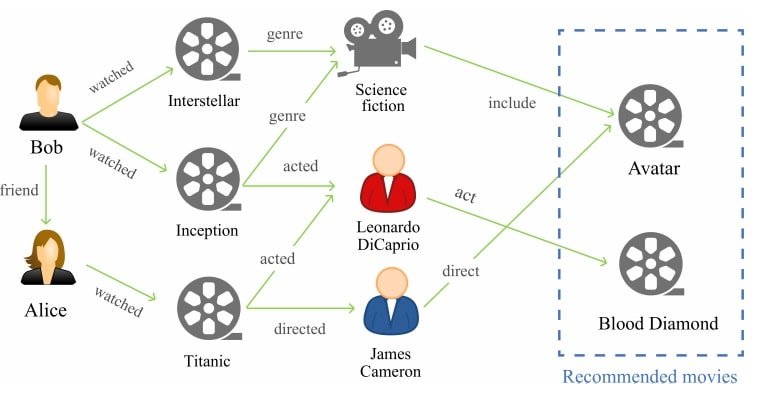

Recommendation Systems

- Collaborative Filtering with GNNs: Going beyond traditional user-item interactions, GNNs leverage user relationships to personalize recommendations by incorporating social influence and content similarity among users.

- Modeling User-Item Interactions: Capturing the dynamic nature of user preferences by considering the evolution of user relationships and item popularity within a network.

- Explainable Recommendations: By analyzing how information propagates through the network, GNNs can offer insights into why certain items are recommended, increasing transparency and trust in the system.

Bioinformatics

- Protein-Protein Interaction Networks: Predicting protein-protein interactions based on their structural and functional similarities, aiding in drug discovery and understanding disease mechanisms.

- Gene Regulatory Networks: Analyzing gene expression data and network structure to identify genes involved in specific biological processes or disease development.

- Drug Discovery: Leveraging GNNs to predict the efficacy of drug candidates by considering their interactions with target proteins and pathways within a network.

Fraud Detection

Graphs can represent financial networks, where nodes might be entities (such as individuals, companies, or banks), and edges might represent financial transactions or lending relationships. This is useful for fraud detection, risk management, and market analysis.

Traffic Flow Prediction

Predicting traffic congestion and optimizing traffic light timings for smart cities by modeling the road network and vehicle flow dynamics.

Cybersecurity

Detecting malicious activities and identifying vulnerabilities in computer networks by analyzing network traffic and attack patterns.

Challenges of GNNs

Despite the impressive progress and potential of GNNs, several research and implementation challenges and limitations remain. Here are some key areas.

Scalability

Many GNN models struggle to efficiently process large graphs because of the computational and memory requirements of aggregating features from a node’s neighbors. This becomes particularly challenging for graphs with millions of nodes and edges, common in real-world applications like social networks or large knowledge graphs.

Oversmoothing

As discussed in “A Note on Over-Smoothing for Graph Neural Networks” and as previously mentioned, when the depth of a GNN increases, the features of nodes in different parts of the graph can become indistinguishable. This oversmoothing problem makes it difficult for the model to preserve local graph structures, leading to a loss of performance in node classification and other tasks.

Dynamic graphs

Many real-world graphs are dynamic, with nodes and edges being added or removed over time. Most GNN architectures are designed for static graphs and struggle to model these temporal dynamics effectively.

Heterogeneous graphs

Graphs often contain different types of nodes and edges (heterogeneous graphs), each with its own features and patterns of interaction. Designing GNN architectures that can effectively learn from heterogeneous graph data is a challenging task, because it requires capturing the complex relationships between different types of entities.

Generalization Across Graphs

Many GNN models are trained and tested on the same graph or on graphs with similar structures. However, models often struggle to generalize to entirely new graphs with different structures, limiting their applicability.

Future Work in Graph Neural Networks (GNNs)

Interpretability and Explainability

Making GNN models more understandable is a key area because it is crucial for sectors like healthcare and finance. Only by understanding how the models make predictions can we create trust and facilitate their adoption.

Dynamic and Temporal Graph Modeling

Improving the ability of GNNs to model and predict changes in dynamic graphs over time is a key area of research. This includes not only capturing the evolution of graph structures but also predicting future states of the graph.

Heterogeneous Graph Learning

Advancing techniques for learning from heterogeneous graphs, which contain multiple types of nodes and relationships, is a key area of ongoing research. This involves developing models that can effectively leverage the rich semantic information in these complex networks.