AI, particularly generative AI, has the potential to transform healthcare.

At least, that’s the sales pitch from Hippocratic AI, which emerged from stealth today with a whopping $50 million in seed financing behind it and a valuation in the “triple-digit millions.” The tranche, co-led by General Catalyst and Andreessen Horowitz, is a big vote of confidence in Hippocratic’s technology, a text-generating model tuned specifically for healthcare applications.

Hippocratic, hatched out of General Catalyst, was founded by a group of physicians, hospital administrators, Medicare professionals and AI researchers from organizations including Johns Hopkins, Stanford, Google and Nvidia. After co-founder and CEO Munjal Shah sold his previous company, Like.com, a shopping comparison site, to Google in 2010, he spent the better part of the next decade building Hippocratic.

“Hippocratic has created the first safety-focused large language model (LLM) designed specifically for healthcare,” Shah told TechCrunch in an email interview. “The company mission is to develop the safest artificial health general intelligence in order to dramatically improve healthcare accessibility and health outcomes.”

AI in healthcare, historically, has been met with mixed success.

Babylon Health, an AI startup backed by the U.K.’s National Health Service, has found itself under repeated scrutiny for claiming that its disease-diagnosing tech can perform better than doctors. IBM was forced to sell its AI-focused Watson Health division at a loss after technical problems led major customer partnerships to deteriorate. Elsewhere, OpenAI’s GPT-3, the predecessor to GPT-4, urged at least one user to commit suicide.

Shah emphasized that Hippocratic isn’t focused on diagnosing. Rather, he says, the tech, which is consumer-facing, is aimed at use cases like explaining benefits and billing, providing dietary advice and medication reminders, answering pre-op questions, onboarding patients and delivering “negative” test results that indicate nothing’s wrong.

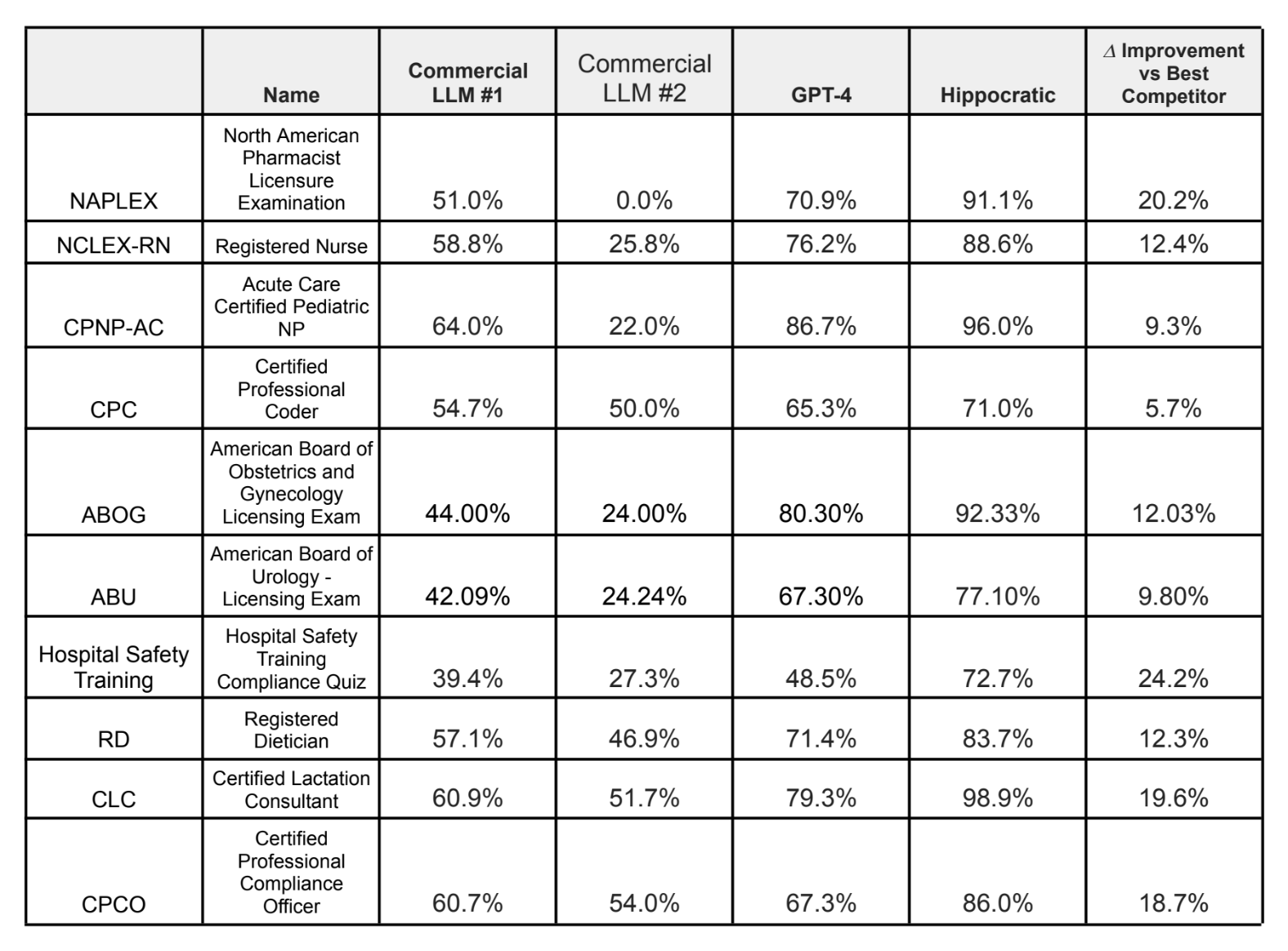

Hippocratic’s benchmark results on a range of medical exams.

The dietary advice use case gave me pause, I must say, in light of the poor diet-related suggestions that AI like OpenAI’s ChatGPT provides. But Shah claims that Hippocratic’s AI outperforms leading language models, including GPT-4 and Claude, on over 100 healthcare certifications, including the NCLEX-RN for nursing, the American Board of Urology exam and the registered dietitian exam.

“The language models have to be safe,” Shah said. “That’s why we’re building a model focused solely on safety, certifying it with healthcare professionals and partnering closely with the industry … This will help ensure that data retention and privacy policies will be consistent with the current norms of the healthcare industry.”

One of the ways Hippocratic aims to achieve this is by “detecting tone” and “communicating empathy” better than rival tech, Shah says, in part by “building in” good bedside manner (i.e. the elusive “human touch”). He makes the case that bedside manner, especially interactions that leave patients with a sense of hope even in grim circumstances, can and does affect health outcomes.

To evaluate bedside manner, Hippocratic designed a benchmark to test the model for signs of humanism, if you will: things like “showing empathy” and “taking a personal interest in a patient’s life.” (Whether a single test can accurately capture subjects that nuanced is up for debate, of course.) Unsurprisingly given the source, Hippocratic’s model scored the highest in every category among the models Hippocratic tested, including GPT-4.

But can a language model really replace a healthcare worker? Hippocratic invites the question, arguing that its models were trained under the supervision of medical professionals and are, thus, highly capable.

“We’re only releasing each role (dietician, billing agent, genetic counselor, etc.) once the people who actually do that role today in real life agree the model is ready,” Shah said. “In the pandemic, labor costs went up 30% for most health systems, but revenue didn’t. Hence, most health systems in the country are financially struggling. Language models can help them reduce costs by filling their current large number of vacancies in a more cost-effective way.”

I’m not sure healthcare practitioners would agree, particularly considering the Hippocratic model’s low scores on some of the aforementioned certifications. According to Hippocratic, the model received a 71% on the certified professional coder exam, which covers knowledge of medical billing and coding, and a 72.7% on a hospital safety training compliance quiz.

There’s the matter of potential bias, as well. Bias plagues the healthcare industry, and its effects trickle down to the models trained on biased medical records, studies and research. A 2019 study, for instance, found that an algorithm many hospitals were using to decide which patients needed care treated Black patients with less sensitivity than white patients.

In any case, one would hope Hippocratic makes it clear that its models aren’t infallible. In domains like healthcare, automation bias, or the propensity for people to trust AI over other sources even when those sources are correct, comes with plainly high risks.

These details are among the many that Hippocratic has yet to iron out. The company isn’t releasing details on its partners or customers, preferring instead to keep the focus on the funding. The model isn’t even available at present, nor is information about what data it was trained on or what data it might be trained on in the future. (Hippocratic would only say that it’ll use “de-identified” data for model training.)

If it waits too long, Hippocratic runs the risk of falling behind rivals like Truveta and Latent, some of which have a major resource advantage. Google, for example, recently began previewing Med-PaLM 2, which it claims was the first language model to perform at an expert level on dozens of medical exam questions. Like Hippocratic’s model, Med-PaLM 2 was evaluated by health professionals on its ability to answer medical questions accurately and safely.

But Hemant Taneja, the managing director at General Catalyst, didn’t express concern.

“Munjal and I hatched this company on the belief that healthcare needs its own language model built specifically for healthcare applications, one that is fair, unbiased, secure and beneficial to society,” he said via email. “We set forth to create a high-integrity AI tool that’s fed a ‘healthy’ data diet and includes a training approach that seeks to incorporate extensive human feedback from medical experts for each specialized task. In healthcare, we simply can’t afford to ‘move fast and break things.’”

Shah says that the bulk of the $50 million seed tranche will be put toward investing in talent, compute, data and partnerships.