AI startup Hugging Face and ServiceNow Research, ServiceNow's R&D division, have released StarCoder, a free alternative to code-generating AI systems along the lines of GitHub's Copilot.

Code-generating systems like DeepMind's AlphaCode, Amazon's CodeWhisperer and OpenAI's Codex, which powers Copilot, provide a tantalizing glimpse of what's possible with AI in the realm of computer programming. Assuming the ethical, technical and legal issues are someday ironed out (and AI-powered coding tools don't cause more bugs and security exploits than they solve), they could cut development costs substantially while allowing coders to focus on more creative tasks.

According to a study from the University of Cambridge, at least half of developers' efforts are spent debugging rather than actively programming, which costs the software industry an estimated $312 billion per year. But so far, only a handful of code-generating AI systems have been made freely available to the public, reflecting the commercial incentives of the organizations building them (see: Replit).

StarCoder, which by contrast is licensed to allow royalty-free use by anyone, including corporations, was trained on over 80 programming languages as well as text from GitHub repositories, including documentation and programming notebooks. StarCoder integrates with Microsoft's Visual Studio Code editor and, like OpenAI's ChatGPT, can follow basic instructions (e.g., "create an app UI") and answer questions about code.

Leandro von Werra, a machine learning engineer at Hugging Face and a co-lead on StarCoder, claims that StarCoder matches or outperforms the AI model from OpenAI that was used to power initial versions of Copilot.

"One thing we learned from releases such as Stable Diffusion last year is the creativity and capability of the open-source community," von Werra told TechCrunch in an email interview. "Within weeks of the release the community had built dozens of variants of the model as well as custom applications. Releasing a powerful code generation model allows anybody to fine-tune and adapt it to their own use cases and will enable countless downstream applications."

Building a model

StarCoder is part of Hugging Face's and ServiceNow's over-600-person BigCode project, launched late last year, which aims to develop "state-of-the-art" AI systems for code in an "open and responsible" way. ServiceNow supplied an in-house compute cluster of 512 Nvidia V100 GPUs to train the StarCoder model.

Various BigCode working groups focus on subtopics like collecting datasets, implementing methods for training code models, developing an evaluation suite and discussing ethical best practices. For example, the Legal, Ethics and Governance working group explored questions about data licensing, attribution of generated code to original code, the redaction of personally identifiable information (PII), and the risks of outputting malicious code.

Inspired by Hugging Face's previous efforts to open source sophisticated text-generating systems, BigCode seeks to address some of the controversies arising around the practice of AI-powered code generation. The nonprofit Software Freedom Conservancy, among others, has criticized GitHub and OpenAI for using public source code, not all of which is under a permissive license, to train and monetize Codex. Codex is available through OpenAI's and Microsoft's paid APIs, while GitHub recently began charging for access to Copilot.

For their part, GitHub and OpenAI assert that Codex and Copilot (protected by the doctrine of fair use, at least in the U.S.) don't run afoul of any licensing agreements.

"Releasing a capable code-generating system can serve as a research platform for institutions that are interested in the topic but don't have the necessary resources or know-how to train such models," von Werra said. "We believe that in the long run this leads to fruitful research on the safety, capabilities and limits of code-generating systems."

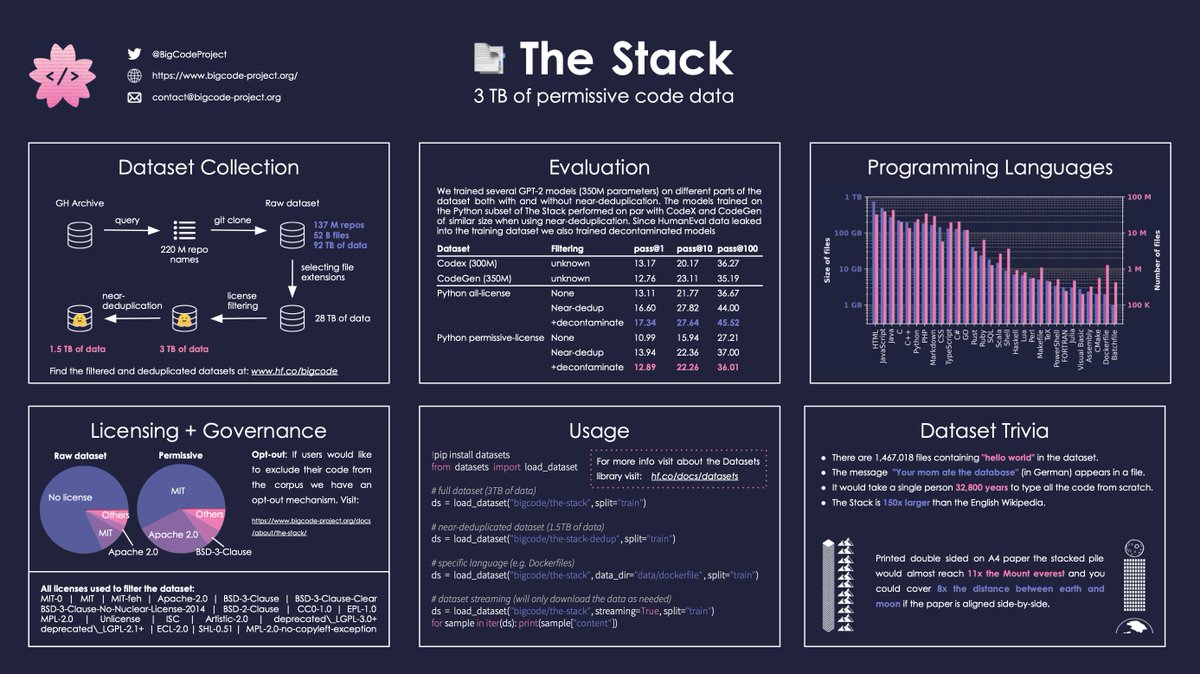

Unlike Copilot, the 15-billion-parameter StarCoder was trained over the course of several days on an open source dataset called The Stack, which has over 19 million curated, permissively licensed repositories and more than six terabytes of code in over 350 programming languages. In machine learning, parameters are the parts of an AI system learned from historical training data, and they essentially define the system's skill on a problem, such as generating code.

A graphic breaking down the contents of The Stack dataset. Image Credits: BigCode

Because it's permissively licensed, code from The Stack can be copied, modified and redistributed. But the BigCode project also provides a way for developers to "opt out" of The Stack, similar to efforts elsewhere to let artists remove their work from text-to-image AI training datasets.
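To make the licensing and opt-out rules concrete, here is a minimal Python sketch of how such a filtering pass might look. It is not BigCode's actual pipeline: the `Repo` record, the `filter_repos` helper and the short license allow-list are hypothetical stand-ins, and the license detection that a real dataset would need is not modeled here.

```python
from dataclasses import dataclass

# Hypothetical allow-list of permissive licenses (lowercased SPDX identifiers).
# The Stack's real list is longer and is not reproduced here.
PERMISSIVE_LICENSES = {"mit", "apache-2.0", "bsd-2-clause", "bsd-3-clause", "isc"}


@dataclass(frozen=True)
class Repo:
    name: str     # "owner/project"
    license: str  # detected SPDX license identifier


def filter_repos(repos: list[Repo], opted_out: set[str]) -> list[Repo]:
    """Keep repos that are permissively licensed and whose owners have not opted out."""
    kept = []
    for repo in repos:
        if repo.license.lower() not in PERMISSIVE_LICENSES:
            continue  # copyleft, proprietary or unknown license: drop it
        if repo.name in opted_out:
            continue  # the owner asked to be removed: drop it
        kept.append(repo)
    return kept


repos = [
    Repo("alice/parser", "MIT"),
    Repo("bob/engine", "GPL-3.0"),
    Repo("carol/utils", "Apache-2.0"),
]
print([r.name for r in filter_repos(repos, opted_out={"carol/utils"})])
# ['alice/parser']
```

The license check runs first so that opt-out requests only need to be consulted for code that would otherwise be kept.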

The BigCode team also worked to remove PII from The Stack, such as names, usernames, email and IP addresses, and keys and passwords. They created a separate dataset of 12,000 files containing PII, which they plan to release to researchers through "gated access."
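Some of the PII categories listed above can be illustrated with a deliberately naive, regex-based redaction pass. This is a sketch under stated assumptions, not the method the BigCode team used: the `redact` function, the placeholder tokens and the patterns are all hypothetical. Simple regexes like these miss real PII (names, for instance) and produce false positives (a version string such as 1.2.3.4 looks like an IP address).

```python
import re

# Deliberately naive patterns, for illustration only.
EMAIL_RE = re.compile(r"\b[\w.+-]+@[\w-]+(?:\.[\w-]+)+\b")
IPV4_RE = re.compile(r"\b(?:\d{1,3}\.){3}\d{1,3}\b")
# Quoted values assigned to secret-looking names, e.g. password = "hunter2"
SECRET_RE = re.compile(
    r"""\b(password|passwd|api_key|secret)(\s*[:=]\s*)(["'])[^"']*\3""",
    re.IGNORECASE,
)


def redact(source: str) -> str:
    """Replace emails, IPv4 addresses and quoted secrets with placeholder tokens."""
    source = EMAIL_RE.sub("<EMAIL>", source)
    source = IPV4_RE.sub("<IP_ADDRESS>", source)
    # Keep the variable name, separator and quotes; blank out only the value.
    source = SECRET_RE.sub(r"\1\2\3<SECRET>\3", source)
    return source


snippet = 'password = "hunter2"\nhost = "10.0.0.12"  # contact dev@example.com'
print(redact(snippet))
# password = "<SECRET>"
# host = "<IP_ADDRESS>"  # contact <EMAIL>
```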

Beyond this, the BigCode team used Hugging Face's malicious code detection tool to remove files from The Stack that might be considered "unsafe," such as those with known exploits.

The privacy and security issues with generative AI systems, which for the most part are trained on relatively unfiltered data from the web, are well established. ChatGPT once volunteered a journalist's phone number. And GitHub has acknowledged that Copilot may generate keys, credentials and passwords seen in its training data on novel strings.

"Code poses some of the most sensitive intellectual property for most companies," von Werra said. "In particular, sharing it outside their infrastructure poses immense challenges."

To his point, some legal experts have argued that code-generating AI systems could put companies at risk if they were to unwittingly incorporate copyrighted or sensitive text from the tools into their production software. As Elaine Atwell notes in a piece on Kolide's corporate blog, because systems like Copilot strip code of its licenses, it's difficult to tell which code is permissible to deploy and which might have incompatible terms of use.

In response to the criticisms, GitHub added a toggle that lets customers prevent suggested code that matches public, potentially copyrighted content on GitHub from being shown. Amazon, following suit, has CodeWhisperer highlight and optionally filter the license associated with functions it suggests that bear a resemblance to snippets found in its training data.
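Neither company's matching method is described here, so the following is only a hypothetical illustration of the general idea: index public snippets by overlapping windows of tokens ("shingles"), flag a suggestion whose windows mostly appear in that index, and report the licenses attached to the matching snippets. The window size `n`, the 0.5 `threshold` and all function names are assumptions, not GitHub's or Amazon's APIs.

```python
import re


def shingles(code: str, n: int = 6) -> set[tuple[str, ...]]:
    """Tokenize code into words/numbers and single punctuation marks,
    then return every run of n consecutive tokens."""
    tokens = re.findall(r"\w+|[^\w\s]", code)
    return {tuple(tokens[i:i + n]) for i in range(len(tokens) - n + 1)}


def build_index(public_code: list[tuple[str, str]], n: int = 6) -> dict[tuple[str, ...], set[str]]:
    """Map each shingle to the set of licenses of the public snippets containing it."""
    index: dict[tuple[str, ...], set[str]] = {}
    for license_id, code in public_code:
        for gram in shingles(code, n):
            index.setdefault(gram, set()).add(license_id)
    return index


def check_suggestion(suggestion: str, index, n: int = 6, threshold: float = 0.5):
    """Return (matches_public_code, licenses_of_matching_snippets)."""
    grams = shingles(suggestion, n)
    if not grams:  # suggestion shorter than n tokens: nothing to compare
        return False, set()
    hits = [index[g] for g in grams if g in index]
    overlap = len(hits) / len(grams)
    return overlap >= threshold, set().union(*hits)


index = build_index([("GPL-3.0", "def add(a, b):\n    return a + b\n")])
print(check_suggestion("def add(a, b):\n    return a + b\n", index))  # (True, {'GPL-3.0'})
print(check_suggestion("def mul(x, y):\n    return x * y\n", index))  # (False, set())
```

Comparing token windows rather than raw strings makes the check insensitive to whitespace and formatting, though a suggestion with renamed variables would still slip past it.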

Commercial drivers

So what does ServiceNow, a company that deals mostly in enterprise automation software, get out of this? A "strong-performing model and a responsible AI model license that enables commercial use," said Harm de Vries, the lead of the Large Language Model Lab at ServiceNow Research and the co-lead of the BigCode project.

One imagines that ServiceNow will eventually build StarCoder into its commercial products. The company wouldn't reveal how much, in dollars, it has invested in the BigCode project, save that the amount of donated compute was "substantial."

"The Large Language Models Lab at ServiceNow Research is building up expertise on the responsible development of generative AI models to ensure the safe and ethical deployment of these powerful models for our customers," de Vries said. "The open-scientific research approach to BigCode provides ServiceNow developers and customers with full transparency into how everything was developed and demonstrates ServiceNow's commitment to making socially responsible contributions to the community."

StarCoder isn't open source in the strictest sense. Rather, it's being released under a licensing scheme, OpenRAIL-M, that includes "legally enforceable" use case restrictions that derivatives of the model (and apps using the model) are required to comply with.

For example, StarCoder users must agree not to leverage the model to generate or distribute malicious code. While real-world examples are few and far between (at least for now), researchers have demonstrated how AI like StarCoder could be used in malware to evade basic forms of detection.

Whether developers actually respect the terms of the license remains to be seen. Legal threats aside, there's nothing at a basic technical level to prevent them from disregarding the terms for their own ends.

That's what happened with the aforementioned Stable Diffusion, whose similarly restrictive license was ignored by developers who used the generative AI model to create celebrity deepfakes.

But the possibility hasn't discouraged von Werra, who feels the upsides of releasing StarCoder outweigh the downsides.

"At launch, StarCoder will not ship as many features as GitHub Copilot, but with its open-source nature, the community can help improve it along the way as well as integrate custom models," he said.

The StarCoder code repositories, model training framework, dataset-filtering methods, code evaluation suite and research analysis notebooks are available on GitHub as of this week. The BigCode project will maintain them going forward as the groups look to develop more capable code-generating models, fueled by input from the community.

There's certainly work to be done. In the technical paper accompanying StarCoder's release, Hugging Face and ServiceNow say that the model may produce inaccurate, offensive and misleading content, as well as PII and malicious code that managed to make it past the dataset filtering stage.