Deliver this venture to life

Context Cluster

Convolutional Neural Networks and Imaginative and prescient primarily based Transformer fashions (ViT) are broadly unfold methods to course of photos and generate clever predictions. The flexibility of the mannequin to generate predictions solely relies on the way in which it processes the picture. CNNs contemplate a picture as well-arranged pixels and extract native options utilizing the convolution operation by filters in a sliding window trend. On the opposite facet, Imaginative and prescient Transformer (ViT) descended from NLP analysis and thus treats a picture as a sequence of patches and can extract options from every of these patches. Whereas CNNs and ViT are nonetheless very talked-about, it is very important take into consideration different methods to course of photos that will give us different advantages.

Researchers at Adobe & Northeastern College just lately launched a mannequin named Context-Cluster. It treats a picture as a set of many factors. Slightly than utilizing subtle methods, it makes use of the clustering method to group these units of factors into a number of clusters. These clusters may be handled as teams of patches and may be processed in a different way for downstream duties. We are able to make the most of the identical pixel embeddings for various duties (classification, semantic segmentation, and so forth.)

Mannequin structure

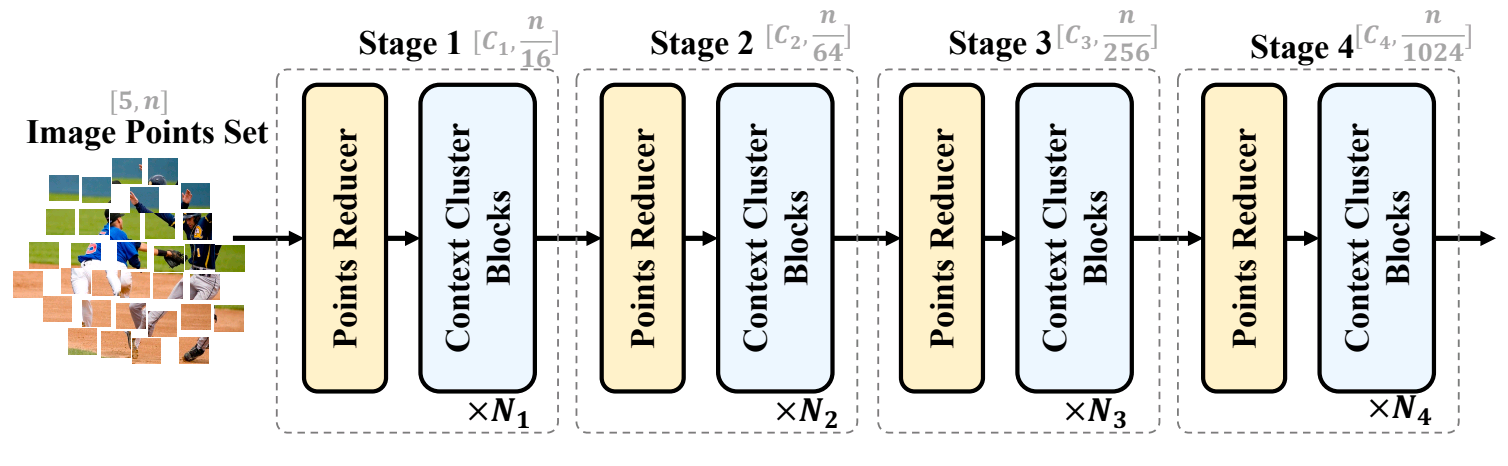

Initially, we have now a picture of form (3, W, H) denoting the variety of channels, width, and top of the picture. This uncooked picture accommodates 3 channels (RGB) representing totally different shade values. So as to add 2 extra information factors, we additionally contemplate the place of the pixel within the W x H airplane. To boost the distribution of the place function, the place worth (i, j) is transformed to (i/W – 0.5, j/H – 0.5) for all pixels in a picture. Ultimately, we find yourself with the dataset with form (5, N) the place N represents the variety of pixels (W * H) within the picture. Any such illustration of picture may be thought of common since we’ve not assumed something till now.

Now if we recall the normal clustering methodology (Okay-means), we have to assign some random factors as cluster facilities after which compute the closest cluster middle for all of the out there information factors (pixels). However because the picture can have arbitrarily giant decision and thus can have too many pixels of a number of dimensions in it. Computing the closest cluster middle for all of them is not going to be computationally possible. To beat this challenge, we first cut back the dimension of factors for the dataset by an operation known as Level Reducer. It reduces the dimension of the factors by linearly projecting (utilizing a totally related layer) the dataset. Because of this, we get a dataset of dimension (N, D) the place D is the variety of options of every pixel.

The subsequent step is context clustering. It randomly selects some c middle factors over the dataset, selects okay nearest neighbors for every middle level, concatenates these okay factors, and inputs them to the totally related linear layer. Outputs of this linear layer are the options for every middle level. From the c-center options, we outline the pairwise cosign similarity of every middle with every pixel. The form of this similarity matrix is (C, N). Observe right here that every pixel is assigned to solely a single cluster. It means it’s exhausting clustering.

Now, the factors in every cluster are aggregated primarily based on the similarity to the middle. This aggregation is finished equally utilizing a totally related layer(s) and converts options of M information factors inside the cluster to form (M, D’). This step applies to the factors in every cluster independently. It aggregates options of all of the factors inside the cluster. Consider it just like the factors inside every cluster sharing info. After aggregation, the factors are dispatched again to their authentic dimension. It’s once more carried out utilizing a totally related layer(s). Every level is once more remodeled again into D dimensional function.

The described 4 steps (Level Reducer, Context Clustering, Characteristic Aggregation & Characteristic Dispatching) create a single stage of the mannequin. Relying on the complexity of the info, we are able to add a number of such levels with totally different decreasing dimensions in order that it improves its studying instructions. The unique paper describes a mannequin with 4 levels as proven in Fig 1.

After computing the final stage of the mannequin, we are able to deal with the resultant options of every pixel in a different way relying on the downstream activity. For the classification activity, we are able to calculate the common of all the purpose options and go it by totally related layer(s) which is hooked up to softmax or sigmoid perform to categorise the logits. For the dense prediction activity like segmentation, we have to place the info factors by their location options on the finish of all stage computation. As a part of this weblog, we are going to carry out a cluster visualization activity that’s considerably just like a segmentation activity.

Comparability with different fashions

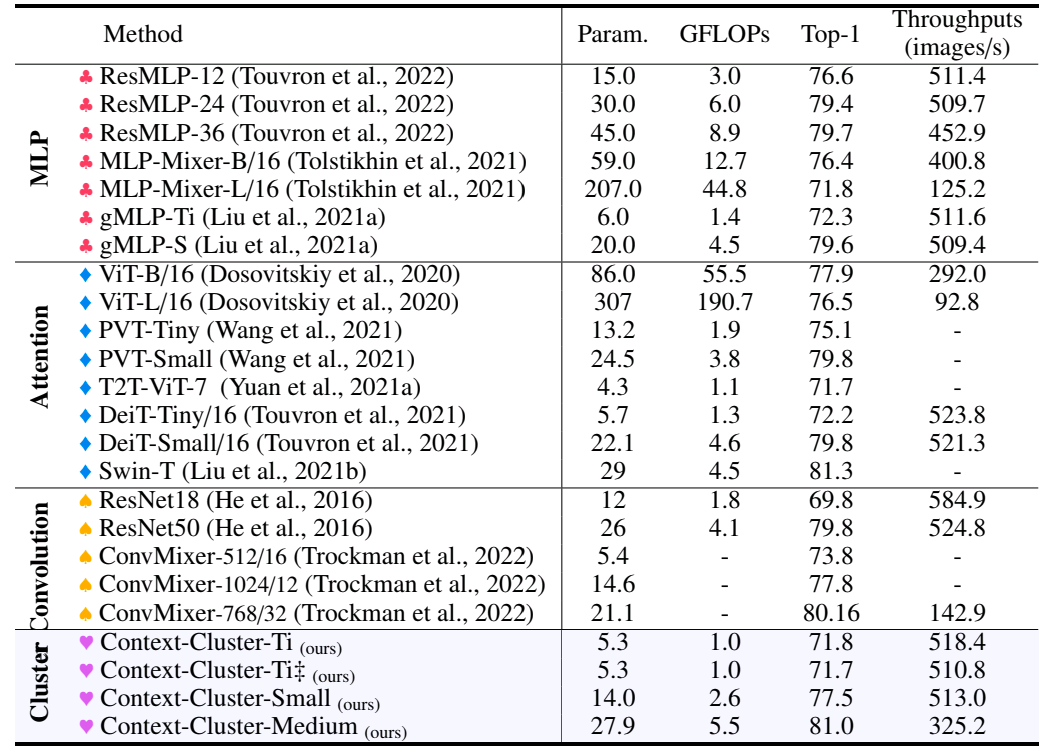

The context cluster mannequin is educated in several variants: tiny, small & medium. The variant principally has variations in depth (variety of levels). The context cluster mannequin is educated for 310 epochs on the ImageNet dataset. It’s then in comparison with different well-liked fashions which use Convolutional Neural Networks (CNNs) and Transformers. The mannequin is educated and in contrast for a number of duties like picture classification, object detection, 3D level cloud classification, semantic segmentation, and so forth. The fashions are in contrast for various metrics just like the variety of parameters, variety of FLOPs, top-1% accuracy, throughputs, and so forth.

Fig. 2 reveals the comparability of various variants of context-cluster fashions with many different well-liked pc imaginative and prescient fashions. The above-shown comparability is for the classification activity. The paper additionally has related comparability tables for different duties which you is perhaps thinking about taking a look at.

We are able to discover within the comparability desk that the context cluster fashions have comparable & generally higher accuracy as in comparison with different fashions. It additionally has a lesser variety of parameters and FLOPs than many different fashions. In use instances the place we have now big information of photos to categorise and we are able to bear little accuracy loss, context cluster fashions is perhaps a more sensible choice.

Strive it your self

Deliver this venture to life

Allow us to now stroll by how one can obtain the dataset & prepare your personal context cluster mannequin. For the demo goal, you needn’t prepare the mannequin. As an alternative, you possibly can obtain pre-trained mannequin checkpoints to strive. For this activity, we are going to get this working in a Gradient Pocket book right here on Paperspace. To navigate to the codebase, click on on the “Run on Gradient” button above or on the prime of this weblog.

Setup

The file installations.sh accommodates all the required code to put in the required issues. Observe that your system will need to have CUDA to coach Context-Cluster fashions. Additionally, chances are you’ll require a distinct model of torch primarily based on the model of CUDA. In case you are working this on Paperspace, then the default model of CUDA is 11.6 which is appropriate with this code. In case you are working it some place else, please test your CUDA model utilizing nvcc --version. If the model differs from ours, chances are you’ll need to change variations of PyTorch libraries within the first line of installations.sh by taking a look at compatibility desk.

To put in all of the dependencies, run the beneath command:

bash installations.sh

The above command additionally clones the unique Context-Cluster repository into context_cluster listing in order that we are able to make the most of the unique mannequin implementation for coaching & inference.

Downloading datasets & Begin coaching (Elective)

As soon as we have now put in all of the dependencies, we are able to obtain the datasets and begin coaching the fashions.

dataset listing on this repo accommodates the required scripts to obtain the info and make it prepared for coaching. At present, this repository helps downloading ImageNet dataset that the unique authors used.

We have now already setup bash scripts for you which is able to robotically obtain the dataset for you and can begin the coaching. prepare.sh accommodates the code which is able to obtain the coaching & validation information to dataset the listing and can begin coaching the mannequin.

This bash script is appropriate to the Paperspace workspace. However in case you are working it elsewhere, then you will have to interchange the bottom path of the paths talked about on this script prepare.sh.

Earlier than you begin the coaching, you possibly can test & customise all of the mannequin arguments in args.yaml file. Particularly, chances are you’ll need to change the argument mannequin to one of many following: coc_tiny, coc_tiny_plain, coc_small, coc_medium. These fashions differ by the variety of levels.

To obtain information information and begin coaching, you possibly can execute the beneath command:

bash prepare.sh

Observe that the generated checkpoints for the educated mannequin might be out there in context_cluster/outputs listing. You have to to maneuver checkpoint.pth.tar file to checkpoints listing for inference on the finish of coaching.

Don’t fret for those who do not need to prepare the mannequin. The beneath part illustrates downloading the pre-trained checkpoints for inference.

Operating Gradio Demo

Python script app.py accommodates Gradio demo which helps you to visualize clusters on the picture. However earlier than we do this, we have to obtain the pre-trained checkpoints into checkpoints listing.

To obtain current checkpoints, run the beneath command:

bash checkpoints/fetch_pretrained_checkpoints.sh

Observe that the newest model of the code solely has the pre-trained checkpoints for coc_tiny_plain mannequin variant. However you possibly can add the code in checkpoints/fetch_pretrained_checkpoints.sh at any time when the brand new checkpoints for different mannequin varieties can be found in authentic repository.

Now, we’re able to launch the Gradio demo. Run the next command to launch the demo:

gradio app.py

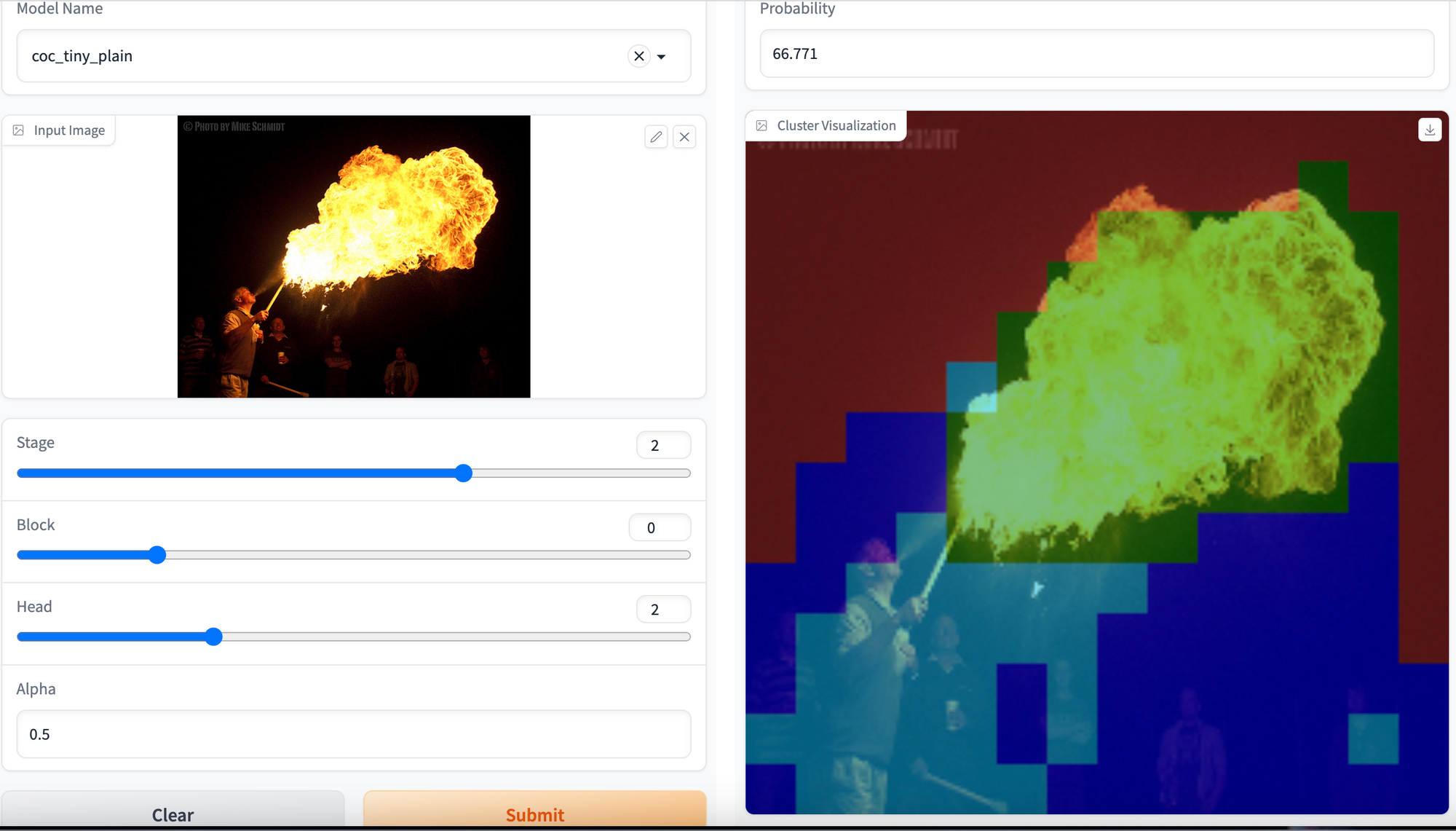

Open the hyperlink offered by the Gradio app within the browser and now you possibly can generate inferences from any of the out there fashions in checkpoints listing. Furthermore, you possibly can generate cluster visualization of particular stage, block and head for any picture. Add your picture and hit the Submit button.

It’s best to be capable of generate cluster visualization for any picture as proven beneath:

Hurray! 🎉🎉🎉 We have now created a demo to visualise clusters over any picture by inferring the Context-Cluster mannequin.

Conclusion

Context-Cluster is a pc imaginative and prescient method that treats a picture as a set of factors. It is extremely totally different from how CNNs and Imaginative and prescient primarily based Transformer fashions course of photos. By decreasing the factors, the context cluster mannequin performs clever clustering over the picture pixels and partitions photos into totally different clusters. It has a relatively lesser variety of parameters and FLOPs. On this weblog, we walked by the target & the structure of the Context-Cluster mannequin, in contrast the outcomes obtained from Context-Cluster with different state-of-the-art fashions, and mentioned methods to arrange the surroundings, prepare your personal Context-Cluster mannequin & generate inference utilizing Gradio app on Gradient Pocket book.

Make sure to check out every of the mannequin varieties utilizing Gradient’s wide selection of accessible machine varieties!