A Spatial Transformer Network (STN) is an effective method for achieving spatial invariance in a computer vision system. Max Jaderberg et al. first proposed the concept in a 2015 paper of the same name.

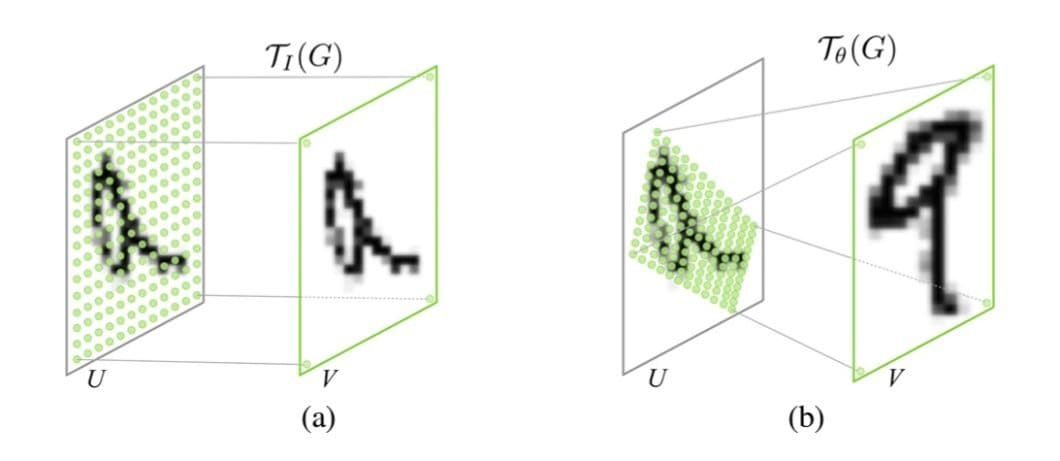

Spatial invariance is the ability of a system to recognize the same object, regardless of any spatial transformations. For example, it can identify the same car regardless of how you translate, scale, rotate, or crop its image. It even extends to various non-rigid transformations, such as elastic deformations, bending, shearing, or other distortions.

We’ll go into more detail on exactly how STNs work later on. In short, they use what’s called “adaptive transformation” to produce a canonical, standardized pose for a sample input object. Going forward, the network transforms each new instance of the object to the same pose. With instances of the object posed similarly, it’s easier to compare them for similarities or differences.

STNs are used to “teach” neural networks how to perform spatial transformations on input data to improve spatial invariance.

In this article, we’ll delve into the mechanics of STNs, look at integrating them into an existing Convolutional Neural Network (CNN), and cover real-world examples and case studies of STNs in action.

Spatial Transformer Networks Explained

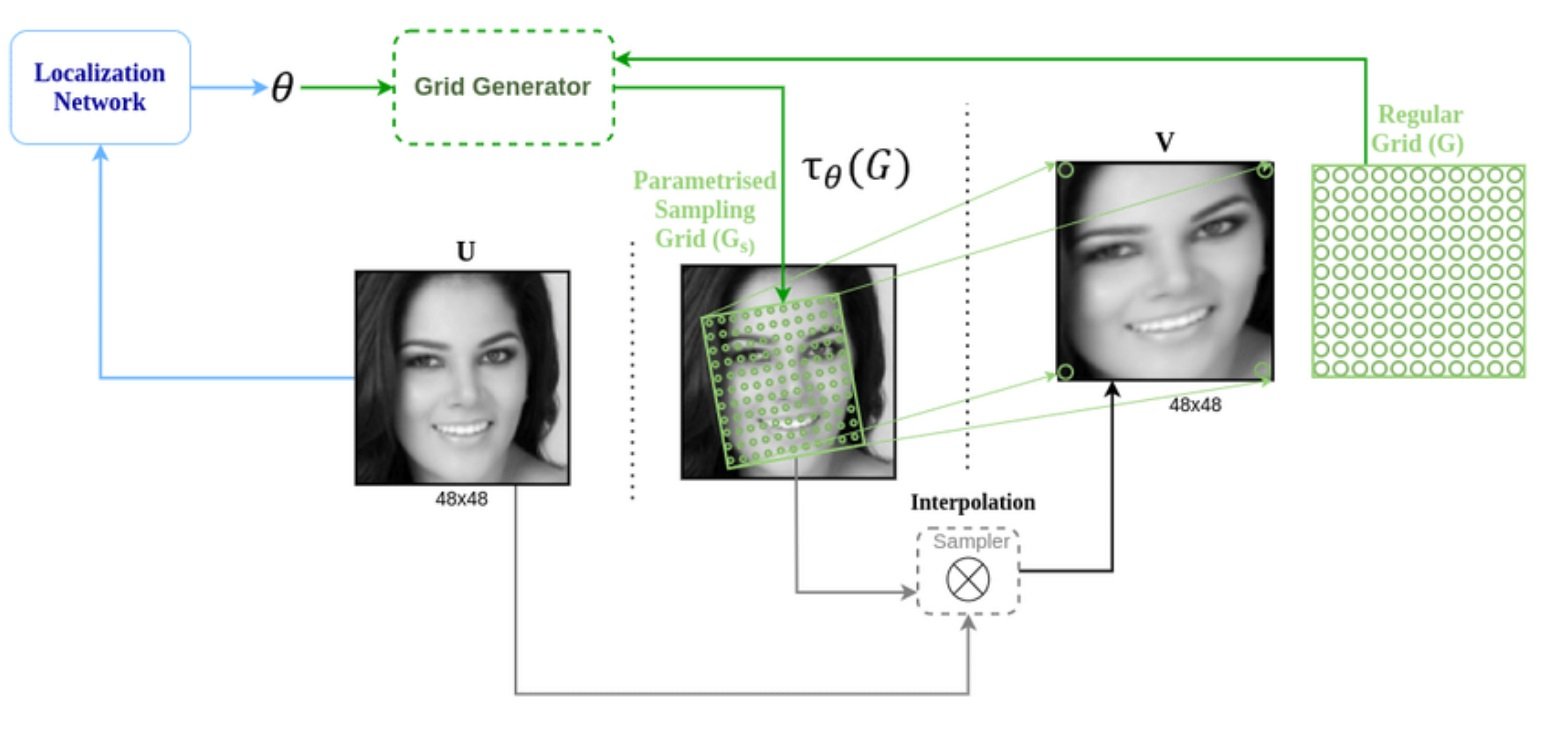

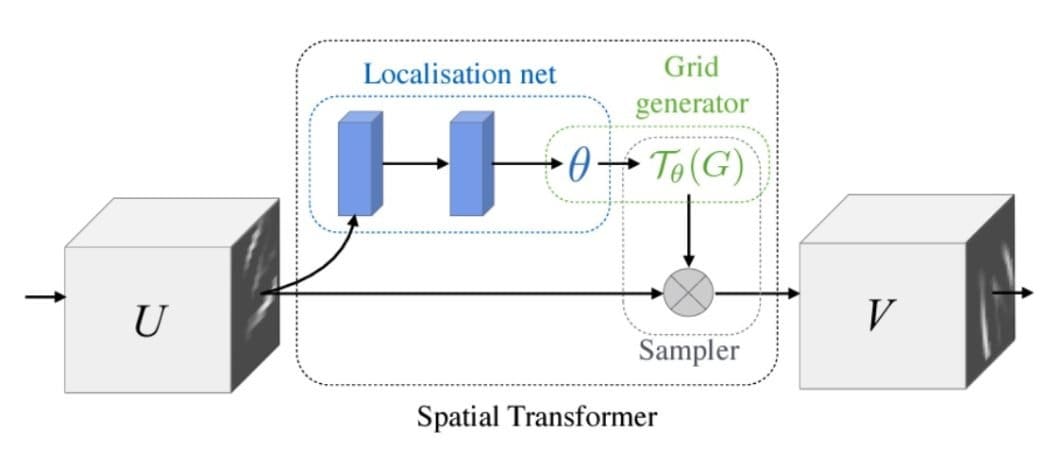

The central component of the STN is the spatial transformer module. In turn, this module consists of three sub-components: the localization network, the grid generator, and the sampler.

The idea of separation of concerns is vital to how an STN works, with each component serving a distinct function. The interplay of components improves not only the accuracy of the STN but also its efficiency. Let’s look at each of them in more detail.

- Localization Network: Its role is to calculate the parameters that will transform the input feature map into the canonical pose, often through an affine transformation matrix. Typically, a regression layer within a fully-connected or convolutional network produces these transformation parameters.

The number of parameters needed depends on the complexity of the transformation. A simple translation, for example, may only require 2 parameters. A more complex affine transformation may require up to 6.

- Grid Generator: Using the transformation parameters produced by the localization net, the grid generator applies reverse mapping to build a sampling grid over the input image. Simply put, it maps each pixel position in the output back to a (typically non-integer) position in the original input. This way, it determines where in the input image to sample from to produce the output image.

- Sampler: Receives a set of coordinates from the grid generator in the form of the sampling grid. Using bilinear interpolation, it then extracts the corresponding pixel values from the input map. This process consists of three operations:

- Find the four points on the source map surrounding the corresponding point.

- Calculate the weight of each neighboring point based on its proximity to the point.

- Produce the output pixel as the weighted sum of the four neighboring values.
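To make the grid generator and sampler more concrete, here is a minimal pure-Python sketch of both steps for a single-channel image. The function names `make_affine_grid` and `bilinear_sample` are hypothetical, and the sketch assumes coordinates normalized to [-1, 1], with out-of-bounds neighbors treated as zero. It is an illustration of the idea, not the paper's reference implementation.

```python
import math


def make_affine_grid(theta, out_h, out_w):
    """Grid generator: map each output pixel to a source location.

    theta is a 2x3 affine matrix acting on normalized coordinates in [-1, 1].
    Returns a list of rows, each a list of (x_src, y_src) pairs.
    """
    grid = []
    for i in range(out_h):
        # Normalized y of output row i (a single-row image sits at 0).
        y_t = -1.0 + 2.0 * i / (out_h - 1) if out_h > 1 else 0.0
        row = []
        for j in range(out_w):
            x_t = -1.0 + 2.0 * j / (out_w - 1) if out_w > 1 else 0.0
            x_s = theta[0][0] * x_t + theta[0][1] * y_t + theta[0][2]
            y_s = theta[1][0] * x_t + theta[1][1] * y_t + theta[1][2]
            row.append((x_s, y_s))
        grid.append(row)
    return grid


def bilinear_sample(image, grid):
    """Sampler: read the input at each (possibly non-integer) grid location."""
    in_h, in_w = len(image), len(image[0])
    output = []
    for row in grid:
        out_row = []
        for x_s, y_s in row:
            # Convert normalized coordinates back to pixel coordinates.
            x = (x_s + 1.0) * (in_w - 1) / 2.0
            y = (y_s + 1.0) * (in_h - 1) / 2.0
            # 1. Find the four surrounding source pixels.
            x0, y0 = math.floor(x), math.floor(y)
            value = 0.0
            for yy in (y0, y0 + 1):
                for xx in (x0, x0 + 1):
                    # 2. Weight each neighbor by its proximity to (x, y).
                    weight = max(0.0, 1.0 - abs(x - xx)) * max(0.0, 1.0 - abs(y - yy))
                    # Assumption: neighbors outside the image contribute zero.
                    if 0 <= xx < in_w and 0 <= yy < in_h:
                        # 3. Accumulate the weighted sum into the output pixel.
                        value += weight * image[yy][xx]
            out_row.append(value)
        output.append(out_row)
    return output


image = [[0.0, 1.0, 2.0],
         [3.0, 4.0, 5.0],
         [6.0, 7.0, 8.0]]

identity = [[1.0, 0.0, 0.0], [0.0, 1.0, 0.0]]
# Sample one pixel to the right of every output location.
shift_right = [[1.0, 0.0, 1.0], [0.0, 1.0, 0.0]]

same = bilinear_sample(image, make_affine_grid(identity, 3, 3))
shifted = bilinear_sample(image, make_affine_grid(shift_right, 3, 3))
```

With the identity matrix the output reproduces the input. With the translation, each output pixel reads from its right-hand neighbor, so the content appears shifted left and the last column falls outside the image. Because every output pixel is computed independently, the two loops are easy to vectorize or run in parallel.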

The separation of responsibilities allows for efficient backpropagation and reduces computational overhead. In some ways, it’s similar to other approaches, like max pooling.

It also makes it possible to calculate multiple target pixels simultaneously, speeding up the process through parallel processing.

STNs also provide an elegant solution for multi-channel inputs, such as RGB color images. The same mapping is applied to each channel. This preserves spatial consistency across the different channels so that accuracy isn’t negatively affected.

Integrating STNs with CNNs has been shown to significantly improve spatial invariance. Traditional CNNs excel at hierarchically extracting features through convolution and max pooling layers. The introduction of STNs allows them to also effectively handle objects that vary in orientation, scale, position, etc.

One telling example is MNIST, a classic dataset of handwritten digits. In this use case, one can use an STN to center and normalize digits, regardless of input presentation. This makes it easier to accurately compare handwritten digits with many potential variations, dramatically lowering error rates.

Commonly Used Technologies and Frameworks For Spatial Transformer Networks

When it comes to implementation, the usual suspects, TensorFlow and PyTorch, are the go-to backbones for STNs. These deep learning frameworks come with all the necessary tools and libraries for building and training complex neural network architectures.

TensorFlow is well-known for its versatility in designing custom layers. This flexibility is key to implementing the various components of the spatial transformer module: the localization net, grid generator, and sampler.

On the other hand, PyTorch’s dynamic computational graphs make coding the otherwise complex transformation and sampling processes more intuitive. Its built-in support for Spatial Transformer Networks includes the affine_grid and grid_sample functions, which perform the transformation and sampling operations.
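As a rough illustration of how these two PyTorch functions fit together, the sketch below wires a small localization network to `F.affine_grid` and `F.grid_sample`. The class name `SpatialTransformer`, the layer sizes, and the 28x28 single-channel input are assumptions chosen for an MNIST-like setting, not a prescribed architecture.

```python
import torch
import torch.nn as nn
import torch.nn.functional as F


class SpatialTransformer(nn.Module):
    """Minimal affine spatial transformer for single-channel 28x28 inputs."""

    def __init__(self):
        super().__init__()
        # Localization network: extracts features used to regress the transform.
        self.localization = nn.Sequential(
            nn.Conv2d(1, 8, kernel_size=7),   # 28x28 -> 22x22
            nn.MaxPool2d(2, stride=2),        # 22x22 -> 11x11
            nn.ReLU(),
            nn.Conv2d(8, 10, kernel_size=5),  # 11x11 -> 7x7
            nn.MaxPool2d(2, stride=2),        # 7x7 -> 3x3
            nn.ReLU(),
        )
        # Regression head producing the 6 affine parameters.
        self.fc_loc = nn.Sequential(
            nn.Linear(10 * 3 * 3, 32),
            nn.ReLU(),
            nn.Linear(32, 6),
        )
        # Start from the identity transform so the input is initially unwarped.
        self.fc_loc[2].weight.data.zero_()
        self.fc_loc[2].bias.data.copy_(
            torch.tensor([1, 0, 0, 0, 1, 0], dtype=torch.float)
        )

    def forward(self, x):
        features = self.localization(x)
        theta = self.fc_loc(features.flatten(1)).view(-1, 2, 3)
        # Grid generator: sampling locations for every output pixel.
        grid = F.affine_grid(theta, x.size(), align_corners=False)
        # Sampler: bilinear interpolation at those locations.
        return F.grid_sample(x, grid, mode="bilinear", align_corners=False)


stn = SpatialTransformer()
batch = torch.rand(4, 1, 28, 28)
warped = stn(batch)
```

Because the regression head is initialized to the identity transform, the module passes its input through essentially unchanged before training. In practice, the warped output would feed into the classification layers of a CNN, and the whole model would be trained end to end.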

Although STNs have inherently efficient architectures, some optimization is needed because of the complex use cases they handle. This is especially true when it comes to training these models.

Best practices include the careful selection of appropriate loss functions and regularization techniques. A transformation consistency loss and a task-specific loss are typically combined: the former keeps STN transformations from distorting the data, while the latter ensures that the output is useful for the task at hand.
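One way to picture combining the two losses is as a weighted sum. The sketch below is only an assumption about what a consistency term might look like: it penalizes how far a 2x3 affine matrix strays from the identity. The function names and the `consistency_weight` parameter are hypothetical, and real projects may define the consistency term quite differently.

```python
def identity_penalty(theta):
    """Sum of squared differences between a 2x3 affine matrix and the identity.

    Assumption: this stands in for a transformation consistency term.
    """
    identity = [[1.0, 0.0, 0.0], [0.0, 1.0, 0.0]]
    return sum(
        (theta[r][c] - identity[r][c]) ** 2
        for r in range(2)
        for c in range(3)
    )


def combined_loss(task_loss, theta, consistency_weight=0.1):
    """Task loss plus a weighted penalty on extreme transformations."""
    return task_loss + consistency_weight * identity_penalty(theta)


# Same task loss, but the second transform scales the image threefold.
mild = combined_loss(0.5, [[1.0, 0.0, 0.0], [0.0, 1.0, 0.0]])
severe = combined_loss(0.5, [[3.0, 0.0, 0.0], [0.0, 3.0, 0.0]])
```

The weight controls the trade-off: a larger value discourages aggressive warping, while a smaller value lets the task loss dominate.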

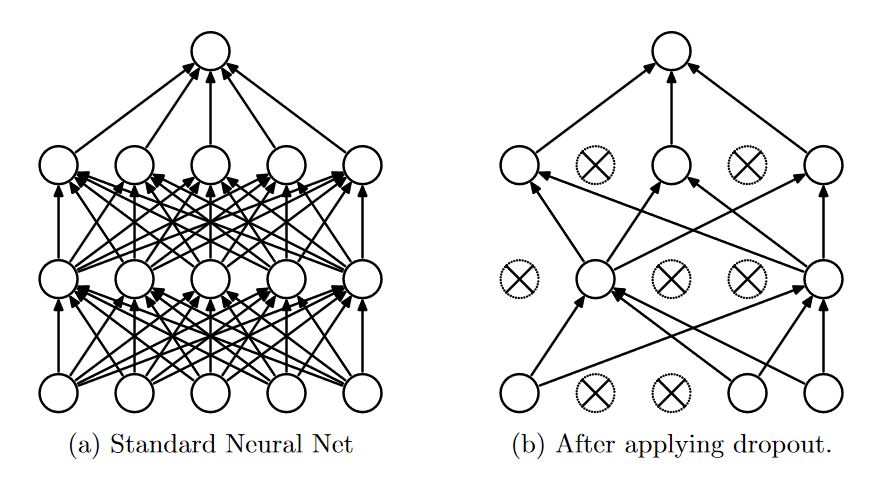

Regularization techniques help avoid overfitting the model to its training data, which can negatively impact its ability to generalize to new or unseen use cases.

Several regularization techniques are useful in the development of STNs. These include dropout, L2 regularization (weight decay), and early stopping. Of course, improving the size, scope, and diversity of the training data itself is also essential.
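Of these techniques, early stopping is the simplest to sketch without any framework. The helper below is a generic illustration, not specific to STNs: the `EarlyStopping` class, its `patience` and `min_delta` parameters, and the validation losses are all made up for the example.

```python
class EarlyStopping:
    """Stop training once validation loss stops improving."""

    def __init__(self, patience=3, min_delta=0.0):
        self.patience = patience      # epochs to wait without improvement
        self.min_delta = min_delta    # smallest change that counts as improvement
        self.best_loss = float("inf")
        self.bad_epochs = 0

    def should_stop(self, val_loss):
        if val_loss < self.best_loss - self.min_delta:
            # New best: remember it and reset the counter.
            self.best_loss = val_loss
            self.bad_epochs = 0
        else:
            self.bad_epochs += 1
        return self.bad_epochs >= self.patience


# Illustrative validation losses, one per epoch.
val_losses = [0.90, 0.70, 0.65, 0.66, 0.67, 0.68, 0.60]
stopper = EarlyStopping(patience=3)
stopped_at = None
for epoch, loss in enumerate(val_losses):
    if stopper.should_stop(loss):
        stopped_at = epoch
        break
```

Here training halts after three consecutive epochs without improvement, so the final value in the list is never reached. Choosing the patience is a judgment call between stopping too early and overfitting.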

Performance of Spatial Transformer Networks vs Other Solutions

Since their introduction in 2015, STNs have greatly advanced the field of computer vision. They empower neural networks to perform spatial transformations that standardize variable input data.

In this way, STNs are helping to solve a stubborn weakness of most standard convolutional networks: a lack of robustness when executing computer vision tasks on datasets where objects have varying presentations.

In the original paper, Jaderberg et al. tested the STN against traditional solutions using a variety of data. In noisy environments, the various models achieved the following error rates when processing MNIST datasets:

- Fully Connected Network (FCN): 13.2%

- CNN: 3.5%

- ST-FCN: 2.0%

- ST-CNN: 1.7%

As you can see, both of the spatial transformer-containing models significantly outperformed their conventional predecessors. In particular, the ST-FCN reduced the error rate of the standard FCN by a factor of more than 6.
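The size of these gaps is easy to check from the error rates listed above. The short sketch below computes how many times lower each spatial transformer model's error is than its baseline. The helper name `improvement_factor` is just for illustration.

```python
# Error rates (%) on noisy MNIST, as listed above.
error_rates = {"FCN": 13.2, "CNN": 3.5, "ST-FCN": 2.0, "ST-CNN": 1.7}


def improvement_factor(baseline, improved):
    """How many times lower the improved model's error rate is."""
    return error_rates[baseline] / error_rates[improved]


fcn_factor = improvement_factor("FCN", "ST-FCN")  # about 6.6
cnn_factor = improvement_factor("CNN", "ST-CNN")  # about 2.06
```

The FCN benefits far more than the CNN, which already gains some spatial tolerance from its convolution and pooling layers.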

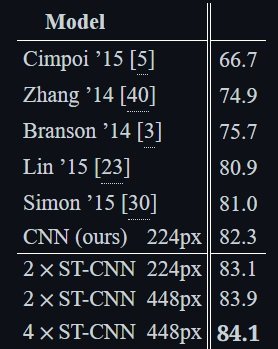

In another experiment, they tested the ability of these models to accurately classify images of birds.

The results again showed a tangible performance improvement when comparing STNs to other contemporary solutions.

As you can see from the sample images in both experiments, the subjects have widely different poses and orientations. In the bird samples, some are captured in dynamic flight while others are stationary, shot from different angles and focal lengths. The backgrounds also vary greatly in color and texture.

Further Research

Other research has shown promising results from integrating STNs with other models, like Recurrent Neural Networks (RNNs). In particular, this pairing has shown substantial performance improvements in sequence prediction tasks. This includes, for example, digit recognition on cluttered backgrounds, similar to the MNIST experiment.

The paper’s proposed RNN-SPN model achieved an error rate of just 1.5%, compared to 2.9% for a CNN and 2.0% for a CNN with SPN layers.

Generative Adversarial Networks (GANs) are another type of model with the potential to benefit from STNs, as so-called ST-GANs. STNs may very well help to improve the sequence prediction as well as image generation capabilities of GANs.

Real-World Applications and Case Studies of Spatial Transformer Networks

The broad benefits of STNs and their versatility mean they’re being used in a wide variety of use cases. STNs have already proven their potential worth in several real-world situations:

- Healthcare: STNs are used to heighten the precision of medical imaging and diagnostic tools. Subjects such as tumors may have highly nuanced variations in appearance. Apart from actual medical care, they can also be used to improve compliance and operational efficiency in hospital settings.

- Autonomous Vehicles: Self-driving and driver-assist systems must deal with dynamic and visually complex scenarios. They also need to be able to perform in real time to be useful. STNs can help with both by improving trajectory prediction, thanks to their relative computational efficiency. Performance in these scenarios can be further improved by including temporal processing capabilities.

- Robotics: In various robotics applications, STNs contribute to more precise object tracking and interaction. This is especially true for complex and new environments where the robot will perform object-handling tasks.

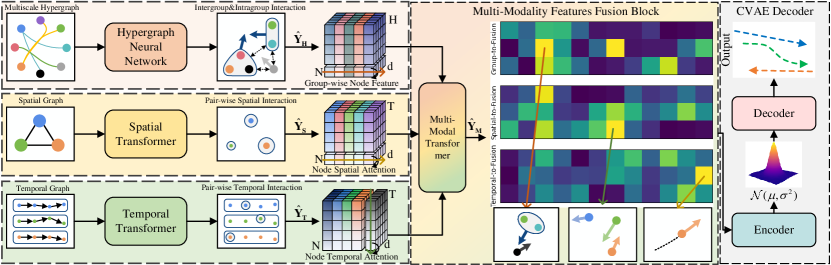

In a telling case study, researchers proposed TransMOT, a Spatial-Temporal Graph Transformer for Multiple Object Tracking. The goal of this study was to improve the ability of robotics systems to handle and interact with objects in varied environments. The team implemented STNs specifically to aid the robot’s perception systems for improved object recognition and manipulation.

Indeed, variations and iterations of the TransMOT model showed significant performance increases over its counterparts in a range of tests.

What’s Next for Spatial Transformer Networks?

To continue learning about machine learning and computer vision, check out our other blogs: