Large Language Models, or LLMs, are a fairly popular term when discussing artificial intelligence (AI). With the advent of platforms like ChatGPT, the term has become a household word. Today, LLMs are built into search engines and social media apps such as WhatsApp and Instagram. LLMs have changed how we interact with the internet: finding relevant information or performing specific tasks has never been this easy.

What are Large Language Models (LLMs)?

In generative AI, human language is treated as a difficult data type. A computer program trained on enough data that it can analyze, understand, and generate responses in natural language and other forms of content is called a Large Language Model (LLM). LLMs are trained on vast curated training data, with sizes ranging from thousands to millions of gigabytes.

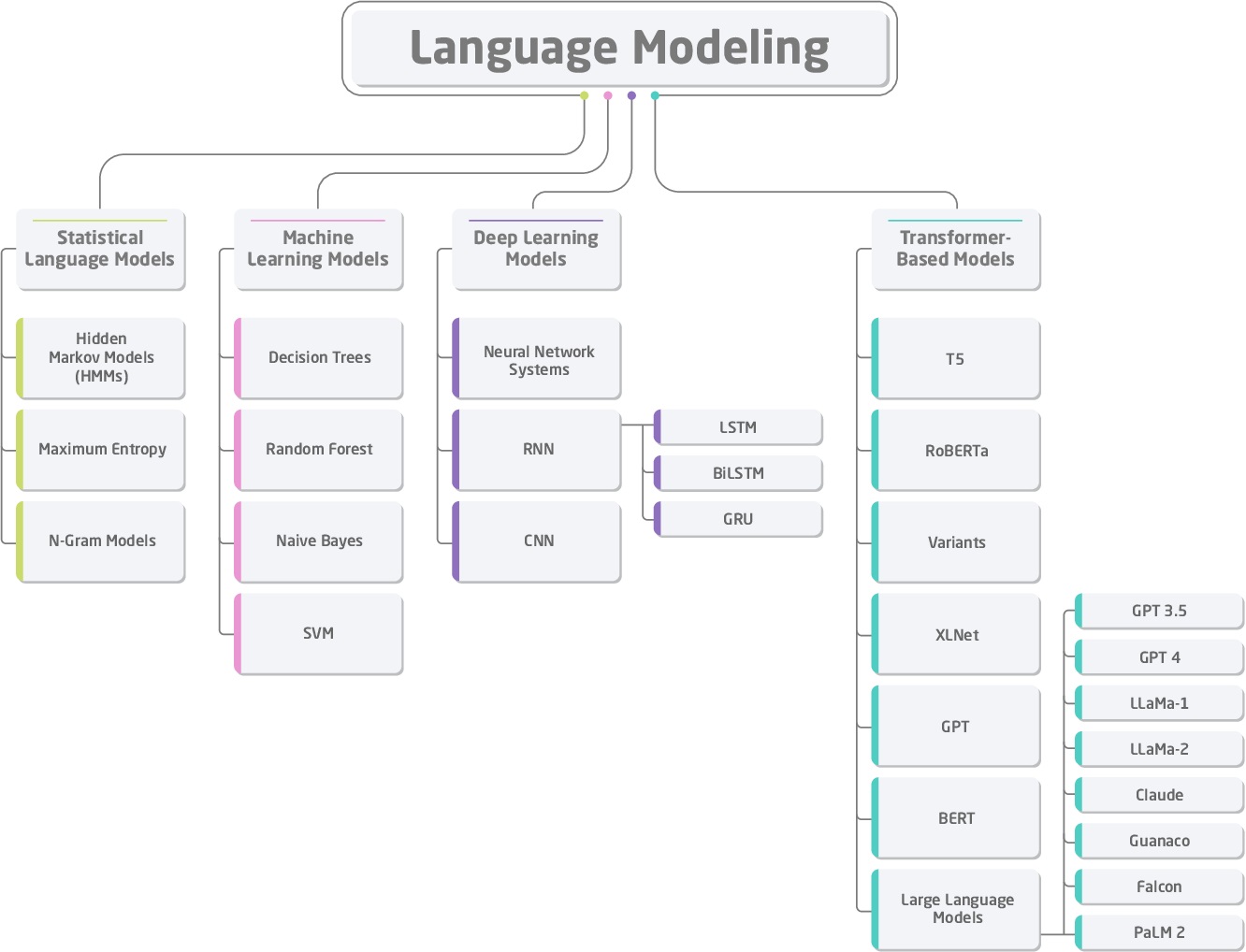

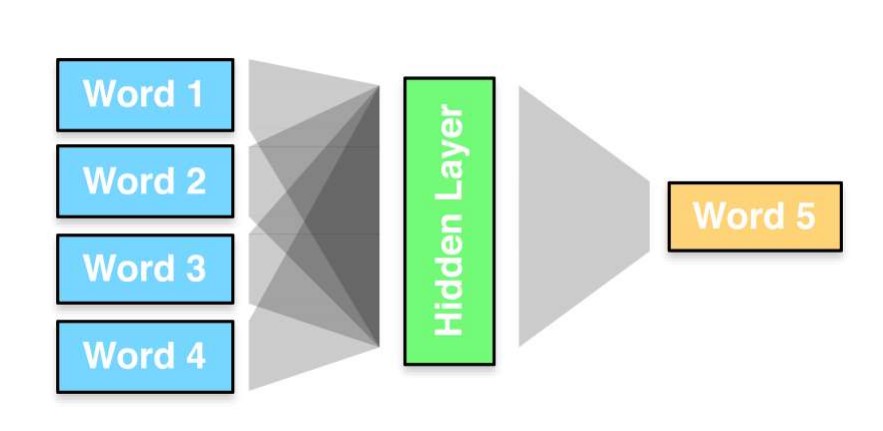

A simple way to describe an LLM is as an AI algorithm capable of understanding and producing human language. Machine learning, specifically deep learning, is the backbone of every LLM. It makes an LLM capable of interpreting language input based on the patterns and complexity of characters and words in natural language.

LLMs are pre-trained on extensive data from the web and produce results after learning the complexity, patterns, and relationships in the language.

Today, LLMs can comprehend and generate a range of content forms such as text, speech, pictures, and videos, to name a few. LLMs power Natural Language Processing (NLP), machine translation, and Visual Question Answering (VQA).

One of the most common examples of an LLM is a virtual voice assistant such as Siri or Alexa. When you ask, “What’s the weather today?”, the assistant understands your question, finds out what the weather is like, and gives a logical answer. This smooth interaction between machine and human happens because of Large Language Models. Thanks to these models, the assistant can read user input in natural language and respond accordingly.

Emergence and History of LLMs

Artificial Neural Networks (ANNs) and Rule-based Models

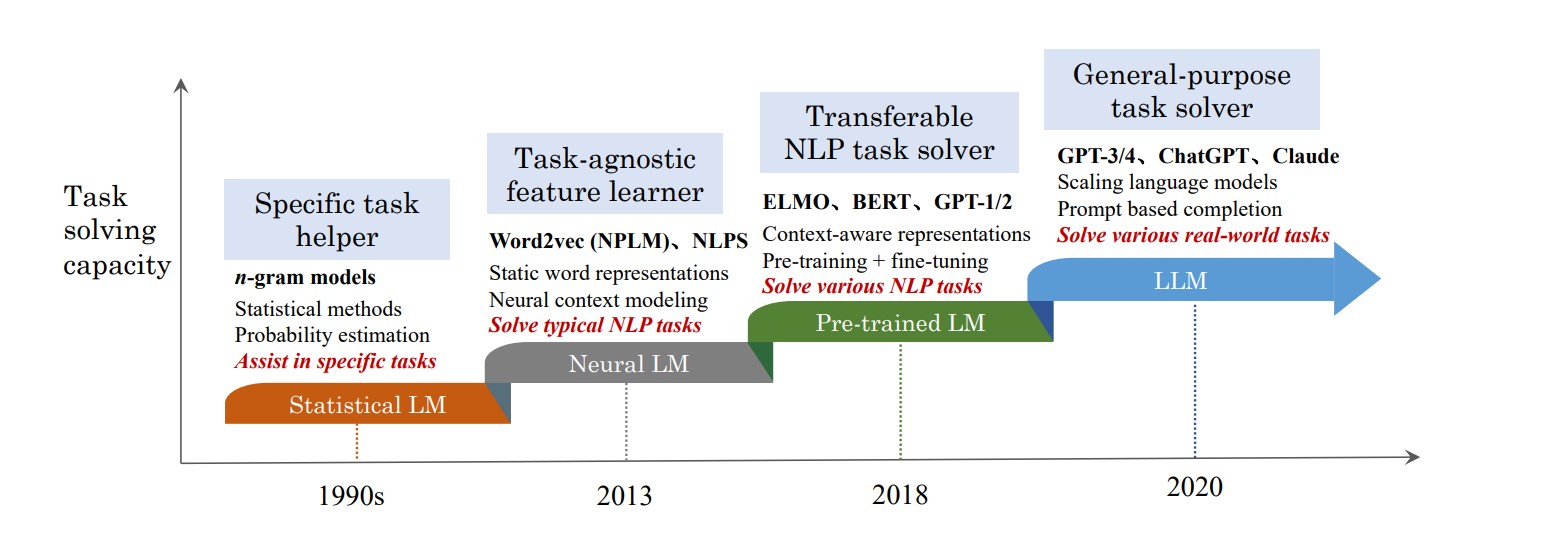

The foundation of these Computational Linguistics (CL) models dates back to the 1940s, when Warren McCulloch and Walter Pitts laid the groundwork for AI. This early research was not about designing a system but about exploring the fundamentals of Artificial Neural Networks. However, the very first language model was a rule-based model developed in the 1950s. These models could understand and produce natural language using predefined rules but could not comprehend complex language or maintain context.

Statistics-based Models

With the rise of statistical models, the language models developed in the 1990s could predict and analyze language patterns. Because they work with probabilities, they are applicable to speech recognition and machine translation.
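To make the idea of a probability-based language model concrete, here is a minimal sketch of a bigram model, which estimates the probability of the next word from counts of adjacent word pairs. The function names `train_bigram` and `next_word_probs` and the three-sentence corpus are made up for illustration; the systems of that era used much larger corpora and smoothing techniques.

```python
from collections import Counter, defaultdict

def train_bigram(corpus):
    """Count how often each word follows another in a list of sentences."""
    counts = defaultdict(Counter)
    for sentence in corpus:
        words = sentence.lower().split()
        for prev, nxt in zip(words, words[1:]):
            counts[prev][nxt] += 1
    return counts

def next_word_probs(counts, prev):
    """Turn the raw follow-up counts for `prev` into probabilities."""
    total = sum(counts[prev].values())
    return {word: c / total for word, c in counts[prev].items()}

# Toy corpus (illustrative only)
corpus = ["the cat sat", "the cat ran", "the dog sat"]
model = train_bigram(corpus)
probs = next_word_probs(model, "the")  # "cat" follows "the" twice, "dog" once
```

A word that never appeared in the corpus simply yields an empty dictionary, which hints at why such models struggle with unseen text.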

Introduction of Word Embeddings

The introduction of word embeddings initiated great progress in LLMs and NLP. These models, created in the mid-2000s, could capture semantic relationships accurately by representing words in a continuous vector space.

Recurrent Neural Network Language Models (RNNLM)

A decade later, Recurrent Neural Network Language Models (RNNLM) were introduced to handle sequential data. These RNN language models were the first to keep context across different parts of the text for a better understanding of language and output generation.

Google Neural Machine Translation (GNMT)

In 2016, Google introduced the revolutionary Google Neural Machine Translation (GNMT) system for machine translation. GNMT featured a deep neural network dedicated to sentence-level translation rather than individual word-based translation, with a better approach to unsupervised learning.

It works on a shared encoder-decoder architecture with long short-term memory (LSTM) networks to capture context and generate accurate translations. Large datasets were used to train these models. Before this model, covering some complex patterns in the language and adapting to possible language structures was not possible.

Recent Developments

In recent years, transformer-based deep learning language models such as BERT (Bidirectional Encoder Representations from Transformers) and GPT-1 (Generative Pre-trained Transformer) were introduced by Google and OpenAI, respectively. BERT uses a bidirectional approach to understand context from both directions in a sentence, while GPT generates coherent text by predicting the next word in a sequence. Both improve tasks like question answering and sentiment analysis.

With the recent release of GPT-4 and GPT-4o, these models are becoming more sophisticated by adding billions of parameters and setting new standards in NLP tasks.

Role of Large Language Models in Modern NLP

Large Language Models are considered a subset of Natural Language Processing (NLP), so their progress also matters for NLP as a whole. Models such as BERT and GPT-3 (an improved version of GPT-1 and GPT-2) made NLP tasks better and more polished.

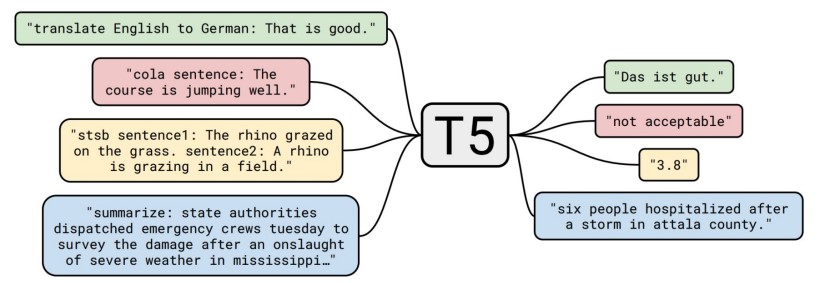

These language generation models require large datasets to train, and they use architectures like transformers to maintain long-range dependencies in text. For example, BERT can use the context of a word like “bank” to differentiate whether it refers to a financial institution or the side of a river.

OpenAI’s GPT-3, with its 175 billion parameters, is another prominent example. Generating coherent and contextually relevant text at this level was made possible by models like GPT-3. An example of GPT-3’s capability is its ability to complete sentences and paragraphs fluently, given a prompt.

LLMs show excellent performance in data-to-text tasks such as making suggestions based on your preferences, translating between languages, and even creative writing. These models are trained on large datasets and then fine-tuned for the specific application.

While making great progress, LLMs give rise to challenges as well. These include biases in the training set and rising computation costs, since extensive training and deployment require a great deal of resources.

Understanding The Working of LLMs – Transformer Architecture

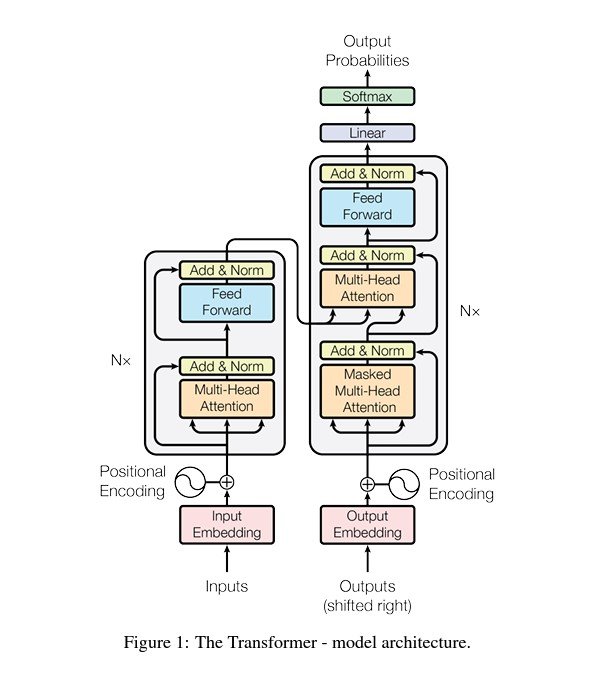

The Transformer, a deep learning architecture, serves as the cornerstone of modern LLMs and NLP, not only because it is comparatively efficient but because of its ability to handle sequential data and capture the long-range dependencies that Large Language Models need. Introduced by Vaswani et al. in the seminal paper “Attention Is All You Need”, the Transformer model revolutionized how language models process and generate text.

Transformer Architecture

A transformer architecture primarily consists of an encoder and a decoder. Both contain self-attention mechanisms and feed-forward neural networks. Rather than processing the data one element at a time, transformers can process input data in parallel and maintain long-range dependencies.

1. Tokenization

Every text-based input is first split into smaller units called tokens. Tokenization converts each token into a number representing its position in a predefined vocabulary.
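As a rough sketch of this step, the snippet below builds a tiny vocabulary and maps text to integer IDs. It assumes simple whitespace splitting and a made-up `<unk>` entry for unknown words; production LLM tokenizers typically use subword schemes such as byte-pair encoding instead. The names `build_vocab` and `tokenize` are illustrative.

```python
def build_vocab(texts):
    """Assign each distinct lowercase word an integer ID; ID 0 is reserved for unknown words."""
    vocab = {"<unk>": 0}
    for text in texts:
        for word in text.lower().split():
            if word not in vocab:
                vocab[word] = len(vocab)
    return vocab

def tokenize(text, vocab):
    """Map each whitespace-separated word to its ID, falling back to <unk>."""
    return [vocab.get(word, vocab["<unk>"]) for word in text.lower().split()]

vocab = build_vocab(["the cat sat on the mat"])
ids = tokenize("the dog sat", vocab)  # "dog" is not in the vocabulary
print(ids)  # [1, 0, 3]
```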

2. Embedding Layer

Tokens are passed through an embedding layer, which maps them to high-dimensional vectors that capture their semantic meaning.
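Conceptually, an embedding layer is a lookup table with one vector per token ID. The sketch below assumes a randomly initialized table, as it would be before training; in a real model these vectors are learned so that related tokens end up close together. The names `make_embedding_table` and `embed` and the sizes used are hypothetical.

```python
import random

def make_embedding_table(vocab_size, dim, seed=0):
    """Create one random vector of length `dim` per token ID (untrained, for illustration)."""
    rng = random.Random(seed)
    return [[rng.uniform(-1.0, 1.0) for _ in range(dim)] for _ in range(vocab_size)]

def embed(token_ids, table):
    """Look up the vector for each token ID."""
    return [table[i] for i in token_ids]

table = make_embedding_table(vocab_size=6, dim=4)
vectors = embed([1, 0, 3], table)  # three tokens, each mapped to a 4-dimensional vector
```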

3. Positional Encoding

This step adds positional encodings to the embeddings to help the model retain the order of tokens, since transformers process sequences in parallel.
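One common choice, and the one used in “Attention Is All You Need”, is a fixed sinusoidal encoding in which even dimensions use a sine and odd dimensions use a cosine of a position-dependent angle. The sketch below assumes that scheme; other models learn their positional vectors instead. The function names are illustrative.

```python
import math

def positional_encoding(seq_len, dim):
    """Sinusoidal encoding: sin on even dimensions, cos on odd dimensions."""
    pe = []
    for pos in range(seq_len):
        row = []
        for i in range(dim):
            # Dimensions 2k and 2k+1 share the same frequency
            angle = pos / (10000 ** ((2 * (i // 2)) / dim))
            row.append(math.sin(angle) if i % 2 == 0 else math.cos(angle))
        pe.append(row)
    return pe

def add_positions(embeddings):
    """Add the positional encoding element-wise to a list of token embeddings."""
    pe = positional_encoding(len(embeddings), len(embeddings[0]))
    return [[e + p for e, p in zip(vec, pos)] for vec, pos in zip(embeddings, pe)]

pe = positional_encoding(seq_len=3, dim=4)
```

Because each position gets a distinct pattern, two identical tokens at different positions end up with different input vectors.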

4. Self-Attention Mechanism

For every token, the self-attention mechanism computes three vectors: a query, a key, and a value.

The dot product of queries with keys determines token relevance. The results are normalized using softmax and then applied to the value vectors to get a context-aware word representation.
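The computation described above can be sketched in plain Python as follows. This version also divides the scores by the square root of the key dimension, as in the scaled dot-product attention of Vaswani et al. It takes the query, key, and value vectors as given, whereas a real model derives them from the token embeddings through learned projection matrices. The helper names are illustrative.

```python
import math

def softmax(xs):
    """Numerically stable softmax over a list of numbers."""
    m = max(xs)
    exps = [math.exp(x - m) for x in xs]
    total = sum(exps)
    return [e / total for e in exps]

def dot(a, b):
    return sum(x * y for x, y in zip(a, b))

def self_attention(queries, keys, values):
    """For each query, weight every value by the softmax of the scaled query-key dot products."""
    d_k = len(keys[0])
    outputs = []
    for q in queries:
        scores = [dot(q, k) / math.sqrt(d_k) for k in keys]
        weights = softmax(scores)
        out = [sum(w * v[j] for w, v in zip(weights, values))
               for j in range(len(values[0]))]
        outputs.append(out)
    return outputs

# Two toy tokens; each attends most strongly to itself
x = [[1.0, 0.0], [0.0, 1.0]]
context = self_attention(x, x, x)
```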

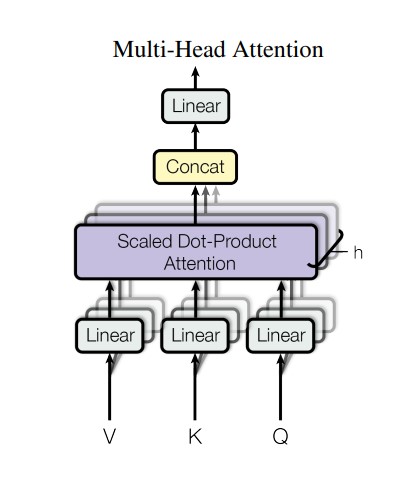

5. Multi-Head Attention

Each head attends to different aspects of the input sequence. The outputs of all heads are concatenated and linearly transformed, resulting in a better understanding of complex language structures.
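The following is a deliberately simplified sketch of the split, attend, concatenate, and transform pattern. It assumes each head simply works on its own slice of the token vectors and omits the learned per-head query, key, and value projections that a real transformer uses. The optional `w_o` matrix stands in for the final linear transformation; all names are hypothetical.

```python
import math

def softmax(xs):
    m = max(xs)
    exps = [math.exp(x - m) for x in xs]
    total = sum(exps)
    return [e / total for e in exps]

def attention(q, k, v):
    """Scaled dot-product attention over lists of vectors."""
    scale = math.sqrt(len(k[0]))
    out = []
    for qi in q:
        weights = softmax([sum(a * b for a, b in zip(qi, kj)) / scale for kj in k])
        out.append([sum(w * vj[c] for w, vj in zip(weights, v)) for c in range(len(v[0]))])
    return out

def split_heads(x, num_heads):
    """Slice every token vector into num_heads equal chunks (dimension must be divisible)."""
    size = len(x[0]) // num_heads
    return [[vec[h * size:(h + 1) * size] for vec in x] for h in range(num_heads)]

def multi_head_attention(x, num_heads, w_o=None):
    # Run attention independently on each head's slice of the input
    heads = [attention(part, part, part) for part in split_heads(x, num_heads)]
    # Concatenate the head outputs token by token
    concat = [sum((head[t] for head in heads), []) for t in range(len(x))]
    if w_o is None:
        return concat
    # Final linear transformation: each row is multiplied by the matrix w_o
    return [[sum(row[i] * w_o[i][j] for i in range(len(row))) for j in range(len(w_o[0]))]
            for row in concat]

x = [[1.0, 0.0, 0.0, 1.0], [0.0, 1.0, 1.0, 0.0]]
out = multi_head_attention(x, num_heads=2)
```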

6. Feed-Forward Neural Networks (FFNNs)

FFNNs process each token independently. Each consists of two linear transformations with a ReLU activation in between that adds non-linearity.
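A minimal sketch of such a position-wise feed-forward block is shown below. The weights are small hand-picked numbers chosen only to make the arithmetic easy to follow; in a trained model they are learned and much larger. The function names are illustrative.

```python
def relu(xs):
    """Replace negative values with zero."""
    return [max(0.0, x) for x in xs]

def linear(x, weights, bias):
    """Compute x @ weights + bias for one vector x; weights has shape (len(x), len(bias))."""
    return [sum(xi * weights[i][j] for i, xi in enumerate(x)) + bias[j]
            for j in range(len(bias))]

def feed_forward(tokens, w1, b1, w2, b2):
    """Apply linear -> ReLU -> linear to every token vector independently."""
    return [linear(relu(linear(t, w1, b1)), w2, b2) for t in tokens]

# Hand-picked toy weights (illustrative only)
w1, b1 = [[1.0, -1.0], [0.0, 1.0]], [0.0, 0.0]
w2, b2 = [[1.0], [1.0]], [0.5]
result = feed_forward([[2.0, 1.0], [-1.0, 3.0]], w1, b1, w2, b2)
print(result)  # [[2.5], [4.5]]
```

Note that the output for one token never depends on the other token, which is what "independently" means here.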

7. Encoder

The encoder processes the input sequence and produces a context-rich representation. It involves multiple layers of multi-head attention and FFNNs.

8. Decoder

The decoder generates the output sequence. It processes the encoder’s output using an additional cross-attention mechanism that connects the input and output sequences.

9. Output Generation

The output is generated as a vector of logits for each token. A softmax layer is applied to the logits to convert them into probability scores. The token with the highest score is chosen as the next word in the sequence.
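This last step can be sketched as follows, assuming a toy three-word vocabulary and made-up logits. Picking the highest-probability token is known as greedy decoding; many systems sample from the distribution instead. The name `pick_next_token` is illustrative.

```python
import math

def softmax(logits):
    """Convert raw scores into probabilities that sum to 1."""
    m = max(logits)
    exps = [math.exp(x - m) for x in logits]
    total = sum(exps)
    return [e / total for e in exps]

def pick_next_token(logits, id_to_token):
    """Greedy decoding: return the most probable token and its probability."""
    probs = softmax(logits)
    best = max(range(len(probs)), key=lambda i: probs[i])
    return id_to_token[best], probs[best]

# Toy vocabulary and logits (illustrative only)
id_to_token = ["cat", "dog", "mat"]
token, prob = pick_next_token([1.0, 0.5, 3.0], id_to_token)
print(token)  # mat
```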

Example

For a simple translation task performed by a Large Language Model, the encoder processes the input sentence in the source language to construct a context-rich representation, and the decoder generates a translated sentence in the target language based on the encoder’s output and the previously generated tokens.

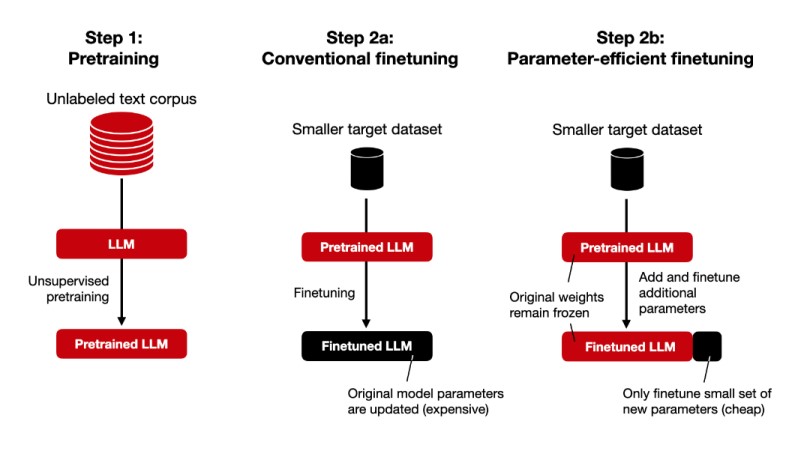

Customization and Fine-Tuning of LLMs For A Specific Task

It is possible to process entire sentences simultaneously using the transformer’s self-attention mechanism. This is the foundation of the transformer architecture. However, to further improve its efficiency and make it applicable to a particular application, a general transformer model needs fine-tuning.

Steps For Fine-Tuning

- Data Collection: Collect only the data relevant to your specific task to ensure the model achieves high accuracy.

- Data Preprocessing: Based on your dataset and its nature, normalize and tokenize text, remove stop words, and perform morphological analysis to prepare data for training.

- Selecting a Model: Choose an appropriate pre-trained model (e.g., GPT-4, BERT) based on your specific task requirements.

- Hyperparameter Tuning: To improve model performance, adjust the learning rate, batch size, number of epochs, and dropout rate.

- Fine-Tuning: Apply parameter-efficient fine-tuning (PEFT) techniques such as LoRA to fine-tune the model on domain-specific data.

- Evaluation and Deployment: Use metrics such as accuracy, precision, recall, and F1 score to evaluate the model, then deploy the fine-tuned model for your task.
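For the evaluation step, the metrics named above can be computed for a binary classification task as in the sketch below. The labels and predictions are made up for illustration, and the function name `classification_metrics` is hypothetical; in practice, an established library is usually used for this.

```python
def classification_metrics(y_true, y_pred, positive=1):
    """Compute accuracy, precision, recall, and F1 for one positive class."""
    pairs = list(zip(y_true, y_pred))
    tp = sum(1 for t, p in pairs if t == positive and p == positive)
    fp = sum(1 for t, p in pairs if t != positive and p == positive)
    fn = sum(1 for t, p in pairs if t == positive and p != positive)
    accuracy = sum(1 for t, p in pairs if t == p) / len(pairs)
    # Guard against division by zero when a class is never predicted or never present
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    f1 = 2 * precision * recall / (precision + recall) if precision + recall else 0.0
    return {"accuracy": accuracy, "precision": precision, "recall": recall, "f1": f1}

# Made-up labels: 1 = positive sentiment, 0 = negative sentiment
y_true = [1, 0, 1, 1, 0, 1]
y_pred = [1, 0, 0, 1, 1, 1]
metrics = classification_metrics(y_true, y_pred)
```

Here the model gets four of six examples right, with one false positive and one false negative, so precision, recall, and F1 all come out to 0.75.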

Large Language Models’ Use Cases and Applications

Medicine

Large Language Models combined with Computer Vision have become a great tool for radiologists. They use LLMs for radiologic decision purposes, analyzing images so they can get second opinions. General physicians and consultants also use LLMs like ChatGPT to get answers to genetics-related questions from verified sources.

LLMs also automate doctor-patient interaction, reducing the risk of infection and providing relief for those unable to travel. This was a remarkable breakthrough in the medical sector, especially during pandemics like COVID-19. Tools like XrayGPT automate the analysis of X-ray images.

Education

Large Language Models have made learning material more interactive and easily accessible. With search engines based on AI models, teachers can provide students with more personalized courses and learning resources. Moreover, AI tools such as Khanmigo, a virtual tutor by Khan Academy, can offer one-on-one engagement and customized learning plans, using student performance data to make targeted recommendations.

Several studies show that ChatGPT’s performance on the United States Medical Licensing Examination (USMLE) met or exceeded the passing score.

Finance

Risk assessment, automated trading, business report analysis, and support reporting can be performed using LLMs. Models like BloombergGPT achieve excellent results for news classification, entity recognition, and question-answering tasks.

LLMs integrated with Customer Relationship Management (CRM) systems have become an essential tool for most businesses, as they automate many business operations.

Other Applications

- Developers are using LLMs to write and debug their code.

- Content creation becomes very easy with LLMs. They can generate blogs or YouTube scripts in no time.

- LLMs can take details about a piece of land and its location as input and indicate whether it is suitable for agriculture.

- Tools like PDFGPT help automate literature reviews and extract relevant data or summarize text from selected research papers.

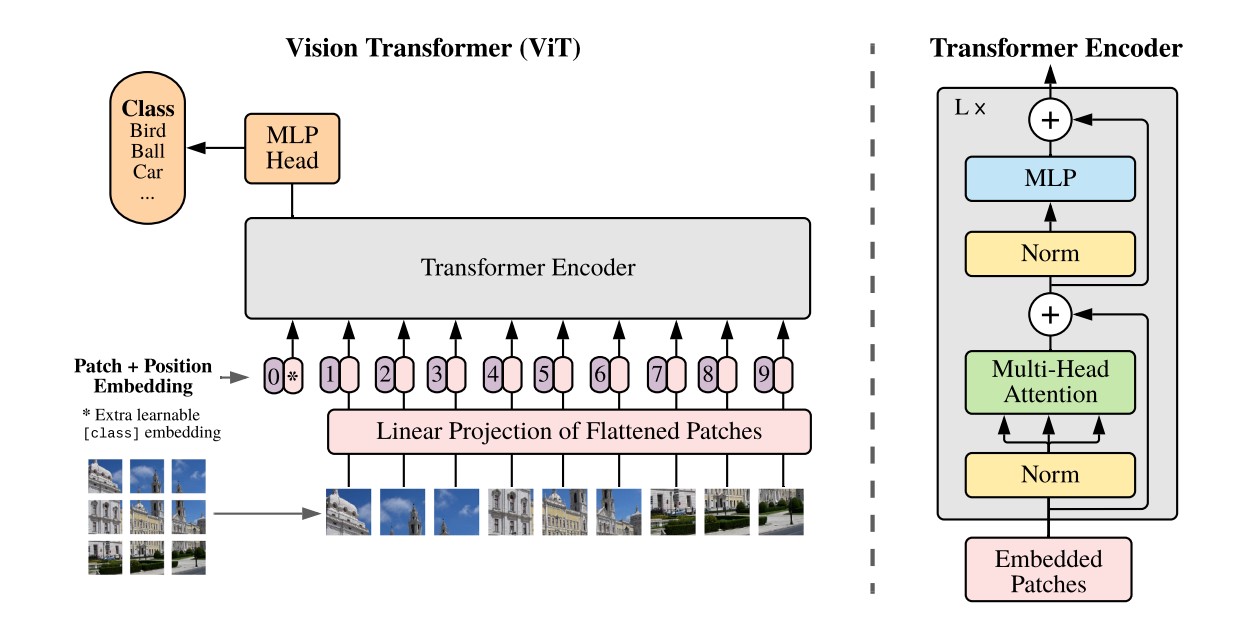

- Models like Vision Transformers (ViT) apply LLM concepts to image recognition, which helps in medical imaging.

What’s Next?

Before LLMs, it wasn’t easy to communicate with machines. Now, Large Language Models are a part of our everyday life, and being able to talk to computers seems almost too good to be true. We can get more personalized responses and understand them thanks to their text-generation ability.

LLMs fill the long-standing gap between machine and human communication. Going forward, these models need more task-specific modeling and more accurate results. As they grow more accurate and sophisticated over time, imagine what we can achieve with the convergence of LLMs, Computer Vision, and Robotics.
