Photonic computing startup Lightmatter is taking its big shot at the rapidly growing AI computation market with a hardware-software combo it claims will help the industry level up, and save a lot of electricity to boot.

Lightmatter’s chips essentially use light flowing through optical circuits to solve computational processes like matrix-vector products. This math is at the heart of a lot of AI work and is currently performed by GPUs and TPUs that specialize in it but use traditional silicon gates and transistors.
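To make the math concrete: a matrix-vector product turns an input vector into an output vector in which each element is a weighted sum of all the inputs, and a neural network layer is largely this operation repeated at scale. The minimal Python sketch below shows only the arithmetic; the function name `matvec` is illustrative and has nothing to do with Lightmatter's software.

```python
def matvec(weights: list[list[float]], x: list[float]) -> list[float]:
    """Multiply a matrix (a list of rows) by a vector: y[i] = sum_j W[i][j] * x[j]."""
    if any(len(row) != len(x) for row in weights):
        raise ValueError("each row of weights must match the length of x")
    # Each output is one weighted sum (a dot product of a row with x).
    return [sum(w * v for w, v in zip(row, x)) for row in weights]


# A tiny 2x3 "layer": 3 inputs, 2 outputs.
W = [[1.0, 0.0, 2.0],
     [0.5, -1.0, 1.0]]
x = [3.0, 1.0, 2.0]
print(matvec(W, x))  # [7.0, 2.5]
```

An n-by-n product needs n² multiply-accumulates, which is why hardware that performs them faster or with less energy matters so much for AI workloads.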

The issue with these is that we’re approaching the limits of density, and therefore speed, for a given wattage or size. Advances are still being made, but at great cost and by pushing the edges of classical physics. The supercomputers that make training models like GPT-4 possible are enormous, consume huge amounts of power and produce a lot of waste heat.

“The biggest companies in the world are hitting an energy power wall and experiencing massive challenges with AI scalability. Traditional chips push the boundaries of what’s possible to cool, and data centers produce increasingly large energy footprints. AI advances will slow significantly unless we deploy a new solution in data centers,” said Lightmatter CEO and founder Nick Harris.

“Some have projected that training a single large language model can take more energy than 100 U.S. homes consume in a year. Additionally, there are estimates that 10%-20% of the world’s total power will go to AI inference by the end of the decade unless new compute paradigms are created.”

Lightmatter, of course, intends to be one of those new paradigms. Its approach is, at least potentially, faster and more efficient, using arrays of microscopic optical waveguides to let the light essentially perform logic operations just by passing through them: a sort of analog-digital hybrid. Since the waveguides are passive, the main power draw is creating the light itself, then reading and handling the output.
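As a rough illustration of the “analog-digital hybrid” idea, here is a toy Python model. It is an assumption-laden sketch, not Lightmatter’s design: digital inputs are quantized as if by a DAC driving the light source, the multiply-accumulate happens passively in the array, and each output is quantized again as if read out by a photodetector and ADC. The weights are assumed to be already programmed into the array, and the 8-bit default resolution and the names `quantize` and `hybrid_matvec` are hypothetical. It counts active conversions versus passive multiply-accumulates rather than modeling actual energy.

```python
def quantize(value: float, bits: int, full_scale: float) -> float:
    """Snap value onto a signed grid with 2**(bits-1) - 1 steps on each side of zero,
    clipping anything outside [-full_scale, full_scale]."""
    if bits < 2 or full_scale <= 0:
        raise ValueError("need bits >= 2 and a positive full_scale")
    steps = 2 ** (bits - 1) - 1
    clipped = max(-full_scale, min(full_scale, value))
    return round(clipped / full_scale * steps) * full_scale / steps


def hybrid_matvec(weights: list[list[float]], x: list[float],
                  bits: int = 8, full_scale: float = 1.0):
    """Toy analog-digital hybrid matrix-vector product (hypothetical model).

    Assumes weights are pre-programmed into the passive array and that all
    weights and inputs stay within full_scale, so each output fits in
    full_scale * len(x).
    """
    if not x:
        raise ValueError("x must be non-empty")
    if any(len(row) != len(x) for row in weights):
        raise ValueError("each row of weights must match the length of x")
    stats = {"dac_conversions": 0, "adc_conversions": 0, "passive_macs": 0}

    # Digital -> light: quantize each input, as a DAC feeding the light source would.
    encoded = []
    for v in x:
        encoded.append(quantize(v, bits, full_scale))
        stats["dac_conversions"] += 1

    outputs = []
    for row in weights:
        # On the chip this weighted sum would happen as light propagates through
        # the waveguides; here we simply compute it and count the MACs.
        acc = sum(w * v for w, v in zip(row, encoded))
        stats["passive_macs"] += len(row)
        # Light -> digital: quantize the result, as a photodetector + ADC would.
        outputs.append(quantize(acc, bits, full_scale * len(x)))
        stats["adc_conversions"] += 1
    return outputs, stats


W = [[0.5, -0.25], [0.1, 0.9]]
y, counts = hybrid_matvec(W, [0.8, -0.4])
print(y)       # close to the exact result [0.5, -0.28]
print(counts)  # {'dac_conversions': 2, 'adc_conversions': 2, 'passive_macs': 4}
```

For an n-by-n matrix this model performs n² passive multiply-accumulates but only 2n conversions, which mirrors the point that, in such a design, the active work sits at the edges of the array: making the light and reading it out.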

One really interesting aspect of this form of optical computing is that you can increase the power of the chip just by using more than one color at once. Blue does one operation while red does another, though in practice it’s more like 800 nanometers wavelength does one and 820 does another. It’s not trivial to do, of course, but these “virtual chips” can vastly increase the amount of computation done on the array. Twice the colors, twice the power.
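The “twice the colors, twice the power” arithmetic can be sketched in Python under a strongly idealized assumption: each wavelength carries its own input vector through the same programmed array, and the channels do not interfere. The `Channel` class and `multiplexed_pass` function are hypothetical names for illustration, not anything Lightmatter has described.

```python
from dataclasses import dataclass


@dataclass
class Channel:
    wavelength_nm: float  # e.g. 800.0 or 820.0, echoing the article's example
    x: list[float]        # the input vector carried on this wavelength


def matvec(weights: list[list[float]], x: list[float]) -> list[float]:
    """Plain matrix-vector product: one weighted sum per row."""
    return [sum(w * v for w, v in zip(row, x)) for row in weights]


def multiplexed_pass(weights: list[list[float]],
                     channels: list[Channel]) -> dict[float, list[float]]:
    """Send several wavelengths through the same array in one pass.

    Idealized assumption: channels do not interfere and all see the same
    programmed weights, so each wavelength yields its own independent product.
    """
    wavelengths = [c.wavelength_nm for c in channels]
    if len(set(wavelengths)) != len(wavelengths):
        raise ValueError("each channel needs a distinct wavelength")
    return {c.wavelength_nm: matvec(weights, c.x) for c in channels}


W = [[1.0, 2.0], [0.0, 1.0]]
results = multiplexed_pass(W, [Channel(800.0, [1.0, 1.0]),
                               Channel(820.0, [2.0, -1.0])])
print(results)  # {800.0: [3.0, 1.0], 820.0: [0.0, -1.0]}
```

In this simplified picture the number of products per pass equals the number of channels. Real hardware has to keep the channels from interfering with one another, which is part of why it is not trivial.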

Harris started the company based on optical computing work he and his team did at MIT (which is licensing the relevant patents to them) and managed to wrangle an $11 million seed round back in 2018. One investor said then that “this isn’t a science project,” but Harris admitted in 2021 that while they knew “in principle” the tech should work, there was a hell of a lot to do to make it operational. Fortunately, he was telling me that in the context of investors dropping a further $80 million on the company.

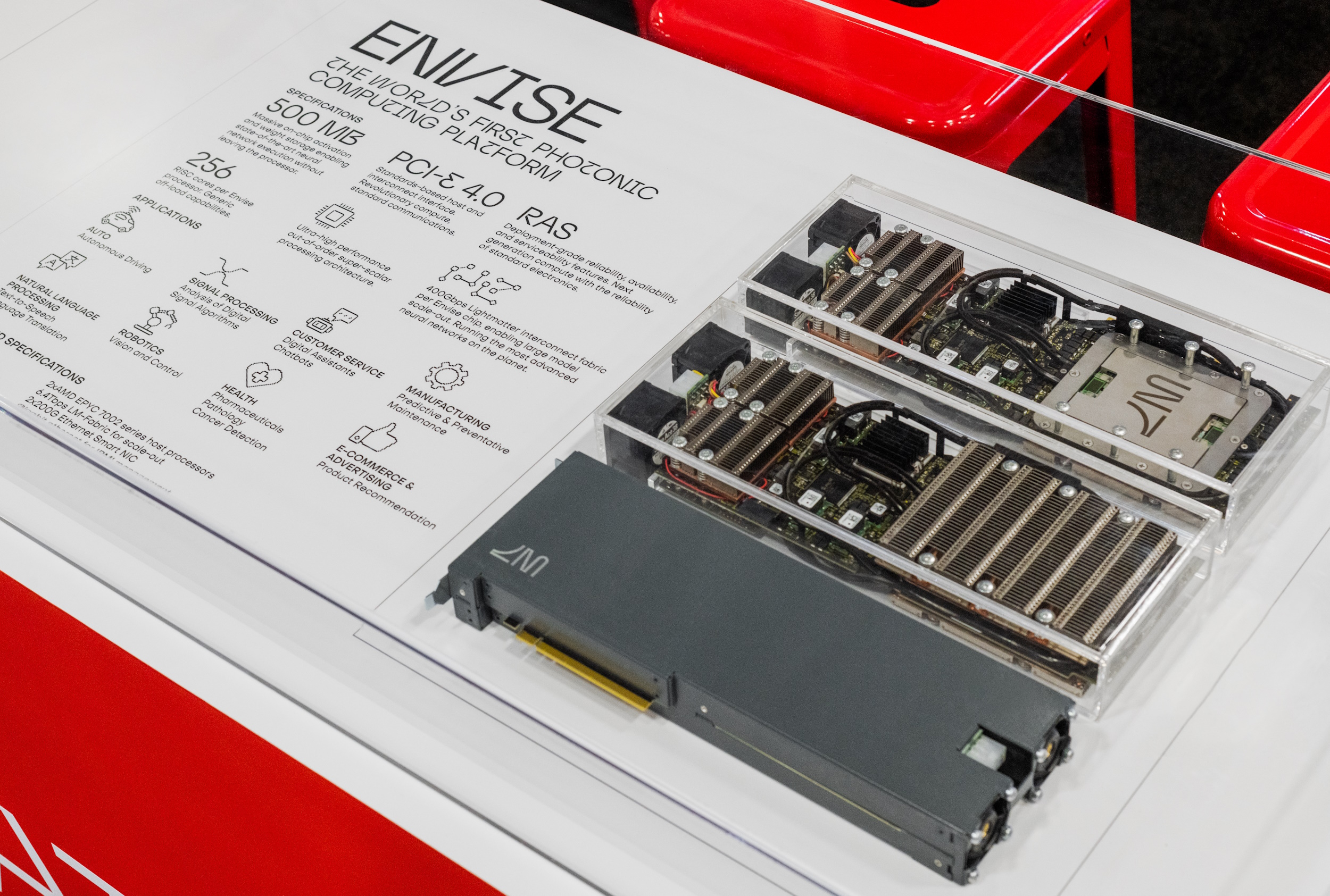

Now Lightmatter has raised a $154 million C spherical and is making ready for its precise debut. It has a number of pilots going with its full stack of Envise (computing {hardware}), Passage (interconnect, essential for big computing operations) and Idiom, a software program platform that Harris says ought to let machine studying builders adapt rapidly.

A Lightmatter Envise unit in captivity. Picture Credit: Lightmatter

“We’ve built a software stack that integrates seamlessly with PyTorch and TensorFlow. The workflow for machine learning developers is the same from there: we take the neural networks built in these industry-standard applications and import our libraries, so all the code runs on Envise,” he explained.

The company declined to make any specific claims about speedups or efficiency improvements, and because it’s a different architecture and computing method, it’s hard to make apples-to-apples comparisons. But we’re definitely talking along the lines of an order of magnitude, not a measly 10% or 15%. Interconnect is similarly upgraded, since it’s useless to have that level of processing isolated on one board.

Of course, this isn’t the kind of general-purpose chip that you could use in your laptop; it’s highly specific to this task. But it’s the lack of task specificity at this scale that seems to be holding back AI development, though “holding back” is the wrong term, since it’s moving at great speed. But that development is hugely costly and unwieldy.

The pilots are in beta, and mass production is planned for 2024, at which point they should presumably have enough feedback and maturity to deploy in data centers.

The funding for this round came from SIP Global, Fidelity Management & Research Company, Viking Global Investors, GV, HPE Pathfinder and existing investors.