Large Language Models (LLMs) capable of complex reasoning tasks have shown promise in specialized domains like programming and creative writing. However, the world of LLMs isn't simply a plug-and-play paradise; there are challenges in usability, safety, and computational demands. In this article, we will dive deep into the capabilities of Llama 2, while providing a detailed walkthrough for setting up this high-performing LLM via Hugging Face and T4 GPUs on Google Colab.

Developed by Meta in partnership with Microsoft, this open-source large language model aims to redefine the realms of generative AI and natural language understanding. Llama 2 isn't just another statistical model trained on terabytes of data; it's the embodiment of a philosophy, one that stresses an open-source approach as the backbone of AI development, particularly in the generative AI space.

Llama 2 and its dialogue-optimized counterpart, Llama 2-Chat, come equipped with up to 70 billion parameters. They undergo a fine-tuning process designed to align them closely with human preferences, making them both safer and more effective than many other publicly available models. This level of granularity in fine-tuning is often reserved for closed “product” LLMs, such as ChatGPT and Bard, which aren’t generally available for public scrutiny or customization.

Technical Deep Dive of Llama 2

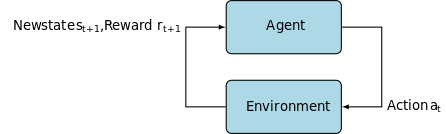

Like its predecessors, Llama 2 uses an auto-regressive transformer architecture, pre-trained on an extensive corpus of self-supervised data. However, it adds a further layer of sophistication by using Reinforcement Learning with Human Feedback (RLHF) to better align with human behavior and preferences. This is computationally expensive but vital for improving the model’s safety and effectiveness.

Meta Llama 2 training architecture

Pretraining & Data Efficiency

Llama 2’s foundational innovation lies in its pretraining regime. The model takes cues from its predecessor, Llama 1, but introduces several crucial enhancements to elevate its performance. Notably, a 40% increase in the total number of tokens trained on and a twofold expansion in context length stand out. Moreover, the model leverages grouped-query attention (GQA) to improve inference scalability.

Supervised Fine-Tuning (SFT) & Reinforcement Learning with Human Feedback (RLHF)

Llama 2-Chat has been rigorously fine-tuned using both SFT and Reinforcement Learning with Human Feedback (RLHF). In this context, SFT serves as an integral component of the RLHF framework, refining the model’s responses to align closely with human preferences and expectations.

OpenAI has provided an insightful illustration that explains the SFT and RLHF methodologies employed in InstructGPT. Much like Llama 2, InstructGPT also leverages these advanced training techniques to optimize its model’s performance.

Step 1 in the image below focuses on Supervised Fine-Tuning (SFT), while the subsequent steps complete the Reinforcement Learning from Human Feedback (RLHF) process.

Supervised Fine-Tuning (SFT) is a specialized process aimed at optimizing a pre-trained Large Language Model (LLM) for a specific downstream task. Unlike unsupervised methods, which don't require data validation, SFT employs a dataset that has been pre-validated and labeled.

Crafting these datasets is generally expensive and time-consuming. Llama 2's approach was quality over quantity. With just 27,540 annotations, Meta’s team achieved performance levels competitive with human annotators. This aligns well with recent studies showing that even limited but clean datasets can drive high-quality results.

In the SFT process, the pre-trained LLM is exposed to a labeled dataset, where supervised learning algorithms come into play. The model’s internal weights are recalibrated based on gradients calculated from a task-specific loss function. This loss function quantifies the discrepancies between the model’s predicted outputs and the actual ground-truth labels.
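To make the idea of a loss that "quantifies the discrepancies" concrete, here is a minimal sketch. It assumes the loss is the usual token-level cross-entropy for next-token prediction; the article does not name the loss, so treat that as an assumption. The function names and the toy logits are illustrative only.

```python
import math

def softmax(logits):
    """Turn raw scores into probabilities (numerically stable)."""
    m = max(logits)
    exps = [math.exp(x - m) for x in logits]
    total = sum(exps)
    return [e / total for e in exps]

def cross_entropy(logits_per_token, target_ids):
    """Mean negative log-likelihood of the ground-truth token ids."""
    losses = []
    for logits, target in zip(logits_per_token, target_ids):
        probs = softmax(logits)
        losses.append(-math.log(probs[target]))
    return sum(losses) / len(losses)

# Toy example: a vocabulary of 3 tokens and 2 positions.
logits = [[2.0, 0.5, 0.1], [0.2, 0.1, 3.0]]
good = cross_entropy(logits, [0, 2])  # labels match the highest scores
bad = cross_entropy(logits, [1, 0])   # labels disagree with the scores
print(good < bad)
```

The loss is small when the model puts high probability on the labeled token and large otherwise; gradients of this quantity are what drive the weight updates during fine-tuning.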

This optimization allows the LLM to grasp the intricate patterns and nuances embedded within the labeled dataset. Consequently, the model is not just a generalized tool but evolves into a specialized asset, adept at performing the target task with a high degree of accuracy.

Reinforcement learning is the next step, aimed at aligning model behavior with human preferences more closely.

The tuning phase leveraged Reinforcement Learning from Human Feedback (RLHF), employing techniques like Rejection Sampling and Proximal Policy Optimization (PPO). Rejection sampling draws several candidate responses and keeps the one the reward model scores highest, while PPO updates the model iteratively against that reward. This iterative fine-tuning not only improved the model but also aligned its output with human expectations.

Llama 2-Chat used a binary comparison protocol to collect human preference data, marking a notable trend towards more qualitative approaches. This data informed the Reward Models, which are then used to fine-tune the conversational AI model.
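A binary comparison gives a "chosen" and a "rejected" response for the same prompt, and a reward model can be trained so the chosen one scores higher. The sketch below assumes the common pairwise logistic ranking loss, -log(sigmoid(r_chosen - r_rejected)); the article does not spell out the loss, and the optional `margin` parameter is included here purely as an illustrative assumption.

```python
import math

def pairwise_ranking_loss(reward_chosen, reward_rejected, margin=0.0):
    """-log(sigmoid(r_chosen - r_rejected - margin)), computed stably."""
    diff = reward_chosen - reward_rejected - margin
    # -log(sigmoid(d)) equals log(1 + exp(-d)); branch to avoid overflow.
    if diff >= 0:
        return math.log1p(math.exp(-diff))
    return -diff + math.log1p(math.exp(diff))

# The reward model ranks the pair correctly: small loss.
print(pairwise_ranking_loss(2.0, -1.0))
# The reward model ranks the pair the wrong way round: large loss.
print(pairwise_ranking_loss(-1.0, 2.0))
```

Minimizing this loss pushes the score of the preferred response above the score of the rejected one, which is all a binary comparison can tell the reward model.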

Ghost Attention: Multi-Turn Dialogues

Meta introduced a new feature, Ghost Attention (GAtt), which is designed to enhance Llama 2’s performance in multi-turn dialogues. It addresses the persistent issue of context loss in ongoing conversations. GAtt acts like an anchor, linking the initial instructions to all subsequent user messages. Coupled with reinforcement learning techniques, it aids in producing consistent, relevant, and user-aligned responses over longer dialogues.
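The anchoring idea can be sketched as a data-preparation step. This is a simplified reading of GAtt, not Meta's implementation: it assumes the assistant replies were already sampled with the instruction attached to every user turn, then builds a training sample in which the instruction appears only in the first user message and only the final assistant reply contributes to the loss. The function name `build_gatt_sample` and the message-level mask (a real pipeline would mask per token) are assumptions for illustration.

```python
def build_gatt_sample(instruction, turns):
    """Build one training sample from (user_msg, assistant_msg) pairs.

    Returns (messages, loss_mask): the instruction is kept only in the
    first user message, and the mask is 1 only for the last assistant reply.
    """
    messages, loss_mask = [], []
    last = len(turns) - 1
    for i, (user_msg, assistant_msg) in enumerate(turns):
        text = f"{instruction}\n{user_msg}" if i == 0 else user_msg
        messages.append(("user", text))
        loss_mask.append(0)  # never train on user text
        messages.append(("assistant", assistant_msg))
        loss_mask.append(1 if i == last else 0)  # train on final reply only
    return messages, loss_mask

dialogue = [("Hi", "Bonjour"), ("How are you?", "Tres bien, merci")]
messages, loss_mask = build_gatt_sample("Always answer in French.", dialogue)
for (role, text), keep in zip(messages, loss_mask):
    print(keep, role, repr(text))
```

The later replies still follow the instruction even though it is no longer repeated in the context, which is the behavior the fine-tuned model is meant to learn.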

From Meta Git Repository Using download.sh

- Visit the Meta Website: Navigate to Meta’s official Llama 2 site and click ‘Download The Model’.

- Fill in the Details: Read through and accept the terms and conditions to proceed.

- Email Confirmation: Once the form is submitted, you'll receive an email from Meta with a link to download the model from their git repository.

- Execute download.sh: Clone the Git repository and execute the download.sh script. This script will prompt you to authenticate using a URL from Meta that expires in 24 hours. You’ll also choose the size of the model: 7B, 13B, or 70B.

From Hugging Face

- Receive Acceptance Email: After gaining access from Meta, head over to Hugging Face.

- Request Access: Choose your desired model and submit a request for access.

- Confirmation: Expect a ‘granted access’ email within 1-2 days.

- Generate Access Tokens: Navigate to ‘Settings’ in your Hugging Face account to create access tokens.

The Transformers 4.31 release is fully compatible with Llama 2 and opens up many tools and functionalities within the Hugging Face ecosystem. From training and inference scripts to 4-bit quantization with bitsandbytes and Parameter-Efficient Fine-Tuning (PEFT), the toolkit is extensive. To get started, make sure you’re on the latest Transformers release and logged into your Hugging Face account.

Here's a streamlined guide to running Llama 2 model inference in a Google Colab environment, leveraging a GPU runtime:

Google Colab Model – T4 GPU

Package Installation

    # accelerate is needed for device_map="auto" in the pipeline step
    !pip install transformers accelerate
    !huggingface-cli login

Import the necessary Python libraries.

    from transformers import AutoTokenizer
    import transformers
    import torch

Initialize the Model and Tokenizer

In this step, specify which Llama 2 model you'll be using. For this guide, we use meta-llama/Llama-2-7b-chat-hf.

    model = "meta-llama/Llama-2-7b-chat-hf"
    tokenizer = AutoTokenizer.from_pretrained(model)

Set up the Pipeline

Use the Hugging Face pipeline for text generation with specific settings:

    pipeline = transformers.pipeline(
        "text-generation",
        model=model,
        torch_dtype=torch.float16,  # half precision to reduce GPU memory use
        device_map="auto",          # place the model on the available GPU
    )
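Why half precision matters on a T4 comes down to simple arithmetic. The sketch below estimates the memory taken by the weights alone, assuming a nominal 7 billion parameters (the exact count differs slightly) and ignoring activations and the generation cache. The helper name `weight_memory_gib` is illustrative, not a library function.

```python
def weight_memory_gib(num_params, bits_per_param):
    """Approximate memory for the weights alone, in GiB."""
    return num_params * bits_per_param / 8 / 2**30

params_7b = 7e9  # nominal parameter count (assumption)

for label, bits in [("float32", 32), ("float16", 16), ("4-bit", 4)]:
    print(f"{label:>8}: {weight_memory_gib(params_7b, bits):5.1f} GiB")
```

Under these assumptions the weights need roughly 26 GiB in float32 but about 13 GiB in float16, which is why float16 is the setting that lets a 7B model fit on a T4 with its roughly 16 GB of memory. 4-bit quantization shrinks the footprint further.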

Generate Text Sequences

Finally, run the pipeline and generate a text sequence based on your input:

    sequences = pipeline(
        'Who are the key contributors to the field of artificial intelligence?\n',
        do_sample=True,  # sample instead of always taking the top token
        top_k=10,        # restrict sampling to the 10 most likely tokens
        num_return_sequences=1,
        eos_token_id=tokenizer.eos_token_id,
        max_length=200,  # cap on prompt plus generated tokens
    )

    for seq in sequences:
        print(f"Result: {seq['generated_text']}")
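To see what `do_sample=True` with `top_k=10` means in practice, here is a small standard-library sketch of top-k sampling. It is an illustration of the technique, not the Transformers implementation; the function name `top_k_sample` and the toy logits are assumptions.

```python
import math
import random

def top_k_sample(logits, k, rng=random):
    """Sample a token id from the k highest-scoring logits."""
    # Keep the indices of the k largest logits.
    top = sorted(range(len(logits)), key=lambda i: logits[i], reverse=True)[:k]
    # Softmax weights over the surviving candidates only.
    m = max(logits[i] for i in top)
    weights = [math.exp(logits[i] - m) for i in top]
    return rng.choices(top, weights=weights, k=1)[0]

rng = random.Random(0)
logits = [0.1, 2.5, -1.0, 1.7, 0.3]
samples = {top_k_sample(logits, k=2, rng=rng) for _ in range(200)}
print(samples)  # only ids 1 and 3 can ever appear with k=2
```

With `k=1` this reduces to greedy decoding; larger values of `k` allow more varied output at the cost of occasionally picking less likely tokens.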

A16Z’s UI for Llama 2

Andreessen Horowitz (A16Z) has recently launched a Streamlit-based chatbot interface tailored for Llama 2. Hosted on GitHub, this UI preserves session chat history and also provides the flexibility to select from multiple Llama 2 API endpoints hosted on Replicate. This user-centric design aims to simplify interactions with Llama 2, making it an ideal tool for both developers and end-users. For those interested in trying it, a live demo is available at Llama2.ai.

Llama 2: What makes it different from GPT Models and its predecessor Llama 1?

Variety in Scale

Unlike many language models that offer limited scalability, Llama 2 gives you a range of model sizes to choose from. The model scales from 7 billion to 70 billion parameters, thereby providing a range of configurations to suit diverse computational needs.

Enhanced Context Size

The model has an increased context length of 4K tokens, double that of Llama 1. This allows it to retain more information, thus enhancing its ability to understand and generate more complex and extensive content.

Grouped Query Attention (GQA)

The architecture uses GQA, which speeds up attention computation at inference time by letting groups of query heads share a single set of key and value heads. This shrinks the cache of keys and values kept for previous tokens and improves the model’s inference scalability, making larger models more practical to serve.
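The saving from sharing key/value heads can be estimated with a little arithmetic. The sketch below compares the key/value cache of ordinary multi-head attention with a grouped-query layout. The shape used (80 layers, 64 query heads, 8 key/value heads, head dimension 128, float16 values) is an assumption based on the published 70B configuration, and the function names are illustrative.

```python
def kv_cache_bytes(n_layers, n_kv_heads, head_dim, seq_len, bytes_per_value=2):
    """Size of the key/value cache for one sequence (keys plus values)."""
    return 2 * n_layers * n_kv_heads * head_dim * seq_len * bytes_per_value

def kv_head_for_query_head(q_head, n_q_heads, n_kv_heads):
    """Index of the shared key/value head that a query head reads from."""
    group_size = n_q_heads // n_kv_heads
    return q_head // group_size

# Assumed 70B-style shape at the full 4K context, float16 cache.
mha = kv_cache_bytes(n_layers=80, n_kv_heads=64, head_dim=128, seq_len=4096)
gqa = kv_cache_bytes(n_layers=80, n_kv_heads=8, head_dim=128, seq_len=4096)
print(f"multi-head:    {mha / 2**30:.2f} GiB per sequence")
print(f"grouped-query: {gqa / 2**30:.2f} GiB per sequence")
print([kv_head_for_query_head(q, 64, 8) for q in (0, 7, 8, 63)])
```

With 8 key/value heads serving 64 query heads, the cache is one eighth the size it would be with one key/value head per query head, which is where the inference scalability comes from.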

Performance Benchmarks

Performance Analysis of Llama 2-Chat Models with ChatGPT and Other Competitors

Llama 2 has set a new standard in performance metrics. It not only outperforms its predecessor, Llama 1, but also offers significant competition to other models like Falcon and GPT-3.5.

Llama 2-Chat’s largest model, the 70B, also outperforms ChatGPT in 36% of instances and matches its performance in another 31.5% of cases. Source: Paper

Open Source: The Power of Community

Meta and Microsoft intend for Llama 2 to be more than just a product; they envision it as a community-driven tool. Llama 2 is free to access for both research and commercial purposes. They are aiming to democratize AI capabilities, making them accessible to startups, researchers, and businesses. An open-source paradigm allows for ‘crowdsourced troubleshooting’ of the model. Developers and AI ethicists can stress test, identify vulnerabilities, and offer solutions at an accelerated pace.

While the licensing terms for Llama 2 are generally permissive, exceptions do exist. Large enterprises with over 700 million monthly users, such as Google, require explicit authorization from Meta to use it. Additionally, the license prohibits the use of Llama 2 for the improvement of other language models.

Current Challenges with Llama 2

- Data Generalization: Both Llama 2 and GPT-4 sometimes fall short of uniformly high performance across divergent tasks. Data quality and diversity are just as pivotal as volume in these scenarios.

- Model Transparency: Given prior setbacks with AI producing misleading outputs, exploring the decision-making rationale behind these complex models is paramount.

Code Llama – Meta’s Latest Launch

Meta recently announced Code Llama, a large language model specialized in programming with parameter sizes ranging from 7B to 34B. Similar to ChatGPT Code Interpreter, Code Llama can streamline developer workflows and make programming more accessible. It accommodates various programming languages and comes in specialized versions, such as Code Llama – Python for Python-specific tasks. The model also offers different performance levels to meet diverse latency requirements. Openly licensed, Code Llama invites community input for ongoing improvement.

Conclusion

This article has walked you through setting up a Llama 2 model for text generation on Google Colab with Hugging Face support. Llama 2’s performance is fueled by an array of advanced techniques, from auto-regressive transformer architectures to Reinforcement Learning with Human Feedback (RLHF). With up to 70 billion parameters and features like Ghost Attention, this model outperforms current industry standards in certain areas, and with its open nature, it paves the way for a new era in natural language understanding and generative AI.