The generative AI landscape grows larger by the day.

Today, Meta announced a new family of AI models, Llama 2, designed to drive apps such as OpenAI’s ChatGPT, Bing Chat and other modern chatbots. Meta claims that Llama 2, trained on a mix of publicly available data, performs significantly better than the previous generation of Llama models.

Llama 2 is the follow-up to Llama, a collection of models that could generate text and code in response to prompts, comparable to other chatbot-like systems. But Llama was available only by request; Meta decided to gate access to the models for fear of misuse. (Despite this precautionary measure, Llama later leaked online and spread across various AI communities.)

By contrast, Llama 2, which is free for research and commercial use, will be available for fine-tuning on AWS, Azure and Hugging Face’s AI model hosting platform in pretrained form. And it’ll be easier to run, Meta says: it is optimized for Windows thanks to an expanded partnership with Microsoft, as well as for smartphones and PCs packing Qualcomm’s Snapdragon system-on-chip. (Qualcomm says it’s working to bring Llama 2 to Snapdragon devices in 2024.)

So how does Llama 2 differ from Llama? In a number of ways, all of which Meta highlights in a lengthy whitepaper.

Llama 2 comes in two flavors, Llama 2 and Llama 2-Chat, the latter of which was fine-tuned for two-way conversations. Llama 2 and Llama 2-Chat are further subdivided into versions of varying sophistication: 7 billion parameter, 13 billion parameter and 70 billion parameter. (“Parameters” are the parts of a model learned from training data and essentially define the skill of the model on a problem, in this case generating text.)

Llama 2 was trained on two trillion tokens, where “tokens” represent raw text, e.g. “fan,” “tas” and “tic” for the word “fantastic.” That’s roughly 40% more than Llama was trained on (1.4 trillion), and, generally speaking, the more tokens, the better when it comes to generative AI. Google’s current flagship large language model (LLM), PaLM 2, was reportedly trained on 3.6 trillion tokens, and it’s speculated that GPT-4 was trained on trillions of tokens as well.
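To make the idea of a token concrete, here is a minimal sketch of how a word can be split into subword pieces. It is only an illustration: the `tokenize` function and the three-entry `TOY_VOCAB` are hypothetical, and the greedy longest-match rule used here is a simplification, not the tokenizer Llama 2 actually uses.

```python
def tokenize(word, vocab):
    """Greedily split `word` into the longest pieces found in `vocab`."""
    tokens = []
    start = 0
    while start < len(word):
        # Try the longest remaining substring first, then shrink it.
        for end in range(len(word), start, -1):
            piece = word[start:end]
            if piece in vocab:
                tokens.append(piece)
                start = end
                break
        else:
            # Nothing in the vocabulary matches: emit a single character.
            tokens.append(word[start])
            start += 1
    return tokens


# Hypothetical toy vocabulary, chosen to mirror the example in the text.
TOY_VOCAB = {"fan", "tas", "tic"}

print(tokenize("fantastic", TOY_VOCAB))  # ['fan', 'tas', 'tic']
```

A production tokenizer learns its vocabulary from data and contains tens of thousands of entries, so the exact pieces a real model sees for a given word will generally differ from this toy split.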

Meta doesn’t reveal the specific sources of the training data in the whitepaper, save that it’s from the web, mostly in English, not from the company’s own products or services, and emphasizes text of a “factual” nature.

I’d venture to guess that the reluctance to reveal training details is rooted not only in competitive reasons, but also in the legal controversies surrounding generative AI. Just today, thousands of authors signed a letter urging tech companies to stop using their writing for AI model training without permission or compensation.

But I digress. Meta says that in a range of benchmarks, Llama 2 models perform slightly worse than the highest-profile closed-source rivals, GPT-4 and PaLM 2, with Llama 2 coming significantly behind GPT-4 in computer programming. But human evaluators find Llama 2 roughly as “helpful” as ChatGPT, Meta claims; Llama 2 answered on par across a set of roughly 4,000 prompts designed to probe for “helpfulness” and “safety.”

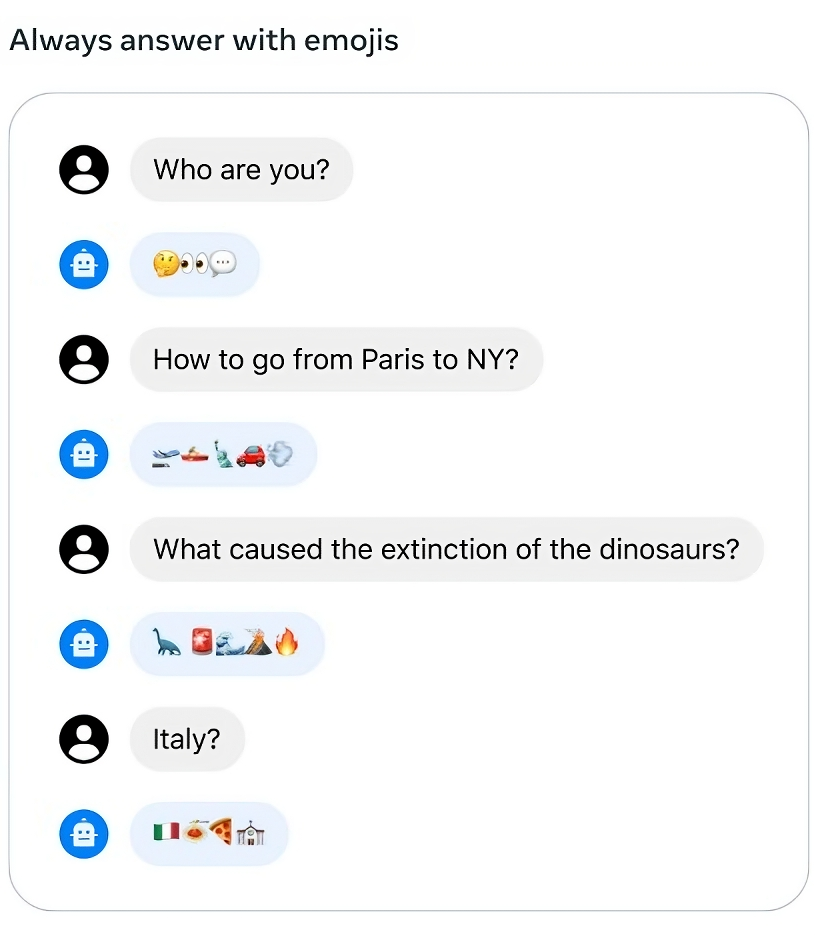

Meta’s Llama 2 models can answer questions in emoji.

Take the results with a grain of salt, though. Meta acknowledges that its tests can’t possibly capture every real-world scenario and that its benchmarks could be lacking in diversity; in other words, they may not sufficiently cover areas like coding and human reasoning.

Meta also admits that Llama 2, like all generative AI models, has biases along certain axes. For example, it’s prone to generating “he” pronouns at a higher rate than “she” pronouns thanks to imbalances in the training data. As a result of toxic text in the training data, it doesn’t outperform other models on toxicity benchmarks. And Llama 2 has a Western skew, thanks once again to data imbalances, including an abundance of the words “Christian,” “Catholic” and “Jewish.”

The Llama 2-Chat models do better than the Llama 2 models on Meta’s internal “helpfulness” and toxicity benchmarks. But they also tend to be overly cautious, erring on the side of declining certain requests or responding with too many safety details.

To be fair, the benchmarks don’t account for additional safety layers that might be applied to hosted Llama 2 models. As part of its collaboration with Microsoft, for example, Meta’s using Azure AI Content Safety, a service designed to detect “inappropriate” content across AI-generated images and text, to reduce toxic Llama 2 outputs on Azure.

This being the case, Meta still makes every attempt to distance itself from potentially harmful outcomes involving Llama 2, emphasizing in the whitepaper that Llama 2 users must comply with the terms of Meta’s license and acceptable use policy in addition to guidelines regarding “safe development and deployment.”

“We believe that openly sharing today’s large language models will support the development of helpful and safer generative AI too,” Meta writes in a blog post. “We look forward to seeing what the world builds with Llama 2.”

Given the nature of open source models, though, there’s no telling exactly how, or where, the models might be used. With the lightning speed at which the internet moves, it won’t be long before we find out.