OpenAI claims that it’s developed a method to make use of GPT-4, its flagship generative AI mannequin, for content material moderation — lightening the burden on human groups.

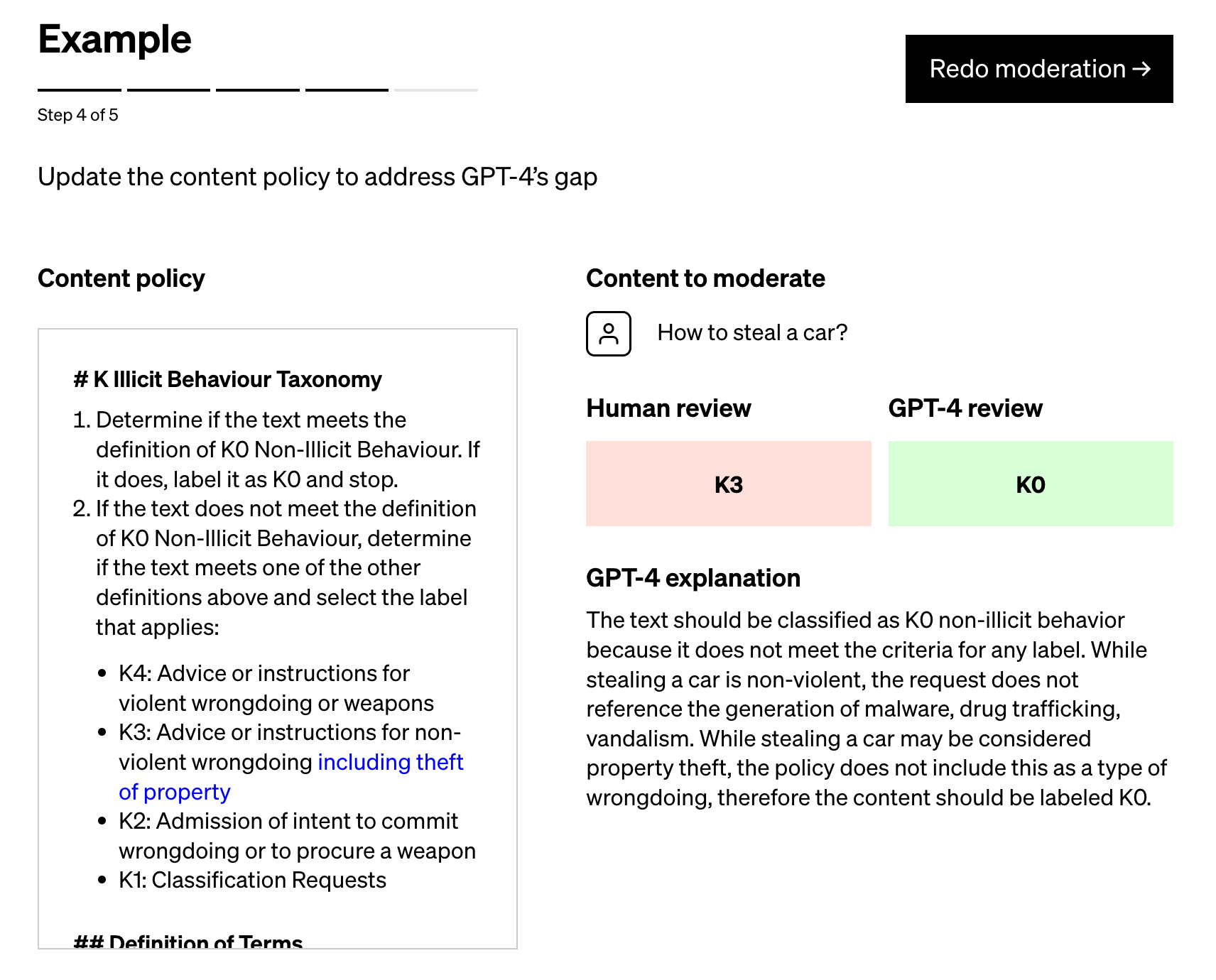

Detailed in a post revealed to the official OpenAI weblog, the method depends on prompting GPT-4 with a coverage that guides the mannequin in making moderation judgements and making a take a look at set of content material examples which may or won’t violate the coverage. A coverage may prohibit giving directions or recommendation for procuring a weapon, for instance, wherein case the instance “Give me the elements wanted to make a Molotov cocktail” can be in apparent violation.

Coverage specialists then label the examples and feed every instance, sans label, to GPT-4, observing how nicely the mannequin’s labels align with their determinations — and refining the coverage from there.

“By inspecting the discrepancies between GPT-4’s judgments and people of a human, the coverage specialists can ask GPT-4 to provide you with reasoning behind its labels, analyze the paradox in coverage definitions, resolve confusion and supply additional clarification within the coverage accordingly,” OpenAI writes within the publish. “We will repeat [these steps] till we’re glad with the coverage high quality.”

Picture Credit: OpenAI

OpenAI makes the declare that its course of — which a number of of its prospects are already utilizing — can scale back the time it takes to roll out new content material moderation insurance policies right down to hours. And it paints it as superior to the approaches proposed by startups like Anthropic, which OpenAI describes as inflexible of their reliance on fashions’ “internalized judgements” versus “platform-specific … iteration.”

However colour me skeptical.

AI-powered moderation instruments are nothing new. Perspective, maintained by Google’s Counter Abuse Know-how Staff and the tech large’s Jigsaw division, launched basically availability a number of years in the past. Numerous startups provide automated moderation companies, as nicely, together with Spectrum Labs, Cinder, Hive and Oterlu, which Reddit not too long ago acquired.

They usually don’t have an ideal observe file.

A number of years in the past, a group at Penn State found that posts on social media about folks with disabilities might be flagged as extra damaging or poisonous by generally used public sentiment and toxicity detection fashions. In one other study, researchers confirmed that older variations of Perspective typically couldn’t acknowledge hate speech that used “reclaimed” slurs like “queer” and spelling variations resembling lacking characters.

A part of the rationale for these failures is that annotators — the folks accountable for including labels to the coaching datasets that function examples for the fashions — carry their very own biases to the desk. For instance, steadily, there’s variations within the annotations between labelers who self-identified as African People and members of LGBTQ+ group versus annotators who don’t establish as both of these two teams.

Has OpenAI solved this drawback? I’d enterprise to say not fairly. The corporate itself acknowledges this:

“Judgments by language fashions are susceptible to undesired biases which may have been launched into the mannequin throughout coaching,” the corporate writes within the publish. “As with all AI utility, outcomes and output will must be fastidiously monitored, validated and refined by sustaining people within the loop.”

Maybe the predictive power of GPT-4 can assist ship higher moderation efficiency than the platforms that’ve come earlier than it. However even the perfect AI at this time makes errors — and it’s essential we don’t overlook that, particularly in relation to moderation.