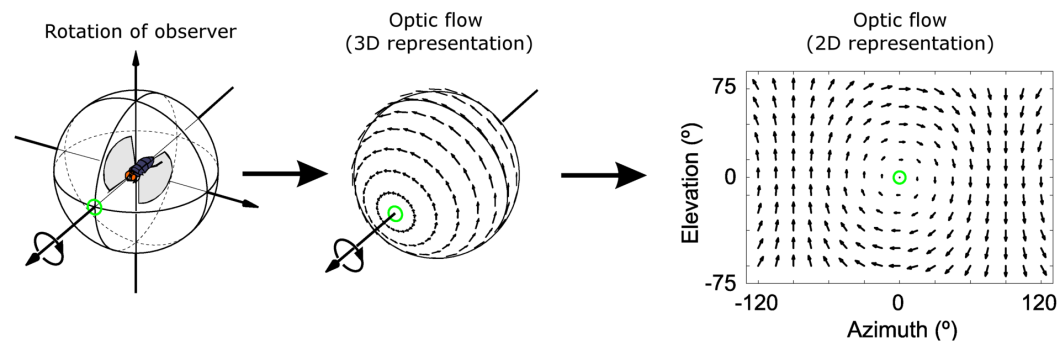

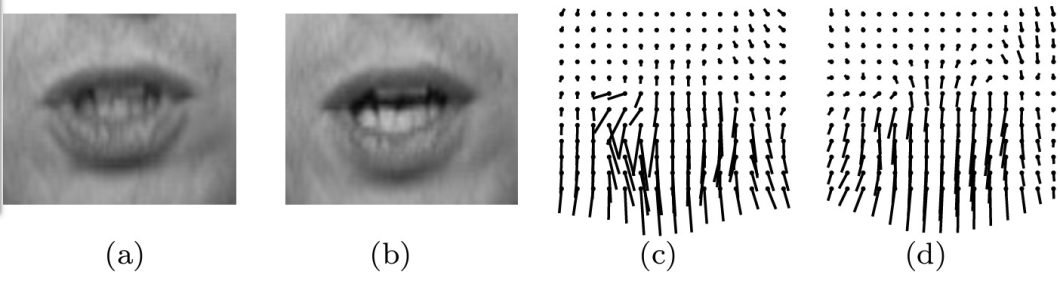

Optical flow quantifies the motion of objects between consecutive frames captured by a camera. These algorithms attempt to capture the apparent motion of brightness patterns in the image. It is an important subfield of computer vision, enabling machines to understand scene dynamics and movement.

The concept of optical flow dates back to the early work of James Gibson in the 1950s. Gibson introduced the concept in the context of visual perception. Researchers didn’t start studying and using optical flow until the 1980s, when computational tools were introduced.

A significant milestone was the development of the Lucas-Kanade method in 1981. This provided a foundational algorithm for estimating optical flow in a local window of an image. The Horn-Schunck algorithm, published the same year, introduced a global approach to optical flow estimation across the entire image.

Optical flow estimation relies on the assumption that the brightness of a point is constant over short time periods. Mathematically, this is expressed through the optical flow equation, $I_x v_x + I_y v_y + I_t = 0$, where:

- $I_x$ and $I_y$ are the spatial gradients of the pixel intensity in the x and y directions, respectively

- $I_t$ is the temporal gradient

- $v_x$ and $v_y$ are the flow velocities in the x and y directions, respectively.
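As a brief sketch of where this equation comes from, here is the standard first-order derivation. The time step $\Delta t$ is notation introduced for this illustration only. Brightness constancy says a point keeps its intensity as it moves:

$$I(x + v_x \Delta t,\; y + v_y \Delta t,\; t + \Delta t) = I(x, y, t)$$

Expanding the left-hand side as a first-order Taylor series gives

$$I(x, y, t) + I_x v_x \Delta t + I_y v_y \Delta t + I_t \Delta t + O(\Delta t^2) = I(x, y, t)$$

Cancelling $I(x, y, t)$, dividing by $\Delta t$, and letting $\Delta t \to 0$ leaves

$$I_x v_x + I_y v_y + I_t = 0$$

The approximation only holds when the displacement between frames is small, which is one reason large motions are harder to estimate.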

More recent breakthroughs involve leveraging deep learning models like FlowNet, FlowNet 2.0, and LiteFlowNet. These models transformed optical flow estimation by significantly improving accuracy and computational efficiency. This is largely due to the integration of Convolutional Neural Networks (CNNs) and the availability of large datasets.

Even in settings with occlusions, optical flow techniques today can accurately predict complicated patterns of apparent motion.

Techniques and Algorithms

Different types of optical flow algorithms, each with a unique approach to calculating the pattern of motion, led to the evolution of computational approaches. Traditional algorithms like the Lucas-Kanade and Horn-Schunck methods laid the groundwork for this area of computer vision.

The Lucas-Kanade Optical Flow Method

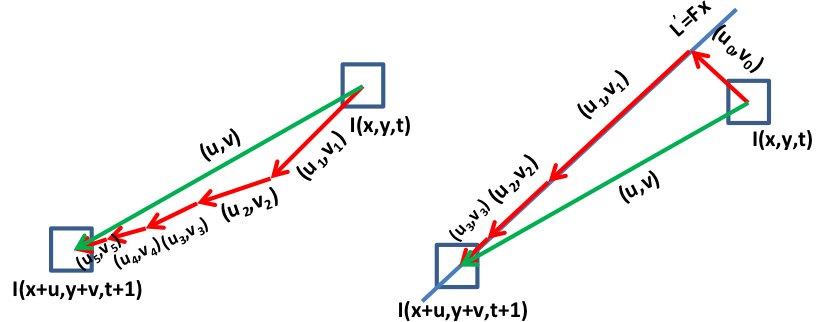

This method caters to use cases with a sparse feature set. It operates on the assumption that flow is locally smooth, applying a Taylor-series approximation based on the image gradients. You can thereby solve the optical flow equation, which involves two unknown variables for each point in the feature set. This method is highly efficient for tracking well-defined corners and textures, often as identified by Shi-Tomasi corner detection or the Harris corner detector.
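To make the local-window idea concrete, here is a minimal NumPy sketch of a single-window, single-scale Lucas-Kanade solve. It is an illustration under simplifying assumptions (small motion, no pyramid, no iteration), not a production tracker. The function name `lucas_kanade_window` and its parameters are made up for this example.

```python
import numpy as np


def lucas_kanade_window(frame0, frame1, row, col, half_window=7):
    """Estimate (vx, vy) at pixel (row, col) from one square window.

    Assumes small motion between frames and a window fully inside the image.
    """
    f0 = np.asarray(frame0, dtype=np.float64)
    f1 = np.asarray(frame1, dtype=np.float64)
    r0, r1 = row - half_window, row + half_window + 1
    c0, c1 = col - half_window, col + half_window + 1
    if r0 < 0 or c0 < 0 or r1 > f0.shape[0] or c1 > f0.shape[1]:
        raise ValueError("window must lie inside the image")

    # np.gradient returns derivatives along axis 0 (y) first, then axis 1 (x).
    Iy, Ix = np.gradient(f0)
    It = f1 - f0  # temporal gradient for a unit time step

    # One optical flow equation per pixel in the window: A @ [vx, vy] = b.
    A = np.stack([Ix[r0:r1, c0:c1].ravel(), Iy[r0:r1, c0:c1].ravel()], axis=1)
    b = -It[r0:r1, c0:c1].ravel()

    # Least-squares solution of the overdetermined system.
    flow, *_ = np.linalg.lstsq(A, b, rcond=None)
    return flow
```

The solve is only well-conditioned when the window contains gradients in more than one direction, which is why corner-like features are preferred as tracking points.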

The Horn-Schunck Algorithm

This algorithm is a dense optical flow technique. It takes a global approach by assuming smoothness in the optical flow across the entire image. This method minimizes a global error function and can infer the flow for every pixel. It offers more detailed structures of motion at the cost of higher computational complexity.

However, novel deep learning algorithms have ushered in a new era of optical flow algorithms. Models like FlowNet, LiteFlowNet, and PWC-Net use CNNs to learn from large datasets of images. This enables prediction with greater accuracy and robustness, especially in challenging scenarios such as scenes with occlusions, varying illumination, and complex dynamic textures.

To illustrate the differences among these algorithms, consider the following comparative table that outlines their performance in terms of accuracy, speed, and computational requirements:
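The global approach can be sketched in a few lines of NumPy. This is a simplified illustration of the classic iterative scheme, assuming a plain 4-neighbour average for the smoothness term (the original formulation uses a weighted 8-neighbour average). The names `horn_schunck`, `alpha`, and `n_iter` are chosen for this example.

```python
import numpy as np


def _neighbour_mean(field):
    """Mean of the 4 direct neighbours, with edge values repeated at borders."""
    p = np.pad(field, 1, mode="edge")
    return (p[:-2, 1:-1] + p[2:, 1:-1] + p[1:-1, :-2] + p[1:-1, 2:]) / 4.0


def horn_schunck(frame0, frame1, alpha=1.0, n_iter=200):
    """Return dense flow (u, v), one vector per pixel.

    alpha weights the smoothness term: larger values give smoother flow.
    """
    f0 = np.asarray(frame0, dtype=np.float64)
    f1 = np.asarray(frame1, dtype=np.float64)
    Iy, Ix = np.gradient(f0)  # axis 0 is y, axis 1 is x
    It = f1 - f0

    u = np.zeros_like(f0)
    v = np.zeros_like(f0)
    denom = alpha ** 2 + Ix ** 2 + Iy ** 2
    for _ in range(n_iter):
        u_avg = _neighbour_mean(u)
        v_avg = _neighbour_mean(v)
        # How far the smoothed flow is from satisfying brightness constancy.
        t = (Ix * u_avg + Iy * v_avg + It) / denom
        u = u_avg - Ix * t
        v = v_avg - Iy * t
    return u, v
```

Every pixel is updated on every iteration, which is where the higher computational cost relative to a sparse method comes from.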

| Algorithm | Accuracy | Speed (FPS) | Computational Requirements |
|---|---|---|---|
| Lucas-Kanade | Moderate | High | Low |
| Horn-Schunck | High | Low | High |
| FlowNet | High | Moderate | Moderate |
| LiteFlowNet | Very High | Moderate | Moderate |
| PWC-Net | Very High | High | High |

Traditional methods such as Lucas-Kanade and Horn-Schunck are foundational and shouldn’t be discounted. However, they generally can’t compete with the accuracy and robustness of deep learning approaches. Deep learning methods, while powerful, often require substantial computational resources. This means they might not be as suitable for real-time applications.

In practice, the choice of algorithm depends on the specific application and constraints. The speed and low computational load of the Lucas-Kanade method may be more apt for a mobile app. The detailed output of a dense approach, like Horn-Schunck, may be more suitable for an offline video analysis task.

Optical Flow in Action – Use Cases and Applications

Today, you’ll find applications of optical flow technology in a variety of industries. It’s becoming increasingly important for smart computer vision technologies that can interpret dynamic visual information quickly.

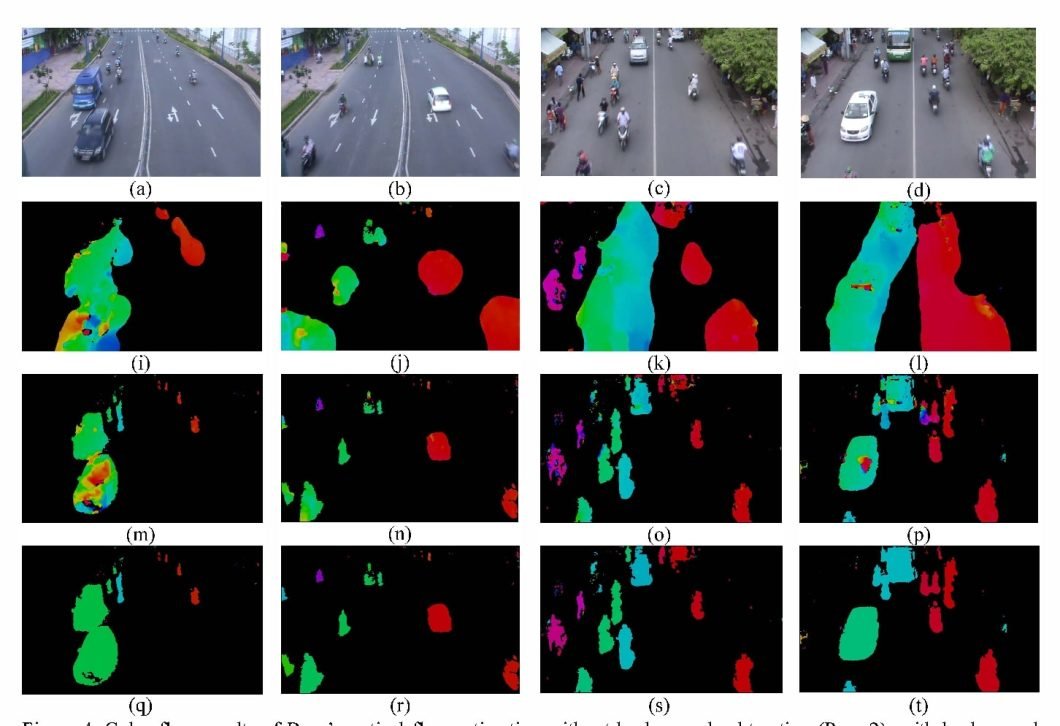

Automotive: ADAS, Autonomous Driving, and Traffic Monitoring

Optical flow serves as a cornerstone technology for Advanced Driver Assistance Systems (ADAS). For instance, Tesla’s Autopilot uses these algorithms in its suite of sensors and cameras to detect and track objects. It also assists in estimating the velocity of moving objects relative to the vehicle. These capabilities are crucial for collision avoidance and lane tracking.

Surveillance and Security: Crowd Monitoring and Anomalous Behavior Detection

Optical flow aids in crowd monitoring by analyzing the flow of people to help detect patterns or anomalies. In airports or shopping centers, for example, it can flag unusual movements and alert security. It could be something as simple (but hard to see) as an individual moving against the crowd. Events like the FIFA World Cup often use it to help monitor crowd dynamics for safety purposes.

Sports Analytics: Motion Analysis for Performance Improvement and Injury Prevention

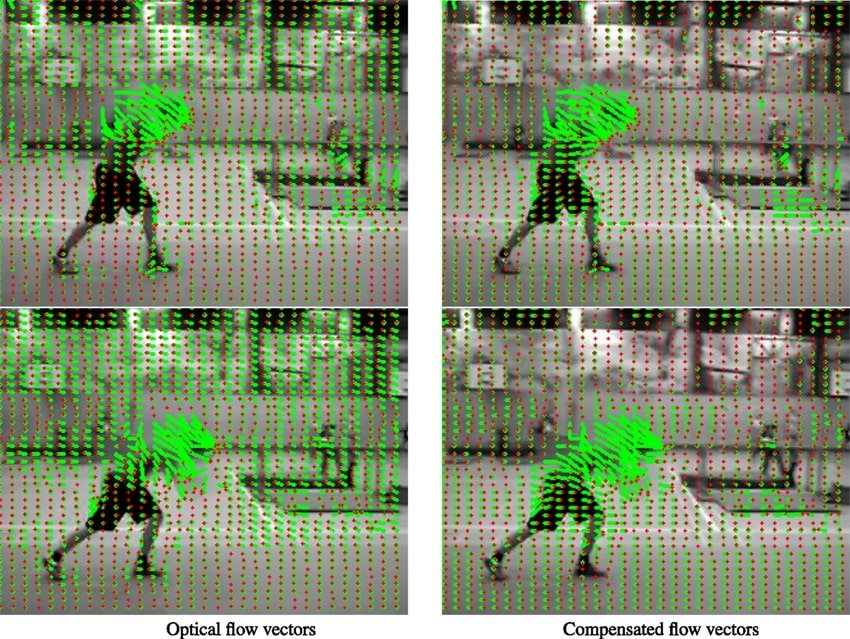

By analyzing the movement of players across the field, teams can optimize training and strategies for improved athletic output. Catapult Sports, a leader in athlete analytics, leverages optical flow to track player movements. This provides coaches with data to enhance performance and reduce injury risks.

Robotics: Navigation and Object Avoidance

Drone technology companies, like Da-Jiang Innovations (DJI), use visual sensors to stabilize flight and avoid obstacles. Optical flow also analyzes ground patterns, helping drones maintain position by calculating their motion relative to the ground. This helps ensure safe navigation, even without GPS systems.

Film and Video Production: Visual Effects, Motion Tracking, and Scene Reconstruction

Filmmakers use optical flow to create visual effects and in post-production editing. The visual effects team for the movie “Avatar” used these techniques for motion tracking and scene reconstruction. This allows for the seamless integration of Computer-generated Imagery (CGI) with live-action footage. It can facilitate the recreation of complex dynamic scenes to provide realistic and immersive visuals.

Optical Flow Practical Implementation

There are various ways of implementing optical flow in applications, ranging from software to hardware integration. As an example, we’ll look at a typical integration process using OpenCV’s popular optical flow implementation.

OpenCV (Open Source Computer Vision Library) is an extensive library offering a wide range of real-time computer vision capabilities. This includes utilities for image and video analysis, feature detection, and object recognition. Known for its versatility and performance, OpenCV is widely used in academia and industry for rapid prototyping and deployment.

Applying the Lucas-Kanade Method in OpenCV

- Environment Setup: Install OpenCV using a package manager like pip for Python with the command `pip install opencv-python`.

- Read Frames: Capture video frames using OpenCV’s `VideoCapture` object.

- Preprocess Frames: Convert frames to grayscale using `cvtColor` for processing, as optical flow requires single-channel images.

- Feature Selection: Use `goodFeaturesToTrack` to select points to track, or pass a predefined set of points.

- Lucas-Kanade Optical Flow: Call `calcOpticalFlowPyrLK` to estimate the optical flow between frames.

- Visualize Flow: Draw the flow vectors on images to verify the flow’s direction and magnitude.

- Iterate: Repeat the process for subsequent frame pairs to track motion over time.

Integrating Optical Flow into Hardware Projects

One example is a drone that uses optical flow for real-time motion tracking:

- Sensor Selection: Choose an optical flow sensor compatible with your hardware platform, like the PMW3901 for Raspberry Pi.

- Connectivity: Connect the sensor to your hardware platform’s GPIO pins or use an interfacing module if necessary.

- Driver Installation: Install the necessary drivers and libraries to interface with the sensor.

- Data Acquisition: Write code to read the displacement data from the sensor, representing the optical flow.

- Application Integration: Feed the sensor data into your application logic to use the optical flow for tasks like navigation or obstacle avoidance.
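As a rough, hardware-agnostic sketch of the data acquisition and integration steps, the snippet below accumulates per-reading displacements into a position track. Everything here is an assumption for illustration: `integrate_motion` and `metres_per_count` are hypothetical names, `samples` stands in for whatever (dx, dy) readings your sensor driver returns, and the real scale factor depends on the sensor optics and the height above the ground.

```python
from typing import Iterable, List, Tuple


def integrate_motion(samples: Iterable[Tuple[float, float]],
                     metres_per_count: float = 1.0) -> List[Tuple[float, float]]:
    """Accumulate (dx, dy) sensor readings into a running (x, y) position.

    metres_per_count is a hypothetical calibration factor; determine it
    empirically for your sensor and mounting height.
    """
    x = 0.0
    y = 0.0
    track = []
    for dx, dy in samples:
        x += dx * metres_per_count
        y += dy * metres_per_count
        track.append((x, y))
    return track
```

Because each reading is added to the previous total, small measurement errors accumulate over time, so applications usually combine this estimate with other sensors.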

Performance Optimizations

You may also want to consider the following to optimize performance for optical flow applications:

- Quality of Features: Ensure selected points are well-distributed and trackable over time.

- Parameter Tuning: Adjust the parameters of the optical flow function to balance speed and accuracy.

- Pyramid Levels: Use image pyramids to track points at different scales to account for changes in motion and scale.

- Error Checking: Implement error checks to filter out unreliable flow vectors.
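One possible form of error checking is a forward-backward consistency test, a common technique that the list above does not name specifically. The idea is to track points from frame A to frame B, track the results back to frame A, and discard points that do not return close to where they started. The sketch below assumes you already have both tracking results (for example from two calls to `calcOpticalFlowPyrLK`). The function name and the `max_error` threshold are illustrative.

```python
import numpy as np


def forward_backward_mask(p0, p0_back, status_fwd, status_bwd, max_error=1.0):
    """Return a boolean mask of points that pass the consistency check.

    p0:         original points, shape (N, 2) or (N, 1, 2)
    p0_back:    points after tracking forward and then backward, same shape
    status_fwd: per-point success flags (1 = found) from the forward pass
    status_bwd: per-point success flags (1 = found) from the backward pass
    max_error:  largest allowed round-trip distance in pixels
    """
    start = np.asarray(p0, dtype=np.float64).reshape(-1, 2)
    back = np.asarray(p0_back, dtype=np.float64).reshape(-1, 2)
    round_trip = np.linalg.norm(start - back, axis=1)
    found = (np.asarray(status_fwd).ravel() == 1) & (np.asarray(status_bwd).ravel() == 1)
    return found & (round_trip < max_error)
```

A tighter `max_error` keeps fewer but more reliable vectors, which is the same speed-versus-accuracy trade-off mentioned under parameter tuning.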

Challenges and Limitations

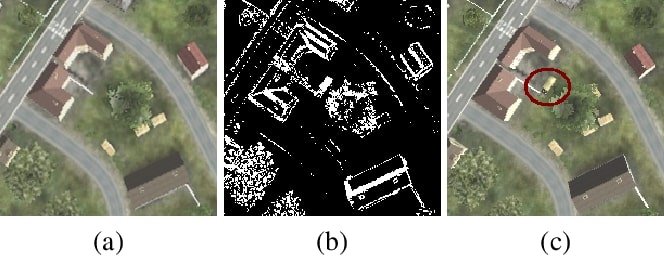

Occlusions, illumination changes, and textureless regions still present a significant challenge to the accuracy of optical flow systems. Predictive models that estimate the motion of occluded regions based on surrounding visible elements can improve the results. Adaptive algorithms that normalize lighting variations may also help compensate for illumination changes. Optical flow for sparsely textured regions can benefit from integrating spatial context or higher-level cues to infer motion.

Another challenge commonly faced is known as the “one equation, two unknowns” problem. The optical flow equation gives a single constraint per pixel but has two unknown velocity components, so the system is underdetermined. By assuming motion consistency over small windows, you can mitigate this problem, since aggregating the constraints from neighboring pixels provides enough equations to solve for the unknowns. Other advanced methods may further refine estimates using iterative regularization techniques.
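Written out, the window assumption turns one equation into many. As a sketch, with $p_1, \dots, p_n$ denoting the pixels in the window (notation introduced here for illustration), every pixel shares the same unknown velocity:

$$\begin{bmatrix} I_x(p_1) & I_y(p_1) \\ \vdots & \vdots \\ I_x(p_n) & I_y(p_n) \end{bmatrix} \begin{bmatrix} v_x \\ v_y \end{bmatrix} = -\begin{bmatrix} I_t(p_1) \\ \vdots \\ I_t(p_n) \end{bmatrix}$$

Calling the matrix on the left $A$ and the right-hand side $b$, the least-squares estimate is

$$\begin{bmatrix} v_x \\ v_y \end{bmatrix} = (A^\top A)^{-1} A^\top b$$

This only works when $A^\top A$ is invertible, which requires the window to contain gradients in more than one direction. Along a straight edge or in a flat region the matrix is singular or nearly so, and the motion remains ambiguous.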

Current models and algorithms may struggle in complex environments with rapid movements, diverse object scales, and 3D depth variations. One solution is the development of multi-scale models that operate across different resolutions. Another is the integration of depth information from stereo vision or LiDAR, for example, to enhance 3D scene interpretation.

What’s Next?

Viso Suite helps businesses and enterprises integrate computer vision tasks, like optical flow, into their ML pipelines. By providing an all-in-one solution for the entire ML pipeline, teams can reduce the time to value of their applications from 3 months to 3 days. Learn more about Viso Suite by booking a demo with our team today.