Deliver this mission to life

Producing photographs with Deep Studying is arguably one of many best and most versatile purposes of this technology of generative, weak AI. From producing fast advertising content material to augmenting artist workflows to making a enjoyable studying device for AI, we are able to simply see this ubiquity in motion with the widespread recognition of the Steady Diffusion household of fashions. That is largely to the Stability AI and Runway ML groups efforts to maintain the mannequin releases open sourced, and in addition owes an enormous due to the lively group of builders creating instruments with these fashions. Collectively, these traits have made the mannequin extremely accessible and straightforward to run – even for individuals with no coding expertise!

Since their launch, these Latent Diffusion Mannequin based mostly text-to-image fashions have confirmed extremely succesful. Up till now, the one actual competitors from the open supply group was with different Steady Diffusion releases. Notably, there may be now an unlimited library of fine-tuned mannequin checkpoints obtainable on websites like HuggingFace and CivitAI.

On this article, we’re going to cowl our favourite open supply, text-to-image generative mannequin to be launched since Steady Diffusion: PixArt Alpha. This superior new mannequin boasts an exceptionally low coaching price, a modern coaching technique that abstracts important components from a sometimes blended methodology, extremely informative coaching information, and implement a novel T2I Environment friendly transformer. On this article, we’re going to talk about these traits in additional element in an effort to present what makes this mannequin so promising, earlier than diving into our a modified model of the unique Gradio demo working on a Paperspace Pocket book.

Click on the Run on Paperspace on the prime of this pocket book or beneath the “Demo” part to run the app on a Free GPU powered Pocket book.

PixArt Alpha: Undertaking Breakdown

On this part, we’ll take a deeper have a look at the mannequin’s structure, coaching methodology, and the outcomes of the mission compared to different T2I fashions when it comes to coaching price and efficacy. Let’s start with a breakdown of the novel mannequin structure.

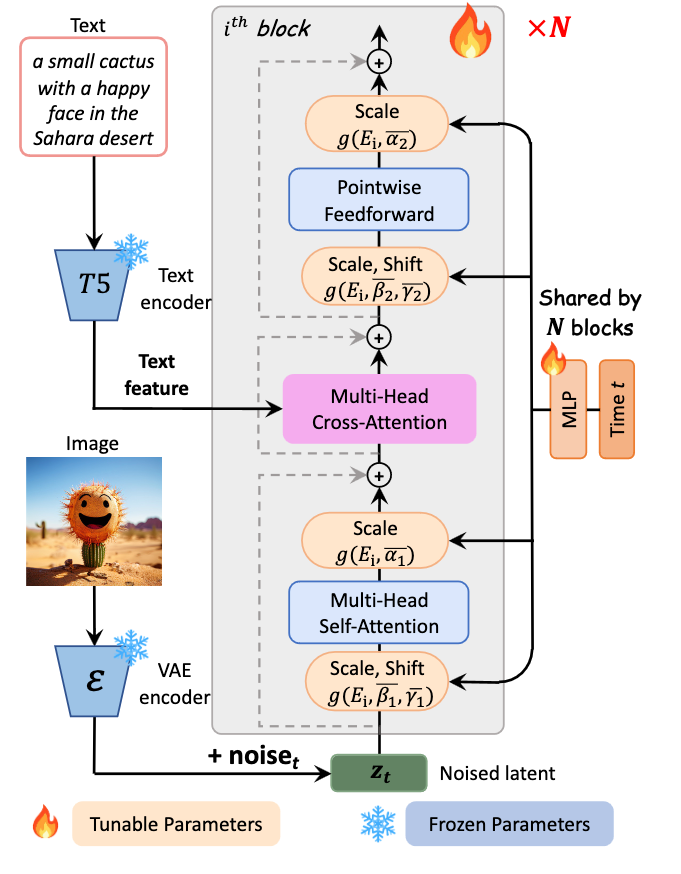

Mannequin structure

The mannequin structure is acquainted to different T2I fashions, as it’s based mostly on the Diffusion Transformer mannequin, however has some vital tweaks that provide noticeable enhancements. As recorded within the appendix of the paper, “We undertake the DiT-XL mannequin, which has 28 Transformer blocks in complete for higher efficiency, and the patch dimension of the PatchEmbed layer in ViT (Dosovitskiy et al., 2020b) is 2×” (Supply). With that in thoughts, we are able to construct a tough thought of the construction of the mannequin, however that does not expose all of the notable modifications they made.

Let’s stroll via the method every text-image pair makes via a Transformer block throughout coaching, so we are able to have a greater thought of what different modifications they made to DiT-XL to garner such substantial reductions in price.

First, we begin with our textual content and our picture being entered right into a T5 textual content encoder and Variational AutoEncoder (VAE) encoder modal, respectively. These encoders have frozen parameters, this prevents sure elements of the mannequin from being adjusted throughout coaching. We do that to protect the unique traits of those encoders all through the coaching course of. Right here our course of splits.

The picture information is subtle with noise to create a noised latent illustration. There it’s scaled and shifted utilizing AdaLN-single layers, that are linked to and might alter parameters throughout N totally different Transformer blocks. This scale and shift worth is set by a block-specific Multi Layer Perceptron (MLP), proven on the appropriate of the determine. It then passes via a self-attention layer and an extra AdaLN-single scaling layer. There it’s handed to the Multi-Head Cross Consideration layer.

Within the different path, the textual content function is entered on to the Multi-Head Cross Consideration layer, which is positioned between the self-attention layer and feed ahead layer of every Transformer block. Successfully, this permits the mannequin to work together with the textual content embedding in a versatile method. The output mission layer is initialized at zero to behave as an identification mapping and protect the enter for the next layers. In observe, this permits every block to inject textual situations. (Supply)

The Multi-Head Cross Consideration Layer has the flexibility to combine two totally different embedding sequences, as long as they share the identical dimension. (Supply). From there, the now unified embedding are handed to an extra Scale + Shift layer with the MLP. Subsequent, the Pointwise Feedforward layer helps the mannequin seize complicated relationships within the information by making use of a non-linear transformation independently to every place. It introduces flexibility to mannequin complicated patterns and dependencies throughout the sequence. Lastly, the embedding is handed to a remaining Scale layer, and on to the block output.

This intricate course of permits these layers to regulate to the inputted options of the text-image pairs over the time of coaching, and, very like with different diffusion fashions, the method could be functionally reversed for the aim of inference.

Now that we now have seemed on the course of a datum takes in coaching, let’s check out the coaching course of itself in higher element.

Coaching PixArt Alpha

The coaching paradigm for the mission has immense significance due to the influence it has on the associated fee to coach and remaining efficiency of the mannequin. The authors particularly recognized their novel technique as being important for the general success of the mannequin. They describe this technique as involving decomposing the duty of coaching the mannequin into three distinct subtasks.

First, they skilled the mannequin to concentrate on studying the pixel distribution of pure photographs. They skilled a class-conditional picture generational mannequin for pure photographs with an acceptable initialization. This creates a boosted ImageNet mannequin pre-trained on related picture information, and PixArt Alpha is designed to be suitable with these weights

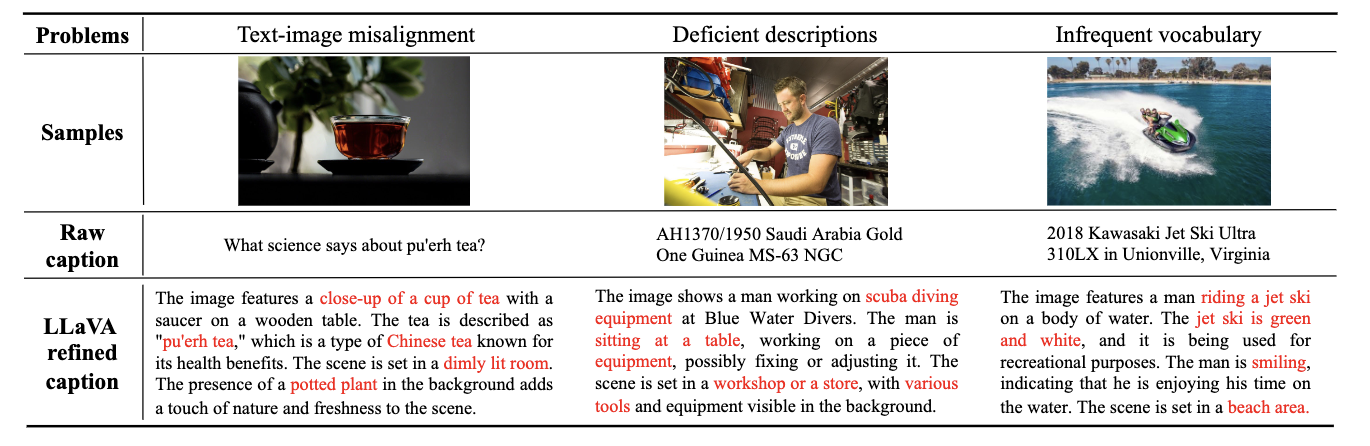

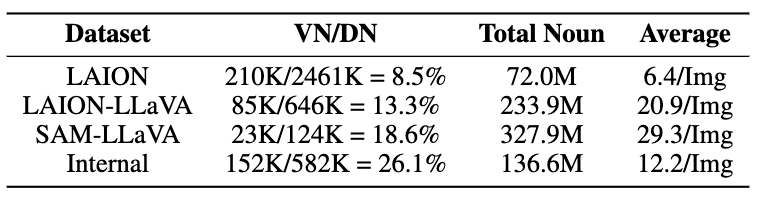

Within the second stage, the mannequin is tasked with studying to align the text-image object pairs. As a way to obtain an correct alignment between textual content ideas and pictures, they constructed a dataset consisting of text-image pairs utilizing LLaVA to caption samples from the SAM dataset. LLaVA-labeled captions had been considerably extra sturdy in terms of having ample legitimate nouns and idea density for finetuning when in comparison with LLaVA (for extra particulars, please go to the Dataset building part of the paper)

Lastly, they used the third stage to lift aesthetic high quality. Within the third coaching stage, they used augmented “Inside” information from JourneyDB with excessive “aesthetic” high quality. By fine-tuning the mannequin on these, they can improve the ultimate output for aesthetic high quality and element. This inner information they created is reported to be of even larger high quality than that created by SAM-LLaVA, when it comes to Legitimate Nouns over Complete Distinct Nouns.

Mixed, this decoupled pipeline is extraordinarily efficient at lowering the coaching price and time for the mannequin. Coaching for the mixed high quality of those three traits has confirmed troublesome, however by decomposing these processes and utilizing totally different information sources for every stage, the mission authors are in a position to obtain a excessive diploma of coaching high quality at a fraction of the associated fee.

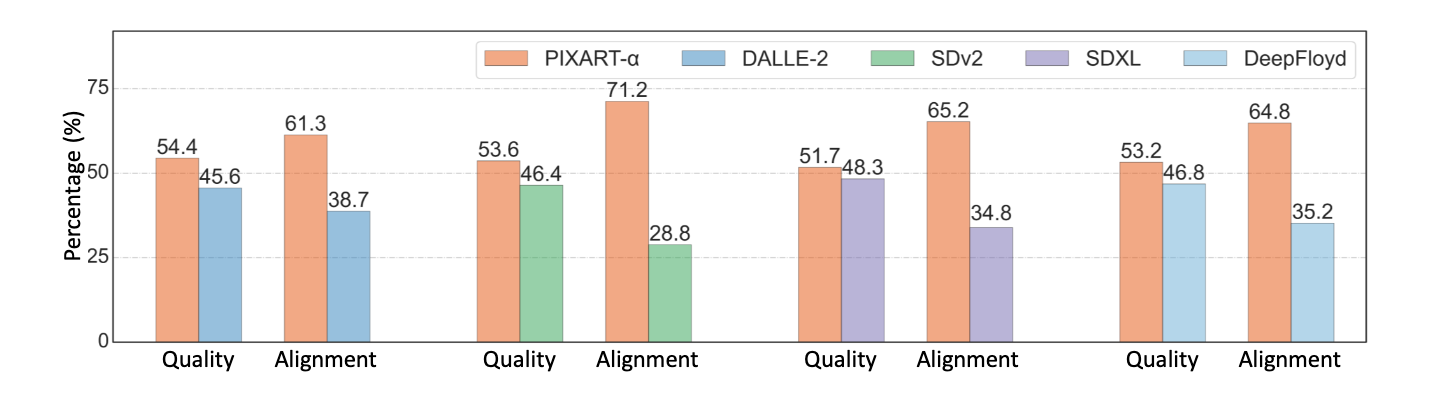

Price and efficacy advantages of PixArt Alpha in opposition to competitors

Now that we now have seemed a bit deeper on the mannequin structure and coaching methodology & reasoning, let’s talk about the ultimate outcomes of the PixArt Alpha mission. It is crucial when discussing this mannequin to debate its extremely low, comparative price of coaching to different T2I fashions.

The authors of the mission have supplied these three helpful figures for our comparability. Let’s establish a couple of key metrics from these graphics:

- PixArt Alpha trains in 10.8% of the time as Steady Diffusion v1.5 at a better decision (512 vs 1024).

- Trains in lower than 2% of coaching time of RAPHAEL, one of many newest closed supply releases for the mannequin

- Makes use of .2% of knowledge used to coach Imagen, presently #3 on Paperswithcode.com’s recording of prime text-to-image fashions examined on COCO

All collectively, these metrics point out that PixArt was extremely inexpensive to coach in comparison with competitors, however how does it carry out compared?

As we are able to see from the determine above, PixArt Alpha often outperforms aggressive open supply fashions when it comes to each picture constancy and text-image alignment. Whereas can’t evaluate it to closed supply fashions like Imagen or RAPHAEL, it stands to cause that their efficiency can be comparable, albeit barely inferior, given what we learn about these fashions.

Deliver this mission to life

Now that we now have gotten the mannequin breakdown out of the way in which, we’re prepared to leap proper into the code demo. For this demonstration, we now have supplied a pattern Pocket book in Paperspace that can make it straightforward to launch the PixArt Alpha mission on any Paperspace machine. We suggest extra highly effective machines just like the A100 or A6000 to get quicker outcomes, however the P4000 will generate photographs of equal high quality.

To get began, click on the Run on Paperspace hyperlink above or on the prime of the article.

Setup

To setup the appliance setting as soon as our Pocket book is spun up, all we have to do is run the primary code cell within the demo Pocket book.

!pip set up -r necessities.txt

!pip set up -U transformers speed upThis can set up the entire wanted packages for us, after which replace the transformers and speed up packages. This can guarantee the appliance runs easily once we proceed to the following cell and run our software.

Working the modified app

To run the appliance from right here, merely scroll the second code cell and execute it.

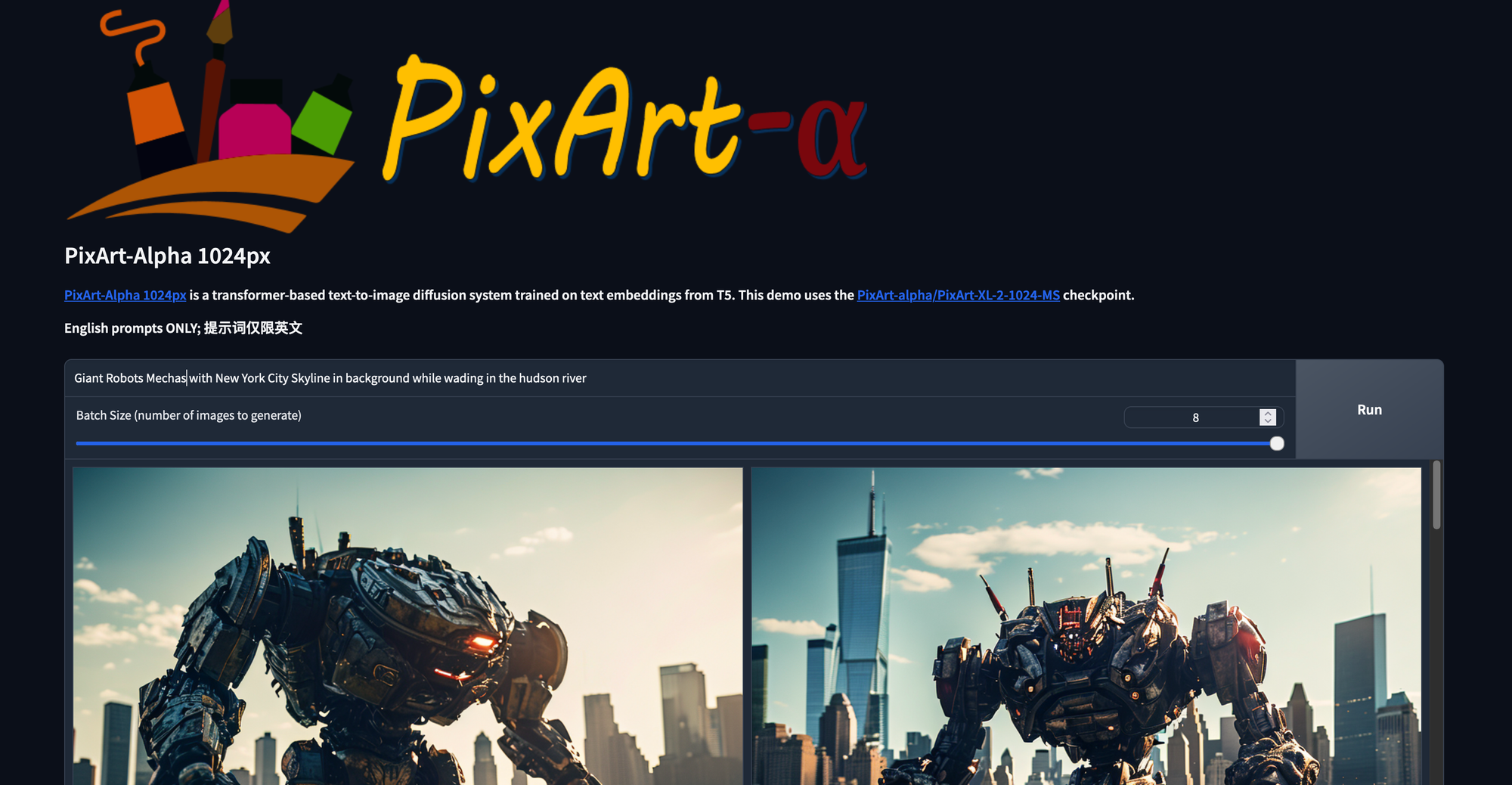

!python app.pyThis can launch our Gradio software, which has been modified barely from the demo for PixArt Alpha readers might have seen on their Github or HuggingFace web page. Let’s check out what it may possibly do, talk about the enhancements we now have added, after which check out some generated samples!

Right here is the primary web page for the net GUI. From right here, we are able to merely kind in no matter immediate we need and alter the slider to match the specified variety of outputs. Notice that this resolution will not generate a number of photographs per run of the mannequin, as the present Transformers pipeline appears to solely generated unconditional outputs with a couple of picture generated per run. Nonetheless, we’ll replace the slider to have batch dimension and looping parameters when the pipeline itself can cope with it. For now, that is the simplest method to view a number of photographs generated with the identical parameters without delay.

We have now additionally adjusted the gallery modal inside to show all of the outputs from a present run. These are then moved to a brand new folder after the run is full.

Within the part beneath our output, we are able to discover a dropdown for superior settings. Right here we are able to do issues like:

- Manually set the seed or set it to be randomized

- Toggle on or off the detrimental immediate, which can act like the alternative of our enter immediate

- Enter the detrimental immediate

- Enter a picture fashion. Types will have an effect on the ultimate output, and embrace no fashion, cinematic, photographic, anime, manga, digital artwork, pixel artwork, fantasy artwork, neonpunk, and 3d mannequin types.

- Modify the steerage scale. Not like steady diffusion, this worth must be pretty low (advisable 4.5) to keep away from any artifacting

- Modify variety of diffusion inference steps

Let’s check out some enjoyable examples we made.

Whereas the mannequin nonetheless clearly has some work to be performed, these outcomes present immense promise for an preliminary launch.

Closing ideas

As proven within the article right this moment, PixArt Alpha represents the primary tangible, open supply competitors to Steady Diffusion to hit the market. We’re keen t see how this mission continues to develop going ahead, and can be returning this subject shortly to show our readers tips on how to fine-tune PixArt alpha with Dreambooth!