Model accuracy is a well-known metric for gauging a model’s predictive power. However, it can be misleading and lead to disastrous consequences. This is where precision and recall come in.

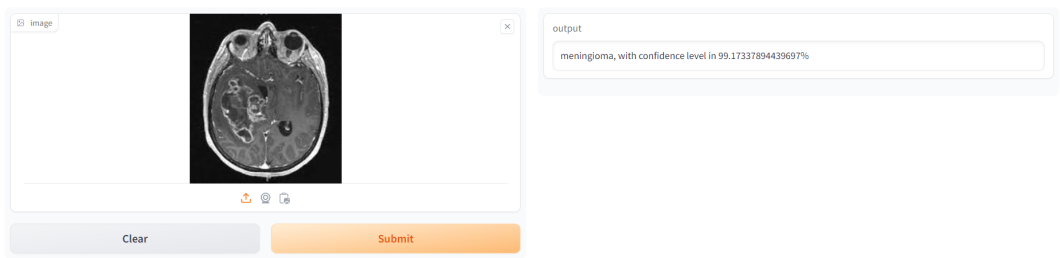

Imagine a computer vision (CV) model for diagnosing cancerous tumors with 99% accuracy. While the model’s performance seems impressive, it still gets 1% of cases wrong, and if those errors are missed tumors, the consequences for the affected patients can be severe.

This is why we need a toolset of methods to better understand and analyze model performance. Precision and recall are two valuable metrics that allow for better model evaluation. They also underpin other essential metrics, such as the F1 score and ROC-AUC.

In this article, we’ll discuss:

- Accuracy and its limitations

- Precision, recall, and their trade-off

- F1-score, precision-recall curve, and ROC-AUC

- Multi-class precision and recall

- Use cases and examples

About us: Viso.ai provides a robust end-to-end no-code computer vision solution, Viso Suite. Our software enables ML teams to train deep learning and machine learning models and deploy them in computer vision applications, completely end-to-end. Get a demo.

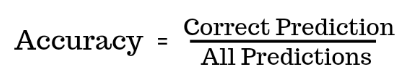

What is Accuracy?

Accuracy measures how often a model predicts the outcome correctly relative to the total number of predictions. The metric is widely used for measuring model performance in computer vision tasks, including classification, object detection, and segmentation.

While accuracy is intuitive and easy to implement, it is only suitable for scenarios where the training and validation datasets are relatively balanced. For skewed datasets, accuracy scores can be unrealistically high.

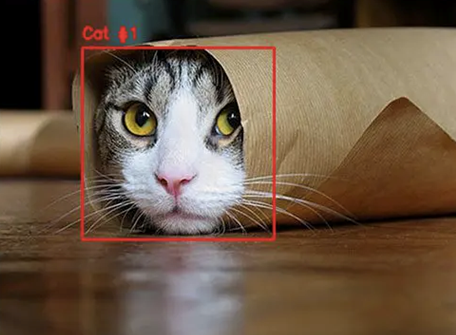

For example, a CV model for classifying cats can reach 95% accuracy if the dataset consists of 95 cat images and only 5 dog images. The model can classify every image as a cat and still be right 95% of the time, because the dataset has so few dog images.
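The cat-and-dog scenario can be reproduced in a few lines. This is a minimal sketch with made-up labels; the `accuracy` helper and the "always cat" predictions are illustrative assumptions, not a real model.

```python
# Hypothetical toy dataset: 95 cat images and 5 dog images.
labels = ["cat"] * 95 + ["dog"] * 5

# A "model" that ignores its input and always answers "cat".
predictions = ["cat"] * len(labels)


def accuracy(y_true, y_pred):
    """Fraction of predictions that match the true labels."""
    correct = sum(t == p for t, p in zip(y_true, y_pred))
    return correct / len(y_true)


overall_accuracy = accuracy(labels, predictions)
dogs_found = sum(t == "dog" and p == "dog" for t, p in zip(labels, predictions))

print(f"accuracy: {overall_accuracy:.2f}")              # accuracy: 0.95
print(f"dogs correctly identified: {dogs_found} of 5")  # 0 of 5
```

The accuracy looks strong even though the model never recognizes a single dog, which is exactly the blind spot described above.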

A better measure should recognize these prediction inconsistencies and highlight the number of incorrect predictions to guide the model-building process correctly.

That’s where precision and recall come into play. However, let’s first discuss the key concepts before looking at how precision and recall work.

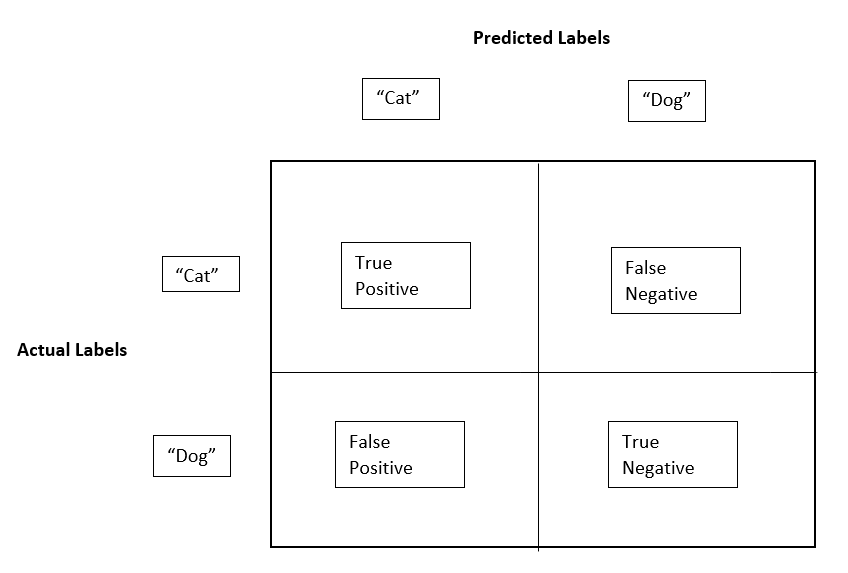

The Confusion Matrix

A confusion matrix is a table that provides a detailed breakdown of the model’s predictions by comparing its output to the actual targets. It shows the number of correct and incorrect predictions for each class, split into positive and negative assignments.

The correct predictions in a confusion matrix are called true positives (TP) and true negatives (TN). For instance, in our cat classification model, true positives are cat images that the model correctly labels as “cat,” and true negatives are dog images that the model correctly identifies as “dog” or “not cat.”

The incorrect predictions are called false positives (FP) and false negatives (FN). For example, false positives would be dog images predicted as cats, and false negatives would be cat images predicted as dogs.

Confusion matrices are essential for calculating precision and recall, which are important metrics for assessing the quality of a classification model.
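As a rough illustration, the four cells of a binary confusion matrix can be counted directly from paired labels. The function name `confusion_counts` and the sample labels below are hypothetical, chosen only to mirror the cat example with “cat” as the positive class.

```python
def confusion_counts(y_true, y_pred, positive="cat"):
    """Return (TP, FP, FN, TN), treating `positive` as the positive class."""
    tp = fp = fn = tn = 0
    for truth, pred in zip(y_true, y_pred):
        if pred == positive and truth == positive:
            tp += 1  # cat correctly labeled as cat
        elif pred == positive:
            fp += 1  # dog wrongly labeled as cat
        elif truth == positive:
            fn += 1  # cat wrongly labeled as dog
        else:
            tn += 1  # dog correctly labeled as dog
    return tp, fp, fn, tn


# Hypothetical labels, made up for illustration.
y_true = ["cat", "cat", "cat", "dog", "dog", "cat", "dog", "cat"]
y_pred = ["cat", "dog", "cat", "cat", "dog", "cat", "dog", "dog"]

tp, fp, fn, tn = confusion_counts(y_true, y_pred)
print(f"TP={tp} FP={fp} FN={fn} TN={tn}")  # TP=3 FP=1 FN=2 TN=2
```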

Precision vs. Recall

The confusion matrix forms the basis for calculating the precision and recall metrics. The following explains the two metrics in detail.

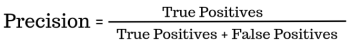

Precision

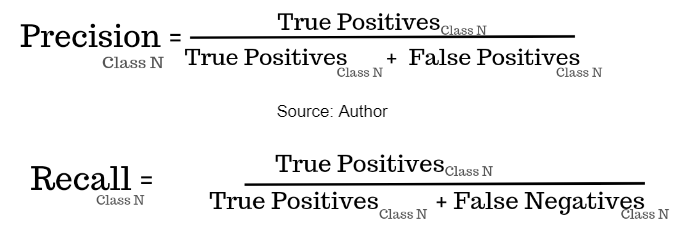

Precision is a metric that measures the proportion of true positives (correct positive predictions) against the model’s total positive predictions. The formula below summarizes the concept.
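Using the confusion-matrix counts, the standard definition of precision can be written as:

$$
\text{Precision} = \frac{TP}{TP + FP}
$$

Here, $TP$ is the number of true positives and $FP$ the number of false positives, so the denominator is every prediction the model labeled positive.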

The precision metric is important because it focuses on the number of true positives, which are the correct predictions of the positive class.

Precision is suitable in cases where false positives are costly. For instance, a recommendation system that screens videos for kids, with “safe” as the positive class, should have high precision. The model should not classify unsafe videos (actual negatives) as safe (false positives). In this case, the model will be acceptable even if it flags most safe videos as unsafe.

However, precision is not suitable where you want to minimize false negatives. So, in the example highlighted earlier, a tumor detection model would not be acceptable if it has few false positives (high precision) but misclassifies many actual tumor cases as normal (false negatives).

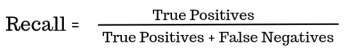

Recall

Recall, also known as sensitivity or true positive rate, is a metric that measures the proportion of actual positives that a model correctly identifies.

It measures the number of correct class predictions relative to the number of samples in the corresponding class. For instance, out of 95 cat images, how many cats did the model predict correctly?

The formula below illustrates the concept.
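In terms of the confusion-matrix counts, the standard definition of recall is:

$$
\text{Recall} = \frac{TP}{TP + FN}
$$

The denominator $TP + FN$ is the number of samples that actually belong to the positive class, for example the 95 cat images.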

Unlike accuracy, which calculates the overall rate of correct predictions, recall zooms in on avoiding false negatives.

The recall metric is vital in ML use cases where missing true positive instances can have significant consequences. For example, an acceptable tumor detection model will have high recall: it may flag many normal cases as tumorous (high false positives), but it will rarely label a cancerous tumor as normal (false negative).
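To make the contrast concrete, here is a small sketch that computes both metrics from confusion-matrix counts. The two sets of counts are invented for illustration (1,000 hypothetical scans, 50 of which contain a tumor) and do not come from any real system.

```python
def precision(tp, fp):
    """TP / (TP + FP); returns 0.0 if the model made no positive predictions."""
    return tp / (tp + fp) if (tp + fp) else 0.0


def recall(tp, fn):
    """TP / (TP + FN); returns 0.0 if there are no actual positives."""
    return tp / (tp + fn) if (tp + fn) else 0.0


# Hypothetical model A: flags many scans and misses few tumors.
cautious = {"tp": 48, "fp": 150, "fn": 2}
# Hypothetical model B: flags few scans and misses many tumors.
conservative = {"tp": 30, "fp": 5, "fn": 20}

cautious_p = precision(cautious["tp"], cautious["fp"])
cautious_r = recall(cautious["tp"], cautious["fn"])
conservative_p = precision(conservative["tp"], conservative["fp"])
conservative_r = recall(conservative["tp"], conservative["fn"])

print(f"cautious:     precision={cautious_p:.2f} recall={cautious_r:.2f}")
print(f"conservative: precision={conservative_p:.2f} recall={conservative_r:.2f}")
```

Under these assumed counts, model A has low precision but misses only 2 of 50 tumors, while model B has high precision but misses 20. For tumor detection, model A is the safer choice.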

Precision vs. Recall Trade-off

From the definitions of precision and recall, we can see an inherent trade-off between the two metrics. For instance, a tumor detection model tuned for high recall may have low precision, because it flags almost every case as tumorous (high false positives).

In contrast, the video recommendation model, where “safe” is the positive class, can have high precision but low recall, because it labels almost every video as unsafe (high false negatives).

As such, using precision and recall in isolation does not provide the complete picture. You must use them together and select a model that gives an optimal result for both.

F1 Score, Precision-Recall Curve, and ROC-AUC

The F1 score, precision-recall curve, and receiver operating characteristic (ROC) curve are helpful measures that allow you to select a model with suitable precision and recall scores.

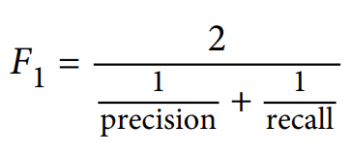

F1 Score

The F1 score is the harmonic mean of the precision and recall scores. The formula below illustrates the concept.
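Written out, with $P$ for precision and $R$ for recall, the harmonic mean is:

$$
F_1 = \frac{2 \cdot P \cdot R}{P + R} = \frac{2\,TP}{2\,TP + FP + FN}
$$

The second form follows from substituting $P = \frac{TP}{TP + FP}$ and $R = \frac{TP}{TP + FN}$.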

As the formula shows, the F1 score gives equal weight to precision and recall. So, a model with 70% precision and 50% recall will have an overall F1 score of about 58%. You can compare the F1 scores of multiple models to judge which one performs best.
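The 58% figure can be checked with a short calculation. The helper name `f1_score` below is an illustrative choice, and the second pair of values is a made-up example of a lopsided model.

```python
def f1_score(precision, recall):
    """Harmonic mean of precision and recall; 0.0 if both are zero."""
    if precision + recall == 0:
        return 0.0
    return 2 * precision * recall / (precision + recall)


f1 = f1_score(0.70, 0.50)
print(f"F1 for P=0.70, R=0.50: {f1:.2f}")  # 0.58

# Hypothetical lopsided model: very high precision, very low recall.
lopsided_f1 = f1_score(0.95, 0.05)
lopsided_mean = (0.95 + 0.05) / 2
print(f"F1={lopsided_f1:.3f} vs arithmetic mean={lopsided_mean:.2f}")
```

Because the harmonic mean is pulled toward the smaller value, the lopsided model scores far lower on F1 than a plain average would suggest.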

Precision-Recall Curve

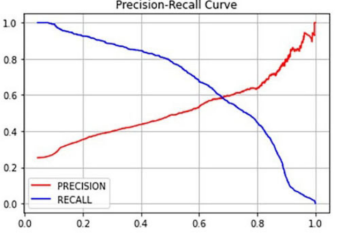

Precision and recall scores can change with different thresholds. The threshold is the minimum probability for categorizing a prediction as positive. For a cat classification model, a threshold of 0.5 means the model will label an image as a cat (positive) if the prediction probability is greater than or equal to 0.5.

In general, setting a higher threshold can lead the model to have higher precision but lower recall. For instance, a threshold of 0.8 would mean the model must be 80% confident that the image is a cat to label it as a “cat.”

Likewise, raising the threshold from 0.5 to 0.7 means the model will label an image as a cat only if the prediction probability is greater than or equal to 0.7.

However, many images may have lower prediction probabilities, causing the model to classify several cat images as dogs (high false negatives), leading to a lower recall. The diagram below shows how precision and recall may vary with different threshold values.
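The effect of the threshold can be sketched with a handful of predicted probabilities. The scores and labels below are invented for illustration (1 = cat, 0 = dog), and `precision_recall_at` is a hypothetical helper, not a library function.

```python
# Hypothetical predicted probabilities of "cat" with true labels (1 = cat, 0 = dog).
scores = [0.95, 0.90, 0.85, 0.75, 0.65, 0.55, 0.45, 0.40, 0.30, 0.10]
labels = [1, 1, 1, 1, 1, 0, 1, 0, 0, 0]


def precision_recall_at(threshold, scores, labels):
    """Precision and recall when scores >= threshold are labeled positive."""
    tp = sum(1 for s, y in zip(scores, labels) if s >= threshold and y == 1)
    fp = sum(1 for s, y in zip(scores, labels) if s >= threshold and y == 0)
    fn = sum(1 for s, y in zip(scores, labels) if s < threshold and y == 1)
    # Convention assumed here: precision is 1.0 when nothing is predicted positive.
    precision = tp / (tp + fp) if (tp + fp) else 1.0
    recall = tp / (tp + fn) if (tp + fn) else 0.0
    return precision, recall


results = {t: precision_recall_at(t, scores, labels) for t in (0.2, 0.5, 0.7, 0.8)}
for threshold, (p, r) in results.items():
    print(f"threshold={threshold:.1f} precision={p:.2f} recall={r:.2f}")
```

On this toy data, precision climbs from about 0.67 to 1.00 as the threshold rises from 0.2 to 0.8, while recall drops from 1.00 to 0.50.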

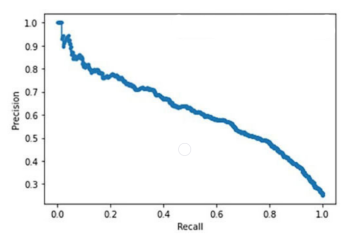

Visualizing the precision and recall trade-off together with different threshold values is helpful. The diagram below illustrates the precision-recall curve based on the graph above.

Here, recall falls almost linearly as precision rises. You can use this to select an appropriate precision-recall score for your project. For instance, you will likely want high recall for the tumor recognition system (low false negatives).

Let’s say you want a recall score of 90%. This means your precision score will be roughly 40%.

Based on this, you can set the threshold value to 0.2 by looking at the graph above. In this case, the model will classify a case as tumorous even if there is only a 20% chance that a tumor is present.

However, you can develop another model with a better precision-recall trade-off. Such a model’s precision-recall curve will lie closer to the top-right corner.
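Picking a threshold for a target recall can also be done programmatically rather than by reading a graph. The sketch below uses invented scores and labels and a hypothetical helper, `highest_threshold_for_recall`, and it assumes the data contains at least one positive sample.

```python
def highest_threshold_for_recall(scores, labels, target_recall):
    """Return (threshold, precision, recall) for the highest candidate
    threshold whose recall meets the target, or None if none does."""
    total_positives = sum(labels)
    # Recall can only grow as the threshold is lowered, so the first
    # candidate that meets the target is the highest such threshold.
    for threshold in sorted(set(scores), reverse=True):
        tp = sum(1 for s, y in zip(scores, labels) if s >= threshold and y == 1)
        fp = sum(1 for s, y in zip(scores, labels) if s >= threshold and y == 0)
        recall = tp / total_positives
        if recall >= target_recall:
            return threshold, tp / (tp + fp), recall
    return None


# Hypothetical predicted probabilities with true labels (1 = positive).
scores = [0.95, 0.90, 0.85, 0.75, 0.65, 0.55, 0.45, 0.40, 0.30, 0.10]
labels = [1, 1, 1, 1, 1, 0, 1, 0, 0, 0]

threshold, precision, recall = highest_threshold_for_recall(scores, labels, 0.90)
print(f"threshold={threshold} precision={precision:.2f} recall={recall:.2f}")
```

Choosing the highest threshold that still meets the recall target keeps precision as high as the data allows for that level of recall.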

ROC Curve and AUC

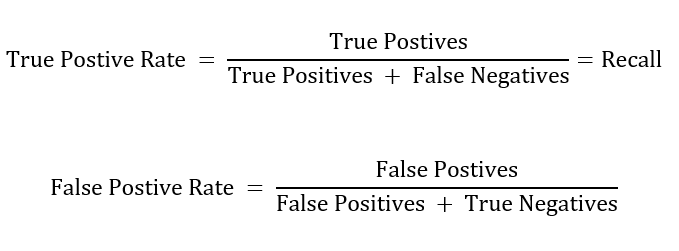

Another valuable visualization is the ROC curve. Similar to the PR curve, it illustrates the trade-off between the true positive rate (recall) and the false positive rate at different classification thresholds. The following shows the calculation for true and false positive rates.
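In terms of the confusion-matrix counts, the two rates are defined as:

$$
\text{TPR} = \frac{TP}{TP + FN}, \qquad \text{FPR} = \frac{FP}{FP + TN}
$$

The true positive rate is identical to recall, while the false positive rate is the share of actual negatives that the model wrongly labels as positive.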

The diagram below shows the ROC for a particular model against several threshold values.

Again, the diagram highlights the trade-off: high recall comes with a high false positive rate, which usually implies lower precision.

Increasing recall (a high true positive rate) for the tumor classification model means capturing as many actual tumorous cases as possible. However, this may lead to low precision, since the model will also classify many non-tumorous cases as tumorous (a high false positive rate).

Selecting a model based on ROC requires you to compute the area under the curve (AUC). A completely random model with no predictive power will have an AUC score of 0.5, the area of the triangle under the diagonal dotted line in the image. A perfect model will have an AUC score of 1, and its curve (the orange line) will sit further toward the top-left corner.
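A minimal sketch of how the ROC points and the AUC could be computed by hand, using the trapezoidal rule. The data and the helper names `roc_points` and `auc` are assumptions made for illustration; in practice a library routine would normally be used.

```python
def roc_points(scores, labels):
    """Return (FPR, TPR) points, sweeping the threshold from high to low."""
    positives = sum(labels)
    negatives = len(labels) - positives
    points = [(0.0, 0.0)]  # threshold above every score: nothing flagged
    for threshold in sorted(set(scores), reverse=True):
        tp = sum(1 for s, y in zip(scores, labels) if s >= threshold and y == 1)
        fp = sum(1 for s, y in zip(scores, labels) if s >= threshold and y == 0)
        points.append((fp / negatives, tp / positives))
    return points


def auc(points):
    """Area under the curve using the trapezoidal rule."""
    area = 0.0
    for (x0, y0), (x1, y1) in zip(points, points[1:]):
        area += (x1 - x0) * (y0 + y1) / 2
    return area


# Hypothetical scores with true labels (1 = positive).
scores = [0.95, 0.90, 0.85, 0.75, 0.65, 0.55, 0.45, 0.40, 0.30, 0.10]
labels = [1, 1, 1, 1, 1, 0, 1, 0, 0, 0]

model_auc = auc(roc_points(scores, labels))
perfect_auc = auc(roc_points([0.9, 0.8, 0.3, 0.2], [1, 1, 0, 0]))
# A model that gives every sample the same score cannot rank anything.
uninformative_auc = auc(roc_points([0.5, 0.5, 0.5, 0.5], [1, 1, 0, 0]))

print(f"model AUC: {model_auc:.3f}")
print(f"perfect AUC: {perfect_auc:.1f}, uninformative AUC: {uninformative_auc:.1f}")
```

The perfectly separated scores give an AUC of 1, and the constant-score model lands on the diagonal with an AUC of 0.5, matching the two reference points described above.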

Multi-Class Precision-Recall

So far, the definitions of precision and recall we have looked at are for binary classification, where a model only distinguishes between two classes. However, you can also use the two metrics for multi-class classification.

Since multiple classes exist in multi-class models, we can compute precision and recall scores for each class as follows.
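Treating each class $k$ in turn as the positive class and all other classes as negative, the per-class scores are commonly written as:

$$
\text{Precision}_k = \frac{TP_k}{TP_k + FP_k}, \qquad \text{Recall}_k = \frac{TP_k}{TP_k + FN_k}
$$

Here $TP_k$, $FP_k$, and $FN_k$ are the true positives, false positives, and false negatives counted for class $k$ alone; the subscript notation is introduced here for illustration.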

However, per-class precision and recall scores alone do not help assess a model’s overall performance. A model with fifty classes will have fifty precision and fifty recall scores. Judging the model’s predictive power from these will be challenging.

The solution is to use two methods, called micro and macro averaging, that combine the precision and recall scores for each class to provide an aggregate metric for evaluation.

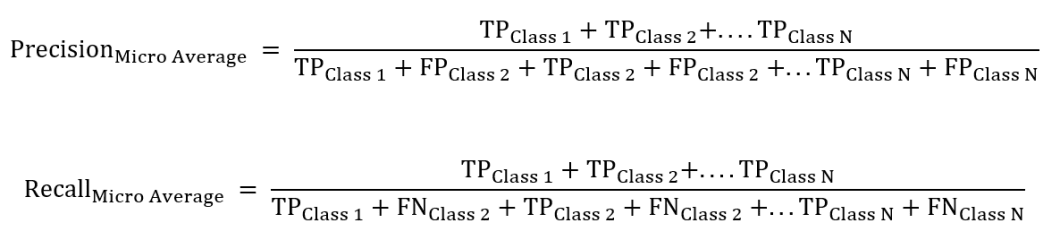

Micro Averaging

Micro averaging aggregates the true positives, false positives, and false negatives across all classes and then calculates precision and recall based on the aggregate values. The micro average gives equal weight to each instance from each class. The following illustrates the formula.
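For $K$ classes, with $TP_k$, $FP_k$, and $FN_k$ denoting the counts for class $k$ (notation assumed here for illustration), the micro-averaged scores are:

$$
\text{Precision}_{\text{micro}} = \frac{\sum_{k=1}^{K} TP_k}{\sum_{k=1}^{K} \left(TP_k + FP_k\right)}, \qquad
\text{Recall}_{\text{micro}} = \frac{\sum_{k=1}^{K} TP_k}{\sum_{k=1}^{K} \left(TP_k + FN_k\right)}
$$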

While micro averaging accounts for the classification of every instance, it can be misleading for skewed datasets.

For instance, consider two classes, Class 1 and Class 2, where Class 1 has 95 samples and Class 2 has 5 samples.

A model that classifies all samples of Class 1 correctly but misclassifies all samples of Class 2 will still have high micro-averaged precision and recall (95% each). In such scenarios, it is more appropriate to use the macro average.

Macro Averaging

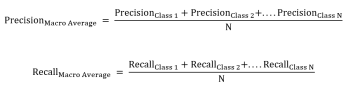

Macro averaging calculates precision and recall for each class individually and then takes the average across all classes. Macro averaging gives equal weight to each class, as the formula below illustrates.
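For $K$ classes, with $\text{Precision}_k$ and $\text{Recall}_k$ denoting the scores for class $k$ (notation assumed here for illustration), the macro-averaged scores are:

$$
\text{Precision}_{\text{macro}} = \frac{1}{K} \sum_{k=1}^{K} \text{Precision}_k, \qquad
\text{Recall}_{\text{macro}} = \frac{1}{K} \sum_{k=1}^{K} \text{Recall}_k
$$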

Considering the same example as above, Class 2’s precision and recall scores will be low, which pulls the macro averages down. Class 2’s recall is 0, so the macro-averaged recall falls to 50%.
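The two-class example can be worked through in code to compare both averaging methods side by side. This is a sketch under stated assumptions: the helper names are hypothetical, and precision for a class with no positive predictions is treated as 0.

```python
def per_class_counts(y_true, y_pred, classes):
    """Return {class: (TP, FP, FN)} using a one-vs-rest view of each class."""
    counts = {}
    for c in classes:
        tp = sum(1 for t, p in zip(y_true, y_pred) if t == c and p == c)
        fp = sum(1 for t, p in zip(y_true, y_pred) if t != c and p == c)
        fn = sum(1 for t, p in zip(y_true, y_pred) if t == c and p != c)
        counts[c] = (tp, fp, fn)
    return counts


def safe_div(numerator, denominator):
    """Division that returns 0.0 for an empty denominator (assumed convention)."""
    return numerator / denominator if denominator else 0.0


def micro_average(counts):
    """Pool the counts over all classes, then compute precision and recall."""
    tp = sum(c[0] for c in counts.values())
    fp = sum(c[1] for c in counts.values())
    fn = sum(c[2] for c in counts.values())
    return safe_div(tp, tp + fp), safe_div(tp, tp + fn)


def macro_average(counts):
    """Compute precision and recall per class, then average them."""
    precisions = [safe_div(tp, tp + fp) for tp, fp, fn in counts.values()]
    recalls = [safe_div(tp, tp + fn) for tp, fp, fn in counts.values()]
    return sum(precisions) / len(precisions), sum(recalls) / len(recalls)


# 95 samples of Class 1, 5 of Class 2; the model predicts Class 1 for everything.
y_true = [1] * 95 + [2] * 5
y_pred = [1] * 100

counts = per_class_counts(y_true, y_pred, classes=[1, 2])
micro_p, micro_r = micro_average(counts)
macro_p, macro_r = macro_average(counts)

print(f"micro: precision={micro_p:.3f} recall={micro_r:.3f}")  # 0.950, 0.950
print(f"macro: precision={macro_p:.3f} recall={macro_r:.3f}")  # 0.475, 0.500
```

The micro averages hide the complete failure on Class 2, while the macro averages roughly halve, which makes the problem visible.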

Precision vs Recall: Use Cases

As highlighted earlier, choosing between recall and precision requires prioritization, as favoring one metric causes the other to fall. Let’s look at a few CV use cases to help you decide between the two.

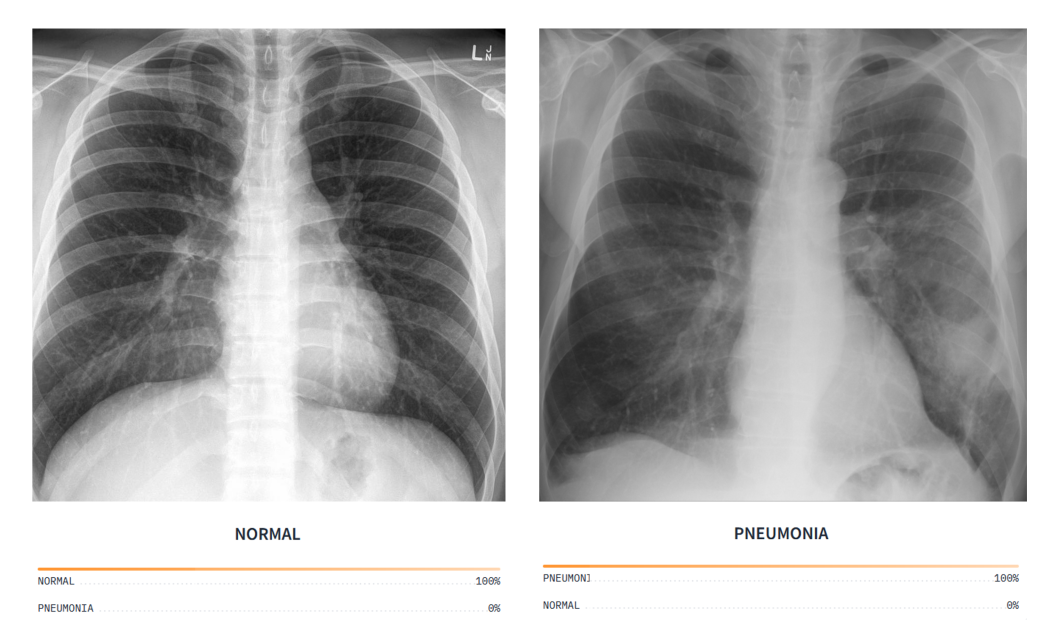

Medical Diagnosis

In medical diagnosis, missing even one case can have devastating consequences. CV models for analyzing medical images should generally have high recall so that they correctly identify as many actual positives as possible, even if that leads to some false positives and lower precision.

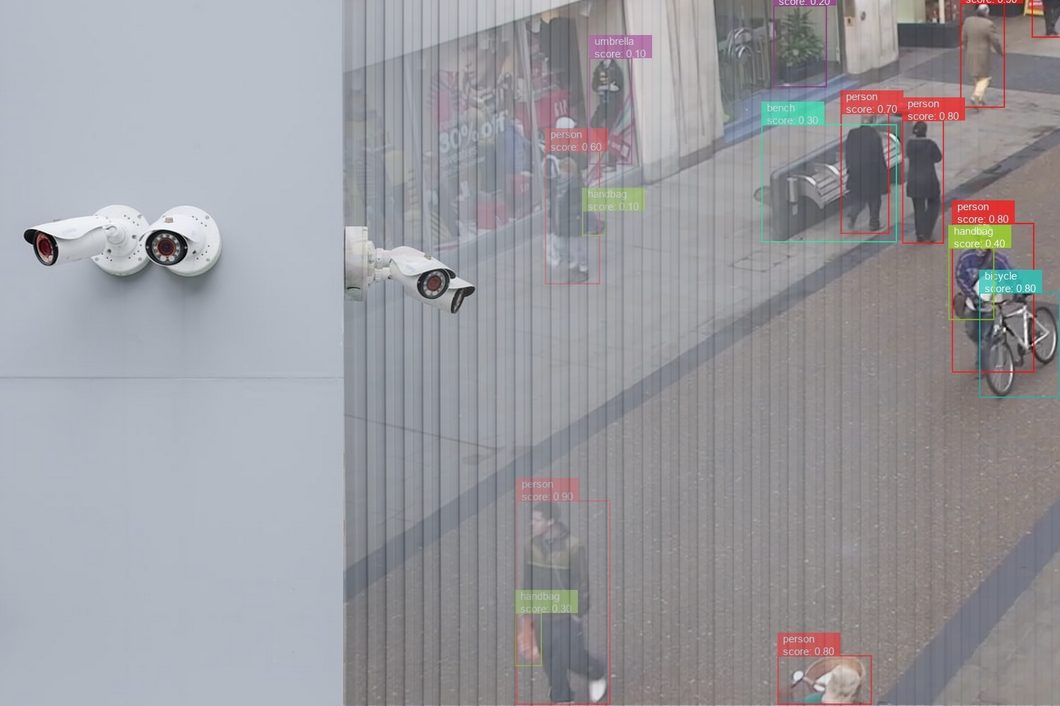

Security Systems

Security systems involve flagging anomalous behavior to alert authorities about potential threats. Again, high recall is desirable, as capturing as many suspects as possible (high false positives) is better than missing actual culprits.

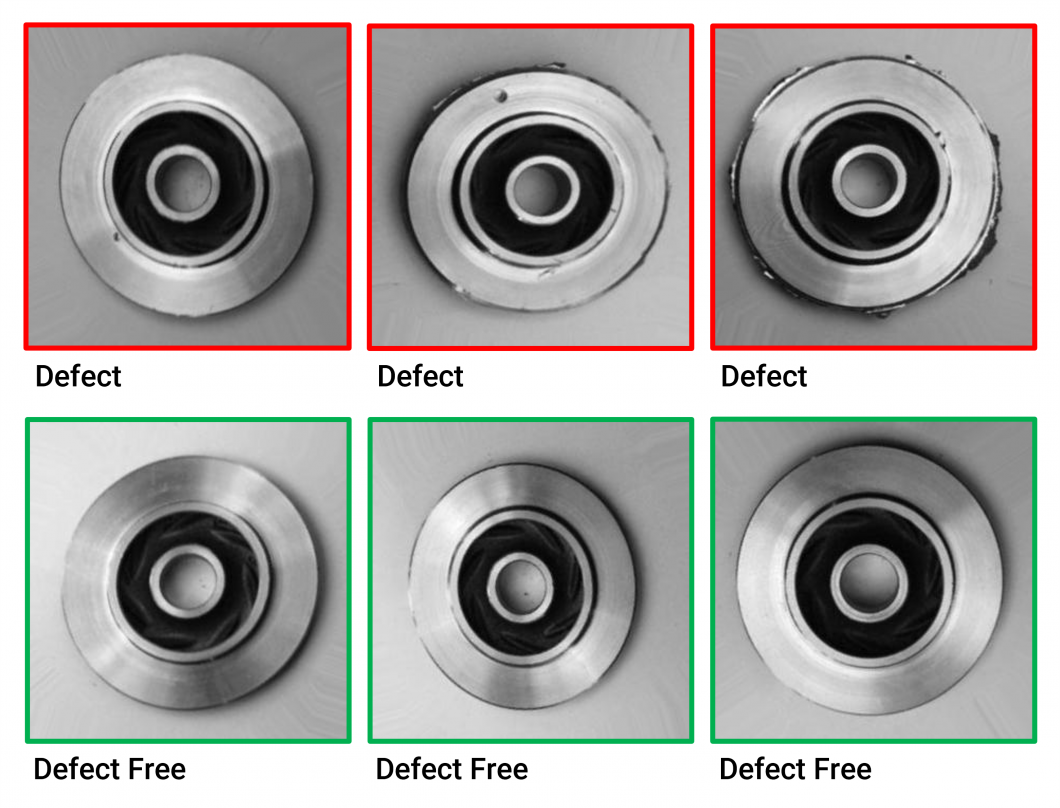

Quality Assurance

In manufacturing, CV models help detect defective products on the assembly line to ensure they do not reach the customer. These models should have high recall, since classifying a faulty product as normal (a false negative) can cost a company its customers if they receive defective items.

Image Retrieval Systems

CV models for image retrieval analyze a user’s search query to fetch images that match the query’s description. Having a model with high precision (low false positives) is crucial for producing relevant results and ensuring users easily find what they are searching for.

Facial Recognition

Devices using facial recognition models to authenticate users should have high precision (low false positives) to minimize unauthorized access.

Overall, the choice between recall and precision is context-specific and based on the consequences that false positives or false negatives can cause.

Precision vs. Recall: Key Takeaways

Precision and recall are vital machine learning metrics for assessing a model’s predictive performance. Below are a few critical points to remember regarding these two measures.

- Precision and recall are desirable for skewed datasets: While accuracy is appropriate for balanced datasets, precision and recall offer a better evaluation for models trained on imbalanced data.

- Precision and recall trade-off: Increasing precision tends to lower recall and vice versa. You should choose a model with optimal precision and recall based on the F1 score, PR curve, and ROC-AUC.

- Multi-class precision and recall: Macro and micro-averaging are two strategies for computing precision and recall for multi-class classification fashions.


Using Viso.ai for Model Evaluation

Evaluating CV models can be challenging due to their increasing prevalence in multiple industrial domains such as healthcare, manufacturing, retail, and security.

Also, building effective evaluation pipelines from scratch is tedious and prone to errors. Additionally, you need domain-level expertise to choose the right evaluation methodology for assessing model performance on a specific task.

A more efficient method is to use tools that help you build and evaluate CV models through automated features and with minimal manual effort.

And that’s where the Viso Suite platform comes in. The platform is an end-to-end no-code solution that lets you build, evaluate, and monitor CV models through robust pipelines and intuitive dashboards.

So, request a demo now to boost your model performance.