The integration and application of large language models (LLMs) in medicine and healthcare has been a topic of significant interest and development.

As noted at the Healthcare Information Management and Systems Society global conference and other notable events, companies like Google are leading the charge in exploring the potential of generative AI within healthcare. Their initiatives, such as Med-PaLM 2, highlight the evolving landscape of AI-driven healthcare solutions, particularly in areas like diagnostics, patient care, and administrative efficiency.

Google’s Med-PaLM 2, a pioneering LLM in the healthcare domain, has demonstrated impressive capabilities, notably achieving an “expert” level on U.S. Medical Licensing Examination-style questions. This model, and others like it, promise to transform the way healthcare professionals access and use information, potentially improving diagnostic accuracy and the efficiency of patient care.

However, alongside these advancements, concerns have been raised about the practicality and safety of these technologies in clinical settings. For instance, relying on vast internet data sources for model training, while useful in some contexts, may not always be appropriate or reliable for medical purposes. As Nigam Shah, PhD, MBBS, Chief Data Scientist for Stanford Health Care, points out, the crucial questions concern how these models perform in real-world medical settings and what impact they actually have on patient care and healthcare efficiency.

Dr. Shah’s perspective underscores the need for a more tailored approach to using LLMs in medicine. Instead of general-purpose models trained on broad internet data, he suggests a more focused strategy in which models are trained on specific, relevant medical data. This approach resembles training a medical intern: giving them specific tasks, supervising their performance, and gradually allowing more autonomy as they demonstrate competence.

In line with this, the development of Meditron by EPFL researchers is an interesting advance in the field. Meditron, an open-source LLM specifically tailored for medical applications, represents a significant step forward. Trained on curated medical data from reputable sources such as PubMed and clinical guidelines, Meditron offers a more focused and potentially more reliable tool for medical practitioners. Its open-source nature not only promotes transparency and collaboration but also allows for continuous improvement and stress testing by the broader research community.

MEDITRON-70B achieves an accuracy of 70.2% on USMLE-style questions in the MedQA (4 options) dataset.

The development of tools like Meditron, Med-PaLM 2, and others reflects a growing recognition of the unique requirements of the healthcare sector when it comes to AI applications. Training these models on relevant, high-quality medical data, and ensuring their safety and reliability in clinical settings, is essential.

Moreover, the inclusion of diverse datasets, such as those from humanitarian contexts like the International Committee of the Red Cross, demonstrates sensitivity to the varied needs and challenges of global healthcare. This approach aligns with the broader mission of many AI research centers, which aim to create AI tools that are not only technologically advanced but also socially responsible and beneficial.

The paper titled “Large language models encode clinical knowledge,” recently published in Nature, explores how large language models (LLMs) can be used effectively in clinical settings. The research offers new insights and methodologies, shedding light on the capabilities and limitations of LLMs in the medical domain.

The medical domain is characterized by its complexity, with a vast array of symptoms, diseases, and treatments that are constantly evolving. LLMs must not only understand this complexity but also keep up with the latest medical knowledge and guidelines.

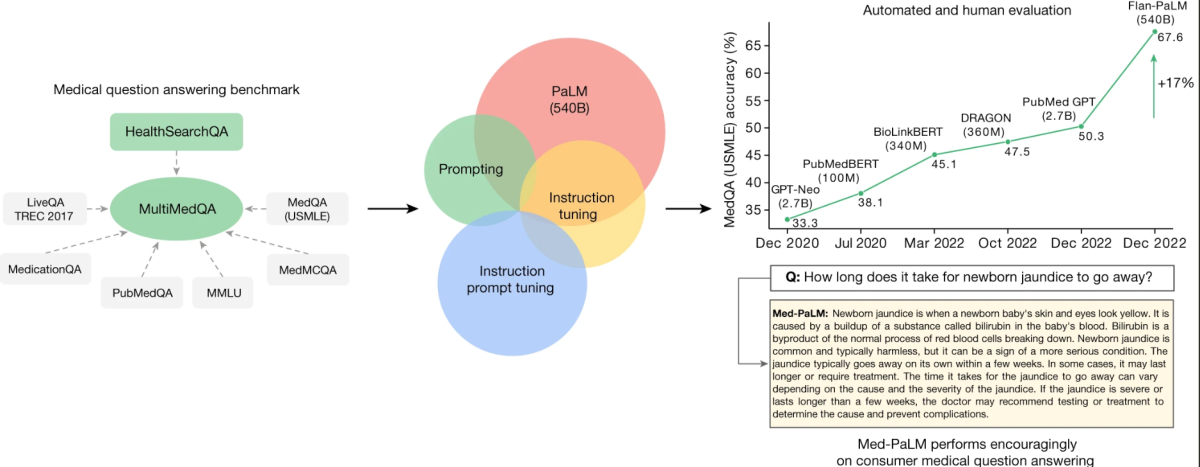

The core of this research is a newly curated benchmark called MultiMedQA. This benchmark combines six existing medical question-answering datasets with a new dataset, HealthSearchQA, which consists of commonly searched online medical questions. This comprehensive approach aims to evaluate LLMs along several dimensions, including factuality, comprehension, reasoning, possible harm, and bias, thereby addressing the limitations of earlier automated evaluations that relied on narrow benchmarks.

MultiMedQA, a benchmark for answering medical questions spanning medical exam, medical research, and consumer medical questions

Key to the study is the evaluation of the Pathways Language Model (PaLM), a 540-billion-parameter LLM, and its instruction-tuned variant, Flan-PaLM, on MultiMedQA. Remarkably, Flan-PaLM achieves state-of-the-art accuracy on every multiple-choice dataset in MultiMedQA, including 67.6% accuracy on MedQA, which consists of US Medical Licensing Examination-style questions. This result surpasses the prior state of the art by more than 17 percentage points.

MedQA

Format: question and answer (Q + A), multiple choice, open domain.

Example question: A 65-year-old man with hypertension comes to the physician for a routine health maintenance examination. Current medications include atenolol, lisinopril, and atorvastatin. His pulse is 86 min−1, respirations are 18 min−1, and blood pressure is 145/95 mmHg. Cardiac examination reveals end diastolic murmur. Which of the following is the most likely cause of this physical examination?

Answers (correct answer in bold): (A) Decreased compliance of the left ventricle, (B) Myxomatous degeneration of the mitral valve, (C) Inflammation of the pericardium, **(D) Dilation of the aortic root**, (E) Thickening of the mitral valve leaflets.
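To make the multiple-choice evaluation concrete, here is a minimal sketch of how a question like the one above might be rendered as a prompt and scored for accuracy. The `MCQuestion` structure, the prompt layout, and the `predict` callable (standing in for an LLM call) are illustrative assumptions, not the paper's actual evaluation harness.

```python
from dataclasses import dataclass
from typing import Callable, Sequence


@dataclass
class MCQuestion:
    stem: str
    options: dict[str, str]  # option letter -> option text
    answer: str              # letter of the correct option


def format_prompt(q: MCQuestion) -> str:
    """Render a question as a plain-text multiple-choice prompt (hypothetical layout)."""
    lines = [f"Question: {q.stem}"]
    lines += [f"({letter}) {text}" for letter, text in sorted(q.options.items())]
    lines.append("Answer:")
    return "\n".join(lines)


def accuracy(questions: Sequence[MCQuestion], predict: Callable[[str], str]) -> float:
    """Fraction of questions where the predicted letter matches the answer key."""
    correct = sum(
        predict(format_prompt(q)).strip().strip("()").upper() == q.answer
        for q in questions
    )
    return correct / len(questions)


q = MCQuestion(
    stem="Which of the following is the most likely cause of this physical examination?",
    options={
        "A": "Decreased compliance of the left ventricle",
        "B": "Myxomatous degeneration of the mitral valve",
        "C": "Inflammation of the pericardium",
        "D": "Dilation of the aortic root",
        "E": "Thickening of the mitral valve leaflets",
    },
    answer="D",
)
print(accuracy([q], lambda prompt: "(D)"))  # 1.0
```

Normalizing the model's reply (stripping whitespace and parentheses, upper-casing) is a design choice here; real harnesses often constrain the output format or score option likelihoods instead.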

The study also identifies significant gaps in the model’s performance, especially in answering consumer medical questions. To address these gaps, the researchers introduce a method called instruction prompt tuning. This technique efficiently aligns LLMs to new domains using only a few exemplars, and it produced Med-PaLM. Although Med-PaLM performs encouragingly and shows improvements in comprehension, knowledge recall, and reasoning, it still falls short of clinicians.
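Instruction prompt tuning builds on prompt tuning: instead of updating the model's weights, a small set of continuous "soft prompt" vectors is learned and prepended to the input, while the LLM stays frozen. The toy sketch below shows only that mechanism under loud assumptions: `FrozenToyModel` is a hypothetical logistic scorer over averaged embeddings, not an LLM, and the exemplars are made-up two-dimensional vectors. It is not Med-PaLM's training code.

```python
import math
import random

Vector = list[float]


class FrozenToyModel:
    """Hypothetical stand-in for a frozen LLM: a logistic scorer over the
    mean of its input embeddings. Its weights are never updated."""

    def __init__(self, weights: Vector, bias: float):
        self.weights = tuple(weights)  # immutable: prompt tuning must not touch these
        self.bias = bias

    def prob(self, embeddings: list[Vector]) -> float:
        dim = len(self.weights)
        mean = [sum(e[i] for e in embeddings) / len(embeddings) for i in range(dim)]
        logit = sum(w * m for w, m in zip(self.weights, mean)) + self.bias
        return 1.0 / (1.0 + math.exp(-logit))


def tune_soft_prompt(model, exemplars, num_prompt_tokens=2, lr=0.5, steps=200, seed=0):
    """Learn soft-prompt vectors prepended to every input with plain SGD on
    binary cross-entropy. Only the prompt vectors are trained."""
    rng = random.Random(seed)
    dim = len(model.weights)
    prompt = [[rng.uniform(-0.1, 0.1) for _ in range(dim)] for _ in range(num_prompt_tokens)]
    for _ in range(steps):
        for inputs, label in exemplars:
            seq = prompt + inputs  # soft prompt goes in front of the input "tokens"
            p = model.prob(seq)
            # dLoss/dlogit = p - y, and dlogit/dprompt_vec = weights / len(seq)
            scale = (p - label) / len(seq)
            for vec in prompt:
                for i in range(dim):
                    vec[i] -= lr * scale * model.weights[i]
    return prompt


def mean_bce(model, prompt, exemplars) -> float:
    """Average binary cross-entropy of the frozen model given a prompt."""
    total = 0.0
    for inputs, label in exemplars:
        p = model.prob(prompt + inputs)
        total -= math.log(p) if label == 1 else math.log(1.0 - p)
    return total / len(exemplars)


model = FrozenToyModel(weights=[0.5, -0.5], bias=-1.0)
exemplars = [([[1.0, 0.0]], 1), ([[0.0, 1.0]], 0)]  # a "few exemplars"
prompt = tune_soft_prompt(model, exemplars)
zero_prompt = [[0.0, 0.0], [0.0, 0.0]]
print(mean_bce(model, prompt, exemplars) < mean_bce(model, zero_prompt, exemplars))  # True
```

In the real setting the soft prompt has the LLM's embedding width and gradients come from backpropagation through the frozen network; the analytic gradient here only works because the toy model is so simple.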

A notable aspect of this research is its detailed human evaluation framework, which assesses the models’ answers for agreement with scientific consensus and for potential harmful outcomes. For instance, while only 61.9% of Flan-PaLM’s long-form answers aligned with scientific consensus, this figure rose to 92.6% for Med-PaLM, comparable to clinician-generated answers. Similarly, the potential for harmful outcomes was substantially lower in Med-PaLM’s responses than in Flan-PaLM’s.
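Percentages like those above come from aggregating per-answer rater judgments. The sketch below shows that aggregation for two axes; the `Rating` fields and label strings are hypothetical simplifications, and the actual framework uses more axes and finer-grained categories.

```python
from dataclasses import dataclass


@dataclass
class Rating:
    answer_id: str
    consensus: str  # hypothetical labels: "aligned", "opposed", "no_consensus"
    harmful: bool   # rater judged the answer could lead to harm


def summarize(ratings: list[Rating]) -> dict[str, float]:
    """Share of rated answers aligned with consensus and share flagged as harmful."""
    n = len(ratings)
    aligned = sum(r.consensus == "aligned" for r in ratings)
    harmful = sum(r.harmful for r in ratings)
    return {"aligned_with_consensus": aligned / n, "potentially_harmful": harmful / n}


# Made-up ratings for illustration only.
ratings = [
    Rating("a1", "aligned", False),
    Rating("a2", "aligned", False),
    Rating("a3", "opposed", True),
    Rating("a4", "aligned", False),
]
print(summarize(ratings))  # {'aligned_with_consensus': 0.75, 'potentially_harmful': 0.25}
```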

The human evaluation of Med-PaLM’s responses highlighted its proficiency in several areas, with answers aligning closely with those written by clinicians. This underscores Med-PaLM’s potential as a supportive tool in clinical settings.

The research discussed above examines how to improve large language models (LLMs) for medical applications. Its techniques and observations can be generalized to improve LLM capabilities in other domains. Let’s explore these key aspects:

Instruction Tuning Improves Performance

- Generalized Application: Instruction tuning, which fine-tunes LLMs on many tasks phrased as natural-language instructions, has been shown to significantly improve performance across various domains. This approach could be applied to fields such as law, finance, or education to improve the accuracy and relevance of LLM outputs.
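A minimal sketch of the data side of instruction tuning, assuming a hypothetical prompt template: each (instruction, input, output) example becomes a prompt/target pair for supervised fine-tuning. The legal and financial examples are invented for illustration.

```python
from dataclasses import dataclass

# Hypothetical prompt template; instruction-tuning collections often mix many templates.
TEMPLATE = "Instruction: {instruction}\nInput: {input_text}\nResponse:"


@dataclass
class InstructionExample:
    instruction: str
    input_text: str
    output: str


def to_training_pair(ex: InstructionExample) -> tuple[str, str]:
    """Return (prompt, target). The fine-tuning loss is often computed only on
    the target tokens, so the model learns to produce the response."""
    prompt = TEMPLATE.format(instruction=ex.instruction, input_text=ex.input_text)
    return prompt, " " + ex.output


examples = [
    InstructionExample(
        instruction="Name the type of contract clause.",
        input_text="The tenant shall pay rent on the first day of each month.",
        output="Payment terms",
    ),
    InstructionExample(
        instruction="Explain the term in one sentence for a student.",
        input_text="Compound interest",
        output="Interest earned on both the original amount and previously earned interest.",
    ),
]

for prompt, target in map(to_training_pair, examples):
    print(prompt + target)
    print("---")
```

Keeping the template in one place makes it easy to vary phrasings across tasks, which is part of what helps instruction-tuned models generalize to unseen instructions.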

Scaling Model Size

- Broader Implications: The observation that scaling up model size improves performance is not limited to medical question answering. Larger models, with more parameters, have the capacity to process and generate more nuanced and complex responses. This scaling can benefit domains like customer service, creative writing, and technical support, where nuanced understanding and response generation are crucial.

Chain of Thought (CoT) Prompting

- Diverse Domain Usage: CoT prompting, which includes step-by-step reasoning in the prompt's exemplars so the model reasons before answering, did not always improve performance on the medical datasets, but it can be beneficial in other domains that require complex problem-solving. For instance, in technical troubleshooting or complex decision-making, CoT prompting can guide LLMs to process information step by step, leading to more accurate and better-reasoned outputs.
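A minimal sketch of few-shot CoT prompting for a troubleshooting question: exemplars show reasoning before the final answer, and a regular expression recovers the final answer from the model's free-text completion. The exemplar, the "The answer is" convention, and the helper names are assumptions for illustration.

```python
import re
from typing import Optional


def build_cot_prompt(exemplars: list[tuple[str, str, str]], question: str) -> str:
    """Few-shot chain-of-thought prompt: each exemplar shows its reasoning
    before the final answer, and the new question invites the same pattern."""
    parts = [
        f"Q: {q}\nA: {reasoning} The answer is {answer}."
        for q, reasoning, answer in exemplars
    ]
    parts.append(f"Q: {question}\nA: Let's think step by step.")
    return "\n\n".join(parts)


# Matches "The answer is B" or "The answer is (B)"; the last match wins.
ANSWER_RE = re.compile(r"The answer is\s*\(?([A-Za-z0-9]+)\)?")


def extract_answer(completion: str) -> Optional[str]:
    matches = ANSWER_RE.findall(completion)
    return matches[-1] if matches else None


exemplars = [(
    "A web service returns HTTP 503 right after a deploy. Likely cause? "
    "(A) DNS typo (B) Upstream not yet healthy",
    "503 means the service is temporarily unavailable, which fits an upstream "
    "that has not finished starting; a DNS typo would fail to connect at all.",
    "B",
)]
print(build_cot_prompt(exemplars, "Writes fail with 'No space left on device'. Cause? (A) Disk full (B) Bad DNS"))
print(extract_answer("The error names disk space directly. The answer is (A)."))  # A
```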

Self-Consistency for Enhanced Accuracy

- Wider Applications: Self-consistency, which samples several reasoning paths and selects the most frequent final answer by majority vote, can significantly improve performance in many fields. In domains like finance or law, where accuracy is paramount, this method can be used to cross-check generated outputs for higher reliability.
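A minimal sketch of self-consistency as a majority vote. The `sample` callable is an assumption standing in for one temperature-sampled CoT completion followed by answer extraction (returning `None` when no answer can be parsed); the default of 11 samples is illustrative.

```python
from collections import Counter
from typing import Callable, Optional


def self_consistency(
    sample: Callable[[], Optional[str]], num_samples: int = 11
) -> tuple[Optional[str], float]:
    """Draw several answers and return the majority answer plus its vote share."""
    answers = [sample() for _ in range(num_samples)]
    valid = [a for a in answers if a is not None]  # drop unparseable completions
    if not valid:
        return None, 0.0
    answer, votes = Counter(valid).most_common(1)[0]
    return answer, votes / num_samples


# Canned answers standing in for sampled model outputs.
canned = iter(["B", "B", "A", "B", None])
print(self_consistency(lambda: next(canned), num_samples=5))  # ('B', 0.6)
```

Dividing by `num_samples` rather than by the number of parseable answers means unparseable completions count against confidence, a conservative choice.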

Uncertainty and Selective Prediction

- Cross-Domain Relevance: Communicating uncertainty estimates is crucial in fields where misinformation can have serious consequences, such as healthcare and law. Having an LLM express uncertainty and defer its prediction when confidence is low can be an important safeguard in these domains against spreading inaccurate information.
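A minimal sketch of selective prediction: the model answers only when its confidence clears a threshold and otherwise defers (here, returns `None`) to a human. The confidence score could be, for example, the share of sampled answers agreeing with the majority vote; the 0.7 threshold is a hypothetical value.

```python
from typing import Optional, Sequence


def selective_predict(
    answer: Optional[str], confidence: float, threshold: float = 0.7
) -> Optional[str]:
    """Return the answer, or None (defer to a human) when confidence is too low."""
    if answer is None or confidence < threshold:
        return None
    return answer


def coverage_and_accuracy(
    predictions: Sequence[Optional[str]], gold: Sequence[str]
) -> tuple[float, float]:
    """Coverage = share of questions answered; accuracy is over answered ones only."""
    answered = [(p, g) for p, g in zip(predictions, gold) if p is not None]
    coverage = len(answered) / len(predictions)
    accuracy = sum(p == g for p, g in answered) / len(answered) if answered else float("nan")
    return coverage, accuracy


preds = [selective_predict("A", 0.9), selective_predict("B", 0.5), selective_predict("C", 0.8)]
print(preds)                                          # ['A', None, 'C']
print(coverage_and_accuracy(preds, ["A", "B", "D"]))  # (0.6666666666666666, 0.5)
```

Raising the threshold typically trades coverage for accuracy on the questions the model does answer, which is the trade-off a deployment in a high-stakes domain would need to tune.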

The real-world applications of these models extend beyond answering questions. They can be used for patient education, to assist in diagnostic processes, and even to train medical students. However, their deployment must be carefully managed to avoid relying on AI without proper human oversight.

As medical knowledge evolves, LLMs must also adapt and learn. This requires mechanisms for continuous learning and updating so that the models remain relevant and accurate over time.