The Segment Anything Model (SAM), a recent innovation by Meta’s FAIR (Fundamental AI Research) lab, represents a pivotal shift in computer vision. This state-of-the-art instance segmentation model showcases a groundbreaking ability to perform complex image segmentation tasks with unprecedented precision and versatility.

Unlike traditional models that require extensive training on specific tasks, the Segment Anything project takes a more adaptable approach. Its creators took inspiration from recent developments in natural language processing (NLP) with foundation models.

SAM’s game-changing impact lies in its zero-shot inference capabilities. This means that SAM can accurately segment images without prior task-specific training, something that traditionally requires tailored models. This leap forward is due to the influence of foundation models in NLP, such as GPT and BERT.

These models revolutionized how machines understand and generate human language by learning from vast amounts of data, allowing them to generalize across various tasks. SAM applies a similar philosophy to computer vision, using a large dataset to understand and segment a wide variety of images.

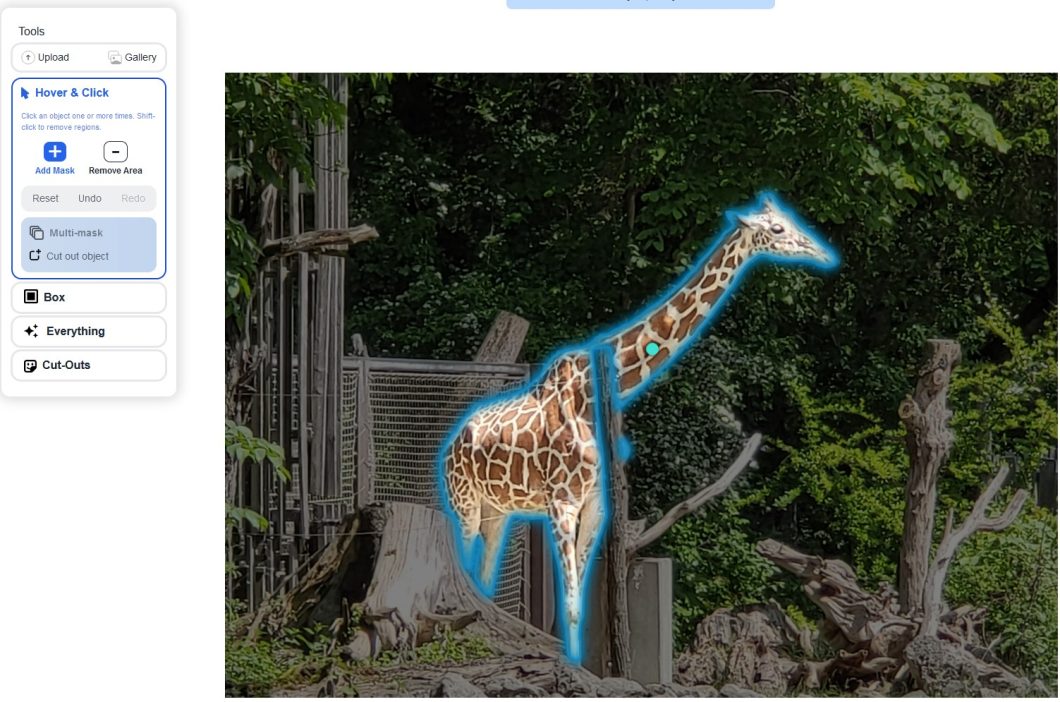

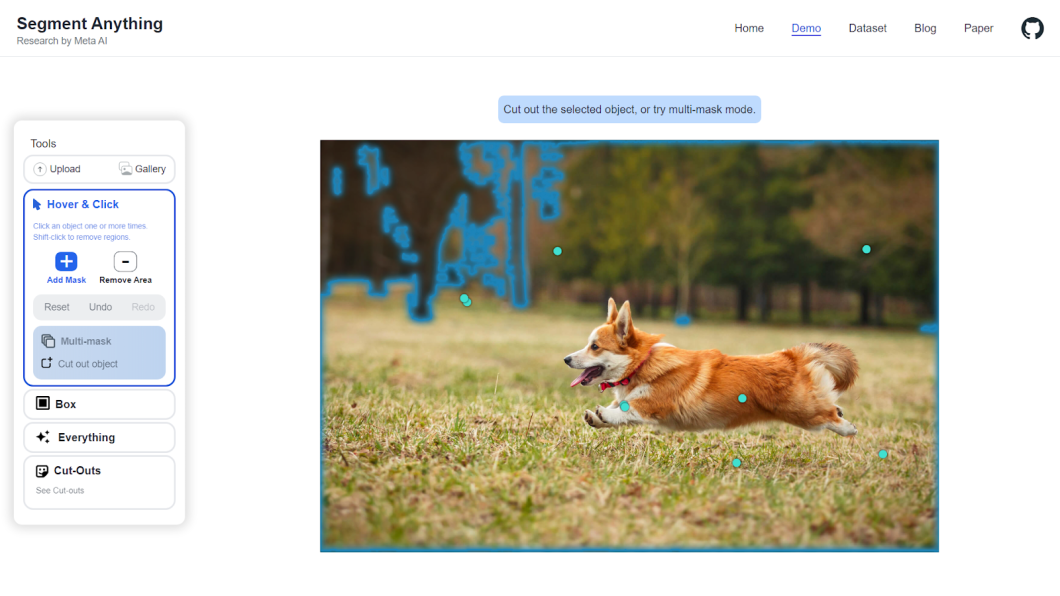

One of its standout features is the ability to process multiple prompts. You can hover or click on elements, drag boxes around them, automatically segment everything, and create custom masks or cut-outs.

While effective in specific areas, earlier models often needed extensive retraining to adapt to new or varied tasks. Thus, SAM is a significant shift in making these models more flexible and efficient, setting a new benchmark for computer vision.

Computer Vision at Meta: A Brief History

Meta, formerly known as Facebook, has been a key player in advancing AI and computer vision technologies. The journey began with foundational work in machine learning, leading to significant contributions that have shaped today’s AI landscape.

Over the years, Meta has released several influential models and tools. The development of PyTorch, a popular open-source machine learning library, marked a significant milestone, offering researchers and developers a flexible platform for AI experimentation and deployment.

The introduction of the Segment Anything Model (SAM) represents a culmination of these efforts, standing as a testament to Meta’s ongoing commitment to innovation in AI. SAM’s sophisticated approach to image segmentation demonstrates a leap forward in the company’s AI capabilities, showcasing advanced understanding and manipulation of visual data.

Today, the computer vision project has gained enormous momentum in mobile applications, automated image annotation tools, and facial recognition and image classification applications.

Segment Anything Model (SAM) Network Architecture and Design

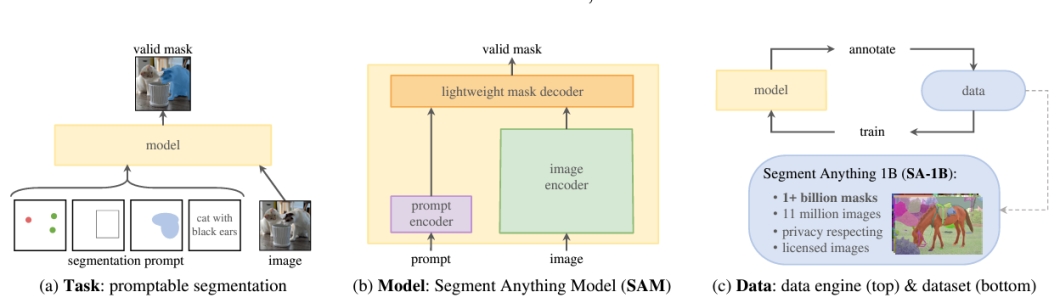

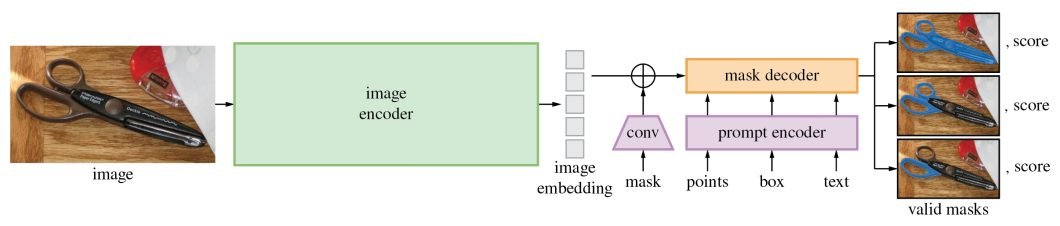

SAM’s revolutionary capabilities are largely due to its innovative architecture. It is made up of three main components: the image encoder, the prompt encoder, and the mask decoder.

Image Encoder

- The image encoder is at the core of SAM’s architecture, a sophisticated component responsible for processing and transforming input images into a comprehensive set of features.

- Using a transformer-based approach, like that seen in advanced NLP models, this encoder compresses images into a dense feature matrix. This matrix forms the foundational understanding from which the model identifies various image elements.

Prompt Encoder

- The prompt encoder is a unique aspect of SAM that sets it apart from traditional image segmentation models.

- It interprets various forms of input prompts, be they text-based, points, rough masks, or a combination thereof.

- This encoder translates these prompts into an embedding that guides the segmentation process. This enables the model to focus on specific areas or objects within an image as the input dictates.
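
To make the idea of "translating prompts into an embedding" concrete, here is a minimal sketch in plain Python. The class names (`PointPrompt`, `BoxPrompt`), the function `encode_prompt`, and the five-number vector layout are hypothetical and chosen for illustration only. SAM's actual prompt encoder uses learned embeddings, so treat this as a toy stand-in rather than the real method.

```python
from dataclasses import dataclass
from typing import List, Union


@dataclass
class PointPrompt:
    """A click at pixel (x, y); is_foreground marks include vs. exclude."""
    x: float
    y: float
    is_foreground: bool = True


@dataclass
class BoxPrompt:
    """A box given by its top-left (x0, y0) and bottom-right (x1, y1) corners."""
    x0: float
    y0: float
    x1: float
    y1: float


Prompt = Union[PointPrompt, BoxPrompt]


def encode_prompt(prompt: Prompt, width: int, height: int) -> List[float]:
    """Toy encoding (an assumption, not SAM's learned encoding):
    [type tag, then coordinates normalized to the range 0..1]."""
    if isinstance(prompt, PointPrompt):
        label = 1.0 if prompt.is_foreground else 0.0
        # type tag 0.0 = point; last slot is padding so all vectors share a length
        return [0.0, prompt.x / width, prompt.y / height, label, 0.0]
    if isinstance(prompt, BoxPrompt):
        # type tag 1.0 = box
        return [1.0, prompt.x0 / width, prompt.y0 / height,
                prompt.x1 / width, prompt.y1 / height]
    raise TypeError(f"unsupported prompt type: {type(prompt).__name__}")


point_vector = encode_prompt(PointPrompt(50, 25), width=100, height=50)
box_vector = encode_prompt(BoxPrompt(0, 0, 100, 50), width=100, height=50)
```

The point of the sketch is only that different prompt types end up in one common vector format, which is what lets a single decoder consume any of them.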

Mask Decoder

- The mask decoder is where the magic of segmentation takes place. It synthesizes the information from both the image and prompt encoders to produce accurate segmentation masks.

- This component is responsible for the final output, determining the precise contours and areas of each segment within the image.

How these components interact with each other is as vital for effective image segmentation as their individual capabilities:

- The image encoder first creates a detailed understanding of the entire image, breaking it down into features that the model can analyze.

- The prompt encoder then adds context, focusing the model’s attention based on the provided input, whether a simple point or a complex text description.

- Finally, the mask decoder uses this combined information to segment the image accurately, ensuring that the output aligns with the input prompt’s intent.
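
The three-step flow above can be sketched with a deliberately simple toy pipeline. Everything here is an assumption made for illustration: the function names (`encode_image`, `encode_point`, `decode_mask`) are hypothetical, the "features" are just pixel intensities, and the decoder is a basic region-growing routine rather than SAM's learned decoder. What the sketch does preserve is the structure: the image is encoded once, and that encoding is reused for several prompts.

```python
from collections import deque


def encode_image(image):
    """Toy image encoder: one float feature per pixel (its intensity)."""
    return [[float(value) for value in row] for row in image]


def encode_point(point):
    """Toy prompt encoder: turn a (row, col) click into a small record."""
    row, col = point
    return {"row": row, "col": col}


def decode_mask(features, prompt, tolerance=10.0):
    """Toy mask decoder: grow a 4-connected region from the prompted pixel,
    keeping pixels whose feature is within `tolerance` of the seed feature."""
    height, width = len(features), len(features[0])
    seed_row, seed_col = prompt["row"], prompt["col"]
    seed_value = features[seed_row][seed_col]
    mask = [[0] * width for _ in range(height)]
    mask[seed_row][seed_col] = 1
    queue = deque([(seed_row, seed_col)])
    while queue:
        row, col = queue.popleft()
        for d_row, d_col in ((1, 0), (-1, 0), (0, 1), (0, -1)):
            n_row, n_col = row + d_row, col + d_col
            inside = 0 <= n_row < height and 0 <= n_col < width
            if (inside and not mask[n_row][n_col]
                    and abs(features[n_row][n_col] - seed_value) <= tolerance):
                mask[n_row][n_col] = 1
                queue.append((n_row, n_col))
    return mask


image = [
    [0, 0, 0, 0],
    [0, 200, 200, 0],
    [0, 200, 200, 0],
    [0, 0, 0, 0],
]

features = encode_image(image)  # computed once per image
object_mask = decode_mask(features, encode_point((1, 1)))      # click on the bright square
background_mask = decode_mask(features, encode_point((0, 0)))  # click on the dark border
```

Separating the expensive image encoding from the cheap per-prompt decoding is what makes interactive use practical: each new click only reruns the last two steps.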

The Segment Anything Model Technical Backbone: Convolutional, Generative Networks, and More

Convolutional Neural Networks (CNNs) and Generative Adversarial Networks (GANs) play a foundational role in the capabilities of SAM. These deep learning models are central to the advancement of machine learning and AI, particularly in the realm of image processing. They provide the underpinning that makes SAM’s sophisticated image segmentation possible.

Convolutional Neural Networks (CNNs)

Feature extraction of the kind CNNs pioneered is integral to the image encoder of the Segment Anything Model architecture, which, as noted earlier, takes a transformer-based approach. CNNs excel at recognizing patterns in images by learning spatial hierarchies of features, from simple edges to more complex shapes.

In SAM, the encoder analyzes and interprets visual data in a similar spirit, efficiently processing pixels to detect and understand various features and objects within an image. This ability is crucial for the initial image analysis stage of SAM’s architecture.
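
As a small illustration of how a convolutional layer picks up "simple edges", the sketch below slides a fixed 3x3 Sobel kernel over a tiny image. It is a generic example and not code from SAM: the function name `conv2d` is hypothetical, the kernel is hand-written rather than learned, and the operation is the unflipped sliding-window product (cross-correlation) that deep learning frameworks conventionally call convolution.

```python
def conv2d(image, kernel):
    """Slide `kernel` over `image` (no padding, stride 1) and return the
    sum of elementwise products at every position."""
    kernel_h, kernel_w = len(kernel), len(kernel[0])
    out_h = len(image) - kernel_h + 1
    out_w = len(image[0]) - kernel_w + 1
    return [
        [
            sum(
                image[row + i][col + j] * kernel[i][j]
                for i in range(kernel_h)
                for j in range(kernel_w)
            )
            for col in range(out_w)
        ]
        for row in range(out_h)
    ]


# Horizontal-gradient Sobel kernel: responds to vertical edges.
SOBEL_X = [
    [-1, 0, 1],
    [-2, 0, 2],
    [-1, 0, 1],
]

# Dark left half, bright right half: one vertical edge down the middle.
edge_image = [[0, 0, 1, 1] for _ in range(4)]
flat_image = [[1, 1, 1, 1] for _ in range(4)]

edge_response = conv2d(edge_image, SOBEL_X)  # strong response at the edge
flat_response = conv2d(flat_image, SOBEL_X)  # zero response on a uniform region
```

In a trained CNN the kernel values are learned, and stacking many such layers is what produces the hierarchy from edges to shapes described above.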

Generative Adversarial Networks (GANs)

GANs contribute to SAM’s ability to generate precise segmentation masks. Consisting of two parts, the generator and the discriminator, GANs are adept at understanding and replicating complex data distributions.

The generator focuses on producing realistic images, and the discriminator evaluates these images to determine whether they are real or artificially created. This dynamic enhances the generator’s ability to create highly realistic synthetic images.
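
For reference, the generator-versus-discriminator dynamic described above is usually written as the minimax objective from the standard GAN formulation. The symbols are the conventional ones and do not come from this article: $G$ is the generator, $D$ the discriminator, $p_{\text{data}}$ the distribution of real images, and $p_z$ the noise distribution the generator samples from.

$$
\min_G \max_D \; \mathbb{E}_{x \sim p_{\text{data}}}\big[\log D(x)\big] + \mathbb{E}_{z \sim p_z}\big[\log\big(1 - D(G(z))\big)\big]
$$

The discriminator tries to maximize this value by scoring real images near 1 and generated images near 0, while the generator tries to minimize it by producing images that the discriminator scores as real.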

How CNNs and GANs Complement Each Other

The synergy between CNNs and GANs within SAM’s framework is vital. While CNNs provide a robust method for feature extraction and initial image analysis, GANs enhance the model’s ability to generate accurate and realistic segmentations.

This combination allows SAM to understand a wide array of visual inputs and respond with high precision. By integrating these technologies, SAM represents a significant leap forward, showcasing the potential of combining different neural network architectures for advanced AI applications.

CLIP (Contrastive Language-Image Pre-training)

CLIP, developed by OpenAI, is a model that bridges the gap between text and images.

CLIP’s ability to understand and interpret text prompts in relation to images is invaluable to how SAM works. It allows SAM to process and respond to text-based inputs, such as descriptions or labels, and relate them accurately to visual data.

This integration enhances SAM’s versatility, enabling it to segment images based on visual cues and to follow textual instructions.
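
The core mechanism behind relating text to images in a CLIP-style model is comparing embeddings in a shared vector space, typically with cosine similarity. The sketch below shows only that comparison step. The three-dimensional vectors and the names `text_embedding`, `region_embeddings`, and `best_match` are made up for illustration; real embeddings come from trained encoders and have hundreds of dimensions.

```python
import math


def cosine_similarity(a, b):
    """Cosine of the angle between vectors a and b (0.0 if either is all zeros)."""
    dot = sum(x * y for x, y in zip(a, b))
    norm = math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b))
    return dot / norm if norm else 0.0


def best_match(text_vector, candidates):
    """Return the name of the candidate whose embedding is closest to the text."""
    return max(candidates,
               key=lambda name: cosine_similarity(text_vector, candidates[name]))


# Hypothetical embeddings: one text prompt and two candidate image regions.
text_embedding = [1.0, 0.0, 0.1]
region_embeddings = {
    "region_a": [0.9, 0.1, 0.0],
    "region_b": [0.1, 0.8, 0.2],
}

selected_region = best_match(text_embedding, region_embeddings)
```

Here the text prompt points in nearly the same direction as `region_a`, so that region would be the one selected for segmentation.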

Transfer Learning and Pre-trained Models

Transfer learning involves using a model trained on one task as a foundation for another related task. Pre-trained models like ResNet, VGG, and EfficientNet, which have been extensively trained on large datasets, are prime examples of this.

ResNet is known for its deep network architecture, which addresses the vanishing gradient problem, allowing it to learn from a vast amount of visual data. VGG is admired for its simplicity and effectiveness in image recognition tasks. EfficientNet, on the other hand, provides a scalable and efficient architecture that balances model complexity and performance.

Using transfer learning and pre-trained models, SAM gains a head start in understanding complex image features. This is essential for its high accuracy and efficiency in image segmentation, and it makes SAM effective at adapting to new segmentation challenges.
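
One common form of transfer learning is to keep a pre-trained feature extractor frozen and train only a small new "head" on top of it. The sketch below shows that pattern at toy scale in plain Python. It makes several assumptions for illustration: `pretrained_features` is a hypothetical stand-in for a real backbone such as ResNet, the data are synthetic, and the head is a linear layer trained with full-batch gradient descent. It does not describe how SAM itself was trained.

```python
def pretrained_features(x):
    """Frozen feature extractor (stand-in for a pre-trained backbone).
    Nothing in here is updated during training."""
    return [x, x * x]


def predict(head, bias, x):
    """New task-specific head: a linear layer on top of the frozen features."""
    return sum(w * f for w, f in zip(head, pretrained_features(x))) + bias


def mse(head, bias, data):
    """Mean squared error of the head's predictions on (x, y) pairs."""
    return sum((predict(head, bias, x) - y) ** 2 for x, y in data) / len(data)


def train_head(data, steps=500, learning_rate=0.1):
    """Fit only the head's weights and bias with full-batch gradient descent."""
    head, bias = [0.0, 0.0], 0.0
    n = len(data)
    for _ in range(steps):
        grad_head, grad_bias = [0.0, 0.0], 0.0
        for x, y in data:
            error = predict(head, bias, x) - y
            for i, feature in enumerate(pretrained_features(x)):
                grad_head[i] += 2 * error * feature / n
            grad_bias += 2 * error / n
        head = [w - learning_rate * g for w, g in zip(head, grad_head)]
        bias -= learning_rate * grad_bias
    return head, bias


# Synthetic "new task": targets that the frozen features can express.
data = [(x, 2 * x * x + 1) for x in (-1.0, -0.5, 0.0, 0.5, 1.0)]

initial_loss = mse([0.0, 0.0], 0.0, data)
head, bias = train_head(data)
final_loss = mse(head, bias, data)
```

Because the features are reused rather than relearned, only three numbers are trained here, which is the "head start" that transfer learning provides.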

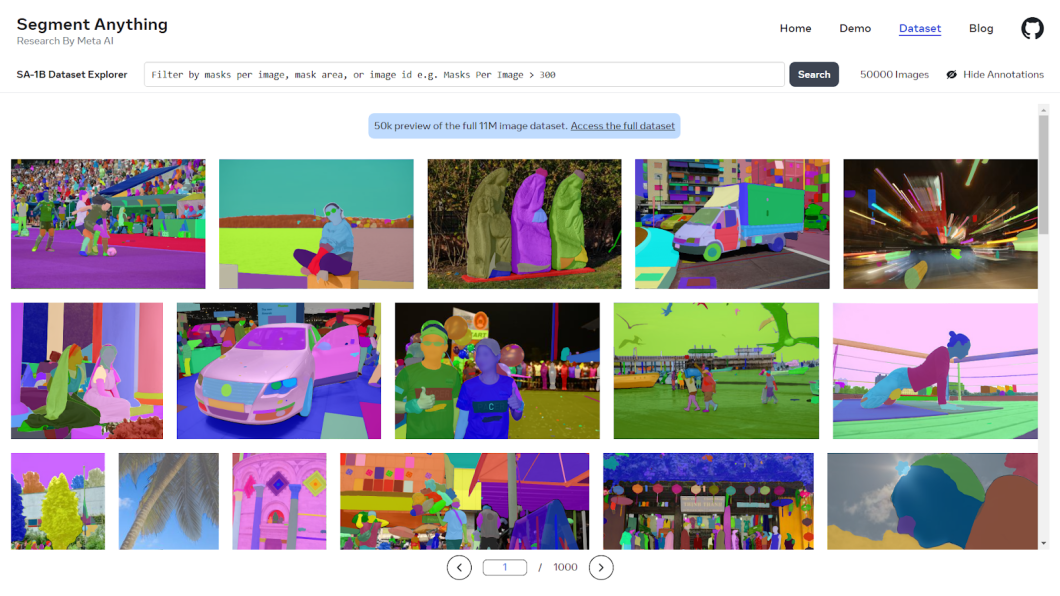

The SA-1B Dataset: A New Milestone in AI Training

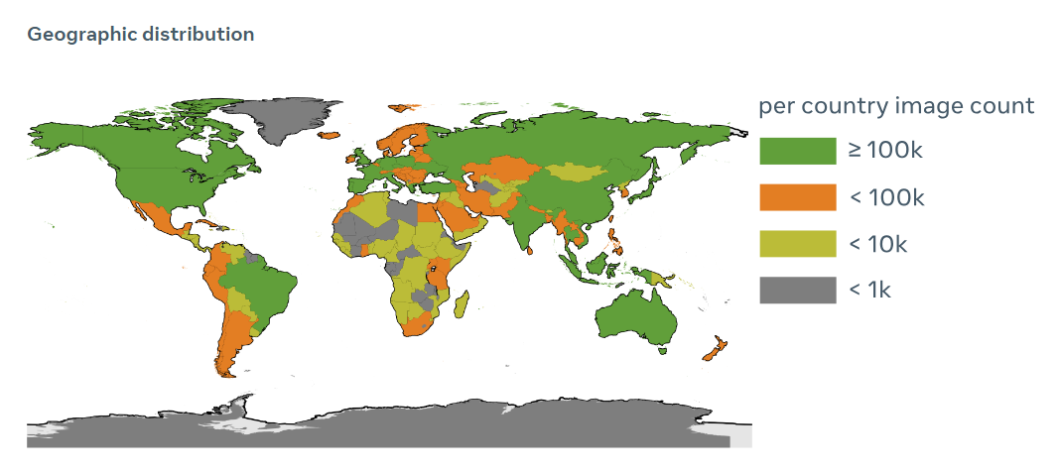

SAM’s true superpower is its training data, called the SA-1B Dataset. Short for Segment Anything 1 Billion, it is the most extensive and diverse image segmentation dataset available.

It encompasses over 1 billion high-quality segmentation masks derived from a vast array of 11 million images and covers a broad spectrum of scenarios, objects, and environments. SA-1B is an unparalleled, high-quality resource curated specifically for training segmentation models.

Creating this dataset took multiple stages of production:

- Assisted Manual: In this initial stage, human annotators work alongside the Segment Anything Model, ensuring that every mask in an image is accurately captured and annotated.

- Semi-Automatic: Annotators are tasked with focusing on areas where SAM is less confident, refining and supplementing the model’s predictions.

- Fully Automatic: SAM independently predicts segmentation masks in the final stage, showcasing its ability to handle complex and ambiguous scenarios with minimal human intervention.
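
The semi-automatic stage described above amounts to routing predictions by confidence: masks the model is sure about are kept, and the rest go to human annotators. A minimal sketch of that routing logic follows. The `PredictedMask` class, the `route_masks` function, and the 0.8 threshold are all hypothetical and chosen for illustration; they are not taken from the SA-1B pipeline.

```python
from dataclasses import dataclass
from typing import List, Tuple


@dataclass
class PredictedMask:
    mask_id: str
    confidence: float  # model's confidence in this mask, between 0.0 and 1.0


def route_masks(
    predictions: List[PredictedMask], threshold: float = 0.8
) -> Tuple[List[PredictedMask], List[PredictedMask]]:
    """Split predictions into (auto-accepted, needs human review).
    The threshold value is an illustrative assumption."""
    auto_accepted = [p for p in predictions if p.confidence >= threshold]
    needs_review = [p for p in predictions if p.confidence < threshold]
    return auto_accepted, needs_review


predictions = [
    PredictedMask("mask_1", 0.95),
    PredictedMask("mask_2", 0.40),
    PredictedMask("mask_3", 0.80),
]
accepted, review_queue = route_masks(predictions)
```

As the model improves, more masks clear the threshold and fewer reach annotators, which is how a pipeline like this can move toward the fully automatic stage.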

The vastness and variety of the SA-1B Dataset push the boundaries of what’s achievable in AI research. Such a dataset enables more robust training, allowing models like SAM to handle an unprecedented range of image segmentation tasks with high accuracy. It provides a rich pool of data that enhances the model’s learning, ensuring it can generalize well across different tasks and environments.

Segment Anything Model Practical Applications and Future Potential

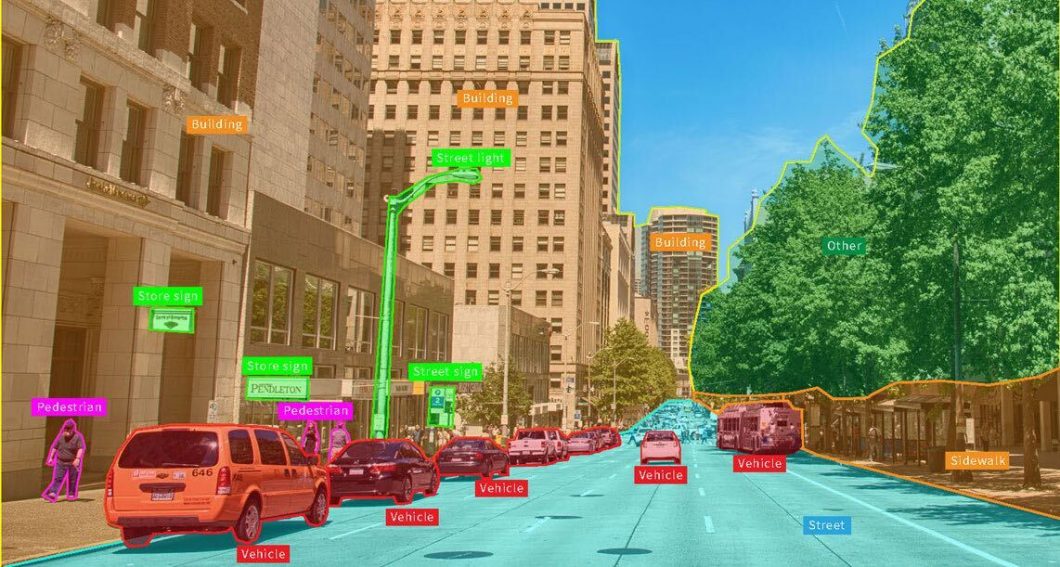

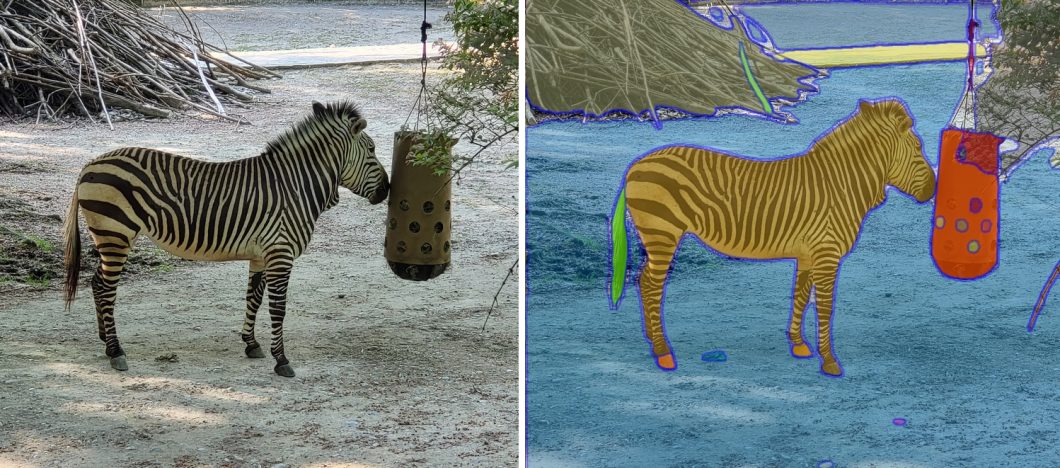

As a major leap forward in image segmentation, SAM can be used in a wide range of applications.

Here are some of the applications in which SAM is already making waves:

- AI-Assisted Labeling: SAM significantly streamlines the process of labeling images. It can automatically identify and segment objects within images, drastically reducing the time and effort required for manual annotation.

- Medicine Delivery: In healthcare, SAM’s precise segmentation capabilities enable the identification of specific regions for drug delivery, ensuring precision in treatment and minimizing side effects.

- Land Cover Mapping: SAM can be used to classify and map different types of land cover, enabling applications in urban planning, agriculture, and environmental monitoring.
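
In labeling and mapping workflows like those above, a segmentation mask is usually post-processed into something an annotation tool or a report can use. The sketch below shows two such steps on a binary mask: deriving a bounding box and computing the fraction of the image the mask covers. The function names `mask_to_bbox` and `coverage` are hypothetical, and the mask is a hand-written example rather than real model output.

```python
def mask_to_bbox(mask):
    """Return (x_min, y_min, x_max, y_max) of the nonzero pixels,
    or None if the mask is empty."""
    rows = [r for r, row in enumerate(mask) if any(row)]
    cols = [c for row in mask for c, value in enumerate(row) if value]
    if not rows:
        return None
    return (min(cols), min(rows), max(cols), max(rows))


def coverage(mask):
    """Fraction of pixels in the image that belong to the mask."""
    total_pixels = sum(len(row) for row in mask)
    mask_pixels = sum(1 for row in mask for value in row if value)
    return mask_pixels / total_pixels


mask = [
    [0, 0, 0, 0],
    [0, 1, 1, 0],
    [0, 1, 1, 0],
    [0, 0, 0, 0],
]

bbox = mask_to_bbox(mask)      # box annotation for a labeling tool
covered_fraction = coverage(mask)  # e.g. share of a tile covered by one land-cover class
```

A labeling tool could store `bbox` as an object annotation, while a land cover workflow might sum `covered_fraction` per class across image tiles.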

SAM’s potential extends beyond current applications. In fields like environmental monitoring, it could assist in analyzing satellite imagery for climate change studies or disaster response.

In retail, SAM could revolutionize inventory management through automated product recognition and categorization.

SAM’s adaptability and accuracy position it to be the new standard in image segmentation. Its ability to learn from a vast and diverse dataset means it can continuously improve and adapt to new challenges.

We can expect SAM to evolve with better real-time processing capabilities. With this evolution, we can expect to see SAM become ubiquitous in fields like autonomous vehicles and real-time surveillance. In the entertainment industry, we could see advancements in visual effects and augmented reality experiences.