Writing a report on the state of AI must feel a lot like building on shifting sands: by the time you hit publish, the whole industry has changed under your feet. But there are still important trends and takeaways in Stanford’s 386-page bid to summarize this complex and fast-moving domain.

The AI Index, from the Institute for Human-Centered Artificial Intelligence, worked with experts from academia and private industry to collect information and predictions on the matter. As a yearly effort (and by the size of it, you can bet they’re already hard at work laying out the next one), this may not be the freshest take on AI, but these periodic broad surveys are important to keep one’s finger on the pulse of the industry.

This year’s report includes “new analysis on foundation models, including their geopolitics and training costs, the environmental impact of AI systems, K-12 AI education, and public opinion trends in AI,” plus a look at policy in 100 new countries.

For the highest-level takeaways, let us just bullet them here:

- AI development has flipped over the last decade from academia-led to industry-led, by a large margin, and this shows no sign of changing.

- It’s becoming difficult to test models on traditional benchmarks and a new paradigm may be needed here.

- The energy footprint of AI training and use is becoming considerable, but we have yet to see how it may add efficiencies elsewhere.

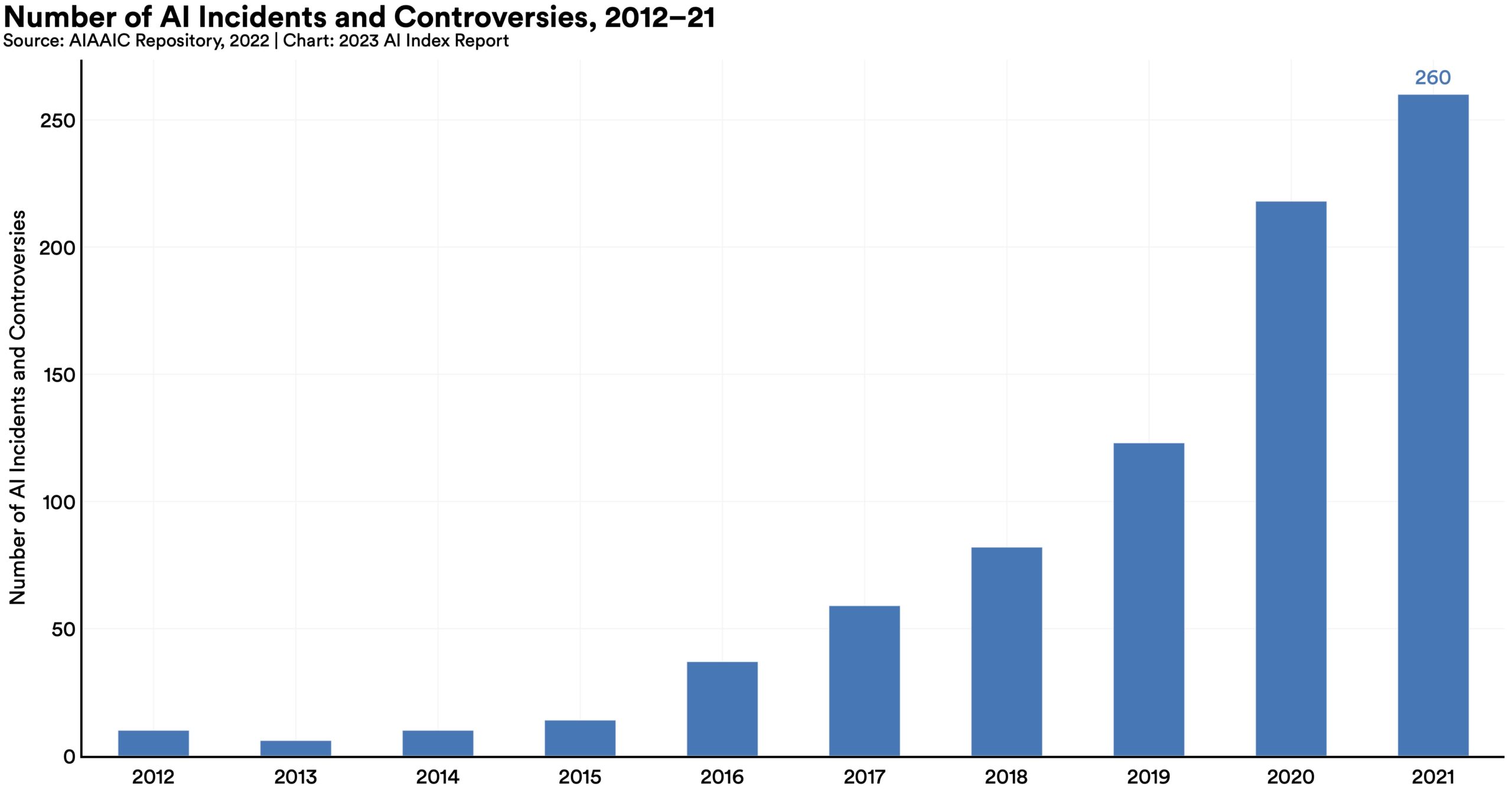

- The number of “AI incidents and controversies” has increased by a factor of 26 since 2012, which actually seems a bit low.

- AI-related skills and job postings are increasing, but not as fast as you’d think.

- Policymakers, however, are falling over themselves trying to write a definitive AI bill, a fool’s errand if there ever was one.

- Investment has temporarily stalled, but that’s after an astronomic increase over the last decade.

- More than 70% of Chinese, Saudi, and Indian respondents felt AI had more benefits than drawbacks. Americans? 35%.

But the report goes into detail on many topics and sub-topics, and is quite readable and non-technical. Only the dedicated will read all 300-odd pages of analysis, but really, just about any motivated body could.

Let’s look at Chapter 3, Technical AI Ethics, in a bit more detail.

Bias and toxicity are hard to reduce to metrics, but as far as we can define and test models for these things, it’s clear that “unfiltered” models are much, much easier to lead into problematic territory. Instruction tuning, which is to say adding a layer of extra prep (such as a hidden prompt) or passing the model’s output through a second mediator model, is effective at improving this issue, but far from perfect.

The increase in “AI incidents and controversies” alluded to in the bullets is best illustrated by this diagram:

Image Credits: Stanford HAI

As you can see, the trend is upwards and these numbers came before the mainstream adoption of ChatGPT and other large language models, not to mention the vast improvement in image generators. You can be sure that the 26x increase is just the start.

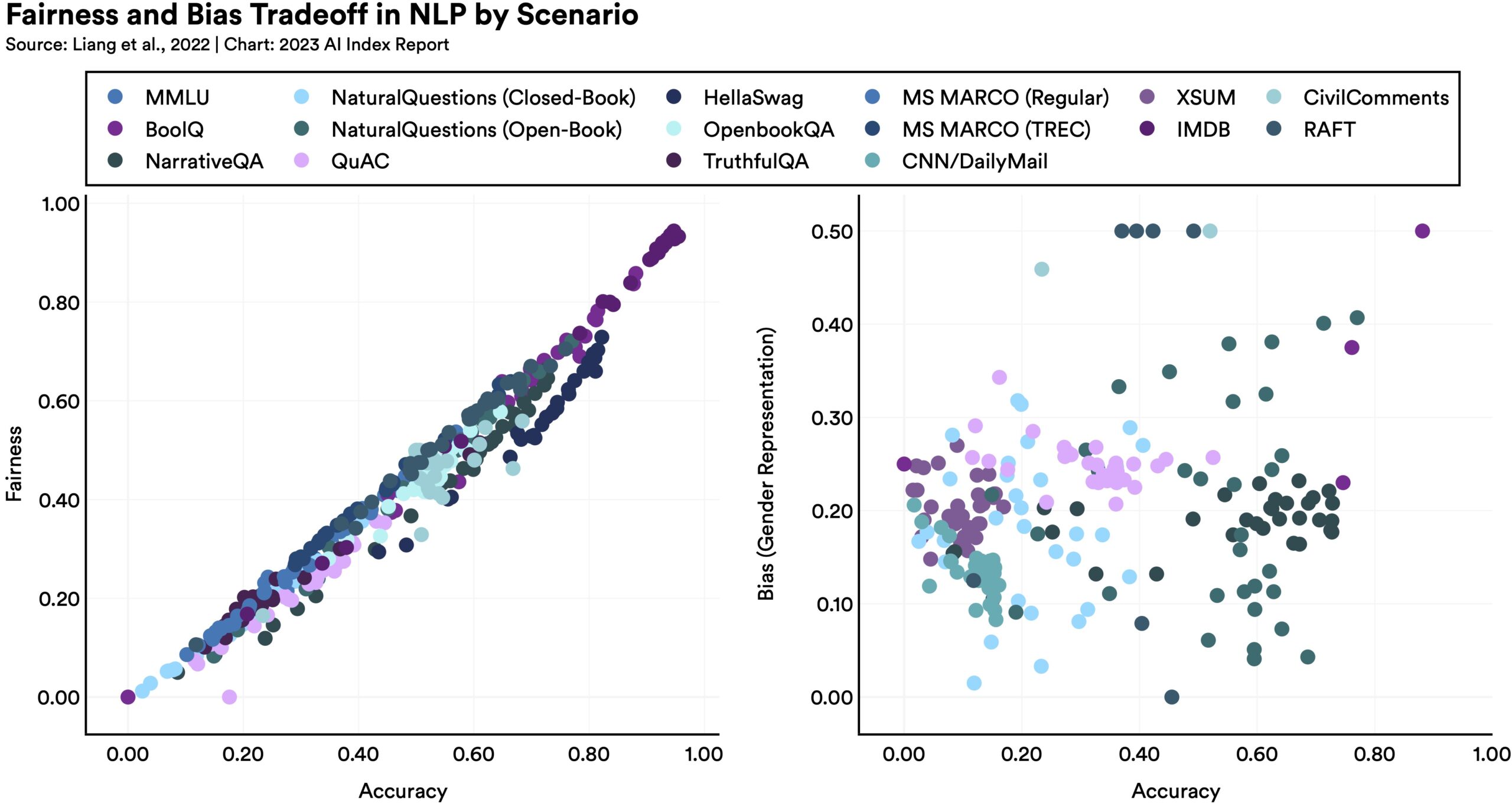

Making models more fair or unbiased in one way may have unexpected consequences in other metrics, as this diagram shows:

Image Credits: Stanford HAI

As the report notes, “Language models which perform better on certain fairness benchmarks tend to have worse gender bias.” Why? It’s hard to say, but it just goes to show that optimization is not as simple as everyone hopes. There is no simple solution to improving these large models, partly because we don’t really understand how they work.

Fact-checking is one of those domains that sounds like a natural fit for AI: having indexed much of the web, it can evaluate statements and return a confidence that they are supported by truthful sources, and so on. This is very far from the case. AI systems are actually particularly bad at evaluating factuality, and the risk is not so much that they will be unreliable checkers, but that they will themselves become powerful sources of convincing misinformation. A number of studies and datasets have been created to test and improve AI fact-checking, but so far we’re still more or less where we started.

Fortunately, there’s a large uptick in interest here, for the obvious reason that if people feel they can’t trust AI, the whole industry is set back. There’s been a tremendous increase in submissions at the ACM Conference on Fairness, Accountability, and Transparency, and at NeurIPS issues like fairness, privacy, and interpretability are getting more attention and stage time.

These highlights of highlights leave a lot of detail on the table. The HAI team has done a great job of organizing the content, however, and after perusing the high-level stuff here you can download the full paper and get deeper into any topic that piques your interest.