Keeping up with an industry as fast-moving as AI is a tall order. So until an AI can do it for you, here’s a handy roundup of the last week’s stories in the world of machine learning, along with notable research and experiments we didn’t cover on their own.

This week, Google dominated the AI news cycle with a range of new products launched at its annual I/O developer conference. They run the gamut from a code-generating AI meant to compete with GitHub’s Copilot to an AI music generator that turns text prompts into short songs.

A fair number of these tools look to be legitimate labor savers, which is to say more than marketing fluff. I’m particularly intrigued by Project Tailwind, a note-taking app that leverages AI to organize, summarize and analyze files from a personal Google Docs folder. But they also expose the limitations and shortcomings of even the best AI technologies today.

Take PaLM 2, for example, Google’s newest large language model (LLM). PaLM 2 will power Google’s updated Bard chat tool, the company’s competitor to OpenAI’s ChatGPT, and serve as the foundation model for most of Google’s new AI features. But while PaLM 2 can write code, emails and more, it also, like comparable LLMs, responds to questions in toxic and biased ways.

Google’s music generator, too, is fairly limited in what it can accomplish. As I wrote in my hands-on, most of the songs I’ve created with MusicLM sound passable at best, and at worst like a four-year-old let loose on a DAW.

Much has been written about how AI will replace jobs: potentially the equivalent of 300 million full-time jobs, according to a report by Goldman Sachs. In a survey by Harris, 40% of workers familiar with OpenAI’s AI-powered chatbot tool, ChatGPT, are concerned that it will replace their jobs entirely.

Google’s AI isn’t the be-all and end-all. Indeed, the company is arguably behind in the AI race. But it’s an undeniable fact that Google employs some of the top AI researchers in the world. And if this is the best they can manage, it’s a testament to the fact that AI is far from a solved problem.

Here are the other AI headlines of note from the past few days:

- Meta brings generative AI to ads: Meta this week announced an AI sandbox, of sorts, to help advertisers create alternative copy, generate backgrounds from text prompts and crop images for Facebook or Instagram ads. The company said the features are available to select advertisers for now and that access will expand to more advertisers in July.

- Added context: Anthropic has expanded the context window for Claude, its flagship text-generating AI model (still in preview), from 9,000 tokens to 100,000 tokens. The context window is the text the model considers before generating additional text, while tokens are chunks of raw text (e.g., the word “fantastic” might be split into the tokens “fan,” “tas” and “tic”). Historically, and even today, poor memory has been an impediment to the usefulness of text-generating AI. Larger context windows could change that.

- Anthropic touts ‘constitutional AI’: Larger context windows aren’t the only differentiator for Anthropic’s models. The company this week detailed “constitutional AI,” its in-house training technique that aims to imbue AI systems with “values” defined by a “constitution.” In contrast to other approaches, Anthropic argues, constitutional AI makes the behavior of systems both easier to understand and simpler to adjust as needed.

- An LLM built for research: The nonprofit Allen Institute for AI (AI2) announced that it plans to train a research-focused LLM called Open Language Model, adding to the large and growing open source library. AI2 sees Open Language Model, or OLMo for short, as a platform and not just a model: one that will let the research community take each component AI2 creates and either use it themselves or seek to improve it.

- New fund for AI: In other AI2 news, AI2 Incubator, the nonprofit’s AI startup fund, is revving up again at three times its previous size ($30 million versus $10 million). Twenty-one companies have passed through the incubator since 2017, attracting some $160 million in further investment and at least one major acquisition: XNOR, an AI acceleration and efficiency outfit that was subsequently snapped up by Apple for around $200 million.

- EU intros rules for generative AI: In a series of votes in the European Parliament, MEPs this week backed a raft of amendments to the bloc’s draft AI legislation, including settling on requirements for the so-called foundational models that underpin generative AI technologies like OpenAI’s ChatGPT. The amendments put the onus on providers of foundational models to apply safety checks, data governance measures and risk mitigations before putting their models on the market.

- A universal translator: Google is testing a powerful new translation service that redubs video in a new language while also synchronizing the speaker’s lips with words they never spoke. It could be very useful for many reasons, but the company was upfront about the potential for abuse and the steps it has taken to prevent it.

- Automated explanations: It’s often said that LLMs along the lines of OpenAI’s ChatGPT are black boxes, and there’s certainly some truth to that. In an effort to peel back their layers, OpenAI is developing a tool that automatically identifies which parts of an LLM are responsible for which of its behaviors. The engineers behind it stress that it’s in the early stages, but the code to run it is available as open source on GitHub as of this week.

- IBM launches new AI services: At its annual Think conference, IBM announced IBM Watsonx, a new platform that delivers tools to build AI models and provides access to pretrained models for generating computer code, text and more. The company says the launch was motivated by the challenges many businesses still face in deploying AI in the workplace.
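To make the Anthropic item’s definitions of tokens and context windows concrete, here’s a minimal Python sketch. It assumes a tiny, hypothetical subword vocabulary and a greedy longest-match tokenizer, chosen purely so that “fantastic” splits into “fan,” “tas” and “tic”; real models learn their tokenizers from data, and Claude’s actual splits will differ. It also assumes the simplest overflow policy, dropping the oldest tokens, which is just one way an application might fit text into a fixed window.

```python
# Toy illustration of tokens and a fixed context window.
# TOY_VOCAB is hypothetical: real LLM tokenizers learn much larger
# vocabularies from data and will split words differently.
TOY_VOCAB = {"fan", "tas", "tic", "the", "is", " "}


def greedy_tokenize(text: str, vocab: set[str]) -> list[str]:
    """Split text by greedy longest match against vocab.

    Characters not covered by any vocab piece become single-character tokens.
    """
    max_len = max(len(piece) for piece in vocab)
    tokens: list[str] = []
    i = 0
    while i < len(text):
        # Try the longest candidate piece first, falling back to one character.
        for size in range(min(max_len, len(text) - i), 0, -1):
            piece = text[i:i + size]
            if piece in vocab or size == 1:
                tokens.append(piece)
                i += size
                break
    return tokens


def fit_to_context(tokens: list[str], window: int) -> list[str]:
    """Keep only the most recent `window` tokens (assumed drop-oldest policy)."""
    if window <= 0:
        return []
    return tokens[-window:]


if __name__ == "__main__":
    print(greedy_tokenize("fantastic", TOY_VOCAB))  # ['fan', 'tas', 'tic']
    history = greedy_tokenize("the fan is fantastic", TOY_VOCAB)
    # With a 3-token window, everything but the last three tokens is dropped.
    print(fit_to_context(history, 3))
```

Under this model, anything further back than the window size simply falls out of view, so going from 9,000 to 100,000 tokens lets the model condition on roughly 11 times as much text at once.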

Other machine learnings

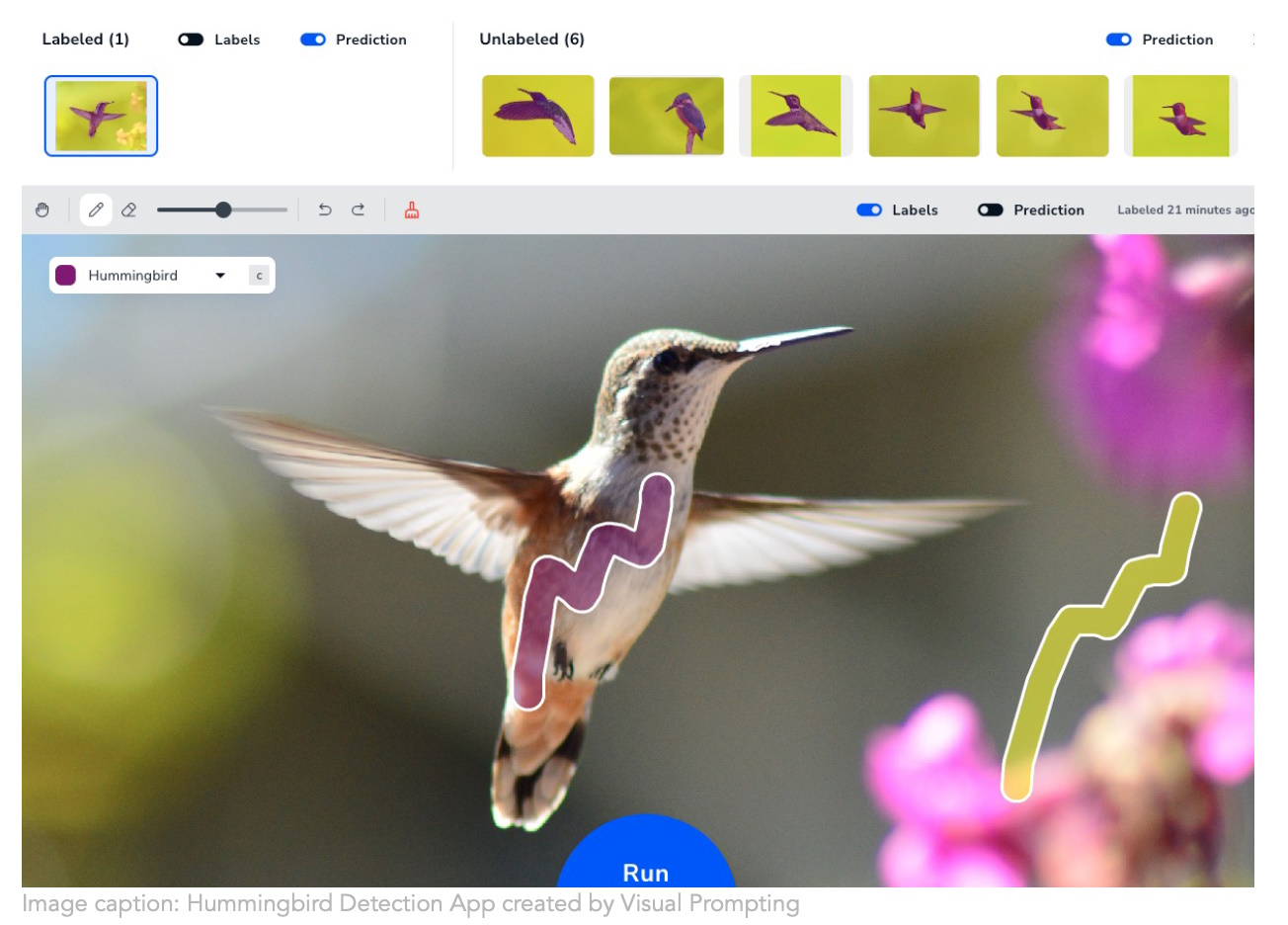

Image Credits: Landing AI

Andrew Ng’s new company Landing AI is taking a more intuitive approach to computer vision training. Making a model understand what you want to identify in images is pretty painstaking, but their “visual prompting” technique lets you just make a few brush strokes, and it figures out your intent from there. Anyone who has to build segmentation models is saying “my god, finally!” So, probably, are plenty of grad students who currently spend hours masking organelles and household objects.

Microsoft has applied diffusion models in a unique and interesting way, essentially using them to generate an action vector instead of an image, after training on lots of observed human actions. It’s still very early, and diffusion isn’t the obvious solution for this, but since these models are stable and versatile, it’s interesting to see how they can be applied beyond purely visual tasks. Their paper is being presented at ICLR later this year.

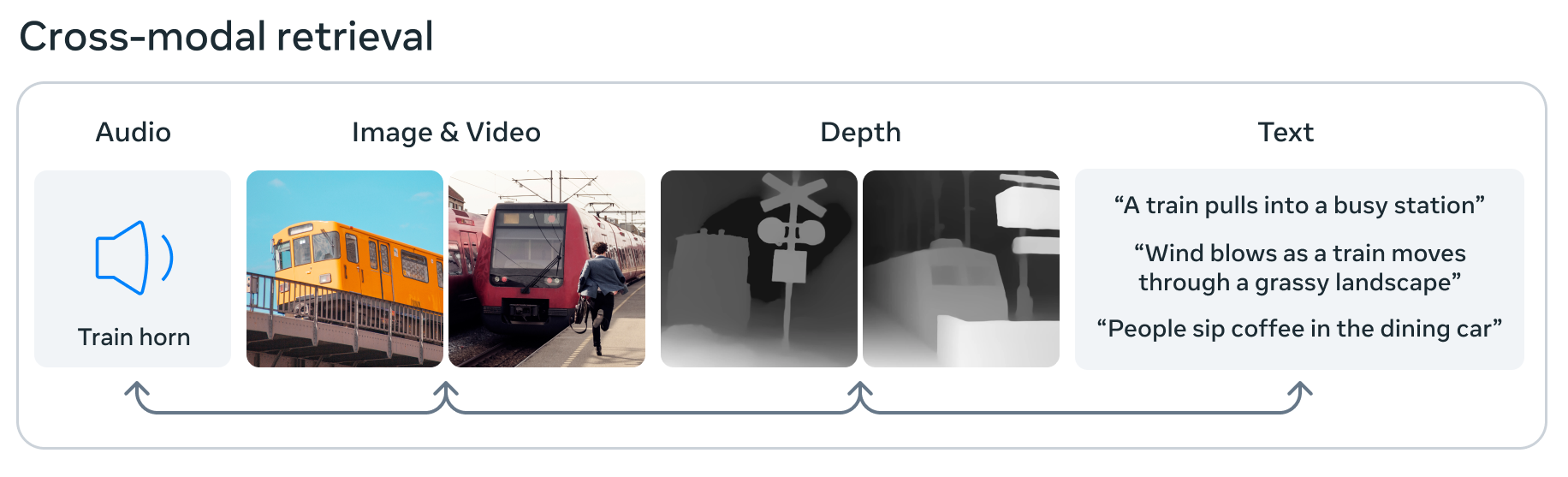

Image Credits: Meta

Meta is also pushing the edges of AI with ImageBind, which it claims is the first model that can process and integrate data from six different modalities: images and video, text, audio, 3D depth data, thermal info, and motion or positional data. This means that in its little machine learning embedding space, an image might be associated with a sound, a 3D shape and various text descriptions, any one of which could be asked about or used to make a decision. It’s a step toward “general” AI in that it absorbs and associates data more like the brain does, but it’s still basic and experimental, so don’t get too excited just yet.
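The idea of a shared embedding space can be sketched in a few lines of Python. This is not ImageBind’s API: the three-dimensional vectors below are made up for illustration, standing in for what trained per-modality encoders would output, and cosine similarity is used for comparison because it’s a common choice for this kind of cross-modal retrieval.

```python
import math

# Hypothetical 3-dimensional embeddings standing in for the outputs of trained
# per-modality encoders; real models use learned vectors with far more dimensions.
EMBEDDINGS: dict[tuple[str, str], list[float]] = {
    ("image", "dog_photo"): [0.9, 0.1, 0.0],
    ("audio", "bark"): [0.8, 0.2, 0.1],
    ("audio", "rain"): [0.0, 0.3, 0.9],
    ("text", "a dog barking"): [0.85, 0.15, 0.05],
}


def cosine(a: list[float], b: list[float]) -> float:
    """Cosine similarity: 1.0 means the vectors point the same way."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b)


def nearest(query_key: tuple[str, str], modality: str) -> tuple[str, str]:
    """Return the item of the requested modality closest to the query item."""
    query = EMBEDDINGS[query_key]
    candidates = [
        key for key in EMBEDDINGS if key[0] == modality and key != query_key
    ]
    return max(candidates, key=lambda key: cosine(query, EMBEDDINGS[key]))


if __name__ == "__main__":
    # An image and a caption both retrieve the matching sound.
    print(nearest(("image", "dog_photo"), "audio"))
    print(nearest(("text", "a dog barking"), "audio"))
```

Because every modality lands in the same space, the same `nearest` function answers both “which sound goes with this image?” and “which sound matches this caption?” without any modality-specific logic.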

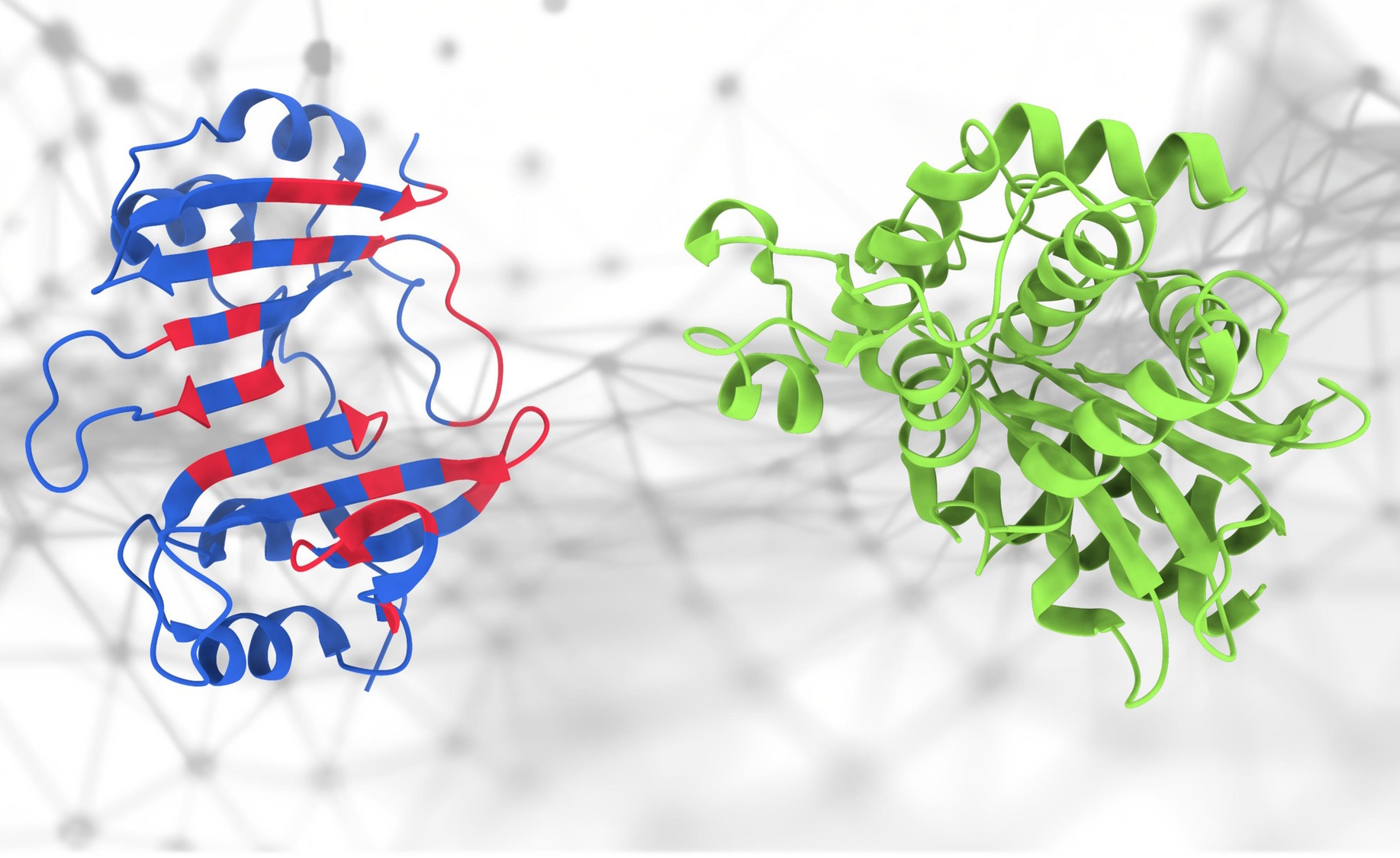

If these proteins touch… what happens?

Everyone got excited about AlphaFold, and for good reason, but structure is really just one small part of the very complex science of proteomics. It’s how those proteins interact that is both important and difficult to predict, and this new PeSTo model from EPFL attempts to do just that. “It focuses on significant atoms and interactions within the protein structure,” said lead developer Lucien Krapp. “It means that this method effectively captures the complex interactions within protein structures to enable an accurate prediction of protein binding interfaces.” Even if it isn’t exact or 100% reliable, not having to start from scratch is super useful for researchers.

The feds are going big on AI. The President even dropped in on a meeting with a bunch of top AI CEOs to say how important it is to get this right. Maybe a bunch of corporations aren’t necessarily the right ones to ask, but they’ll at least have some ideas worth considering. Then again, they already have lobbyists, right?

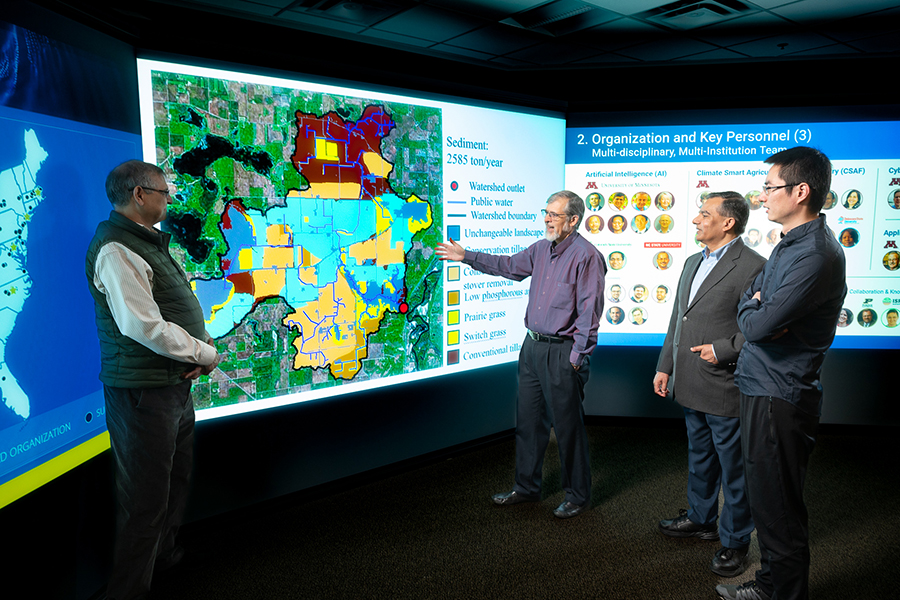

I’m more excited about the new AI research centers popping up with federal funding. Basic research is hugely needed to counterbalance the product-focused work being done by the likes of OpenAI and Google, so when you have AI centers with mandates to investigate things like social science (at CMU), or climate change and agriculture (at U of Minnesota), it feels like green fields (both figuratively and literally). I also want to give a little shout-out to this Meta research on forestry measurement.

Doing AI together on a big screen: it’s science!

There are lots of interesting conversations happening about AI. I thought this interview with UCLA (my alma mater, go Bruins) academics Jacob Foster and Danny Snelson was a good one. Here’s a great thought on LLMs to pretend you came up with this weekend when people are talking about AI:

These systems reveal just how formally consistent most writing is. The more generic the formats that these predictive models simulate, the more successful they are. These developments push us to recognize the normative functions of our forms and potentially transform them. After the introduction of photography, which is very good at capturing a representational space, the painterly milieu developed Impressionism, a style that rejected accurate representation altogether to linger with the materiality of paint itself.

Definitely using that!