Generative AI, particularly text-to-image AI, is attracting as many lawsuits as it is venture dollars.

Two companies behind popular AI art tools, Midjourney and Stability AI, are entangled in a legal case that alleges they infringed on the rights of millions of artists by training their tools on web-scraped images. Separately, stock image supplier Getty Images took Stability AI to court for reportedly using images from its site without permission to train Stable Diffusion, an art-generating AI.

Generative AI’s flaws, namely a tendency to regurgitate the data it’s trained on and, relatedly, the makeup of its training data, continue to put it in the legal crosshairs. But a new startup, Bria, claims to minimize the risk by training image-generating (and soon video-generating) AI in an “ethical” way.

“Our goal is to empower both developers and creators while ensuring that our platform is legally and ethically sound,” Yair Adato, the co-founder of Bria, told TechCrunch in an email interview. “We combined the best of visual generative AI technology and responsible AI practices to create a sustainable model that prioritizes these considerations.”

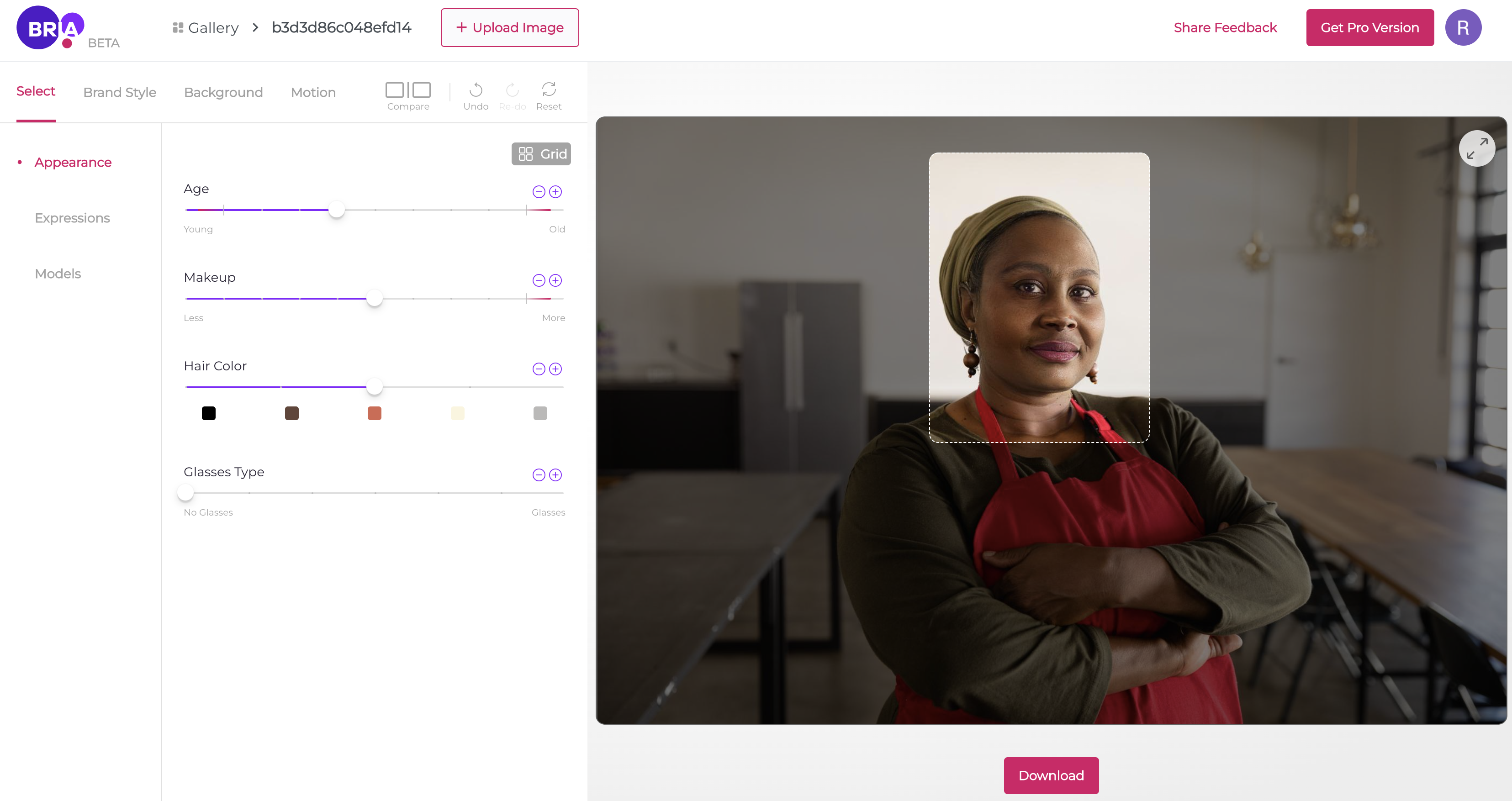

Image Credits: Bria

Adato co-founded Bria when the pandemic hit in 2020, and the company’s other co-founder, Assa Eldar, joined in 2022. During Adato’s Ph.D. studies in computer science at Ben-Gurion University of the Negev, he says he developed a passion for computer vision and its potential to “improve” communication through generative AI.

“I realized that there’s a real business use case for this,” Adato said. “The process of creating visuals is complex, manual and often requires specialized skills. Bria was created to address this challenge: providing a visual generative AI platform tailored to enterprises that digitizes and automates this entire process.”

Thanks to recent advancements in the field of AI, on both the commercial and research sides (open source models, the decreasing cost of compute and so on), there’s no shortage of platforms that offer text-to-image AI art tools (Midjourney, DeviantArt, etc.). But Adato claims that Bria is different in that it (1) focuses exclusively on the enterprise and (2) was built from the start with ethical considerations in mind.

Bria’s platform enables businesses to create visuals for social media posts, ads and e-commerce listings using its image-generating AI. Via a web app (an API is on the way) and Nvidia’s Picasso cloud AI service, customers can generate, modify or upload visuals and optionally switch on a “brand guardian” feature, which attempts to ensure their visuals follow brand guidelines.

The AI in question is trained on “licensed” datasets containing content that Bria licenses from partners, including individual photographers and artists as well as media companies and stock image repositories, which receive a portion of the startup’s revenue.

Bria isn’t the only company exploring a revenue-sharing business model for generative AI. Shutterstock’s recently launched Contributors Fund reimburses creators whose work is used to train AI art models, while OpenAI licensed a portion of Shutterstock’s library to train DALL-E 2, its image generation tool. Adobe, meanwhile, says that it’s developing a compensation model for contributors to Adobe Stock, its stock content library, that’ll allow them to “monetize their talents” and benefit from any revenue its generative AI technology, Firefly, brings in.

But Bria’s approach is more extensive, Adato tells me. The company’s revenue share model rewards data owners based on the impact of their contributions, allowing artists to set prices on a per-AI-training-run basis.

Adato explains: “Every time an image is generated using Bria’s generative platform, we trace back the visuals in the training set that contributed the most to the [generated art], and we use our technology to allocate revenue among the creators. This approach allows us to have multiple licensed sources in our training set, including artists, and avoid any issues related to copyright infringement.”
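
Bria hasn’t disclosed how its attribution works, so the following is only a hypothetical illustration of the final step Adato describes: splitting the revenue from one generated image once each creator already has an attribution score. The scores, the `allocate_revenue` function and the largest-remainder rounding rule are all assumptions made for illustration, not Bria’s method.

```python
from fractions import Fraction


def allocate_revenue(pool_cents: int, scores: dict[str, float]) -> dict[str, int]:
    """Hypothetical sketch: split pool_cents among creators in proportion to
    attribution scores. The scores are assumed to come from some upstream
    attribution step; Bria has not said how it computes them.
    """
    if pool_cents < 0:
        raise ValueError("revenue pool must be non-negative")
    if any(s < 0 for s in scores.values()):
        raise ValueError("attribution scores must be non-negative")

    # Fraction keeps the arithmetic exact, so rounding happens only once, below.
    exact = {creator: Fraction(s) for creator, s in scores.items()}
    total = sum(exact.values())
    if total == 0:
        raise ValueError("at least one score must be positive")

    shares = {creator: pool_cents * s / total for creator, s in exact.items()}
    # Round every share down to whole cents (shares are non-negative, so int() floors)...
    payout = {creator: int(share) for creator, share in shares.items()}
    # ...then hand the leftover cents to the largest fractional remainders,
    # so the payouts always add up to exactly pool_cents.
    leftover = pool_cents - sum(payout.values())
    ranked = sorted(shares, key=lambda c: shares[c] - payout[c], reverse=True)
    for creator in ranked[:leftover]:
        payout[creator] += 1
    return payout


if __name__ == "__main__":
    # Hypothetical example: $1.00 from one generated image, two contributors.
    print(allocate_revenue(100, {"photographer": 2, "illustrator": 1}))
    # prints {'photographer': 67, 'illustrator': 33}
```

Rounding down and then distributing the remaining cents is one simple way to guarantee that no fraction of a cent is lost or double-paid; a real system would also have to decide how to handle disputes over the scores themselves, which is exactly the open question raised later in this piece.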

Bria also clearly marks all generated images on its platform with a watermark and provides free access (or so it claims, at least) to nonprofits and academics who “work to democratize creativity, prevent deepfakes or promote diversity.”

In the coming months, Bria plans to go a step further, offering an open source generative AI art model with a built-in attribution mechanism. There have been attempts at this, like Have I Been Trained? and Stable Attribution, sites that make a best effort to identify which art pieces contributed to a particular AI-generated visual. But Bria’s model will allow other generative platforms to establish similar revenue-sharing arrangements with creators, Adato says.

It’s tough to put too much stock in Bria’s tech given the nascency of the generative AI industry. It’s unclear how, for example, Bria is “tracing back” visuals in the training sets and using this data to portion out revenue. How will Bria resolve complaints from creators who allege they’re being unfairly underpaid? Will bugs in the system result in some creators being overpaid? Time will tell.

Adato exudes the confidence you’d expect from a founder despite the unknowns, arguing that Bria’s platform ensures each contributor to the AI training datasets gets their fair share based on usage and “real impact.”

“We believe that the most effective way to solve [the challenges around generative AI] is at the training set level, by using a high-quality, enterprise-grade, balanced and safe training set,” Adato said. “When it comes to adopting generative AI, companies need to consider the ethical and legal implications to ensure that the technology is used in a responsible and safe manner. However, by working with Bria, companies can rest assured that these concerns are taken care of.”

That’s an open query. And it’s not the one one.

What if a creator wants to opt out of Bria’s platform? Can they? Adato assures me that they’ll be able to. But Bria uses its own opt-out mechanism as opposed to a common standard such as DeviantArt’s or that of artist advocacy group Spawning, which offers a website where artists can remove their art from one of the more popular generative art training datasets.

That raises the burden for content creators, who now potentially have to worry about taking the steps to remove their art from yet another generative AI platform (unless, of course, they use a “cloaking” tool such as Glaze, rendering their art untrainable). Adato doesn’t see it that way.

“We’ve made it a priority to focus on safe, high-quality enterprise data collections in the construction of our training sets to avoid biased or toxic data and copyright infringement,” he said. “Overall, our commitment to ethical and responsible training of AI models sets us apart from our competitors.”

Those competitors include incumbents like OpenAI, Midjourney and Stability AI, as well as Jasper, whose generative art tool, Jasper Art, also targets enterprise customers. The formidable competition and open ethical questions don’t appear to have scared away investors, though: Bria has raised $10 million in venture capital to date from Entrée Capital, IN Venture, Getty Images and a group of Israeli angel investors.

Adato said that Bria is currently serving “a range” of clients, including marketing agencies, visual stock repositories and tech and marketing firms. “We’re committed to continuing to grow our customer base and provide them with innovative solutions for their visual communication needs,” he added.

Should Bria succeed, part of me wonders if it’ll spawn a new crop of generative AI companies more limited in scope than today’s big players, and thus less susceptible to legal challenges. With funding for generative AI starting to cool off, partly because of the high level of competition and questions around liability, more “narrow” generative AI startups just might stand a chance at cutting through the noise and avoiding lawsuits in the process.

We’ll have to attend and see.