Keeping up with an industry as fast-moving as AI is a tall order. So until an AI can do it for you, here’s a handy roundup of the last week’s stories in the world of machine learning, along with notable research and experiments we didn’t cover on their own.

This week, movers and shakers in the AI industry, including OpenAI CEO Sam Altman, embarked on a goodwill tour with policymakers, making the case for their respective visions of AI regulation. Speaking to reporters in London, Altman warned that the EU’s proposed AI Act, due to be finalized next year, could lead OpenAI to eventually pull its services from the bloc.

“We will try to comply, but if we can’t comply we will cease operating,” he said.

Google CEO Sundar Pichai, also in London, emphasized the need for “appropriate” AI guardrails that don’t stifle innovation. And Microsoft’s Brad Smith, meeting with lawmakers in Washington, proposed a five-point blueprint for the public governance of AI.

To the extent that there’s a common thread, tech titans expressed a willingness to be regulated, so long as it doesn’t interfere with their commercial ambitions. Smith, for instance, declined to address the unresolved legal question of whether training AI on copyrighted data (which Microsoft does) is permissible under the fair use doctrine in the U.S. Strict licensing requirements around AI training data, were they to be imposed at the federal level, could prove costly for Microsoft and its rivals doing the same.

Altman, for his part, appeared to take issue with provisions in the AI Act that require companies to publish summaries of the copyrighted data they used to train their AI models, and that make them partially responsible for how the systems are deployed downstream. Requirements to reduce the energy consumption and resource use of AI training, a notoriously compute-intensive process, were also questioned.

The regulatory path overseas remains uncertain. But in the U.S., the OpenAIs of the world may get their way in the end. Last week, Altman wooed members of the Senate Judiciary Committee with carefully crafted statements about the dangers of AI, and his recommendations for regulating it. Sen. John Kennedy (R-LA) was particularly deferential: “This is your chance, folks, to tell us how to get this right … Talk in plain English and tell us what rules to implement,” he said.

In comments to The Daily Beast, Suresh Venkatasubramanian, director of Brown University’s Center for Tech Responsibility, perhaps summed it up best: “We don’t ask arsonists to be in charge of the fire department.” And yet that’s what’s in danger of happening here, with AI. It’ll be incumbent on legislators to resist the honeyed words of tech execs and clamp down where it’s needed. Only time will tell if they do.

Here are the other AI headlines of note from the past few days:

- ChatGPT comes to more devices: Despite being U.S.- and iOS-only ahead of an expansion to 11 more global markets, OpenAI’s ChatGPT app is off to a stellar start, Sarah writes. The app has already passed half a million downloads in its first six days, app trackers say. That ranks it as one of the highest-performing new app releases across both this year and the last, topped only by the February 2022 arrival of the Trump-backed Twitter clone, Truth Social.

- OpenAI proposes a regulatory body: AI is developing rapidly enough, and the dangers it may pose are clear enough, that OpenAI’s leadership believes the world needs an international regulatory body akin to that governing nuclear power. OpenAI’s co-founders argued this week that the pace of innovation in AI is so fast that we can’t expect existing authorities to adequately rein in the technology, so we need new ones.

- Generative AI comes to Google Search: Google announced this week that it’s starting to open up access to new generative AI capabilities in Search after teasing them at its I/O event earlier in the month. With this new update, Google says that users can easily get up to speed on a new or complicated topic, uncover quick tips for specific questions, or get deep info like customer ratings and prices on product searches.

- TikTok tests a bot: Chatbots are hot, so it’s no surprise to learn that TikTok is piloting its own, as well. Called “Tako,” the bot is in limited testing in select markets, where it will appear on the right-hand side of the TikTok interface above a user’s profile and other buttons for likes, comments and bookmarks. After tapping it, users can ask Tako various questions about the video they’re watching or discover new content by asking for recommendations.

- Google on an AI Pact: Google’s Sundar Pichai has agreed to work with lawmakers in Europe on what’s being referred to as an “AI Pact,” seemingly a stop-gap set of voluntary rules or standards while formal regulations on AI are developed. According to a memo, it’s the bloc’s intention to launch an AI Pact “involving all major European and non-European AI actors on a voluntary basis” and ahead of the legal deadline of the aforementioned pan-EU AI Act.

- People, but made with AI: With Spotify’s AI DJ, the company trained an AI on a real person’s voice, that of its head of Cultural Partnerships and podcast host, Xavier “X” Jernigan. Now the streamer may turn that same technology to advertising, it seems. According to statements made by The Ringer founder Bill Simmons, the streaming service is developing AI technology that will be able to use a podcast host’s voice to make host-read ads, without the host actually having to read and record the ad copy.

- Product imagery via generative AI: At its Google Marketing Live event this week, Google announced that it’s launching Product Studio, a new tool that lets merchants easily create product imagery using generative AI. Brands will be able to create imagery within Merchant Center Next, Google’s platform for businesses to manage how their products show up in Google Search.

- Microsoft bakes a chatbot into Windows: Microsoft is building its ChatGPT-based Bing experience right into Windows 11, and adding a few twists that allow users to ask the agent to help navigate the OS. The new Windows Copilot is meant to make it easier for Windows users to find and tweak settings without having to delve deep into Windows submenus. But the tools will also allow users to summarize content from the clipboard, or compose text.

- Anthropic raises more cash: Anthropic, the prominent generative AI startup co-founded by OpenAI veterans, has raised $450 million in a Series C funding round led by Spark Capital. Anthropic wouldn’t disclose what the round valued its business at. But a pitch deck we obtained in March suggests it could be in the ballpark of $4 billion.

- Adobe brings generative AI to Photoshop: Photoshop got an infusion of generative AI this week with the addition of a number of features that allow users to extend images beyond their borders with AI-generated backgrounds, add objects to images, or use a new generative fill feature to remove them with far more precision than the previously available content-aware fill. For now, the features will only be available in the beta version of Photoshop. But they’re already causing some graphic designers consternation about the future of their industry.

Other machine learnings

Bill Gates may not be an expert on AI, but he is very rich, and he’s been right on things before. Turns out he’s bullish on personal AI agents, as he told Fortune: “Whoever wins the personal agent, that’s the big thing, because you will never go to a search site again, you will never go to a productivity site, you’ll never go to Amazon again.” How exactly this would play out is not stated, but his instinct that people would rather not borrow trouble by using a compromised search or productivity engine is probably not far off base.

Evaluating risk in AI models is an evolving science, which is to say we know next to nothing about it. Google DeepMind (the newly formed superentity comprising Google Brain and DeepMind) and collaborators across the globe are trying to move the ball forward, and have produced a model evaluation framework for “extreme risks” such as “strong skills in manipulation, deception, cyber-offense, or other dangerous capabilities.” Well, it’s a start.

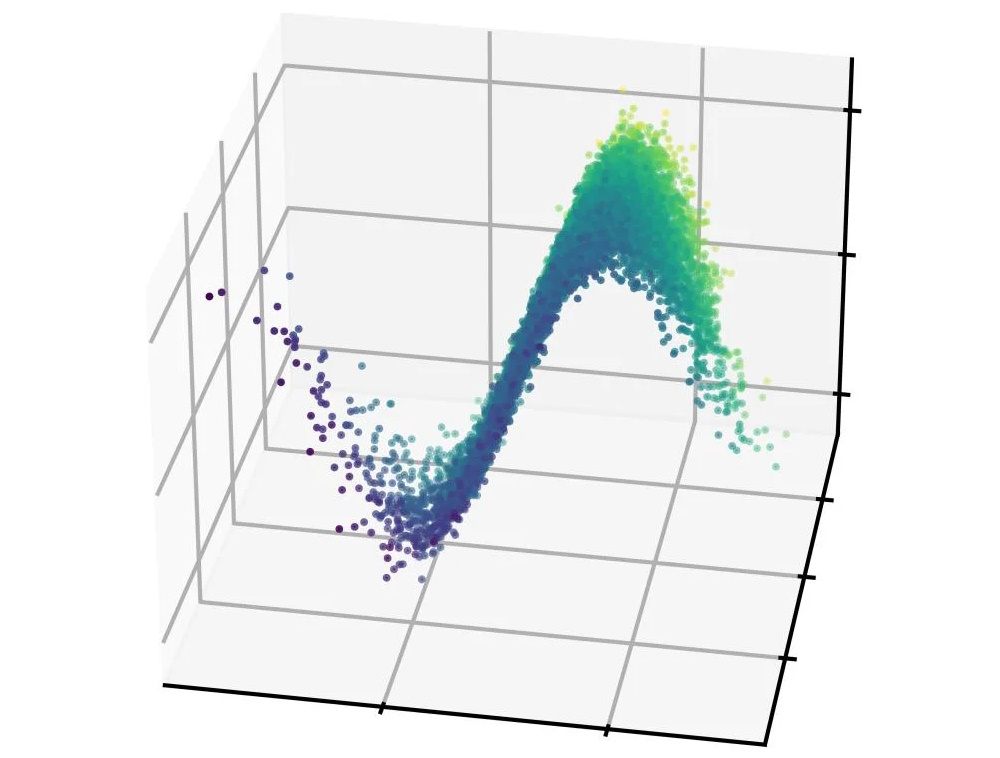

Image Credits: SLAC

Particle physicists are finding interesting ways to apply machine learning to their work: “We’ve shown that we can infer very complicated high-dimensional beam shapes from astonishingly small amounts of data,” says SLAC’s Auralee Edelen. They created a model that helps them predict the shape of the particle beam in the accelerator, something that normally takes thousands of data points and lots of compute time. This is much more efficient and could help make accelerators everywhere easier to use. Next up: “demonstrate the algorithm experimentally on reconstructing full 6D phase space distributions.” OK!

Adobe Research and MIT collaborated on an interesting computer vision problem: telling which pixels in an image represent the same material. Since an object can be multiple materials as well as colors and other visual aspects, this is a pretty subtle distinction but also an intuitive one. They had to build a new synthetic dataset to do it, but at first it didn’t work. So they ended up fine-tuning an existing CV model on that data, and it got right to it. Why is this useful? Hard to say, but it’s cool.

Frame 1: material selection; 2: source video; 3: segmentation; 4: mask. Image Credits: Adobe/MIT

Large language models are generally trained primarily in English for many reasons, but obviously the sooner they work as well in Spanish, Japanese, and Hindi the better. BLOOMChat is a new model built on top of BLOOM that works with 46 languages at present, and is competitive with GPT-4 and others. This is still pretty experimental, so don’t go to production with it, but it could be great for testing out an AI-adjacent product in multiple languages.

NASA just announced a new crop of SBIR II fundings, and there are a couple of interesting AI bits and pieces in there:

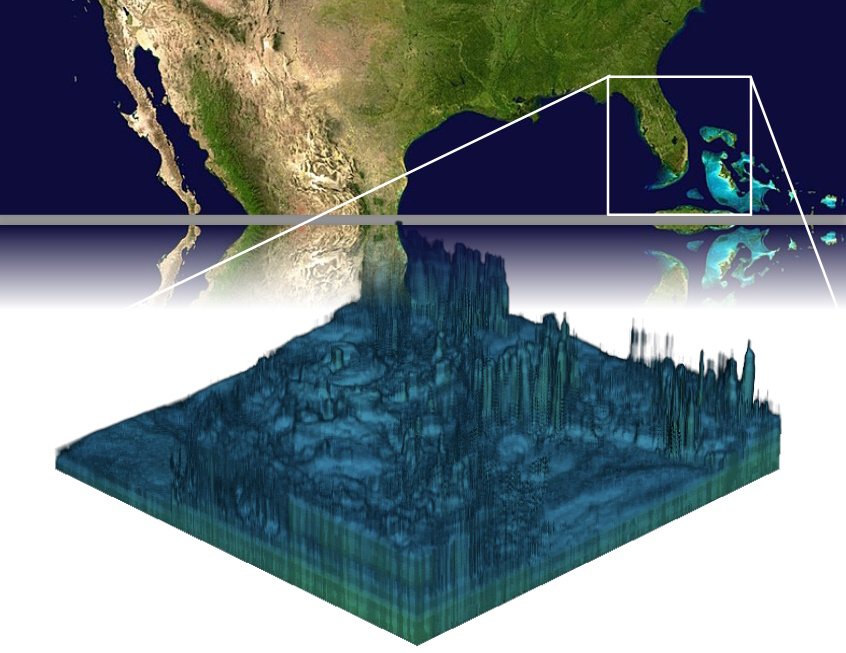

Geolabe is detecting and predicting groundwater variation using AI trained on satellite data, and hopes to apply the model to a new NASA satellite constellation going up later this year.

Zeus AI is working on algorithmically producing “3D atmospheric profiles” based on satellite imagery, essentially a thick version of the 2D maps we already have of temperature, humidity, and so on.

Up in space your computing power is very limited, and while we can run some inference up there, training is right out. But IEEE researchers want to make a SWaP-efficient neuromorphic processor for training AI models in situ.

Robots operating autonomously in high-stakes situations generally need a human minder, and Picknick is making such bots communicate their intentions visually, like how they would reach to open a door, so that the minder doesn’t have to intervene as much. Probably a good idea.