Keeping up with an industry as fast-moving as AI is a tall order. So until an AI can do it for you, here’s a handy roundup of the last week’s stories in the world of machine learning, along with notable research and experiments we didn’t cover on their own.

If it wasn’t obvious already, the competitive landscape in AI, particularly the subfield known as generative AI, is red-hot. And it’s getting hotter. This week, Dropbox launched its first corporate venture fund, Dropbox Ventures, which the company said would focus on startups building AI-powered products that “shape the future of work.” Not to be outdone, AWS debuted a $100 million program to fund generative AI initiatives spearheaded by its partners and customers.

There’s a lot of money being thrown around in the AI space, to be sure. Salesforce Ventures, Salesforce’s VC division, plans to pour $500 million into startups developing generative AI technologies. Workday recently added $250 million to its existing VC fund specifically to back AI and machine learning startups. And Accenture and PwC have announced that they plan to invest $3 billion and $1 billion, respectively, in AI.

But one wonders whether money is the solution to the AI field’s outstanding challenges.

In an enlightening panel during a Bloomberg conference in San Francisco this week, Meredith Whittaker, the president of secure messaging app Signal, made the case that the tech underpinning some of today’s buzziest AI apps is becoming dangerously opaque. She gave an example of someone who walks into a bank and asks for a loan.

That person can be denied the loan and have “no idea that there’s a system in [the] back probably powered by some Microsoft API that determined, based on scraped social media, that I wasn’t creditworthy,” Whittaker said. “I’m never going to know [because] there’s no mechanism for me to know this.”

It’s not capital that’s the issue. Rather, it’s the current power hierarchy, Whittaker says.

“I’ve been at the table for like, 15 years, 20 years. I’ve been at the table. Being at the table with no power is nothing,” she continued.

Of course, achieving structural change is far tougher than scrounging around for cash, particularly when the structural change won’t necessarily favor the powers that be. And Whittaker warns what might happen if there isn’t enough pushback.

As progress in AI accelerates, the societal impacts also accelerate, and we’ll continue heading down a “hype-filled road toward AI,” she said, “where that power is entrenched and naturalized under the guise of intelligence and we’re surveilled to the point [of having] very, very little agency over our individual and collective lives.”

That should give the industry pause. Whether it actually will is another matter. That’s probably something that we’ll hear discussed when she takes the stage at Disrupt in September.

Here are the other AI headlines of note from the past few days:

- DeepMind’s AI controls robots: DeepMind says that it has developed an AI model, called RoboCat, that can perform a range of tasks across different models of robotic arms. That alone isn’t especially novel. But DeepMind claims that the model is the first to be able to solve and adapt to multiple tasks and do so using different, real-world robots.

- Robots learn from YouTube: Speaking of robots, CMU Robotics Institute assistant professor Deepak Pathak this week showcased VRB (Vision-Robotics Bridge), an AI system designed to train robotic systems by watching a recording of a human. The robot watches for a few key pieces of information, including contact points and trajectory, and then attempts to execute the task.

- Otter gets into the chatbot game: Automatic transcription service Otter announced a new AI-powered chatbot this week that’ll let participants ask questions during and after a meeting and help them collaborate with teammates.

- EU calls for AI regulation: European regulators are at a crossroads over how AI will be regulated, and ultimately used commercially and noncommercially, in the region. This week, the EU’s largest consumer group, the European Consumer Organisation (BEUC), weighed in with its own position: Stop dragging your feet, and “launch urgent investigations into the risks of generative AI” now, it said.

- Vimeo launches AI-powered features: This week, Vimeo announced a suite of AI-powered tools designed to help users create scripts, record footage using a built-in teleprompter and remove long pauses and unwanted disfluencies like “ahs” and “ums” from the recordings.

- Capital for synthetic voices: ElevenLabs, the viral AI-powered platform for creating synthetic voices, has raised $19 million in a new funding round. ElevenLabs picked up steam rather quickly after its launch in late January. But the publicity hasn’t always been positive, particularly once bad actors began to exploit the platform for their own ends.

- Turning audio into text: Gladia, a French AI startup, has launched a platform that leverages OpenAI’s Whisper transcription model to turn any audio into text in near real time via an API. Gladia promises that it can transcribe an hour of audio for $0.61, with the transcription process taking roughly 60 seconds.

- Harness embraces generative AI: Harness, a startup creating a toolkit to help developers operate more efficiently, this week injected its platform with a little AI. Now, Harness can automatically resolve build and deployment failures, find and fix security vulnerabilities and make suggestions to bring cloud costs under control.

Other machine learnings

This week was CVPR up in Vancouver, Canada, and I wish I could have gone because the talks and papers look super interesting. If you can only watch one, check out Yejin Choi’s keynote about the possibilities, impossibilities, and paradoxes of AI.

Image Credits: CVPR/YouTube

The UW professor and MacArthur Genius grant recipient first addressed a few unexpected limitations of today’s most capable models. In particular, GPT-4 is really bad at multiplication. It fails to find the product of two three-digit numbers correctly at a surprising rate, though with a little coaxing it can get it right 95% of the time. Why does it matter that a language model can’t do math, you ask? Because the entire AI market right now is predicated on the idea that language models generalize well to lots of interesting tasks, including stuff like doing your taxes or accounting. Choi’s point was that we should be looking for the limitations of AI and working inward, not vice versa, as it tells us more about their capabilities.
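For the curious, here is a minimal sketch of how one might measure that kind of failure rate. It is not the setup from Choi’s talk: the `ask_model` callable, the prompt wording and the `exact_calculator` stand-in are all hypothetical, and a real test would swap in a call to whatever model is being evaluated.

```python
import random
from typing import Callable


def multiplication_accuracy(
    ask_model: Callable[[str], str], trials: int = 100, seed: int = 0
) -> float:
    """Return the fraction of random three-digit products answered exactly.

    `ask_model` is a hypothetical stand-in: any function that takes a
    prompt string and returns the model's reply as a string.
    """
    rng = random.Random(seed)  # fixed seed so runs are repeatable
    correct = 0
    for _ in range(trials):
        a = rng.randint(100, 999)
        b = rng.randint(100, 999)
        reply = ask_model(f"What is {a} * {b}? Answer with the number only.")
        # Keep only digits so replies like "56,088" still match.
        # Assumes the reply contains nothing but the final number.
        digits = "".join(ch for ch in reply if ch.isdigit())
        if digits == str(a * b):
            correct += 1
    return correct / trials


def exact_calculator(prompt: str) -> str:
    """Toy 'model' that parses the prompt above and multiplies exactly."""
    parts = prompt.split()
    a = int(parts[2])
    b = int(parts[4].rstrip("?"))
    return str(a * b)


if __name__ == "__main__":
    print(multiplication_accuracy(exact_calculator, trials=50))
```

Exact-match scoring is deliberately strict here: an answer that is off by one digit counts as wrong, which is the standard you would want for taxes or accounting.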

The other parts of her talk were equally interesting and thought-provoking. You can watch the whole thing here.

Rod Brooks, introduced as a “slayer of hype,” gave an interesting history of some of the core concepts of machine learning, concepts that only seem new because most people applying them weren’t around when they were invented! Going back through the decades, he touches on McCulloch, Minsky, even Hebb, and shows how the ideas stayed relevant well beyond their time. It’s a helpful reminder that machine learning is a field standing on the shoulders of giants going back to the postwar era.

Many, many papers were submitted to and presented at CVPR, and it’s reductive to only look at the award winners, but this is a news roundup, not a comprehensive literature review. So here’s what the judges at the conference thought was the most interesting:

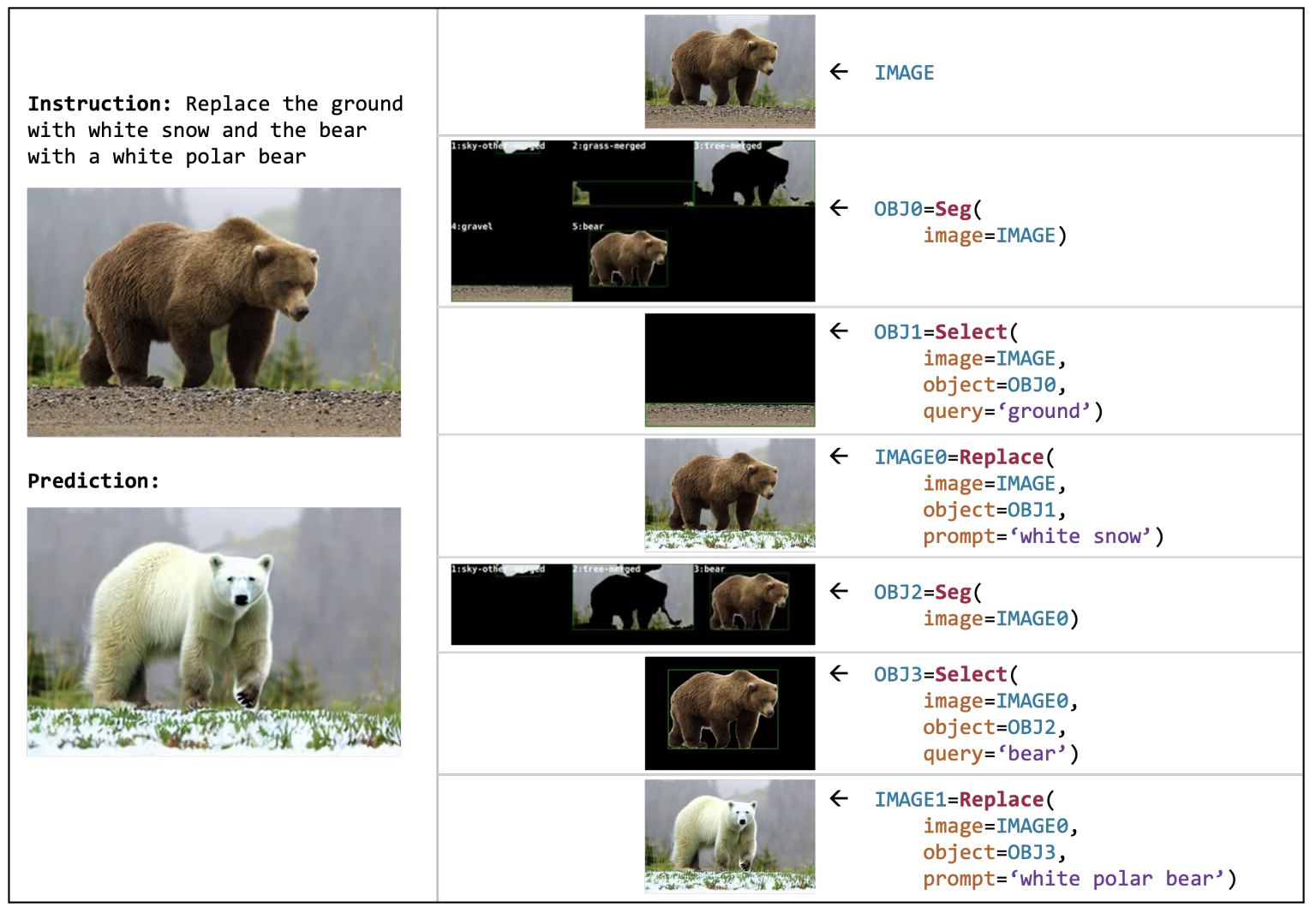

Image Credits: AI2

VISPROG, from researchers at AI2, is a sort of meta-model that performs complex visual manipulation tasks using a multi-purpose code toolbox. Say you have a picture of a grizzly bear on some grass (as pictured): you can tell it to just “replace the bear with a polar bear on snow” and it starts working. It identifies the parts of the image, separates them visually, searches for and finds or generates a suitable replacement, and stitches the whole thing back together intelligently, with no further prompting needed on the user’s part. The Blade Runner “enhance” interface is starting to look downright pedestrian. And that’s just one of its many capabilities.

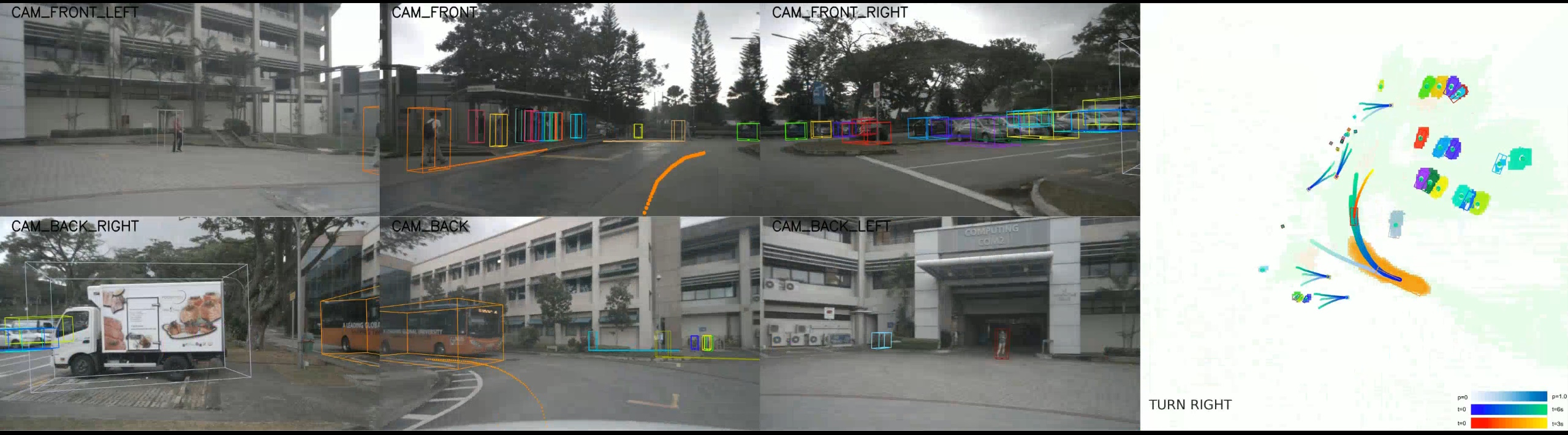

“Planning-oriented autonomous driving,” from a multi-institutional Chinese research group, attempts to unify the various pieces of the rather piecemeal approach we’ve taken to self-driving cars. Ordinarily there’s a sort of stepwise process of “perception, prediction, and planning,” each of which might have a number of sub-tasks (like segmenting people, identifying obstacles, etc.). Their model attempts to put all these in one model, kind of like the multi-modal models we see that can use text, audio, or images as input and output. Similarly, this model simplifies in some ways the complex inter-dependencies of a modern autonomous driving stack.

DynIBaR shows a high-quality and robust method of interacting with video using “dynamic Neural Radiance Fields,” or NeRFs. A deep understanding of the objects in the video allows for things like stabilization, dolly movements, and other things you generally don’t expect to be possible once the video has already been recorded. Again… “enhance.” This is definitely the kind of thing that Apple hires you for, and then takes credit for at the next WWDC.

DreamBooth you may remember from a little earlier this year when the project’s page went live. It’s the best system yet for, there’s no way around saying it, making deepfakes. Of course it’s valuable and powerful to do these kinds of image operations, not to mention fun, and researchers like those at Google are working to make it more seamless and realistic. Consequences… later, maybe.

The best student paper award goes to a method for comparing and matching meshes, or 3D point clouds. Frankly it’s too technical for me to try to explain, but this is an important capability for real-world perception and improvements are welcome. Check out the paper here for examples and more info.

Just two more nuggets: Intel showed off this interesting model, LDM3D, for generating 3D 360 imagery like virtual environments. So when you’re in the metaverse and you say “put us in an overgrown ruin in the jungle” it just creates a fresh one on demand.

And Meta released a voice synthesis tool called Voicebox that’s super good at extracting features of voices and replicating them, even when the input isn’t clean. Usually for voice replication you need a good amount and variety of clean voice recordings, but Voicebox does it better than many others, with less data (think like 2 seconds). Fortunately they’re keeping this genie in the bottle for now. For those who think they might need their voice cloned, check out Acapela.