Keeping up with an industry as fast-moving as AI is a tall order. So until an AI can do it for you, here’s a handy roundup of recent stories in the world of machine learning, along with notable research and experiments we didn’t cover on their own.

This week in AI, DeepMind, the Google-owned AI R&D lab, released a paper proposing a framework for evaluating the societal and ethical risks of AI systems.

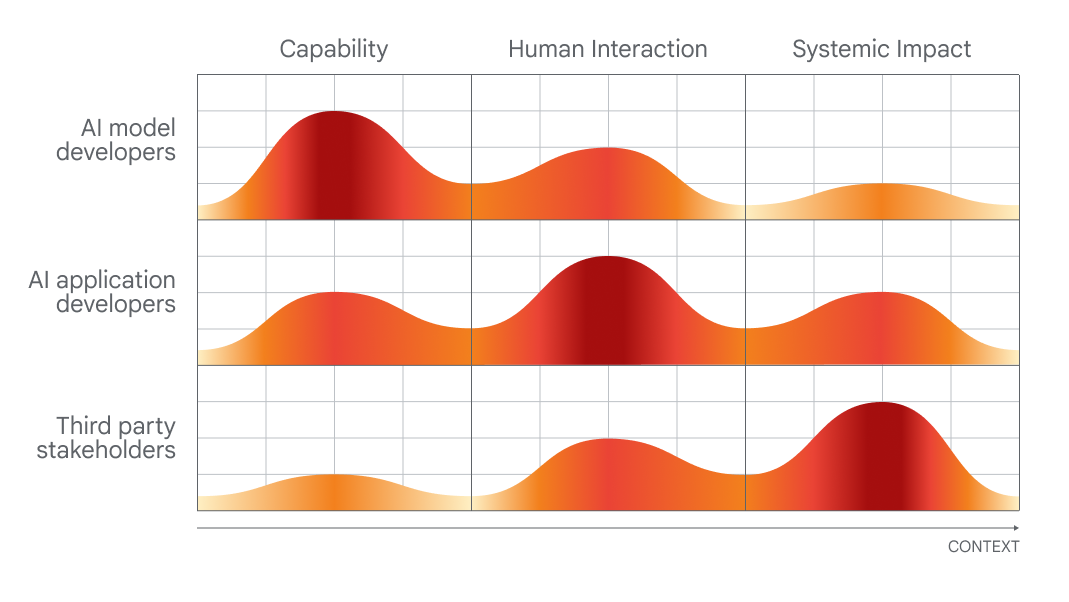

The timing of the paper, which calls for varying levels of involvement from AI developers, app developers and “broader public stakeholders” in evaluating and auditing AI, isn’t accidental.

Next week is the AI Safety Summit, a U.K.-government-sponsored event that’ll bring together international governments, leading AI companies, civil society groups and research experts to focus on how best to manage risks from the most recent advances in AI, including generative AI (e.g. ChatGPT, Stable Diffusion and so on). There, the U.K. is planning to introduce a global advisory group on AI loosely modeled on the U.N.’s Intergovernmental Panel on Climate Change, comprising a rotating cast of academics who will write regular reports on cutting-edge developments in AI and their associated dangers.

DeepMind is airing its perspective, very visibly, ahead of on-the-ground policy talks at the two-day summit. And, to give credit where it’s due, the research lab makes a few reasonable (if obvious) points, such as calling for approaches to examine AI systems at the “point of human interaction” and the ways in which these systems might be used and embedded in society.

Chart showing which people would be best at evaluating which aspects of AI.

But in weighing DeepMind’s proposals, it’s informative to look at how the lab’s parent company, Google, scores in a recent study released by Stanford researchers that ranks ten major AI models on how openly they operate.

Rated on 100 criteria, including whether its maker disclosed the sources of its training data, information about the hardware it used, the labor involved in training and other details, PaLM 2, one of Google’s flagship text-analyzing AI models, scores a measly 40%.

Now, DeepMind didn’t develop PaLM 2, at least not directly. But the lab hasn’t historically been consistently transparent about its own models, and the fact that its parent company falls short on key transparency measures suggests that there’s not much top-down pressure for DeepMind to do better.

On the other hand, in addition to its public musings about policy, DeepMind appears to be taking steps to change the perception that it’s tight-lipped about its models’ architectures and inner workings. The lab, along with OpenAI and Anthropic, committed several months ago to providing the U.K. government “early or priority access” to its AI models to support research into evaluation and safety.

The question is, is this merely performative? No one would accuse DeepMind of philanthropy, after all: the lab rakes in hundreds of millions of dollars in revenue each year, mainly by licensing its work internally to Google teams.

Perhaps the lab’s next big ethics test is Gemini, its forthcoming AI chatbot, which DeepMind CEO Demis Hassabis has repeatedly promised will rival OpenAI’s ChatGPT in its capabilities. Should DeepMind wish to be taken seriously on the AI ethics front, it’ll have to fully and thoroughly detail Gemini’s weaknesses and limitations, not just its strengths. We’ll certainly be watching closely to see how things play out over the coming months.

Here are some other AI stories of note from the past few days:

- Microsoft study finds flaws in GPT-4: A new, Microsoft-affiliated scientific paper looked at the “trustworthiness” (and toxicity) of large language models (LLMs), including OpenAI’s GPT-4. The co-authors found that an earlier version of GPT-4 can be more easily prompted than other LLMs to spout toxic, biased text. Big yikes.

- ChatGPT gets web searching and DALL-E 3: Speaking of OpenAI, the company’s formally launched its internet-browsing feature to ChatGPT, some three weeks after re-introducing the feature in beta after several months in hiatus. In related news, OpenAI also transitioned DALL-E 3 into beta, a month after debuting the latest incarnation of the text-to-image generator.

- Challengers to GPT-4V: OpenAI is poised to release GPT-4V, a variant of GPT-4 that understands images as well as text, soon. But two open source alternatives beat it to the punch: LLaVA-1.5 and Fuyu-8B, a model from well-funded startup Adept. Neither is as capable as GPT-4V, but they both come close and, importantly, they’re free to use.

- Can AI play Pokémon?: Over the past few years, Seattle-based software engineer Peter Whidden has been training a reinforcement learning algorithm to navigate the classic first game of the Pokémon series. At present, it only reaches Cerulean City, but Whidden’s confident it’ll continue to improve.

- AI-powered language tutor: Google’s gunning for Duolingo with a new Google Search feature designed to help people practice, and improve, their English speaking skills. Rolling out over the next few days on Android devices in select countries, the new feature will provide interactive speaking practice for language learners translating to or from English.

- Amazon rolls out more warehouse robots: At an event this week, Amazon announced that it’ll begin testing Agility’s bipedal robot, Digit, in its facilities. Reading between the lines, though, there’s no guarantee that Amazon will actually begin deploying Digit to its warehouse facilities, which currently utilize north of 750,000 robot systems, Brian writes.

- Simulators upon simulators: The same week Nvidia demoed applying an LLM to help write reinforcement learning code to guide a naive, AI-driven robot toward performing a task better, Meta released Habitat 3.0, the latest version of its data set for training AI agents in realistic indoor environments. Habitat 3.0 adds the possibility of human avatars sharing the space in VR.

- China’s tech titans invest in OpenAI rival: Zhipu AI, a China-based startup developing AI models to rival OpenAI’s and those from others in the generative AI space, announced this week that it’s raised 2.5 billion yuan ($340 million) in total financing to date this year. The announcement comes as geopolitical tensions between the U.S. and China ramp up and show no signs of simmering down.

- U.S. chokes off China’s AI chip supply: On the subject of geopolitical tensions, the Biden administration this week announced a slew of measures to curb Beijing’s military ambitions, including a further restriction on Nvidia’s AI chip shipments to China. A800 and H800, the two AI chips Nvidia designed specifically to continue shipping to China, will be hit by the fresh round of new rules.

- AI reprises of pop songs go viral: Amanda covers a curious trend: TikTok accounts that use AI to make characters like Homer Simpson sing ’90s and ’00s rock songs such as “Smells Like Teen Spirit.” They’re fun and silly on the surface, but there’s a dark undertone to the whole practice, Amanda writes.

More machine learnings

Machine learning models are constantly leading to advances in the biological sciences. AlphaFold and RoseTTAFold were examples of how a stubborn problem (protein folding) could be, in effect, trivialized by the right AI model. Now David Baker (creator of the latter model) and his labmates have expanded the prediction process to include more than just the structure of the relevant chains of amino acids. After all, proteins exist in a soup of other molecules and atoms, and predicting how they’ll interact with stray compounds or elements in the body is essential to understanding their actual shape and activity. RoseTTAFold All-Atom is a big step forward for simulating biological systems.

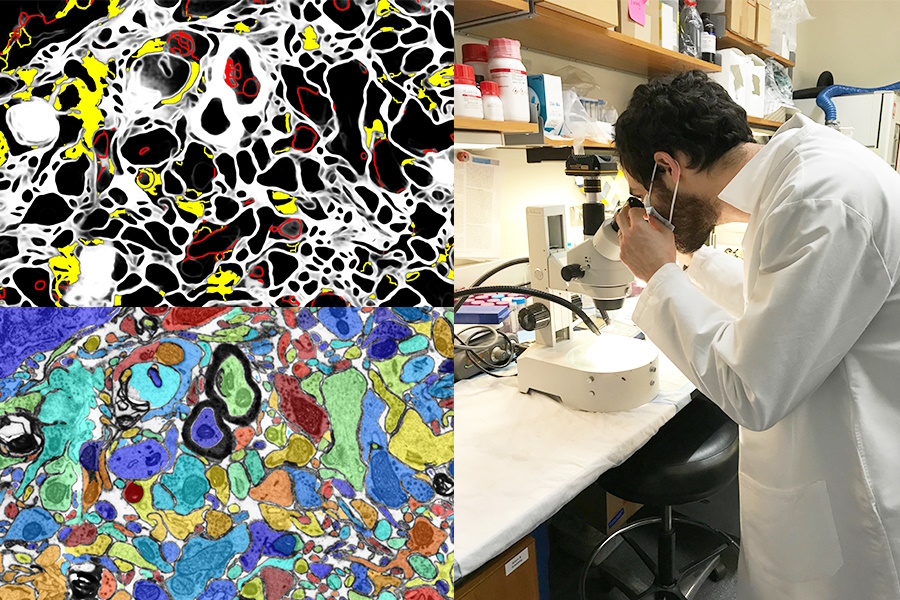

Image Credits: MIT/Harvard University

Having a visual AI enhance lab work or act as a learning tool is also a great opportunity. The SmartEM project from MIT and Harvard put a computer vision system and ML control system inside a scanning electron microscope, which together drive the device to examine a specimen intelligently. It can avoid areas of low importance, focus on interesting or clear ones, and do smart labeling of the resulting image as well.

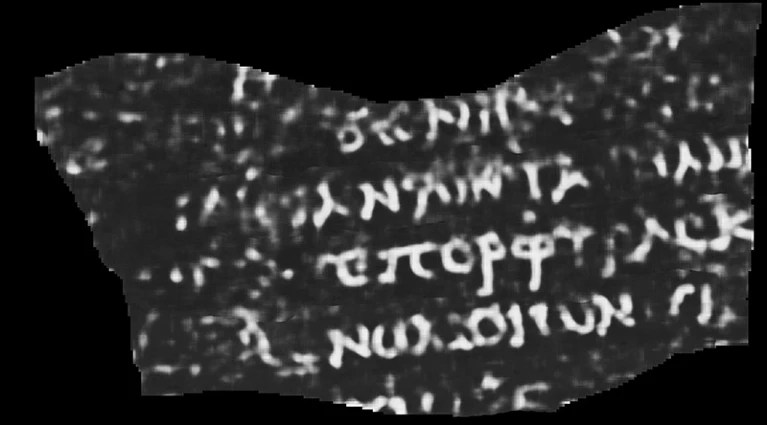

Using AI and other high tech tools for archaeological purposes never gets old (if you will) for me. Whether it’s lidar revealing Mayan cities and highways or filling in the gaps of incomplete ancient Greek texts, it’s always cool to see. And this reconstruction of a scroll thought destroyed in the volcanic eruption that leveled Pompeii is one of the most impressive yet.

ML-interpreted CT scan of a burned, rolled-up papyrus. The visible word reads “Purple.”

University of Nebraska–Lincoln CS student Luke Farritor trained a machine learning model to amplify the subtle patterns on scans of the charred, rolled-up papyrus that are invisible to the naked eye. His was one of many methods being attempted in an international challenge to read the scrolls, and it could be refined to perform valuable academic work. Lots more info at Nature here. What was in the scroll, you ask? So far, just the word “purple,” but even that has the papyrologists losing their minds.

Another academic victory for AI is in this system for vetting and suggesting citations on Wikipedia. Of course, the AI doesn’t know what’s true or factual, but it can gather from context what a high-quality Wikipedia article and citation look like, and scrape the site and web for alternatives. No one is suggesting we let the robots run the famously user-driven online encyclopedia, but it could help shore up articles for which citations are lacking or editors are unsure.

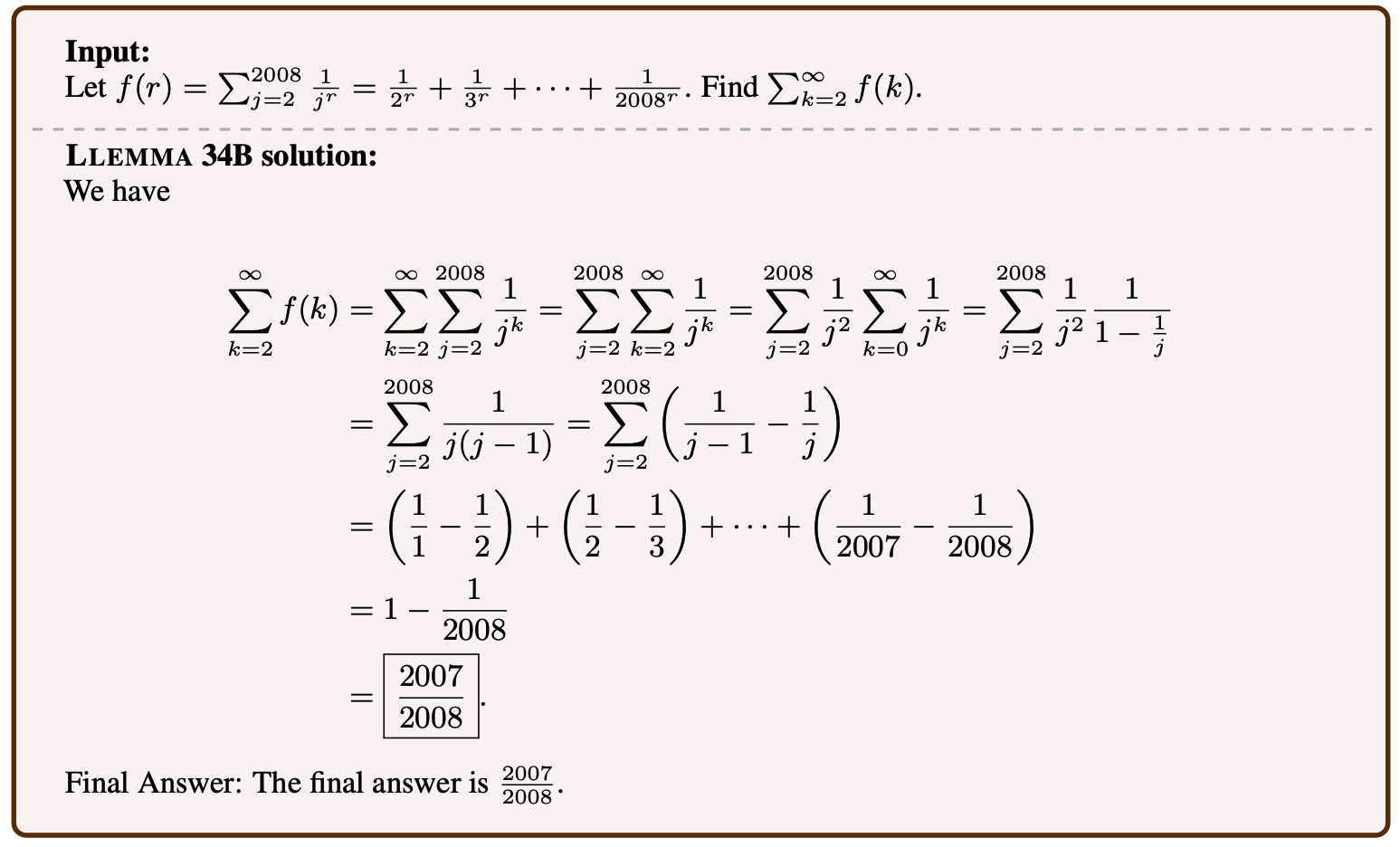

Example of a mathematical problem being solved by Llemma.

Language models can be fine-tuned on many topics, and higher math is surprisingly one of them. Llemma is a new open model trained on mathematical proofs and papers that can solve fairly complex problems. It’s not the first (Google Research’s Minerva is working on similar capabilities), but its success on similar problem sets and improved efficiency show that “open” models (for whatever the term is worth) are competitive in this space. It’s not desirable that certain types of AI should be dominated by private models, so replication of their capabilities in the open is valuable even if it doesn’t break new ground.

Troublingly, Meta is progressing in its own academic work towards reading minds, but as with most studies in this area, the way it’s presented rather oversells the process. In a paper called “Brain decoding: Toward real-time reconstruction of visual perception,” it may seem a bit like they’re straight up reading minds.

Images shown to people, left, and generative AI guesses at what the person is perceiving, right.

But it’s a little more indirect than that. By studying what a high-frequency brain scan looks like when people are looking at images of certain things, like horses or airplanes, the researchers are able to then perform reconstructions in near real time of what they think the person is thinking of or looking at. Still, it seems likely that generative AI has a part to play here in how it can create a visual expression of something even if it doesn’t correspond directly to scans.

Should we be using AI to read people’s minds, though, if it ever becomes possible? Ask DeepMind (see above).

Last up, a project at LAION that’s more aspirational than concrete right now, but laudable all the same. Multilingual Contrastive Learning for Audio Representation Acquisition, or CLARA, aims to give language models a better understanding of the nuances of human speech. You know how you can pick up on sarcasm or a fib from sub-verbal signals like tone or pronunciation? Machines are pretty bad at that, which is bad news for any human-AI interaction. CLARA uses a library of audio and text in multiple languages to identify some emotional states and other non-verbal “speech understanding” cues.