In today's digital world, Artificial Intelligence (AI) and Machine Learning (ML) models are used everywhere, from face detection in electronic devices to real-time language translation. Efficient, fast, and cost-effective training processes are crucial for scaling these models.

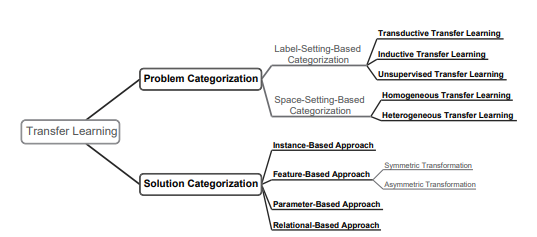

Transfer learning is a key technique used by researchers and ML scientists to improve efficiency and reduce costs in deep learning and Natural Language Processing (NLP).

In this blog, we'll explore the concept of transfer learning, how it works technically, and provide a step-by-step guide to implementing it in Python.

About us: Viso Suite is our end-to-end computer vision infrastructure for enterprises. The powerful solution enables teams to develop, deploy, manage, and secure computer vision applications in one place.

What is Transfer Learning?

As the name suggests, this technique involves transferring the learnings of one trained machine learning model to another, in the form of neural network weights. This gives businesses a significant edge, as they don't need to train a model from scratch. For example, to train a model to translate German movie subtitles to English, we would usually have to train it on thousands of German and English text corpora so that it can understand and translate.

However, there are open-source models like German-BERT that are already trained on huge data corpora, with many parameters. Through transfer learning, the representations learned by German-BERT are reused, and only additional subtitle data needs to be provided. Let us understand how this works.

To understand how transfer learning works, it is essential to understand the architecture of deep neural networks. Neural networks are the most widely used algorithm for building ML models for many advanced tasks, as they have shown higher accuracy than traditional algorithms.

Understanding Neural Networks

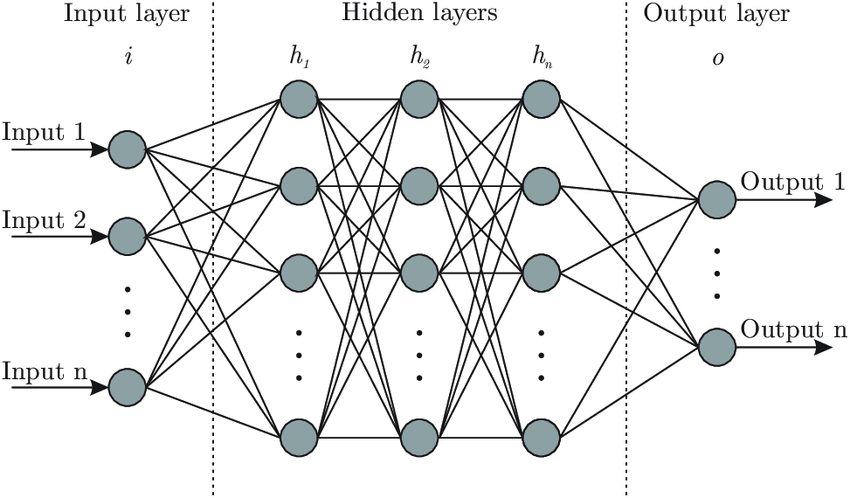

Any neural network architecture consists of three main components: the input layer, one or more hidden layers, and the output layer.

The hidden layers have neurons, which are initialized with random weights at the start. During training, we supply the input variables to the input layer. Then the layers of the neural network extract features, learn data patterns, and update their weights. At the end of training, all units will have learned their weights and can make predictions.
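To make the structure above concrete, here is a minimal sketch of a forward pass through a tiny network in plain Python. The layer sizes, the sigmoid activation, and the helper names (`init_layer`, `forward`) are assumptions chosen for illustration; biases and the training step are left out to keep it short.

```python
import math
import random

def sigmoid(z):
    """Squash a value into the range (0, 1)."""
    return 1.0 / (1.0 + math.exp(-z))

def init_layer(n_inputs, n_neurons, rng):
    """Create one layer: a list of neurons, each with random weights."""
    return [[rng.uniform(-1.0, 1.0) for _ in range(n_inputs)]
            for _ in range(n_neurons)]

def forward(x, layers):
    """Pass the input through every layer in turn."""
    activations = x
    for weights in layers:
        activations = [
            sigmoid(sum(w * a for w, a in zip(neuron, activations)))
            for neuron in weights
        ]
    return activations

rng = random.Random(0)          # fixed seed so the run is repeatable
layers = [
    init_layer(3, 4, rng),      # hidden layer: 3 inputs -> 4 neurons
    init_layer(4, 2, rng),      # output layer: 4 inputs -> 2 outputs
]
output = forward([0.5, -0.2, 0.1], layers)
print(output)
```

With random weights the two outputs are meaningless; training is what turns these weights into something useful.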

Transfer Learning in Neural Networks

The main hurdle in implementing neural networks is the long training time and the computational costs incurred. The process would be much quicker if we could retain the learned weights of a model (also called 'pre-trained weights') and re-use them for a similar use case. This is where transfer learning comes into play.

In transfer learning, we initialize the neurons with pre-trained weights, rather than random ones. The base model that supplies the learned weights is called the 'pre-trained model', and it usually has a large number of parameters.

There are many such pre-trained models available as open source, and also some that require paid subscriptions. Some common free-to-use pre-trained models include BERT, ResNet, and YOLO.

Why do we need transfer learning?

Transfer learning can help solve many challenges faced when building ML models in practice. Some of them include:

- Reduced need for data: Many man-hours needed to collect high-quality data can be saved through transfer learning. We can also avoid much of the effort required to create labels manually. We can take a pre-trained model and fine-tune it on small datasets.

- Domain Adaptation: Consider a domain in a niche area, for example analyzing financial reports and summarizing the key points. If we train the model from scratch, it will take a lot of time to learn the basics. With a pre-trained model, this is already taken care of. We can use this time to fine-tune it on domain-specific terms (KPIs, etc.).

- Lower Costs & Resources: Every ML team wants to build an affordable and reliable model. Teams can't afford to burn cash on computational resources for every task. With transfer learning, the memory and GPU clusters needed are reduced, lowering storage and cloud computation costs.

- Avoid Overfitting with limited data: In many domains, like credit risk and healthcare, data is often limited for small-scale companies or startups. In such cases, the model often overfits the training sample, which leads to poor generalization to unseen data. This problem can be mitigated by leveraging transfer learning.

- Supports Incremental Learning: Model performance can be improved iteratively by fine-tuning it to cover the gaps. This can be very helpful when the model is running in real time, because the data distribution may change over time or due to seasonal spikes.

- Promotes R&D: Transfer learning accelerates R&D in ML as it provides a base to start from. Researchers can focus on specific aspects of a problem without restarting from scratch. Examples include using LLMs to produce summaries from varying perspectives.

How does transfer learning work?

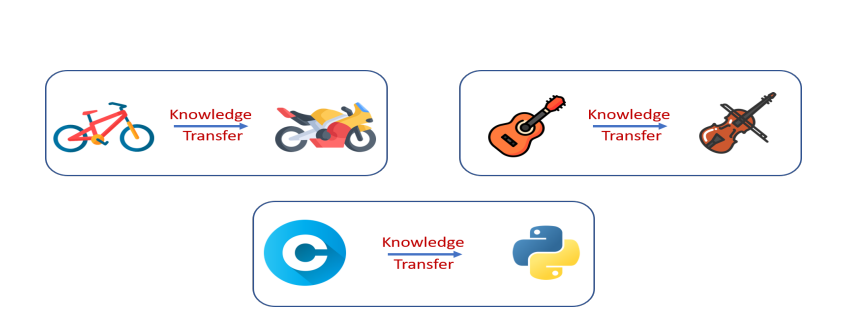

Let us understand how transfer learning works with a practical example. Consider a scenario in which we are analyzing traffic surveillance footage and want to find out which vehicles are the most common. For this, we would need a deep learning model that can classify a given input image into a vehicle category.

The vehicle categories could be 'Sedan', 'SUV', 'Truck', 'Two-wheeler', 'Commercial van', etc. Now, let's see how to build a model for this quickly using transfer learning.

Step 1: Choose a Pre-trained Model

First, we choose the base model, whose pre-trained weights will be leveraged. There are many open-source and paid options available for pre-trained models. Hugging Face is a great platform for finding open-source models, and OpenAI is one of the best paid options.

The base model should be trained on the same data type as the current dataset. If we are working with images, then we need to look for a model trained on many images, like ResNet or VGG.

To build an NLP model, for example for text summarization, we can choose a language model like BERT that can parse human text. Next, we need to look for models that were trained for objectives similar to the current task. For example, if you have a text-based sentiment classification task at hand, choosing a model trained for text classification can be helpful.

For our task, we will be using the VGG16 pre-trained model. VGG16 has a CNN (Convolutional Neural Network) based architecture with 16 weight layers. It is trained on the ImageNet dataset, which has many images across categories like birds, fruits, cars, and animals. Since it is trained on a vast dataset, it can quickly pick up low-level feature representations of an input image, such as edges and shapes.

Step 2: Pre-process your fine-tuning data

The base model (pre-trained model) is built to accept inputs in a specific format, depending on the architecture. The fine-tuning dataset needs to be converted into the same format so that it is compatible. For example, language models usually take input text in the form of tokens or vector embeddings, whereas image recognition models accept inputs as pixel arrays or PyTorch tensors.

For our task, VGG16 requires input images of 224 x 224 pixels, so we resize the images in our custom training data uniformly. Let's also normalize the images, either to a standard 0 to 1 range or using the mean and standard deviation of each channel. This helps provide better stability during model training.
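As a rough illustration of the normalization step, the sketch below applies both options to a single RGB pixel in plain Python: first scaling to the 0 to 1 range, then standardizing each channel. The mean and standard deviation values are the ones used in the PyTorch transforms later in this article; the function name `normalize_pixel` is made up for this example.

```python
# Per-channel statistics, matching the Normalize() call used later on
IMAGENET_MEAN = [0.485, 0.456, 0.406]
IMAGENET_STD = [0.229, 0.224, 0.225]

def normalize_pixel(rgb):
    """Scale 8-bit RGB values to 0-1, then standardize each channel."""
    scaled = [channel / 255.0 for channel in rgb]
    return [
        (value - mean) / std
        for value, mean, std in zip(scaled, IMAGENET_MEAN, IMAGENET_STD)
    ]

black = normalize_pixel([0, 0, 0])
white = normalize_pixel([255, 255, 255])
print(black)
print(white)
```

After this step, typical pixel values are centered near zero for every channel, which is the input range the pre-trained weights were fitted to.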

Data augmentation techniques can be used to increase the size of the fine-tuning data or add more variation to the sample. A few common techniques for images include creating crop variations or performing flips and rotations. Note that pre-processing is the stage where we can make sure the model will be robust after training, by cleaning up noise and ensuring diversity in the sample.
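The augmentations mentioned above are simple geometric operations. Here is a small sketch on a 3 x 3 "image" represented as nested lists; the helper names are hypothetical, and in practice a library such as torchvision would do this on real image tensors.

```python
def horizontal_flip(image):
    """Mirror the image left to right."""
    return [row[::-1] for row in image]

def rotate_90_clockwise(image):
    """Rotate the image a quarter turn clockwise."""
    return [list(row) for row in zip(*image[::-1])]

def center_crop(image, size):
    """Keep a size x size window around the center."""
    top = (len(image) - size) // 2
    left = (len(image[0]) - size) // 2
    return [row[left:left + size] for row in image[top:top + size]]

image = [
    [1, 2, 3],
    [4, 5, 6],
    [7, 8, 9],
]

flipped = horizontal_flip(image)
rotated = rotate_90_clockwise(image)
cropped = center_crop(image, 1)
print(flipped, rotated, cropped)
```

Each variant is a new training sample with the same label, which is how augmentation adds variety without new data collection.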

Step 3: Adapting the model

Next, we need to train the model on our custom dataset, on top of the base model. There are two ways to approach this: feature extraction and fine-tuning.

Feature extraction: In this approach, we take the pre-trained model without any modifications and use it as a feature extractor. The pre-trained model extracts features from the input based on its learned weights. Then, we build a new classification model that takes these extracted features as input. It is a cost-effective method, as we don't make any changes to the layers of the pre-trained model.

Fine-tuning: In this method, along with the additional classifier layer on top, we also re-train a few upper layers of the base model. The weights of the earlier layers are kept frozen so that the general features they have learned are not lost. Fine-tuning usually provides better accuracy, as more of the model gets trained on the custom data.

In cases where the domain data has its own nuances, like medical images and financial risk analysis, fine-tuning is the better choice. The downside of fine-tuning is its comparatively higher cost than feature extraction.
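The difference between the two approaches comes down to which parameters are allowed to change. The sketch below shows this in PyTorch with a tiny stand-in network rather than a real pre-trained model; `base`, `head`, and `count_trainable` are hypothetical names, and the layer sizes are arbitrary.

```python
import torch.nn as nn

# Stand-in for a pre-trained base model (tiny, for illustration only)
base = nn.Sequential(
    nn.Linear(8, 16), nn.ReLU(),
    nn.Linear(16, 16), nn.ReLU(),
)
# New classifier layer added on top, here for 5 classes
head = nn.Linear(16, 5)

def count_trainable(*modules):
    """Count parameters that will receive gradient updates."""
    return sum(
        p.numel()
        for module in modules
        for p in module.parameters()
        if p.requires_grad
    )

# Feature extraction: freeze every layer of the base model
for param in base.parameters():
    param.requires_grad = False
feature_extraction_params = count_trainable(base, head)

# Fine-tuning: additionally unfreeze the last Linear layer of the base
for param in base[2].parameters():
    param.requires_grad = True
fine_tuning_params = count_trainable(base, head)

print(feature_extraction_params, fine_tuning_params)
```

Fine-tuning trains more parameters, which is where both its extra accuracy and its extra cost come from.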

We can choose between these approaches based on a few key factors: domain requirements and the sensitivity of the task, affordability, and the availability of enough data for fine-tuning.

For our task of vehicle image classification, we can go with the feature extraction method, as VGG16 has already been exposed to images of cars and other vehicles. Let us freeze the weights of all pre-trained layers in VGG16. These layers will extract features from the input images we provide.

Step 4: Train on custom data & Evaluate

Based on the choice in the previous step, the model needs to be trained on the new data accordingly. We can tune hyperparameters like the learning rate and batch size for the new classifier layer to get the best results. A learning rate that is too high can make training unstable or stop it from converging, whereas one that is too low makes training slow and wastes resources.
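The effect of the learning rate is easy to see on a toy problem. The sketch below runs plain gradient descent on f(x) = x², which has its minimum at 0. The function, the step count, and the three learning rates are assumptions picked only to show the behavior.

```python
def gradient_descent(lr, steps=20, start=5.0):
    """Minimize f(x) = x**2, whose gradient is 2 * x."""
    x = start
    for _ in range(steps):
        x = x - lr * 2 * x
    return x

too_high = gradient_descent(lr=1.1)      # overshoots further on every step
too_low = gradient_descent(lr=0.001)     # barely moves in 20 steps
reasonable = gradient_descent(lr=0.1)    # gets close to the minimum

print(too_high, too_low, reasonable)
```

Real loss surfaces are far less tidy, so in practice the learning rate is usually found by trying a few values and watching the validation loss.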

We also need to define the loss function that best represents the task at hand. During training, the objective of the model is to minimize the loss function. There are also different optimization algorithms for minimizing the loss, like Stochastic Gradient Descent (SGD), RMSProp (Root Mean Square Propagation), and Adam.
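As one concrete example of a loss function, here is a minimal plain-Python sketch of cross-entropy for a single sample, a common loss for classification. The logits are made-up numbers; the point is only that a confident correct prediction gets a small loss and a confident wrong one gets a large loss.

```python
import math

def softmax(logits):
    """Turn raw scores into probabilities that sum to 1."""
    largest = max(logits)                       # subtract max for stability
    exps = [math.exp(z - largest) for z in logits]
    total = sum(exps)
    return [e / total for e in exps]

def cross_entropy(logits, target_index):
    """Negative log-probability assigned to the true class."""
    return -math.log(softmax(logits)[target_index])

logits = [4.0, 0.5, 0.1]                        # model strongly favors class 0
loss_if_class_0 = cross_entropy(logits, 0)      # true class is 0: small loss
loss_if_class_2 = cross_entropy(logits, 2)      # true class is 2: large loss

print(loss_if_class_0, loss_if_class_2)
```

The optimizer's job is to adjust the weights so that this number, averaged over the training data, goes down.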

Once training is complete, the model can be evaluated on a set of unseen test images. If any images appear in both the training and test samples, the test score will overstate how well the model generalizes.

As our task is an image classification task, we can go with cross-entropy as the loss function. It is a common choice in multi-class classification projects. We can choose the Adam optimizer (Adaptive Moment Estimation), as it adapts the learning rate for each parameter and often converges quickly; SGD with momentum, which the PyTorch example below uses, is another common choice. We can also create a confusion matrix of the test results to see how well the model classifies the different vehicle categories.
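A confusion matrix is just a table of counts: rows are the true classes and columns are the predicted ones. Here is a small plain-Python sketch; the labels and predictions are invented for illustration.

```python
def confusion_matrix(true_labels, predicted_labels, classes):
    """Count predictions: rows = true class, columns = predicted class."""
    index = {name: i for i, name in enumerate(classes)}
    matrix = [[0] * len(classes) for _ in classes]
    for truth, prediction in zip(true_labels, predicted_labels):
        matrix[index[truth]][index[prediction]] += 1
    return matrix

classes = ["Sedan", "SUV", "Truck"]
y_true = ["Sedan", "Sedan", "SUV", "Truck", "SUV", "Truck"]
y_pred = ["Sedan", "SUV", "SUV", "Truck", "SUV", "Sedan"]

cm = confusion_matrix(y_true, y_pred, classes)
for name, row in zip(classes, cm):
    print(name, row)
```

Off-diagonal counts show which categories the model mixes up, for example a Sedan predicted as an SUV in the first row.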

Implementing Transfer Learning using PyTorch

First, start by importing the necessary Python packages. PyTorch will be used for building and training the neural network, torchvision will be used to load and preprocess the data, and NumPy will be used for numerical operations.

# Import packages and modules
import torch
import torch.nn as nn
import torch.optim as optim
from torch.optim import lr_scheduler
import numpy as np
import torchvision
from torchvision import datasets, models, transforms
import matplotlib.pyplot as plt
import time
import os

Next, define the data transformations and load the dataset. We use transformations such as resizing, cropping, and normalization. This section also loads the training and validation sets, which are expected in separate 'train' and 'val' subfolders of the data directory.

# Define data transforms
data_transforms = {
    'train': transforms.Compose([
        transforms.RandomResizedCrop(224),
        transforms.RandomHorizontalFlip(),
        transforms.ToTensor(),
        transforms.Normalize([0.485, 0.456, 0.406], [0.229, 0.224, 0.225])
    ]),
    'val': transforms.Compose([
        transforms.Resize(256),
        transforms.CenterCrop(224),
        transforms.ToTensor(),
        transforms.Normalize([0.485, 0.456, 0.406], [0.229, 0.224, 0.225])
    ]),
}

# Set data directory (must contain 'train' and 'val' subfolders)
data_dir = "path/to/your/dataset"

# Load dataset
image_datasets = {x: datasets.ImageFolder(os.path.join(data_dir, x), data_transforms[x])
                  for x in ['train', 'val']}

# Create dataloaders
dataloaders = {x: torch.utils.data.DataLoader(image_datasets[x], batch_size=4,
                                              shuffle=True, num_workers=4)
               for x in ['train', 'val']}

# Get dataset sizes and class names
dataset_sizes = {x: len(image_datasets[x]) for x in ['train', 'val']}
class_names = image_datasets['train'].classes
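The loading code above relies on torchvision's `ImageFolder`, which expects one subfolder per class and assigns class indices in sorted folder-name order. The following standard-library sketch builds such a layout in a temporary directory to show what the data directory should look like; the class folder names are made up, and `make_layout` and `list_classes` are hypothetical helpers, not part of torchvision.

```python
import os
import tempfile

def make_layout(root, splits, classes):
    """Create root/<split>/<class>/ folders, e.g. root/train/sedan/."""
    for split in splits:
        for cls in classes:
            os.makedirs(os.path.join(root, split, cls), exist_ok=True)

def list_classes(split_dir):
    """List class folders in sorted order, as ImageFolder orders classes."""
    return sorted(
        name for name in os.listdir(split_dir)
        if os.path.isdir(os.path.join(split_dir, name))
    )

with tempfile.TemporaryDirectory() as root:
    make_layout(root, ["train", "val"], ["suv", "sedan", "truck"])
    found = list_classes(os.path.join(root, "train"))
    splits_found = sorted(os.listdir(root))

print(found)
print(splits_found)
```

The images for each vehicle category then go inside the matching class folder, once under `train` and once under `val`.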

Next, we need to load the pre-trained VGG16 model from the torchvision models. We freeze the parameters of the pre-trained layers and replace the final fully connected layer to match the number of classes in our dataset.

# Load the pre-trained base model
# (torchvision releases before 0.13 use models.vgg16(pretrained=True) instead)
model_ft = models.vgg16(weights=models.VGG16_Weights.IMAGENET1K_V1)

# Freeze parameters of pre-trained layers
for param in model_ft.parameters():
    param.requires_grad = False

# Replace the last classifier layer; a newly created layer is trainable by default
num_ftrs = model_ft.classifier[6].in_features
model_ft.classifier[6] = nn.Linear(num_ftrs, len(class_names))

# Define loss function and optimizer (only the new layer is updated)
criterion = nn.CrossEntropyLoss()
optimizer_ft = optim.SGD(model_ft.classifier[6].parameters(), lr=0.001, momentum=0.9)

# Decay LR by a factor of 0.1 every 7 epochs
exp_lr_scheduler = lr_scheduler.StepLR(optimizer_ft, step_size=7, gamma=0.1)
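To see what the step decay above does over a full run, the schedule can be reproduced with simple arithmetic. This is a plain-Python sketch of the same rule (multiply by `gamma` every `step_size` epochs), not the scheduler's actual implementation; `step_lr` is a hypothetical helper.

```python
def step_lr(initial_lr, epoch, step_size=7, gamma=0.1):
    """Learning rate after `epoch` completed epochs of step decay."""
    return initial_lr * gamma ** (epoch // step_size)

# Same settings as the optimizer and scheduler above, over 25 epochs
schedule = [step_lr(0.001, epoch) for epoch in range(25)]

for epoch in (0, 6, 7, 14, 21):
    print(epoch, schedule[epoch])
```

The rate stays at 0.001 for epochs 0 to 6, then drops by a factor of ten at epochs 7, 14, and 21.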

Here's the basic framework to train the model using a loss function, optimizer, and scheduler. Changes can be made as per requirements.

# Use a GPU if one is available, otherwise fall back to the CPU
device = torch.device("cuda" if torch.cuda.is_available() else "cpu")

def train_model(model, criterion, optimizer, scheduler, num_epochs=25):
    since = time.time()

    # Clone the tensors so later training steps cannot overwrite the snapshot
    best_model_wts = {k: v.clone() for k, v in model.state_dict().items()}
    best_acc = 0.0

    for epoch in range(num_epochs):
        print('Epoch {}/{}'.format(epoch, num_epochs - 1))
        print('-' * 10)

        # Each epoch has a training and validation phase
        for phase in ['train', 'val']:
            if phase == 'train':
                model.train()  # Set model to training mode
            else:
                model.eval()   # Set model to evaluate mode

            running_loss = 0.0
            running_corrects = 0

            # Iterate over data
            for inputs, labels in dataloaders[phase]:
                inputs = inputs.to(device)
                labels = labels.to(device)

                # Zero the parameter gradients
                optimizer.zero_grad()

                # Forward pass; gradients are tracked only in the training phase
                with torch.set_grad_enabled(phase == 'train'):
                    outputs = model(inputs)
                    _, preds = torch.max(outputs, 1)
                    loss = criterion(outputs, labels)

                    # Backward + optimize only if in training phase
                    if phase == 'train':
                        loss.backward()
                        optimizer.step()

                # Statistics
                running_loss += loss.item() * inputs.size(0)
                running_corrects += torch.sum(preds == labels.data)

            if phase == 'train':
                scheduler.step()

            epoch_loss = running_loss / dataset_sizes[phase]
            epoch_acc = running_corrects.double() / dataset_sizes[phase]

            print('{} Loss: {:.4f} Acc: {:.4f}'.format(phase, epoch_loss, epoch_acc))

            # Keep a copy of the best weights seen so far
            if phase == 'val' and epoch_acc > best_acc:
                best_acc = epoch_acc
                best_model_wts = {k: v.clone() for k, v in model.state_dict().items()}

        print()

    time_elapsed = time.time() - since
    print('Training complete in {:.0f}m {:.0f}s'.format(
        time_elapsed // 60, time_elapsed % 60))
    print('Best val Acc: {:.4f}'.format(best_acc))

    # Load best model weights
    model.load_state_dict(best_model_wts)
    return model

# Move the model to the chosen device, then train it
model_ft = model_ft.to(device)
model_ft = train_model(model_ft, criterion, optimizer_ft, exp_lr_scheduler, num_epochs=25)

After this, you can calculate metrics like the F1 score or a confusion matrix to evaluate your model. Make sure to replace 'path/to/your/dataset' with the actual path to your dataset. Also, you may need to adjust parameters such as the batch size, learning rate, and number of epochs based on your specific training dataset and hardware capabilities.
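For reference, the F1 score for one class combines precision and recall into a single number. Below is a minimal plain-Python sketch with invented labels; in a real project the predictions would come from running the trained model on the validation or test set, and a library such as scikit-learn could compute the same metric.

```python
def f1_for_class(true_labels, predicted_labels, cls):
    """F1 score for one class, from true/false positives and negatives."""
    pairs = list(zip(true_labels, predicted_labels))
    tp = sum(1 for t, p in pairs if t == cls and p == cls)
    fp = sum(1 for t, p in pairs if t != cls and p == cls)
    fn = sum(1 for t, p in pairs if t == cls and p != cls)
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    if precision + recall == 0:
        return 0.0
    return 2 * precision * recall / (precision + recall)

classes = ["Sedan", "SUV", "Truck"]
y_true = ["Sedan", "Sedan", "SUV", "Truck", "SUV", "Truck"]
y_pred = ["Sedan", "SUV", "SUV", "Truck", "SUV", "Sedan"]

scores = {cls: f1_for_class(y_true, y_pred, cls) for cls in classes}
macro_f1 = sum(scores.values()) / len(scores)   # unweighted mean over classes

print(scores)
print(macro_f1)
```

Per-class scores make it easier to spot a category the model handles poorly, which a single accuracy number can hide.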

Practical Applications of Transfer Learning

- Medical Diagnosis: We can build diagnostic models even with small amounts of labeled medical data by using models pre-trained on medical images.

- Wide range of Chatbots: With pre-trained language models like BERT and GPT, any business can customize a model to its needs. We can build chatbots fine-tuned for taking appointments in hospitals, answering order queries on an e-commerce website, and so on. The time taken to develop these chatbots and bring them to market has decreased with transfer learning.

- Financial Forecasting: Transfer learning improves financial forecasting models by leveraging neural networks pre-trained on similar economic data. This approach can accelerate model convergence and improve accuracy.

- Uses in NLP: NLP tasks benefit hugely from transfer learning. A model trained for sentiment analysis on social media posts can be adapted to analyze customer reviews, even though the language used might be different.

Conclusion

Overall, transfer learning shows a lot of promise in the fields of deep learning and NLP. However, we should also consider its current limitations. The chosen model may inherit biases from the source data of the pre-trained model.

ML teams need to check for potential biases and mitigate them before deployment. The team should also continuously monitor the model or set up alert systems to catch any data distribution drift.
