Since Ian Goodfellow offers machines the present of creativeness by creating a strong AI idea GANs, researchers begin to enhance the technology pictures each in the case of constancy and variety. But a lot of the work centered on bettering the discriminator, and the mills proceed to function as black bins till researchers from NVIDIA AI launched StyleGAN from the paper A Type-Based mostly Generator Structure for Generative Adversarial Networks, which relies on ProGAN from the paper Progressive Rising of GANs for Improved High quality, Stability, and Variation.

This text is about among the best GANs right this moment, StyleGAN, We’ll break down its parts and perceive what’s made it beat most GANs out of the water on quantitative and qualitative analysis metrics, each in the case of constancy and variety. One placing factor is that styleGAN can really change finer grain elements of the outputting picture, for instance, if you wish to generate faces you possibly can add some noise to have a wisp of hair tucked again, or falling over.

StyleGAN Overview

On this part, we’ll find out about StyleGAN’s comparatively new structure that is thought-about an inflection level enchancment in GAN, notably in its capability to generate extraordinarily life like pictures.

We’ll begin by going over StyleGAN, major targets, then we’ll speak about what the model in StyleGAN means, and eventually, we’ll get an introduction to its structure in particular person parts.

StyleGAN targets

- Produce high-quality, high-resolution pictures.

- Better variety of pictures within the output.

- Elevated management over picture options. And this may be by including options like hats or sun shades in the case of producing faces, or mixing types from two completely different generated pictures collectively

Type in StyleGANs

The StyleGAN generator views a picture as a set of “types,” the place every model regulates the consequences on a selected scale. On the subject of producing faces:

- Coarse types management the consequences of a pose, hair, and face form.

- Middles types management the consequences of facial options, and eyes.

- Effective types management the consequences of colour schemes.

Important parts of StyleGAN

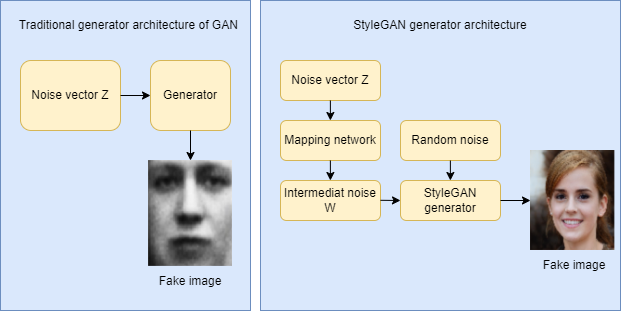

Now let’s examine how the StyleGAN generator differs from a standard GAN generator that we is likely to be extra acquainted with.

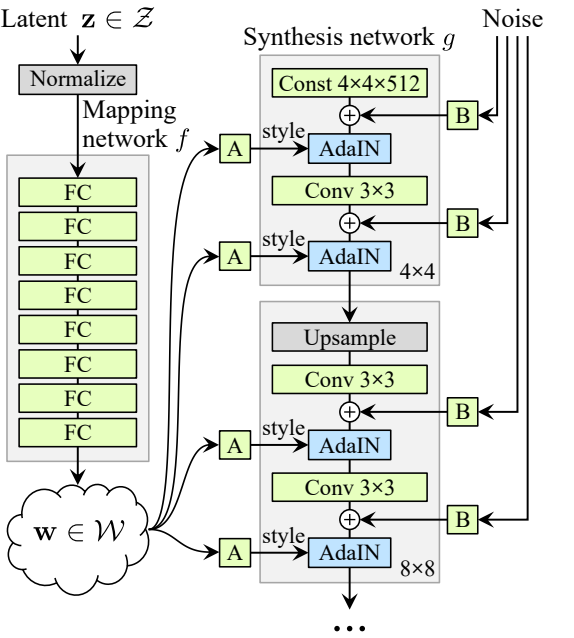

In a standard GAN generator, we take a noise vector (let’s identify it z) into the generator and the generator then outputs a picture. Now in StyleGAN, as a substitute of feeding the noise vector z straight into the generator, it goes via a mapping community to get an intermediate noise vector (let’s identify it W) and extract types from it. That then will get injected via an operation referred to as adaptive occasion normalization(AdaIN for brief) into the StyleGAN generator a number of occasions to provide a pretend picture. And likewise there’s an additional random noise that is handed in so as to add some options to the pretend picture (akin to shifting a wisp of hair in several methods).

The ultimate essential part of StyleGAN is progressive rising. Which slowly grows the picture decision being generated by the generator and evaluated by the discriminator over the method of coaching. And progressive rising originated with ProGAN.

So this was only a high-level introduction to StyleGAN, now let’s get dive deeper into every of the StyleGAN parts (Progressive rising, Noice mapping community, and adaptive occasion normalization) and the way they actually work

Progressive rising

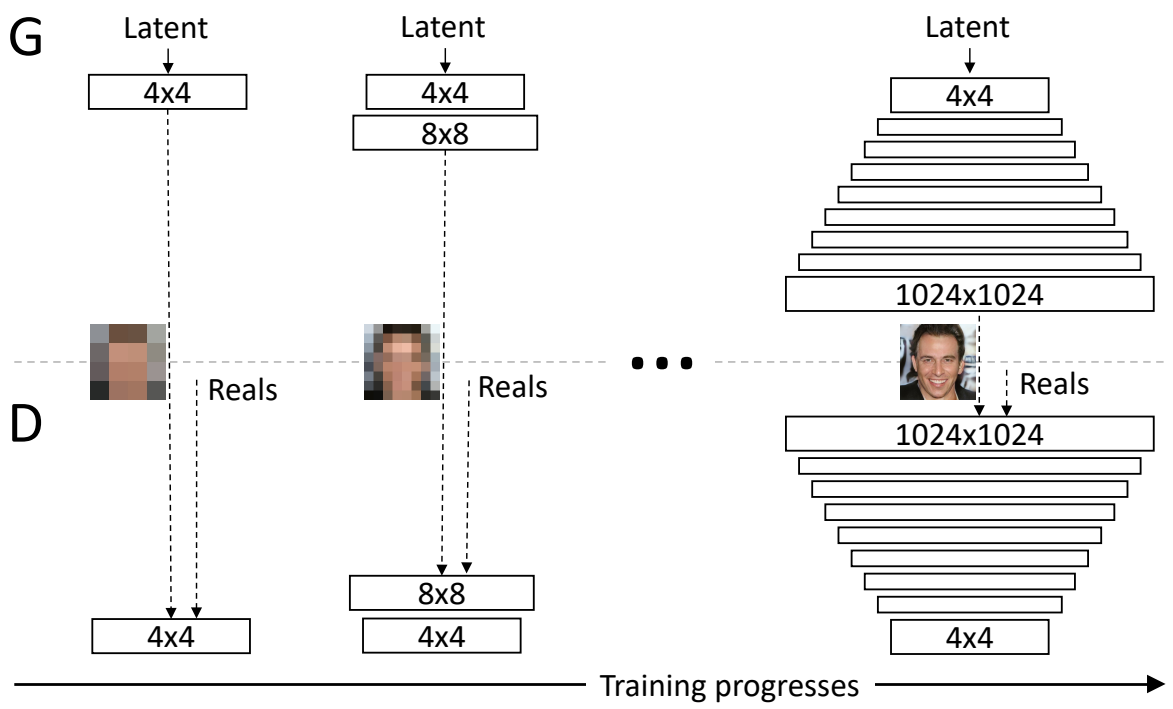

In conventional GANs we ask the generator to generate instantly a set decision like 256 by 256. If you consider it is a sort of a difficult job to straight output high-quality pictures.

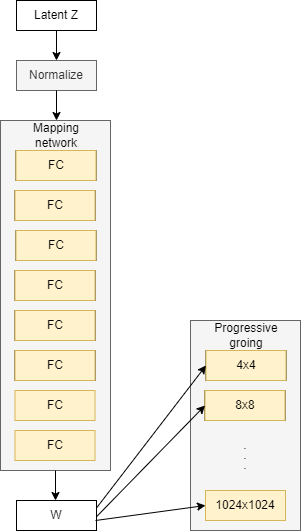

For progressive rising we first ask the generator to output a really low-resolution picture like 4 by 4, and we practice the discriminator to additionally be capable of distinguish on the identical decision, after which when the generator succeded with this job we up the extent and we ask it to output the double of the decision (eight by eight), and so forth till we attain a very excessive decision 1024 by 1024 for instance.

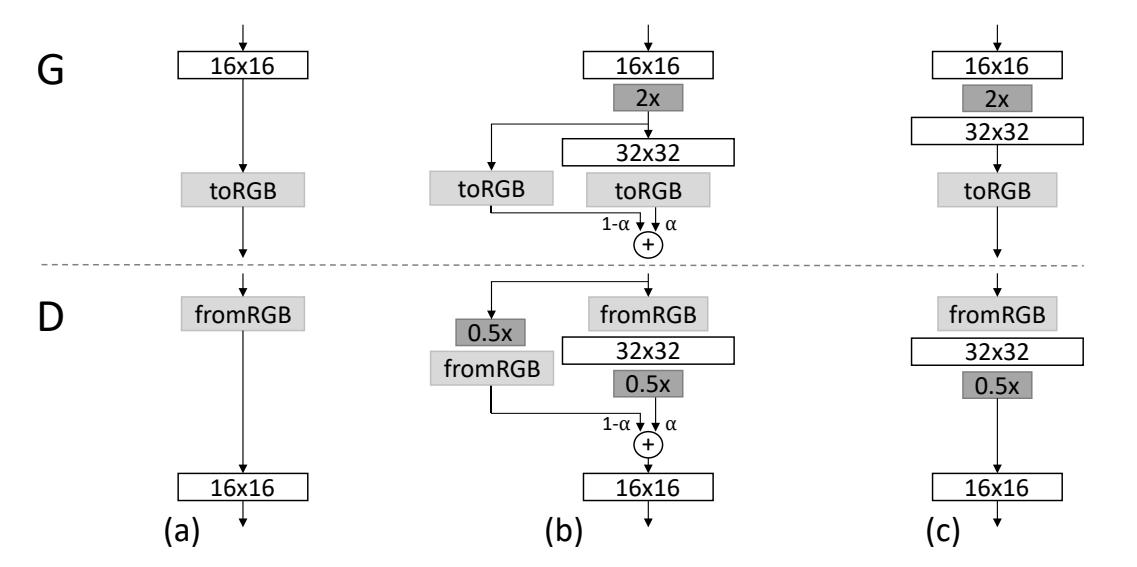

Progressive rising is extra gradual than simple doubling in dimension instantly, after we wish to generate a double-size picture, the brand new layers are easily light in. This fading in is managed by a parameter α, which is linearly interpolated from 0 to 1 over the course of many coaching iterations. As you possibly can see within the determine under, the ultimate generated picture is calculated with this system [(1−α)×UpsampledLayer+(α)×ConvLayer]

Noise Mapping Community

Now we’ll be taught concerning the Noise Mapping Community, which is a novel part of StyleGAN and helps to manage types. First, we’ll check out the construction of the noise mapping community. Then the the explanation why it exists, and eventually the place its output the intermediate vector really goes.

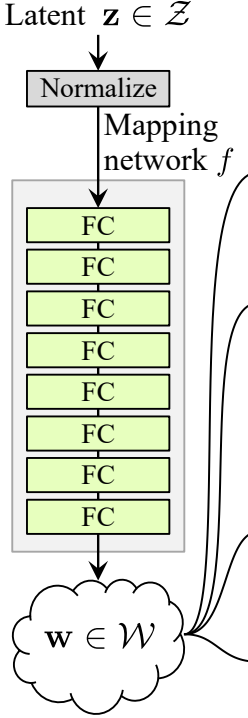

The noise mapping community really takes the noise vector Z and maps it into an intermediate noise vector W. And this noise mapping community consists of eight totally linked layers with activations in between, also called a multilayer perceptron or MLP (The authors discovered that rising the depth of the mapping community tends to make the coaching unstable). So it is a fairly easy neural community that takes the Z noise vector, which is 512 in dimension. And maps it into W intermediate noise issue, which continues to be 512 in dimension, so it simply adjustments the values.

The motivation behind that is that mapping the noise vector will really get us a extra disentangled illustration. In conventional GANs when the noise vector Z goes into the generator. The place we modify considered one of these Z vector values we will really change a number of completely different options in our output. And this isn’t what the authors of StyleGANs need, as a result of considered one of their important targets is to extend management over picture options, so that they give you the Noise Mapping Community that permits for lots of fine-grained management or feature-level management, and due to that we will now, for instance, change the eyes of a generated particular person, add glasses, equipment, and far more issues.

Now let’s uncover the place the noise mapping community really goes. So we see earlier than progressive rising, the place the output begins from low-resolution and doubles in dimension till attain the decision that we wish. And the noise mapping community injects into completely different blocks that progressively develop.

Adaptive Occasion Normalization (AdaIN)

Now we’ll take a look at adaptive occasion normalization or AdaIN for brief and take a bit nearer at how the intermediate noise vector is definitely built-in into the community. So first, We’ll speak about occasion normalization and we’ll evaluate it to batch normalization, which we’re extra acquainted with. Then we’ll speak about what adaptive occasion normalization means, and in addition the place and why AdaIN or Adaptive Occasion Normalization is used.

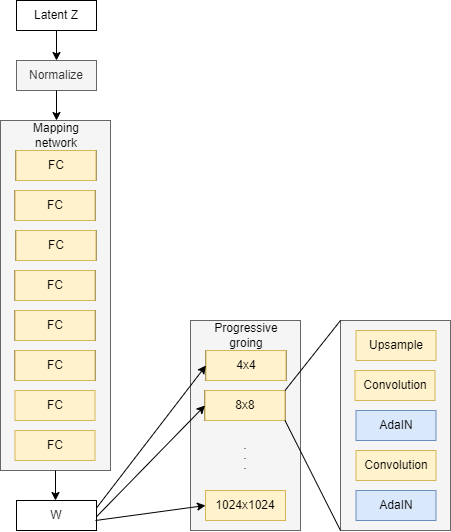

So we already speak about progressive rising, and we additionally be taught concerning the noise mapping community, the place it injects W into completely different blocks that progressively develop. Nicely, in case you are acquainted with ProGAN you realize that in every block we up-sample and do two convolution layers to assist be taught further options, however this isn’t all within the StyleGAN generator, we add AdaIN after every convolutional layer.

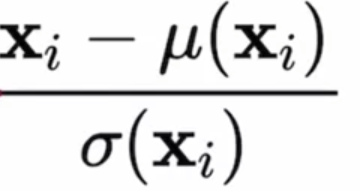

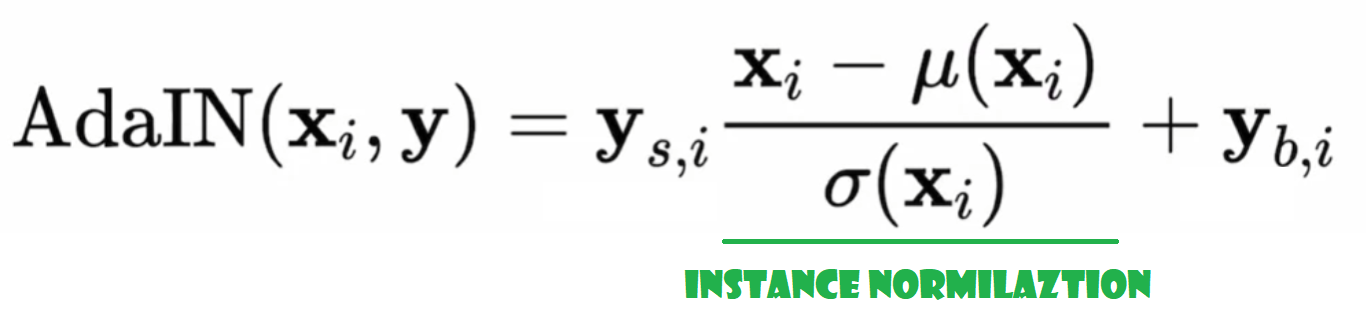

Step one of adaptive occasion normalization(AdaIN) would be the occasion normalization half. if you happen to bear in mind normalization is it takes the outputs from the convolutional layers X and places it at a imply of 0 and a normal deviation of 1. However that is not it, as a result of it is really not primarily based on the batch essentially, which we is likely to be extra acquainted with. The place batch norm we glance throughout the peak and width of the picture, we take a look at one channel, so amongst RGB, we solely take a look at R for instance, and we take a look at all examples within the mini-batch. After which, we get the imply and normal deviation primarily based on one channel in a single batch. After which we additionally do it for the subsequent batch. However occasion normalization is slightly bit completely different. we really solely take a look at one instance or one occasion(an instance is also called an occasion). So if we had a picture with channels RGB, we solely take a look at B for instance and get the imply and normal deviation solely from that blue channel. Nothing else, no further pictures in any respect, simply getting the statistics from simply that one channel, one occasion. And normalizing these values primarily based on their imply and normal deviation. The equation under represents that.

the place:

Xi: Occasion i from the outputs from the convolutional layers X.

µ(Xi): imply of occasion Xi.

𝜎(Xi): Customary deviation of occasion Xi.

So that is the occasion normalization half. And the place the adaptive half is available in is to use adaptive types to the normalized set of values. And the occasion normalization most likely makes slightly bit extra sense than nationalization, as a result of it truly is about each single pattern we’re producing, versus essentially the batch.

The adaptive types are coming from the intermediate noise vector W which is inputted into a number of areas of the community. And so adaptive occasion normalization is the place W will are available in, however really circuitously inputting there. As an alternative, it goes via realized parameters, akin to two totally linked layers, and produces two parameters for us. One is ys which stands for scale, and the opposite is yb, which stands for bias, and these statistics are then imported into the AdaIN layers. See the system under.

All of the parts that we see are pretty essential to StyleGAN. Authors did ablation research to a number of of them to know basically how helpful they’re by taking them out and seeing how the mannequin does with out them. They usually discovered that each part is kind of obligatory up.

Type mixing and Stochastic variation

On this part, we’ll find out about controlling coarse and tremendous types with StyleGAN, utilizing two completely different strategies. The primary is model mixing for elevated variety throughout coaching and inference, and that is mixing two completely different noise vectors that get inputted into the mannequin. The second is including stochastic noise for added variation in our pictures. Including small finer particulars, akin to the place a wisp of hair grows.

Type mixing

Though W is injected in a number of locations within the community, it would not really must be the identical W every time we will have a number of W’s. We will pattern a Z that goes via the mapping community, we get a W, its related W1, and we injected that into the primary half of the community for instance. Keep in mind that goes in via AdaIN. Then we pattern one other Z, let’s identify it Z2, and that will get us W2, after which we put that into the second half of the community for instance. The switch-off between W1 and W2 can really be at any level, it would not must be precisely the center for half and half the community. This might help us management what variation we like. The later the change, the finer the options that we get from W2. This improves our variety as properly since our mannequin is educated like this, so that’s always mixing completely different types and it will possibly get extra various outputs. The determine under is an instance utilizing generated human faces from StyleGAN.

Stochastic variation

Stochastic variations are used to output completely different generated pictures with one image generated by including an extra noise to the mannequin.

With the intention to try this there are two easy steps:

- Pattern noise from a traditional distribution.

- Concatenate noise to the output of conv layer X earlier than AdaIN.

The determine under is an instance utilizing generated human faces from StyleGAN. The creator of StyleGAN generates two faces on the left(the child on the backside would not look very actual. Not all outputs look tremendous actual) then they use stochastic variations to generate a number of completely different pictures from them, you possibly can see the zoom-in into the particular person’s pair that is generated, it is simply so slight by way of the association of the particular person’s hair.

Outcomes

The photographs generated by StyleGAN have higher variety, they’re high-quality, high-resolution, and look so life like that you’d suppose they’re actual.

Conclusion

On this article, we undergo the StyleGAN paper, which relies on ProGAN (They’ve the identical discriminator structure and completely different generator structure).

The essential blocks of the generator are the progressive rising which basically grows the generated output over time from smaller outputs to bigger outputs. After which we now have the noise mapping community which takes Z. That is sampled from a traditional distribution and places it via eight totally linked layers separated by sigmoids or some sort of activation. And to get the intermediate W noise vector that’s then inputted into each single block of the generator twice. After which we realized about AdaIN, or adaptive occasion normalization, which is used to take W and apply types at numerous factors within the community. We additionally realized about model mixing, which samples completely different Zs to get completely different Ws, which then places completely different Ws at completely different factors within the community. So we will have a W1 within the first half and a W2 within the second half. After which the generated output can be a mixture of the 2 pictures that have been generated by simply W1 or simply W2. And at last, we realized about stochastic noise, which informs small element variations to the output.

Hopefully, it is possible for you to to observe all the steps and get understanding of StyleGAN, and you might be able to sort out the implementation, you’ll find it on this article the place I make a clear, easy, and readable implementation of it to generate some trend.