With the development of Deep Learning (DL), Visual Question Answering (VQA) has become possible. VQA has recently become popular in the computer vision research community as researchers move toward multi-modal problems. VQA is a challenging yet promising multidisciplinary Artificial Intelligence (AI) task that enables a wide range of applications.

In this blog we’ll cover:

- Overview of Visual Question Answering

- The fundamental concepts of VQA

- How a VQA system works

- VQA datasets

- Applications of VQA across various industries

- Recent developments and future challenges

What’s Visual Question Answering (VQA)?

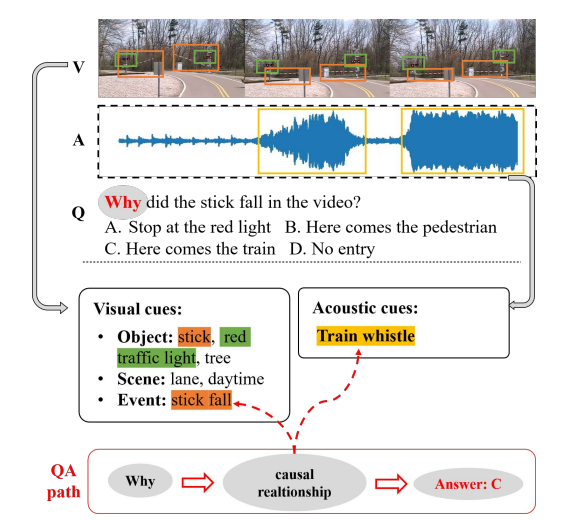

The simplest way to define a VQA system is as a system capable of answering questions about an image. It takes an image and a text-based question as inputs and generates the answer as output. The nature of the problem defines the nature of the input and output of a VQA model.

Inputs may include static images, videos with audio, or even infographics. Questions can be embedded within the visual or asked separately about the visual input. A VQA system can answer multiple-choice questions, yes/no (binary) questions, or open-ended questions about the provided input image. It allows a computer program to understand and respond to visual and textual input in a human-like way.

- Are there any phones near the table?

- Guess the number of burgers on the table.

- Guess the color of the table.

- Read the text in the image, if any.

A visual question answering model would be able to answer the above questions about the image.

Because of its complex nature and because it is a multimodal task (one that requires interpreting and comprehending data from several modalities, such as text, images, and sometimes audio), VQA is considered AI-complete or AI-hard: solving it in full is thought to be as difficult as making computers as intelligent as humans.

Principles Behind VQA

Visual question answering naturally works with the image and text modalities.

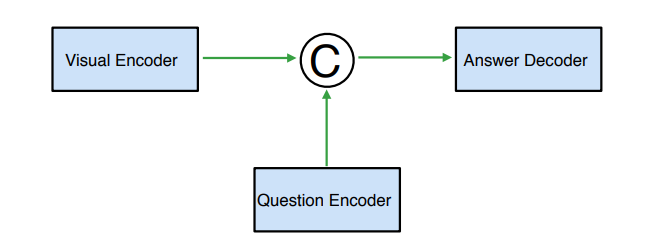

A VQA model has the following components:

- Computer Vision (CV)

CV is used for image processing and for extracting the relevant features. CNNs (Convolutional Neural Networks) are used for image classification and for recognizing objects in an image. OpenCV and Viso Suite are suitable platforms for this approach. Such methods work by capturing local and global visual features from an image.

- Natural Language Processing (NLP)

NLP works in parallel with CV in any VQA model. NLP processes data in the form of natural language text or speech. Long Short-Term Memory (LSTM) networks or Bag-of-Words (BoW) models are mostly used to extract question features. An LSTM models the sequential nature of the question’s language, whereas BoW simply counts words and ignores their order; both convert the question into numerical data the model can process.

- Combining CV and NLP

This is the conjugation (fusion) part of a VQA model. The final answer is derived from this integration of visual and textual features. Different architectures, such as combined CNNs and Recurrent Neural Networks (RNNs), attention mechanisms, and even Multilayer Perceptrons (MLPs), are used for this step.

How Does a VQA System Work?

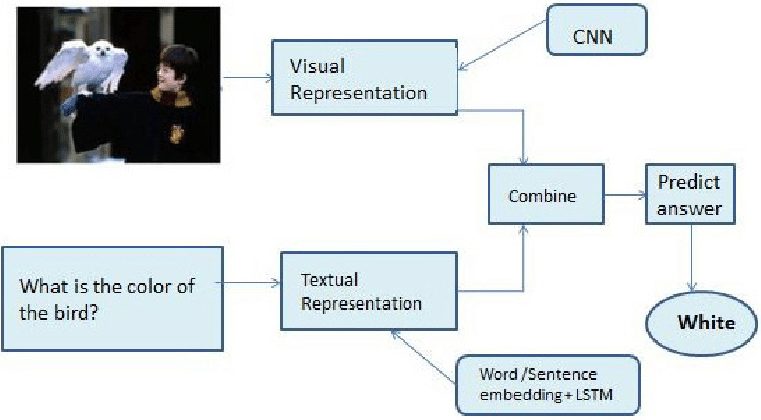

A Visual Question Answering model can handle several kinds of visual input: images, videos, GIFs, sets of images, diagrams, slides, and 360° images. From a broader perspective, a visual question answering system goes through the following phases:

- Image Feature Extraction: Transforming images into a feature representation that can be processed further.

- Question Feature Extraction: Encoding the natural language question to extract the relevant entities and concepts.

- Feature Conjugation: Combining the encoded image and question features.

- Answer Generation: Interpreting the integrated features to generate the final answer.
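To make the four phases concrete, here is a minimal Python sketch that chains them together. Every component is a hypothetical toy stand-in (a two-number image summary instead of a CNN, bag-of-words counts instead of an LSTM, hand-picked weights instead of a trained classifier); only the order of the stages reflects the description above.

```python
from typing import Dict, List

# Hypothetical toy stand-ins for the four VQA phases; a real system would use a
# CNN, an LSTM (or similar) question encoder, and a trained answer classifier.


def extract_image_features(image: List[List[float]]) -> List[float]:
    """Image feature extraction: summarize a grayscale image as [mean, max]."""
    pixels = [p for row in image for p in row]
    return [sum(pixels) / len(pixels), max(pixels)]


def extract_question_features(question: str, vocab: List[str]) -> List[float]:
    """Question feature extraction: bag-of-words counts over a fixed vocabulary."""
    tokens = question.lower().replace("?", "").split()
    return [float(tokens.count(word)) for word in vocab]


def conjugate(image_feats: List[float], question_feats: List[float]) -> List[float]:
    """Feature conjugation: plain concatenation of the two feature vectors."""
    return image_feats + question_feats


def generate_answer(fused: List[float], weights: Dict[str, List[float]]) -> str:
    """Answer generation: return the candidate answer with the highest linear score."""
    scores = {ans: sum(w * x for w, x in zip(ws, fused)) for ans, ws in weights.items()}
    return max(scores, key=scores.get)


vocab = ["bright", "dark"]
# Hand-picked (untrained) weights over [mean, max, count("bright"), count("dark")].
weights = {"yes": [1.0, 0.0, 1.0, -1.0], "no": [-1.0, 0.0, -1.0, 1.0]}
image = [[0.9, 0.8], [1.0, 0.7]]
fused = conjugate(extract_image_features(image),
                  extract_question_features("Is the image bright?", vocab))
print(generate_answer(fused, weights))  # yes
```

In a real model each stage is learned, but the data flow (image features and question features computed separately, fused, then mapped to an answer) is the same.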

Image Feature Extraction

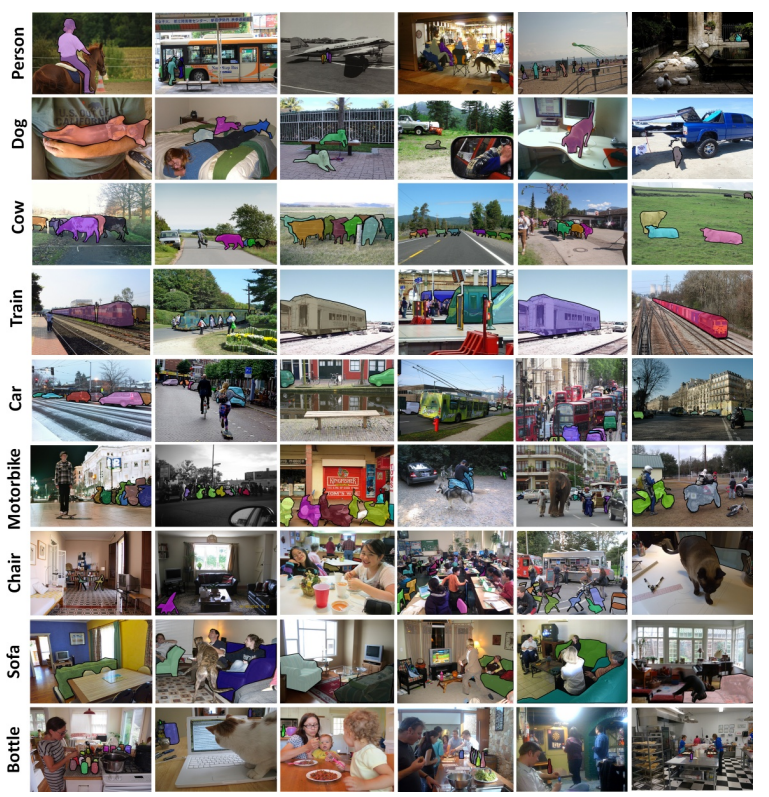

The majority of VQA models use CNNs to process visual imagery. Deep convolutional neural networks take images as input and use them to train a classifier. In VQA, the CNN’s main purpose is image featurization. It relies on a linear mathematical operation called “convolution” in place of the general matrix multiplication used in fully connected layers.
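The sketch below shows what “convolution” means in a CNN layer: each output value is a weighted sum over a small local patch, and the same small kernel slides across the whole image, whereas a fully connected layer multiplies the entire flattened input by one large weight matrix. It is a single-channel, stride-1, no-padding illustration in plain Python, not how frameworks implement it efficiently.

```python
from typing import List

Matrix = List[List[float]]


def conv2d(image: Matrix, kernel: Matrix) -> Matrix:
    """'Valid' 2D convolution as computed in CNN layers.

    Like most deep-learning libraries, this does not flip the kernel
    (strictly speaking it is cross-correlation)."""
    kh, kw = len(kernel), len(kernel[0])
    out_h, out_w = len(image) - kh + 1, len(image[0]) - kw + 1
    output = []
    for i in range(out_h):
        row = []
        for j in range(out_w):
            # Weighted sum of the local patch; the same kernel is reused at every position.
            row.append(sum(kernel[a][b] * image[i + a][j + b]
                           for a in range(kh) for b in range(kw)))
        output.append(row)
    return output


# A 2x2 vertical-edge kernel responds where dark pixels (0) meet bright ones (1).
image = [[0, 0, 1, 1],
         [0, 0, 1, 1],
         [0, 0, 1, 1]]
kernel = [[-1, 1],
          [-1, 1]]
print(conv2d(image, kernel))  # [[0, 2, 0], [0, 2, 0]]
```

A CNN stacks many such layers (with many kernels per layer, learned during training), so later layers respond to increasingly complex patterns built from these simple local responses.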

The depth of the network depends on the architecture, ranging from 16 or 19 weight layers in VGG nets to 152 layers in the deepest standard ResNet. Each layer builds on the outputs of the layers before it to identify more complex patterns.

Several Visual Question Answering papers report that most models used VGGNet for image feature extraction until ResNets (introduced in 2015 and up to 8x deeper than VGG nets) became the common choice around 2017.

Question Feature Extraction

The VQA literature suggests that Long Short-Term Memory (LSTM) networks, a type of Recurrent Neural Network (RNN), are commonly used for question featurization. As the name suggests, RNNs have a looping or recurrent workflow: they process sequential data one step at a time, passing each element through the hidden layer together with the state carried over from the previous step.

This hidden state acts as the network’s short-term memory, letting it remember past inputs and use them for future predictions. The next element in the sequence is then predicted based on the current input and the stored memory.
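A minimal sketch of this recurrence, using a vanilla RNN with scalar weights (real networks use weight matrices and vector hidden states, and an LSTM adds input, forget, and output gates on top of this). The weight values are arbitrary assumptions chosen for illustration.

```python
import math
from typing import List


def rnn_forward(inputs: List[float], w_x: float, w_h: float, b: float) -> List[float]:
    """Vanilla RNN with scalar weights: h_t = tanh(w_x * x_t + w_h * h_{t-1} + b)."""
    h = 0.0  # initial hidden state, i.e. the network's "memory"
    states = []
    for x in inputs:
        # Each new state combines the current input with the previous state.
        h = math.tanh(w_x * x + w_h * h + b)
        states.append(h)
    return states


# Only the first input is non-zero, yet it still shapes the later hidden states.
states = rnn_forward([1.0, 0.0, 0.0], w_x=1.0, w_h=0.5, b=0.0)
print([round(s, 3) for s in states])
```

With `w_h = 0.0` the second state would be exactly zero: the recurrent weight is what carries information from earlier steps forward.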

RNNs suffer from exploding and vanishing gradients when training deep recurrent networks, and LSTMs were designed to overcome the vanishing-gradient problem. Several other methods are also available, such as count-based and frequency-based methods like count vectorization and TF-IDF (Term Frequency-Inverse Document Frequency).
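A small sketch of the count-based and frequency-based representations mentioned above, applied to questions like the ones earlier in this post. It uses the textbook TF-IDF definition (term count divided by question length, times log(N / document frequency)); libraries such as scikit-learn apply smoothing and normalization by default, so their numbers will differ.

```python
import math
from collections import Counter
from typing import Dict, List


def tf_idf(docs: List[str]) -> List[Dict[str, float]]:
    """Textbook TF-IDF: (count / doc length) * log(N / document frequency)."""
    tokenized = [doc.lower().split() for doc in docs]
    n_docs = len(tokenized)
    # Document frequency: in how many questions each word appears at least once.
    df = Counter(word for tokens in tokenized for word in set(tokens))
    weights = []
    for tokens in tokenized:
        counts = Counter(tokens)  # count vectorization: raw term counts
        weights.append({word: (count / len(tokens)) * math.log(n_docs / df[word])
                        for word, count in counts.items()})
    return weights


questions = ["what color is the table",
             "how many burgers are on the table",
             "is there a phone near the table"]
w = tf_idf(questions)
print(w[0]["table"])              # 0.0: "table" appears in every question
print(round(w[1]["burgers"], 3))  # 0.157: a rare word gets a higher weight
```

Words that occur in every question carry no distinguishing information and get zero weight, while rarer words such as "burgers" are emphasized.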

For natural language processing, prediction-based methods such as continuous bag of words (CBOW) and skip-gram are used as well. Pre-trained Word2Vec models are also applicable.

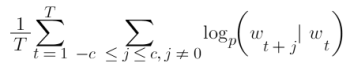

A skip-gram model predicts the words around a given word by maximizing the probability of correctly guessing the context words from a target word. So, for a sequence of words w1, w2, …, wT, the objective of the model is to accurately predict the nearby words.
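Written out, the skip-gram objective as formulated in the original word2vec work is to maximize the average log probability of the context words, where c is the size of the context window around each target word w_t:

$$
\frac{1}{T} \sum_{t=1}^{T} \sum_{\substack{-c \le j \le c \\ j \ne 0}} \log p(w_{t+j} \mid w_t)
$$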

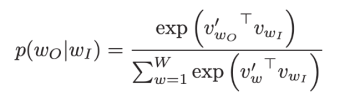

It achieves this by calculating the probability of each word being a context word, given the target word. This calculation uses the softmax function to compare the vector representations of the words.
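The sketch below generates (target, context) training pairs from a sentence and evaluates the full-softmax probability p(context | target) = exp(v'_context · v_target) / Σ_w exp(v'_w · v_target), where v are the “input” (target) vectors and v' are the “output” (context) vectors. The two-dimensional vectors are made-up values for illustration, not trained embeddings; practical word2vec training replaces the full softmax with negative sampling or hierarchical softmax because the sum over the whole vocabulary is expensive.

```python
import math
from typing import Dict, List, Tuple


def skipgram_pairs(tokens: List[str], window: int) -> List[Tuple[str, str]]:
    """All (target, context) pairs with the context at most `window` positions away."""
    pairs = []
    for t, target in enumerate(tokens):
        for j in range(max(0, t - window), min(len(tokens), t + window + 1)):
            if j != t:
                pairs.append((target, tokens[j]))
    return pairs


def context_probability(target: str, context: str,
                        in_vecs: Dict[str, List[float]],
                        out_vecs: Dict[str, List[float]]) -> float:
    """Full softmax over the vocabulary: exp(v'_c . v_t) / sum_w exp(v'_w . v_t)."""
    v_t = in_vecs[target]

    def score(word: str) -> float:
        return sum(a * b for a, b in zip(out_vecs[word], v_t))

    denominator = sum(math.exp(score(w)) for w in out_vecs)
    return math.exp(score(context)) / denominator


tokens = "burgers on the table".split()
print(skipgram_pairs(tokens, window=1))
# [('burgers', 'on'), ('on', 'burgers'), ('on', 'the'), ('the', 'on'), ('the', 'table'), ('table', 'the')]

# Made-up 2-D vectors; a trained model would learn these.
in_vecs = {"burgers": [0.9, 0.1], "on": [0.1, 0.2], "the": [0.0, 0.1], "table": [0.8, 0.3]}
out_vecs = {"burgers": [0.7, 0.2], "on": [0.2, 0.1], "the": [0.1, 0.0], "table": [0.9, 0.4]}
print(context_probability("burgers", "table", in_vecs, out_vecs))
```

Because the denominator sums over every word in the vocabulary, the probabilities for a given target always add up to 1; training adjusts the vectors so that observed context words get high probability.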

Feature Conjugation

The primary difference between VQA methodologies lies in how they combine the image and text features. Some approaches use simple concatenation followed by linear classification. A Bayesian approach based on probabilistic modeling can also be used to handle the different feature vectors.
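A minimal sketch of the simplest approach named above: concatenate the image and question vectors, then score each candidate answer with a linear classifier. The feature values, weights, and two-answer vocabulary are hypothetical; in a trained model the weights are learned.

```python
from typing import List


def concat_fusion(image_feats: List[float], question_feats: List[float]) -> List[float]:
    # Concatenation works even when the two vectors have different lengths.
    return image_feats + question_feats


def linear_scores(x: List[float], weights: List[List[float]], bias: List[float]) -> List[float]:
    """One score per candidate answer: s_k = w_k . x + b_k."""
    return [sum(w * xi for w, xi in zip(row, x)) + b for row, b in zip(weights, bias)]


image_feats = [0.2, 0.8, 0.5]   # hypothetical 3-D image features
question_feats = [1.0, 0.0]     # hypothetical 2-D question features
fused = concat_fusion(image_feats, question_feats)  # length 5
weights = [[1.0, 0.0, 0.0, 1.0, 0.0],   # row for answer "yes"
           [0.0, 1.0, 0.0, 0.0, 1.0]]   # row for answer "no"
print(linear_scores(fused, weights, bias=[0.0, 0.0]))
```

Concatenation keeps all information from both modalities but leaves it to the classifier to discover how image and question features interact.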

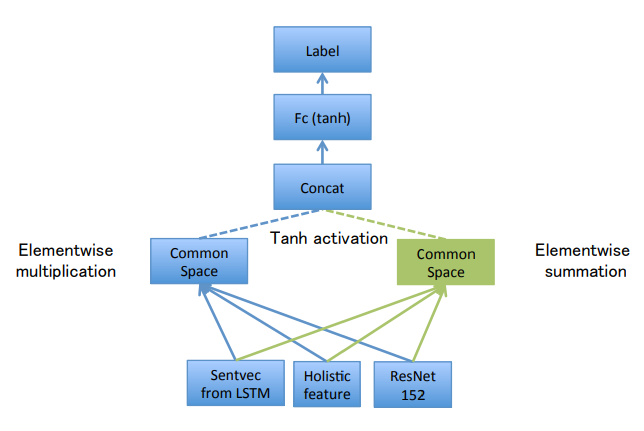

If the image and text vectors are the same length, element-wise multiplication can also be used to join the features. You can also try an attention-based approach to guide the algorithm’s focus toward the most important details in the input. The DualNet VQA model uses a hybrid approach that concatenates the results of element-wise addition and multiplication to achieve higher accuracy.
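The sketch below illustrates the three ideas from this paragraph on plain Python lists: element-wise multiplication, a DualNet-style hybrid (concatenating the element-wise sum and product), and soft attention over image-region features. It is a simplification under stated assumptions: a real model would first project both vectors to a common size with learned layers, and the dot-product attention score used here is one simple choice (published VQA attention models often score regions with a small learned network).

```python
import math
from typing import List


def elementwise_product(image_feats: List[float], question_feats: List[float]) -> List[float]:
    if len(image_feats) != len(question_feats):
        raise ValueError("element-wise fusion needs vectors of the same length")
    return [a * b for a, b in zip(image_feats, question_feats)]


def dualnet_style_fusion(image_feats: List[float], question_feats: List[float]) -> List[float]:
    """Concatenate the element-wise sum and the element-wise product (simplified)."""
    product = elementwise_product(image_feats, question_feats)  # also checks lengths
    total = [a + b for a, b in zip(image_feats, question_feats)]
    return total + product


def attend(regions: List[List[float]], question_feats: List[float]) -> List[float]:
    """Soft attention: weight each image-region vector by softmax(region . question)."""
    scores = [sum(r * q for r, q in zip(region, question_feats)) for region in regions]
    m = max(scores)  # subtract the max before exp for numerical stability
    exps = [math.exp(s - m) for s in scores]
    alphas = [e / sum(exps) for e in exps]
    dim = len(regions[0])
    return [sum(a * region[d] for a, region in zip(alphas, regions)) for d in range(dim)]


print(dualnet_style_fusion([1.0, 2.0], [3.0, 0.5]))  # [4.0, 2.5, 3.0, 1.0]
# The question vector matches the first region, so it receives most of the weight.
print(attend([[1.0, 0.0], [0.0, 1.0]], [2.0, 0.0]))
```

The attention output is a weighted average of region features, so regions that match the question dominate the image representation passed on to answer generation.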

Answer Generation

This phase of a VQA model takes the encoded image and question features as inputs and produces the final answer. An answer can be binary (yes/no), a count, a choice among given options, or an open-ended natural language answer in words, phrases, or sentences.

Multiple-choice and binary answers use a classification layer that converts the model’s output into probability scores. LSTMs are appropriate for open-ended questions, since they can generate the answer one word at a time.
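For fixed answer sets (binary or multiple choice), the classification layer’s raw scores are commonly turned into probabilities with a softmax, as in this sketch. The scores and answer options are hypothetical; for a single yes/no output, a sigmoid over one score is an equivalent alternative.

```python
import math
from typing import Dict, List, Tuple


def softmax(logits: List[float]) -> List[float]:
    m = max(logits)  # shift for numerical stability; does not change the result
    exps = [math.exp(z - m) for z in logits]
    total = sum(exps)
    return [e / total for e in exps]


def predict(logits: List[float], answers: List[str]) -> Tuple[str, Dict[str, float]]:
    """Turn classifier scores into probabilities and return the most likely answer."""
    probs = softmax(logits)
    best = max(range(len(answers)), key=lambda k: probs[k])
    return answers[best], dict(zip(answers, probs))


# Hypothetical scores for "How many burgers are on the table?"
answer, probs = predict([0.3, 2.1, -0.5], ["two", "three", "four"])
print(answer)  # three
```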

VQA Datasets

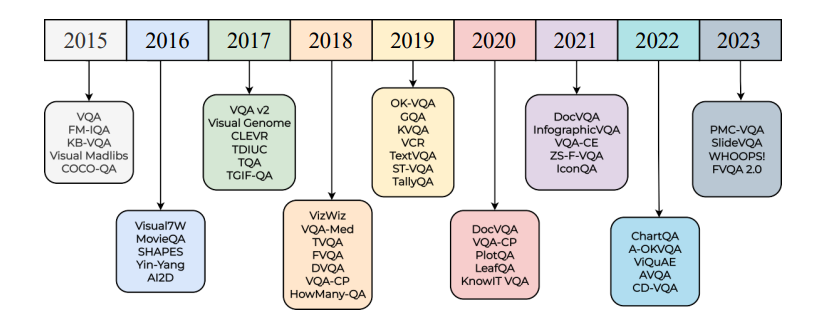

Several datasets are available for VQA research. Visual Genome is currently the largest available dataset for visual question answering models.

Here are some of the common VQA datasets, along with the kinds of question-answer pairs they contain:

- COCO-QA Dataset: An extension of COCO (Common Objects in Context). Questions are of four types: number, color, object, and location. All correct answers are a single word.

- CLEVR: Contains a training set of 70,000 images and 699,989 questions, a validation set of 15,000 images and 149,991 questions, and a test set of 15,000 images and 149,988 questions. Answers are provided for all training and validation questions.

- DAQUAR: Contains real-world images, with human-written question-answer pairs about the images.

- Visual7W: A large-scale visual question answering dataset with object-level ground truth and multimodal answers. Each question starts with one of the seven Ws.

Applications of Visual Question Answering Systems

Individually, CV and NLP each have their own set of applications. Implementing both in the same system further broadens the range of applications for Visual Question Answering.

Real-world applications of VQA include:

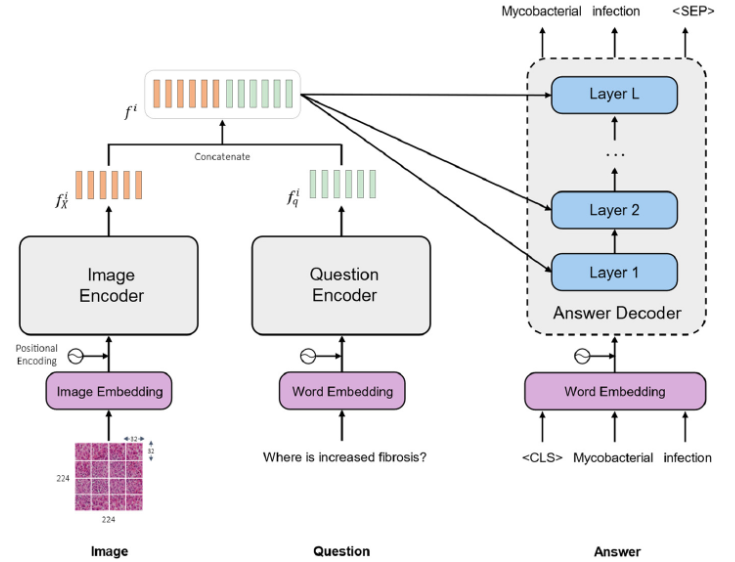

Medical VQA

This subdomain focuses on questions and answers related to the medical field. VQA models may act as pathologists, radiologists, or accurate medical assistants. VQA in the medical sector can greatly reduce staff workload by automating several tasks, and it can also help reduce the chances of disease misdiagnosis.

VQA can be implemented as a medical advisor that works from images provided by patients. It can also be used to check medical records and the accuracy of data in a database.

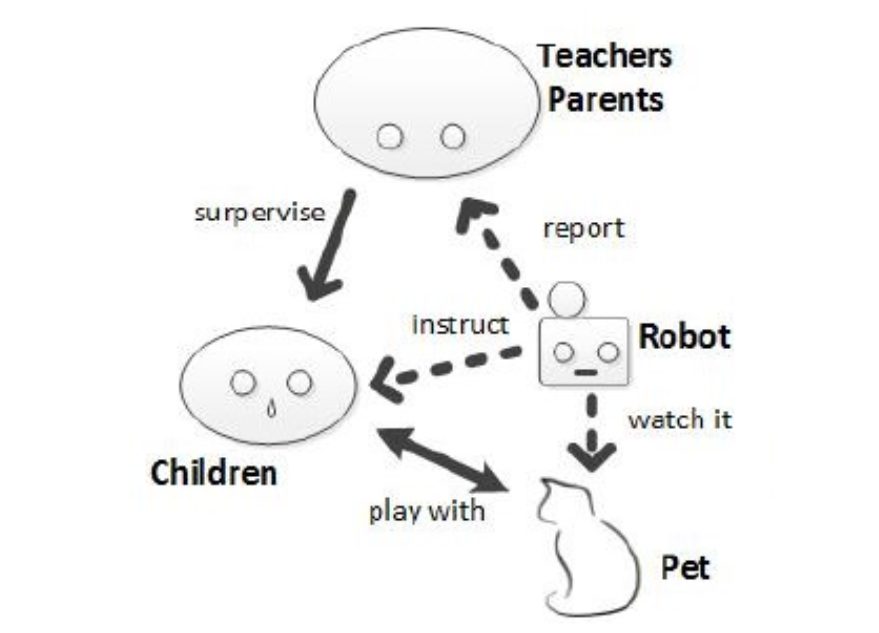

Education

Applying VQA in the education sector can support visual learning to a great extent. Imagine having a learning assistant that can guide you and evaluate you on the concepts you have learned. Some of the proposed use cases are Automatic Robot System for Pre-scholars, Visual Chatbots for Education, Gamification of VQA Systems, and Automated Museum Guides. VQA in education has the potential to make learning more interactive and creative.

Assistive Technology

The prime motivation behind VQA is to help visually impaired people. Initiatives like the VizWiz mobile app and Be My Eyes use VQA systems to provide automated assistance to visually impaired people by answering questions about real-world images. Assistive VQA models can perceive the surroundings and help people understand what is happening around them.

Visually impaired people can engage more meaningfully with their environment with the help of such VQA systems. Envision Glasses is an example of such a product.

E-commerce

VQA can enhance the online shopping experience. Online stores and shopping platforms can integrate VQA to create a streamlined e-commerce environment. For example, you can ask questions about products (Product Question Answering) or even upload images, and the system will give you the necessary information, such as product details, availability, and even recommendations based on what it sees in the images.

Online stores and websites can use VQA instead of manual customer service to further improve the user experience on their platforms. It can help customers with:

- Product recommendations

- Troubleshooting

- Website and shopping tutorials

- Visual dialogue, with the VQA system acting as a chatbot

Content Filtering

One of the most suitable applications of VQA is content moderation. Thanks to its core capability of understanding images, it can detect harmful or inappropriate content and filter it out to maintain a safe online environment. Offensive or inappropriate content on social media platforms can be detected using VQA.

Recent Developments & Challenges in Improving VQA

With the constant advancement of CV and DL, VQA models are making huge progress. The number of annotated datasets is growing rapidly thanks to crowd-sourcing, and models are becoming capable enough to provide accurate answers in natural language. In the past few years, many VQA algorithms have been proposed. Almost every method involves:

- Picture featurization

- Query featurization

- A suitable algorithm that combines these features to generate the answer

However, a significant gap remains between even the most accurate VQA systems and human intelligence. Currently, it is hard to develop an adaptable model because of the diversity of datasets, and it is difficult to determine which method is superior.

Unfortunately, because most large datasets do not provide specific information about the types of questions asked, it is hard to measure how well systems handle particular types of questions.

Gains on unique or rare questions barely improve overall performance scores, which makes it hard to evaluate the methods used for VQA. Currently, multiple-choice questions are used to evaluate VQA algorithms because evaluating open-ended multi-word answers is difficult. Moreover, VQA on videos still has a long way to go.
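The arithmetic behind this evaluation problem is simple: overall accuracy is a question-weighted average, so a question type that makes up a small share of a dataset barely moves it. The per-type counts below are hypothetical, not taken from any dataset.

```python
from typing import Dict, Tuple


def overall_accuracy(per_type: Dict[str, Tuple[int, int]]) -> float:
    """per_type maps question type -> (correct, total); overall = all correct / all questions."""
    correct = sum(c for c, _ in per_type.values())
    total = sum(t for _, t in per_type.values())
    return correct / total


before = {"yes/no": (8000, 10000), "color": (1200, 2000), "rare reasoning": (10, 100)}
# Rare-type accuracy jumps from 10% to 60%, everything else unchanged.
after = {**before, "rare reasoning": (60, 100)}
print(round(overall_accuracy(before), 4), round(overall_accuracy(after), 4))  # 0.7612 0.7653
```

A 50-point improvement on the rare type shows up as less than half a point overall, which is why per-question-type reporting matters for comparing methods.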

Current algorithms are not sufficient to consider VQA a solved problem. Without larger datasets and more practical work, it is hard to build better-performing VQA models.

What’s Next for Visual Question Answering?

VQA is a state-of-the-art AI task that goes far beyond task-specific algorithms. As a form of image understanding, VQA is set to be a major development in AI, bridging the gap between visual content and natural language.

Text-based queries are common, but imagine interacting with a computer by asking questions about images or scenes. We are going to see more intuitive and natural interactions with computers.

Some future recommendations for improving VQA are:

- Datasets need to be larger

- Datasets need to be less biased

- Future datasets need more nuanced analysis for benchmarking

More effort is needed to create VQA algorithms that can reason deeply about what is in the images.
