Neural Networks have modified the best way we carry out mannequin coaching. Current day, we see many neural community varieties utilized throughout the sector of machine studying: Convolutional Neural Networks, Recurrent Neural Networks, Liquid Neural Networks, and extra. These developments have paved the best way for the emergence of a subset of machine studying: Deep Studying.

With the rise within the utilization of the pc and machine studying techniques, the supply of knowledge has develop into straightforward. Neural networks, generally known as Neural Nets, want massive datasets for environment friendly coaching. This availability of knowledge made it straightforward to coach and undertake throughout the trade.

Although they work effectively, they do have some challenges. For instance, if the mannequin we skilled encounters one thing outdoors the coaching set, it received’t work correctly because it has by no means encountered it. Additionally, with massive quantities of knowledge, the complexity of the mannequin will increase. So, what if we’ve a neural community that may adapt itself to new information and has much less complexity?

Liquid Neural Networks clear up the issues posed by conventional networks. On this article, we’ll study extra in regards to the Liquid Neural Networks (LNNs).

About us: Viso.ai gives a strong end-to-end pc imaginative and prescient answer – Viso Suite. Our software program helps a number of main organizations begin with pc imaginative and prescient and implement deep studying fashions effectively with minimal overhead for numerous downstream duties. Get a demo right here.

What’s a Liquid Neural Community?

Within the 12 months 2020, researchers at MIT’s Laptop Science and Synthetic Intelligence Laboratory (CSAIL), Ramin Hasani, Daniela Rus, and the group launched a form of neural community referred to as Liquid Neural Networks (LNNs). LNNs are a kind of Recurrent Neural Community (RNN) that’s time-continuous.

Their dynamic structure can adapt its construction based mostly on the info. That is one thing just like liquids that may take the form of the container they’re in. Therefore, they’re referred to as Liquid Neural Networks. They’ll study on the job even after coaching.

These neural networks are impressed by the nervous system of a microscopic worm referred to as C. elegans. It has 302 neurons. Nonetheless, regardless of a low variety of neurons, it could exhibit intricate behaviors which is an excessive amount of for the variety of neurons it has. Subsequent, let’s see how these liquid neural networks work.

How do Liquid Neural Networks Work?

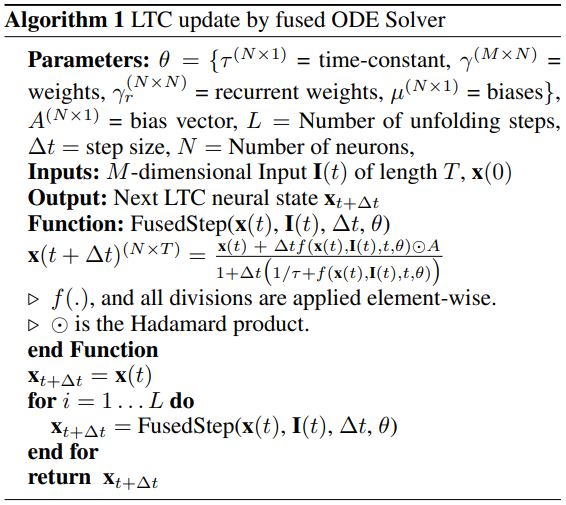

Liquid Neural Networks are a category of Recurrent Neural Networks (RNNs) which can be time-continuous. LNNs are made up of first-order dynamical techniques managed by non-linear interlinked gates. The tip mannequin is a dynamic system with various time constants in a hidden state. That is an enchancment of Recurrent Neural Networks the place time-dependent unbiased states are launched.

Numerical differential equation solvers compute the outputs. Every differential equation represents a node of that system. The closed-form answer makes certain that they carry out effectively with a smaller variety of neurons. This offers rise to fewer and richer nodes.

They present steady and bounded conduct with improved efficiency on time collection information. The differential equation solver updates the algorithm as per the below-given guidelines.

LNN structure has three layers. They’re:

- Enter layer.

- Liquid layer (Reservoir).

- Output layer.

Enter layer: That is the layer that has the inputs to the community. All of the enter information that we wish to practice the mannequin on is given to this layer. This layer feeds the enter information to the liquid layer.

Liquid layer: This layer is also referred to as a reservoir. It incorporates a big recurrent community of neurons. They’re initialized with synaptic weights. Enter information is reworked right into a wealthy non-linear area.

Output layer: It consists of output neurons. This receives the data from the liquid layer.

Now, let’s see find out how to implement a easy Liquid Neural Community utilizing TensorFlow.

Implementation of a Liquid Neural Community (LNN)

We are going to use the MNIST dataset obtainable within the TensorFlow datasets. MNIST dataset incorporates handwritten digits and is extensively used for instructional and analysis functions. It has 70000 samples, of which 60000 samples are used as a coaching dataset, and the remainder of the samples are used as a take a look at dataset. The dimension of every picture is (28,28,1).

Import Libraries

To implement LNN, we’ll start by importing the required libraries as proven beneath:

import numpy as np import tensorflow as tf from tensorflow import keras

Within the code above, we’ve imported Numpy, tensorflow, and keras.

Outline Weight Initialize, Prepare, and Predict Features

Subsequent, we outline a operate to initialize the weights of the mannequin.

def initialize_weights(input_dim, reservoir_dim, output_dim, spectral_radius):

# Initialize reservoir weights randomly

reservoir_weights = np.random.randn(reservoir_dim, reservoir_dim)

# Scale reservoir weights to attain desired spectral radius

reservoir_weights *= spectral_radius / np.max(np.abs(np.linalg.eigvals(reservoir_weights)))

# Initialize input-to-reservoir weights randomly

input_weights = np.random.randn(reservoir_dim, input_dim)

# Initialize output weights to zero

output_weights = np.zeros((reservoir_dim, output_dim))

return reservoir_weights, input_weights, output_weights

The above operate initializes the load matrices of the LNN. It begins by randomly initializing the reservoir weights matrix. Then, it scales the reservoir weights to get the suitable spectral radius.

After this, it randomly initializes the input-to-reservoir weights and the output weights as a zero matrix. Lastly, it returns reservoir weights, enter weights, and output weights.

Within the subsequent step, we outline a operate to coach our mannequin as proven beneath:

def train_lnn(input_data, labels, reservoir_weights, input_weights, output_weights, leak_rate, num_epochs):

num_samples = input_data.form[0]

reservoir_dim = reservoir_weights.form[0]

reservoir_states = np.zeros((num_samples, reservoir_dim))

for epoch in vary(num_epochs):

for i in vary(num_samples):

# Replace reservoir state

if i > 0:

reservoir_states[i, :] = (1 - leak_rate) * reservoir_states[i - 1, :]

reservoir_states[i, :] += leak_rate * np.tanh(np.dot(input_weights, input_data[i, :]) +

np.dot(reservoir_weights, reservoir_states[i, :]))

# Prepare output weights

output_weights = np.dot(np.linalg.pinv(reservoir_states), labels)

# Compute coaching accuracy

train_predictions = np.dot(reservoir_states, output_weights)

train_accuracy = np.imply(np.argmax(train_predictions, axis=1) == np.argmax(labels, axis=1))

print(f"Epoch {epoch + 1}/{num_epochs}, Prepare Accuracy: {train_accuracy:.4f}")

return output_weights

This operate trains the LNN based mostly on the enter parameters. It initializes the reservoir states as a zero matrix, iterates over the given variety of epochs, and trains the output weights. After this, the coaching accuracy is computed, and the output weights are returned.

Subsequent, we outline a operate to foretell the values based mostly on the take a look at information.

def predict_lnn(input_data, reservoir_weights, input_weights, output_weights, leak_rate):

num_samples = input_data.form[0]

reservoir_dim = reservoir_weights.form[0]

reservoir_states = np.zeros((num_samples, reservoir_dim))

for i in vary(num_samples):

# Replace reservoir state

if i > 0:

reservoir_states[i, :] = (1 - leak_rate) * reservoir_states[i - 1, :]

reservoir_states[i, :] += leak_rate * np.tanh(np.dot(input_weights, input_data[i, :]) +

np.dot(reservoir_weights, reservoir_states[i, :]))

# Compute predictions utilizing output weights

predictions = np.dot(reservoir_states, output_weights)

return predictions

This operate offers the predictions utilizing the skilled LNN. It initializes the reservoir states as a zero matrix and iterates over the variety of samples to replace the reservoir states. After this, it makes predictions utilizing the reservoir states and output weights and returns the predictions.

Knowledge Loading and Pre-processing

Now that we’ve all of the capabilities wanted for coaching the mannequin, let’s load and preprocess the info as proven beneath:

# Load and preprocess MNIST dataset (x_train, y_train), (x_test, y_test) = keras.datasets.mnist.load_data() y_train = keras.utils.to_categorical(y_train) y_test = keras.utils.to_categorical(y_test) x_train = x_train.reshape((60000, 784)) / 255.0 x_test = x_test.reshape((10000, 784)) / 255.0

We load the coaching and take a look at datasets utilizing the Keras library. Within the subsequent step, we scale the info for environment friendly coaching.

Now we set the hyperparameters for coaching as proven beneath:

# Set LNN hyperparameters input_dim = 784 reservoir_dim = 1000 output_dim = 10 leak_rate = 0.1 spectral_radius = 0.9 num_epochs = 10

Initializing Weights and Coaching

Within the subsequent step, we initialize the reservoir weights utilizing the above parameters as proven beneath:

# Initialize LNN weights reservoir_weights, input_weights, output_weights = initialize_weights(input_dim, reservoir_dim, output_dim, spectral_radius)

We practice the LNN to get the output weights in order that we are able to make predictions utilizing these weights.

# Prepare the LNN output_weights = train_lnn(x_train, y_train, reservoir_weights, input_weights, output_weights, leak_rate, num_epochs)

We are able to now make the predictions utilizing the skilled weights and consider them.

Prediction and Analysis

# Consider the LNN on take a look at set

test_predictions = predict_lnn(x_test, reservoir_weights, input_weights, output_weights, leak_rate)

test_accuracy = np.imply(np.argmax(test_predictions, axis=1) == np.argmax(y_test, axis=1))

print(f"Take a look at Accuracy: {test_accuracy:.4f}")

The above code gave a take a look at accuracy of 0.3584 which is kind of low. We are able to enhance this by utilizing hyperparameter tuning.

Benefits and Disadvantages of Liquid Neural Networks

Benefits

- They’re adaptable to the enter information.

- Appropriate for multi-sensory information.

- They’ll course of multi-modal data from totally different time scales.

- These neural nets can course of time-series information very effectively.

- Mimics the mind extra precisely in comparison with standard neural networks.

Disadvantages

- Liquid neural networks face a vanishing gradient drawback.

- Hyperparameter tuning may be very troublesome as there’s a excessive variety of parameters contained in the liquid layer as a consequence of randomness.

- That is nonetheless a analysis drawback, and therefore a smaller variety of sources can be found to get began with these.

- They require time-series information and don’t work correctly on common tabular information.

- They’re very gradual in real-world situations.

Variations Between Liquid Networks and Conventional Networks

| Liquid Neural Networks | Typical Neural Networks |

|---|---|

| They don’t require an enormous coaching dataset. | They require a big coaching set as extra information means extra accuracy for them. |

| Requires much less computational sources. | They require extra computational sources. |

| It produces much less mannequin complexity and, therefore, is extra interpretable. | Fashions have excessive complexity as a consequence of extra variety of parameters. |

| Have synaptic weights that alter themselves based mostly on incoming information. | They’ve fastened weights and activation capabilities that may’t be modified after coaching. |

| Requires re-training on new information as they adapt themselves to the incoming information. | Requires coaching on new information. |

| They’re scalable on the enterprise stage with much less labeled information. | To scale conventional neural nets, we want extra labeled information. |

Functions of LNNs

Liquid Neural Networks have purposes in a number of domains as they carry out effectively on time-series information. A number of the domains the place they are often extra environment friendly than conventional neural nets are as beneath.

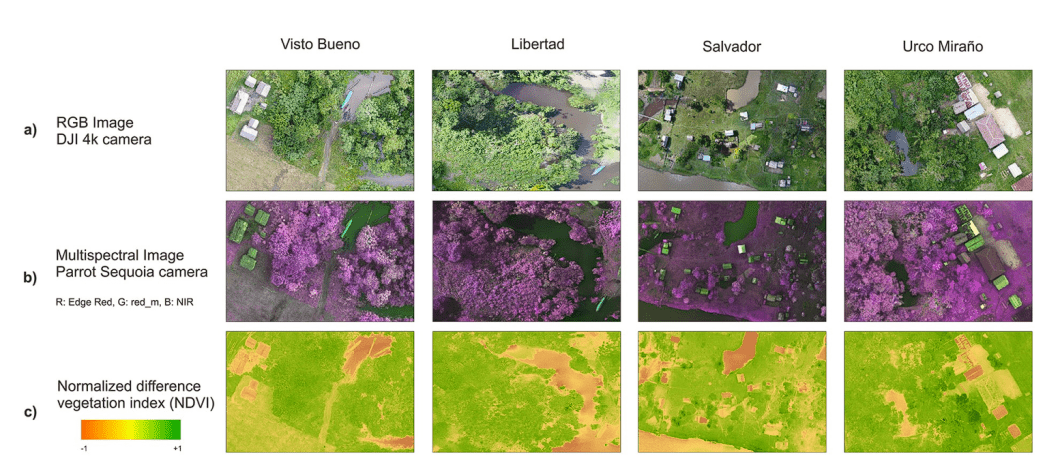

Autonomous Drones: Drones based mostly on LNNs outperformed drones based mostly on conventional AI methods. Particularly, drones carried out effectively on unknown territory as a result of adaptability of LNNs. This has lots of potential in army and catastrophe administration the place conditions are unpredictable.

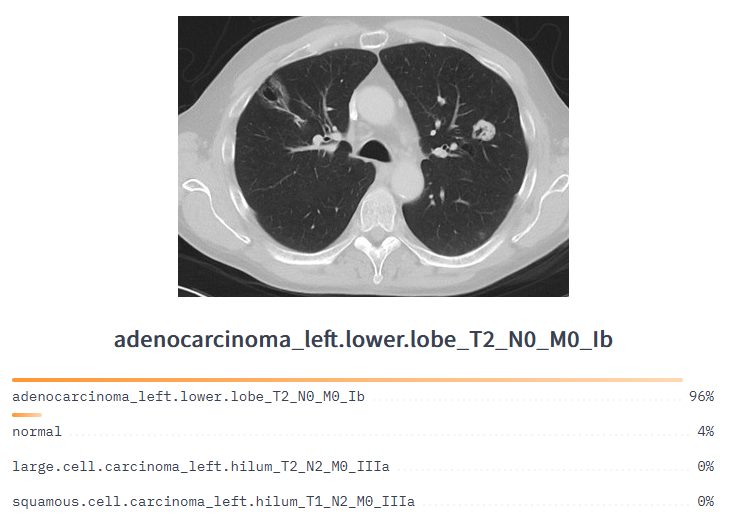

Medical Analysis: Since LNNs have a dynamic structure, they can be utilized in medical prognosis as they will adapt to new conditions with out costly coaching. These healthcare use instances can embody medical picture evaluation, well being data evaluation, and biomedical sign processing.

Self-Driving Automobiles: The dynamic structure of LNNs has the potential to drastically enhance the efficiency of self-driving vehicles. Generally AI fashions utilized in vehicles encounter information that’s not a part of their coaching information. The mannequin doesn’t know what to do on this situation and this may result in accidents. LNNs can assist keep away from this as they study on the job and adapt their conduct.

Pure language Processing (NLP): Coaching enormous textual information utilizing Typical Neural Networks (CNNs) for sentiment evaluation, entity recognition, and so on., generally is a time-consuming course of requiring enormous computational sources. LNNs clear up this drawback as they require much less computational sources and may adapt to the incoming information with out repeated coaching of the mannequin.

What’s Subsequent?

Liquid Neural Networks symbolize a brand new space of analysis that’s nonetheless unexplored and has lots of potential in numerous sectors. They provide lots of advantages over conventional neural networks. Nonetheless, in addition they have some drawbacks. Analysis is happening on this route, and hopefully, in the future, they could change the panorama of Synthetic Intelligence.

If you wish to study extra in regards to the various kinds of networks and perceive them, learn the beneath blogs for additional data.