The initial research papers date back to 2018, but for many, the notion of liquid networks (or liquid neural networks) is a new one. It was “Liquid Time-constant Networks,” published at the tail end of 2020, that put the work on other researchers’ radar. In the meantime, the paper’s authors have presented the work to a wider audience through a series of lectures.

Ramin Hasani’s TEDx talk at MIT is one of the best examples. Hasani is the Principal AI and Machine Learning Scientist at the Vanguard Group and a Research Affiliate at CSAIL MIT, and served as the paper’s lead author.

“These are neural networks that can stay adaptable, even after training,” Hasani says in the video, which appeared online in January. “When you train these neural networks, they can still adapt themselves based on the incoming inputs that they receive.”

The “liquid” bit is a reference to the flexibility/adaptability. That’s a big piece of this. Another big difference is size. “Everyone talks about scaling up their network,” Hasani notes. “We want to scale down, to have fewer but richer nodes.” MIT says, for example, that a team was able to drive a car through a combination of a perception module and liquid neural networks comprising a mere 19 nodes, down from “noisier” networks that can, say, have 100,000.

“A differential equation describes each node of that system,” the school explained last year. “With the closed-form solution, if you replace it inside this network, it would give you the exact behavior, as it’s an approximation of the actual dynamics of the system. They can thus solve the problem with an even lower number of neurons, which means it would be faster and less computationally expensive.”
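To make the idea of one differential equation per node concrete, here is a minimal Python sketch of a single liquid time-constant neuron. It assumes the state equation given in the “Liquid Time-constant Networks” paper, dx/dt = -(1/τ + f)·x + f·A, and steps it forward with plain explicit Euler integration, a simplification chosen for readability rather than the solver the authors use. The gate `f` is reduced here to a sigmoid of the input alone, and the names `ltc_step`, `simulate`, `w` and `b` are illustrative, not taken from any library.

```python
import math


def sigmoid(z):
    """Standard logistic function, used here as the gate nonlinearity."""
    return 1.0 / (1.0 + math.exp(-z))


def ltc_step(x, inp, dt=0.01, tau=1.0, A=1.0, w=1.0, b=0.0):
    """Advance the neuron state x by one explicit Euler step.

    Assumed dynamics: dx/dt = -(1/tau + f) * x + f * A.
    Simplification: f depends only on the input, not on the state.
    """
    f = sigmoid(w * inp + b)
    dxdt = -(1.0 / tau + f) * x + f * A
    return x + dt * dxdt


def simulate(inputs, x0=0.0, **params):
    """Run the neuron over a sequence of inputs and return the state trace."""
    x = x0
    trace = []
    for inp in inputs:
        x = ltc_step(x, inp, **params)
        trace.append(x)
    return trace


if __name__ == "__main__":
    # A constant input drives the state toward f * A / (1/tau + f).
    trace = simulate([0.0] * 5000)
    print(round(trace[-1], 6))
```

Because `f` multiplies the state, a stronger input shortens the neuron’s effective time constant, τ/(1 + τ·f). That input-dependent time constant is what the “liquid” label refers to.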

The concept first crossed my radar by way of its potential applications in the robotics world. In fact, robotics makes a small cameo in that paper when discussing potential real-world use. “Accordingly,” it notes, “a natural application domain would be the control of robots in continuous-time observation and action spaces where causal structures such as LTCs [Liquid Time-Constant Networks] can help improve reasoning.”

One of the benefits of these systems is that they can be run with less computing power. That means, potentially, using something as simple as a Raspberry Pi to execute complex reasoning, rather than offloading the task to external hardware via the cloud. It’s easy to see how this is an intriguing solution.

Another potential benefit is the black box question. This refers to the fact that, for complex neural networks, researchers don’t entirely understand how the individual neurons combine to create their final output. One is reminded of that famous Arthur C. Clarke quote, “Any sufficiently advanced technology is indistinguishable from magic.” This is an oversimplification, of course, but the point remains: Not all magic is good magic.

The adage “garbage in, garbage out” comes into play here, as well. We all intuitively understand that bad data causes bad outputs. This is one of the places where biases become a factor, impacting the ultimate result. More transparency would play an important role in identifying causality. Opacity is a problem, particularly in those instances where inferred causality could be, quite literally, a matter of life or death.

Paper co-author and head of MIT CSAIL, Daniela Rus, points me in the direction of a fatal Tesla crash in 2016, where the imaging system failed to distinguish an oncoming tractor trailer from the bright sky that backdropped it. Tesla wrote at the time:

Neither Autopilot nor the driver noticed the white side of the tractor trailer against a brightly lit sky, so the brake was not applied. The high ride height of the trailer combined with its positioning across the road and the extremely rare circumstances of the impact caused the Model S to pass under the trailer, with the bottom of the trailer impacting the windshield of the Model S.

Hasani says the fluid systems are “more interpretable,” due, in part, to their smaller size. “Just changing the representation of a neuron,” he adds, “you can really explore some degrees of complexity you couldn’t explore otherwise.”

As for the downsides, these systems require “time series” data, unlike other neural networks. That’s to say that they don’t currently extract the information they need from static images, instead requiring a dataset that involves sequential data like video.

“The real world is all about sequences,” says Hasani. “Even our perception, you’re not perceiving images, you’re perceiving sequences of images,” he says. “So, time series data actually create our reality.”

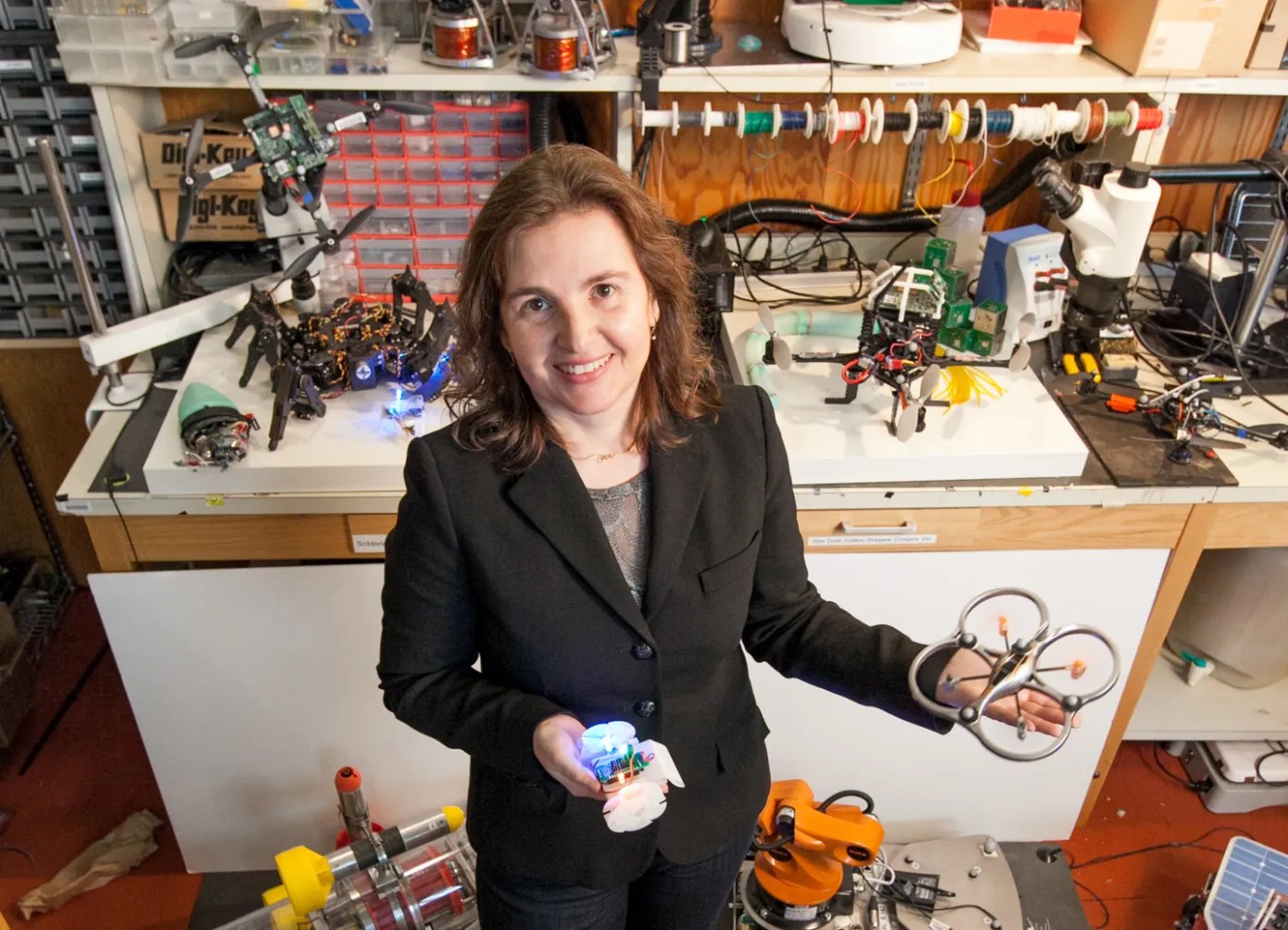

I sat down with Rus to discuss the nature of these networks, and help my creative writing-major brain understand what the emerging technology might mean for robotics, moving forward.

Image Credits: MIT CSAIL

TechCrunch: I get the sense that one of the motivating factors [in the creation of liquid neural networks] is hardware constraints with mobile robotic systems and their inability to run extremely complex computations and rely on these very complex neural networks.

Daniela Rus: That’s part of it, but most importantly, the reason we started thinking about liquid networks has to do with some of the limitations of today’s AI systems, which prevent them from being very effective for safety-critical systems and robotics. Most of the robotics applications are safety critical.

I assume most of the people who have been working on neural networks would like to do more with less if that were an option.

Yes, but it’s not just about doing more with less. There are several ways in which liquid networks can help robotic applications. From a computational point of view, the liquid networks are much smaller. They’re compact, and they can run on a Raspberry Pi and edge devices. They don’t need the cloud. They can run on the kinds of hardware platforms that we have in robotics.

We get other benefits. They’re related to whether or not you can understand how the machine learning system makes decisions. You can understand whether the decision is going to satisfy some safety constraints or not. With a huge machine learning model, it’s actually impossible to know how that model makes decisions. The machine learning models that are based on deep networks are really huge and opaque.

And unpredictable.

They’re opaque and unpredictable. This presents a challenge, as users are unable to understand how decisions are made by these systems. This is really a problem if you want to know if your robot will move correctly, whether the robot will respond correctly to a perceptual input. The first fatal accident for Tesla was due to a machine learning imperfection. It was due to a perception error. The car was driving on the highway, there was a white truck that was crossing and the perceptual system believed that the white truck was a cloud. It was a mistake. It was not the kind of mistake that a human would make. It’s the kind of mistake that a machine would make. In safety critical systems, we cannot afford to have those kinds of errors.

Having a more compact system, where we can show that the system doesn’t hallucinate, where we can show that the system is causal and where we can maybe extract a decision tree that presents in understandable human form how the system reaches a conclusion, is an advantage. These are properties that we have for liquid networks. Another thing we can do with liquid networks is we can take a model and wrap it in something we call “BarrierNet.” That means adding another layer to the machine learning engine that incorporates the constraints that you would have in a control barrier function formulation for control theoretic solutions for robots. What we get from BarrierNet is a guarantee that the system will be stable and fall within some bounds of safety.

You’re talking about intentionally adding more constraints.

Yes, and they are the kind of constraints that we see in control theoretic, model predictive control solutions for robots. There’s a body of work that’s developing something called “control barrier functions.” It’s this idea that you have a function that forces the output of a system within a safe region. You could take a machine learning system, wrap it with BarrierNet, and that filters and ensures that the output will fall within the safety limits of the system. For example, BarrierNet can be used to make sure your robot will not exert a force or a torque outside its range of capability. If you have a robot that aims to apply force that is greater than what it can do, then the robot will break itself. There are examples of what we can do with BarrierNet and liquid networks. Liquid networks are exciting because they address issues that we have with some of today’s systems.
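The idea of a function that keeps a system’s output inside a safe region can be sketched for a toy case. The Python example below is not BarrierNet. It is a hand-written control barrier function filter for a hypothetical one-dimensional system whose position `x` changes at the commanded rate `u`. The assumed safe set is `x <= x_max`, with barrier `h(x) = x_max - x`. Requiring `dh/dt >= -alpha * h` then reduces to the bound `u <= alpha * (x_max - x)`. All names and numeric values are illustrative assumptions.

```python
def cbf_filter(u_ref, x, x_max=1.0, alpha=2.0):
    """Return the command closest to u_ref that respects the barrier bound.

    For dx/dt = u and h(x) = x_max - x, the condition dh/dt >= -alpha * h
    becomes u <= alpha * (x_max - x), so the filter is a simple min().
    """
    return min(u_ref, alpha * (x_max - x))


def rollout(x0, u_ref, steps=1000, dt=0.01):
    """Simulate the filtered system with explicit Euler steps."""
    x = x0
    for _ in range(steps):
        x += dt * cbf_filter(u_ref, x)
    return x


if __name__ == "__main__":
    # The unfiltered command (5.0) would overshoot x_max almost immediately;
    # the filtered one slows down as it approaches the limit.
    print(rollout(0.0, 5.0))
```

Far from the limit, the filter passes the requested command through unchanged. It only intervenes as the state nears `x_max`, the same filtering role the interview describes for force and torque limits.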

They use less data and less training time. They’re smaller, and they use much less inference time. They’re provably causal. They have action that is much more focused on the task than other machine learning approaches. They make decisions by somehow understanding aspects of the task, rather than the context of the task. For some applications, the context is important, so having a solution that relies on context is important. But for many robotic applications, the context is not what matters. What matters is how you actually do the task. If you’ve seen my video, it shows how a deep network would keep a car on the road by looking at bushes and trees on the side of the road. Whereas the liquid networks have a different way of deciding what to do. In the context of driving, that means looking at the road’s horizon and the side of the road. This is, in some sense, a benefit, because it allows us to capture more about the task.

It’s avoiding distraction. It’s avoiding being focused on every aspect of the environment.

Exactly. It’s much more focused.

Do these systems require different kinds and amounts of training data than more traditional networks?

In our experiments, we have trained all the systems using the same data, because we want to compare them with respect to performance after training them in the same way. There are ways to reduce the data that is needed to train a system, and we don’t know yet whether these data reduction techniques can be applied broadly, in other words, whether they can benefit all models, or whether they are particularly useful for a model that is formulated in the dynamical systems formulation that we have for liquid networks.

I want to add that liquid neural networks require time series data. A liquid network solution does not work on datasets consisting of static data items. We cannot use ImageNet with liquid neural networks right now. We have some ideas, but as of now, one really needs an application with time series to use liquid networks.

The methods for reducing the amount of information that we feed into our models are somehow related to this notion that there is a lot of repetition. So not all the data items give you effective new information. There is data that brings and widens information diversity, and then there’s data that’s more of the same. For our solutions, we have found that if we can curate the datasets so that they have diversity and less repetition, then that’s the good way to move forward.

Ramin [Hasani] gave a TEDx talk at MIT. One of the things he said, which makes a lot of sense on the face of it, is, “bad data means bad performance.”

Garbage in, garbage out.

Is the risk of bad data heightened or mitigated when you’re dealing with a smaller number of neurons?

I don’t think it’s any different for our model than any other model. We have fewer parameters. The computations in our models are more powerful. There’s task equivalence.

If the network is more transparent, it’s easier to determine where the problems are cropping up.

Of course. And if the network is smaller, it’s easier from the point of view of a human. If the model is small and only has a few neurons, then you can visualize the performance. You can also automatically extract a decision tree for how the model makes decisions.

When you talk about causal relationships, it sounds like one of the problems with these massive neural networks is that they can connect things almost arbitrarily. They’re not necessarily determining causal relationships in each case. They’re taking extra data and making connections that aren’t always there.

The fact is that we really don’t understand. What you say sounds right, intuitively, but no one actually understands how the huge models make decisions. In a convolutional solution to a perception problem, we think that we understand that the earlier layers essentially identify patterns at small scale, and the later layers identify patterns at higher levels of abstraction. If you look at a large language model and you ask, ‘how does it reach decisions?’ You’ll hear that we don’t really know. We want to understand. We want to study these large models in greater detail and right now this is difficult because the models are behind walls.

Do you see generative AI itself having a major role in robotics and robotics learning, going forward?

Yes, of course. Generative AI is extraordinarily powerful. It really put AI in everyone’s pockets. That’s extraordinary. We didn’t really have a technology like this before now. Language has become a kind of operating system, a programming language. You can specify what you want to do in natural language. You don’t need to be a rock-star computer scientist to synthesize a program. But you also have to take this with a grain of salt, because the results that are focused on code generation show that it’s mostly the boilerplate code that can be generated automatically by large language models. Still, you can have a co-pilot that can help you code and catch mistakes. Basically, the co-pilot helps programmers be more productive.

So, how does it help in robotics? We have been using generative AI to solve complex robotics problems that are difficult to solve with our existing methods […] With generative AI you can get much faster solutions and much more fluid and human-like solutions for control than we get with model predictive solutions. I think that’s very powerful. The robots of the future will move less mechanistically. They’ll be more fluid, more humanlike in their motions. We’ve also used generative AI for design. Here we need more than pattern generation for robots. The machines have to make sense in the context of physics and the physical world.