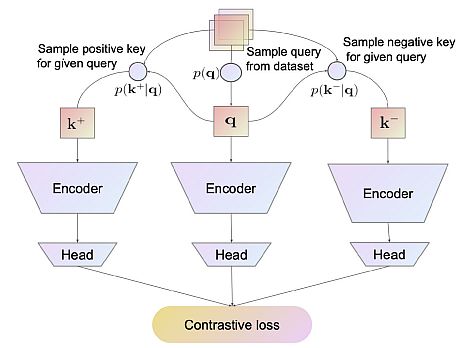

The aim of contrastive learning is to extract meaningful representations by comparing pairs of positive and negative instances. It assumes that similar instances should lie closer together and dissimilar instances farther apart in the learned embedding space.

Contrastive learning (CL) enables models to identify relevant features and similarities in the data by framing learning as a discrimination task. In other words, samples from the same distribution are pulled toward each other in the embedding space, while samples from different distributions are pushed away from one another.

About us: Viso Suite makes it possible for enterprises to seamlessly implement visual AI solutions into their workflows. From crowd monitoring to defect detection to letter and number recognition, Viso Suite is customizable to fit the specific requirements of any application. To learn more about what Viso Suite can do for your organization, book a demo with our team of experts.

The Advantages of Contrastive Learning

Contrastive learning lets models derive meaningful representations from unlabeled data. Using similarity and dissimilarity, it maps similar instances close together while separating dissimilar ones.

This approach has shown promise in a variety of fields, including reinforcement learning, computer vision, and natural language processing (NLP).

The benefits of contrastive learning include:

- By using similarity and dissimilarity to map instances in a latent space, CL is a powerful method for extracting meaningful representations from unlabeled data.

- Contrastive learning improves model performance and generalization across a range of applications, including data augmentation, supervised learning, semi-supervised learning, and NLP.

- Data augmentation, encoders, and projection networks are key components that capture relevant features and similarities.

- It includes both self-supervised contrastive learning (SSCL) with unlabeled data and supervised contrastive learning (SCL) with labeled data.

- Contrastive learning uses various loss functions: logistic loss, N-pair loss, InfoNCE, triplet loss, and contrastive loss.

How to Implement Contrastive Learning?

Contrastive learning is a powerful method that lets models use large quantities of unlabeled data while still improving performance with a small amount of labeled data.

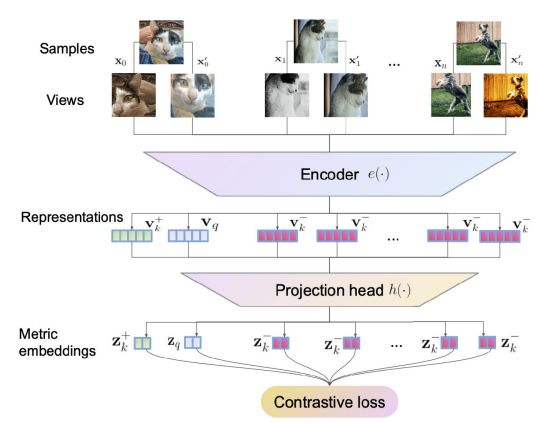

The main goal of contrastive learning is to push dissimilar samples farther apart and map similar instances closer together in a learned embedding space. To implement CL, you need to perform data augmentation and train an encoder and a projection network.

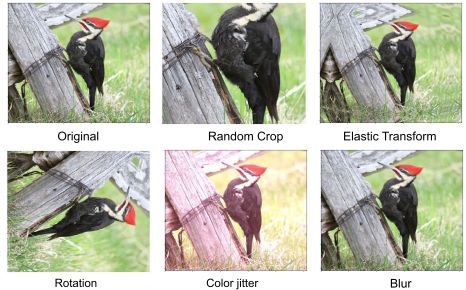

Data Augmentation

The goal of data augmentation is to expose the model to multiple views of the same instance and increase the variation in the data. Data augmentation creates diverse instances, or augmented views, by applying different transformations (perturbations) to unlabeled data. It is usually the first step in contrastive learning.

Cropping (including random cropping), flipping, rotation, and color changes are examples of common data augmentation techniques. By generating diverse instances, contrastive learning ensures that the model learns to capture relevant information despite changes in the input data.
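To make this concrete, here is a minimal plain-Python sketch that produces two augmented views of one image. It assumes a grayscale image stored as a 2-D list of intensities in [0, 1]; the helper names (`random_crop`, `brightness_jitter`, `two_views`) are hypothetical, and in practice you would use an image library such as torchvision's transforms instead.

```python
import random

def random_crop(img, size, rng):
    """Cut a size x size window at a random position out of a 2-D list of pixels."""
    h, w = len(img), len(img[0])
    top = rng.randint(0, h - size)
    left = rng.randint(0, w - size)
    return [row[left:left + size] for row in img[top:top + size]]

def horizontal_flip(img):
    """Mirror the image left to right."""
    return [row[::-1] for row in img]

def brightness_jitter(img, rng, max_delta=0.1):
    """Shift all intensities by one random amount, clipped to [0, 1] (a simple color change)."""
    delta = rng.uniform(-max_delta, max_delta)
    return [[min(1.0, max(0.0, p + delta)) for p in row] for row in img]

def augment(img, crop_size, rng):
    """One random augmented view: crop, flip with probability 0.5, then jitter brightness."""
    view = random_crop(img, crop_size, rng)
    if rng.random() < 0.5:
        view = horizontal_flip(view)
    return brightness_jitter(view, rng)

def two_views(img, crop_size, rng):
    """Two independent augmentations of the same image form a positive pair."""
    return augment(img, crop_size, rng), augment(img, crop_size, rng)

rng = random.Random(0)
image = [[(r * 8 + c) / 63 for c in range(8)] for r in range(8)]  # toy 8x8 grayscale image
view_a, view_b = two_views(image, crop_size=6, rng=rng)
```

Because each call draws fresh random parameters, `view_a` and `view_b` usually differ in position, orientation, and brightness while still depicting the same underlying image.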

Encoder and Projection Network

Training an encoder network is the next stage of contrastive learning. The augmented instances are fed into the encoder network, which maps them to a latent representation space where important features and similarities are captured.

Usually, the encoder network is a deep neural network, such as a recurrent neural network (RNN) for sequential data or a convolutional neural network (CNN) for visual data.

A projection network then further refines the learned representations. It projects the output of the encoder network onto a lower-dimensional space, known as the projection or embedding space.

By projecting the representations into a lower-dimensional space, the projection network simplifies the data and reduces redundancy, which makes it easier to distinguish between similar and dissimilar instances.
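The sketch below illustrates the encoder-then-projection structure with tiny fully connected layers in plain Python. It is an illustration under stated assumptions: a real encoder would be a CNN or RNN as described above, and the layer sizes (16 to 8 to 4), the random initialization, and all helper names are hypothetical.

```python
import math
import random

def init_layer(n_in, n_out, rng):
    """Random weight matrix (list of rows) plus a small constant bias."""
    weights = [[rng.gauss(0.0, 1.0 / math.sqrt(n_in)) for _ in range(n_in)] for _ in range(n_out)]
    return weights, [0.01] * n_out

def linear(weights, bias, x):
    """Dense layer: y = W x + b."""
    return [sum(w * xj for w, xj in zip(row, x)) + b for row, b in zip(weights, bias)]

def relu(v):
    return [max(0.0, a) for a in v]

def l2_normalize(v, eps=1e-12):
    norm = math.sqrt(sum(a * a for a in v))
    return [a / (norm + eps) for a in v]

rng = random.Random(0)
encoder = init_layer(16, 8, rng)       # f: 16-d input view -> 8-d representation h
proj_hidden = init_layer(8, 8, rng)    # g: two-layer MLP projection head ...
proj_out = init_layer(8, 4, rng)       # ... ending in a lower, 4-d embedding z

def encode(x):
    return relu(linear(*encoder, x))

def project(h):
    # Normalizing z lets a loss compare embeddings by cosine similarity.
    return l2_normalize(linear(*proj_out, relu(linear(*proj_hidden, h))))

x = [rng.random() for _ in range(16)]  # stands in for one flattened augmented view
h = encode(x)
z = project(h)
```

In SimCLR, for example, the encoder output `h` is what gets reused for downstream tasks, while the projected `z` is only used to compute the contrastive loss.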

Training and Optimization

Once a loss function has been defined, a large unlabeled dataset is used to train the model. During training, the model's parameters are iteratively updated to minimize the loss function.

The model's parameters are typically updated with optimization methods such as stochastic gradient descent (SGD) or its variants. Training is done in mini-batches, processing a subset of the augmented instances at a time.

During training, the model learns to identify relevant features and patterns in the data. The iterative optimization process leads to better discrimination and separation between similar and dissimilar instances, which also improves the learned representations.
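The following plain-Python sketch shows what mini-batch SGD looks like for a contrastive objective. To keep the gradient small enough to write by hand, it makes a big simplifying assumption: instead of an encoder, each of six toy instances has a free 2-D embedding that is optimized directly, labels are used only to decide which pairs are positive, and the loss is the pairwise margin-based contrastive loss described later in this article. Names, sizes, and hyperparameters are hypothetical; real projects rely on automatic differentiation in a framework such as PyTorch or JAX.

```python
import math
import random

def pair_loss_and_grad(a, b, similar, margin):
    """Margin-based contrastive loss for one pair, plus its gradient w.r.t. a (w.r.t. b it is the negation)."""
    diff = [ai - bi for ai, bi in zip(a, b)]
    d = math.sqrt(sum(x * x for x in diff))
    if similar:                                    # loss = d^2
        return d * d, [2.0 * x for x in diff]
    if d >= margin:                                # negative pair already far enough apart
        return 0.0, [0.0] * len(a)
    scale = -2.0 * (margin - d) / max(d, 1e-12)    # loss = (margin - d)^2
    return (margin - d) ** 2, [scale * x for x in diff]

rng = random.Random(0)
labels = [0, 0, 0, 1, 1, 1]
# Toy stand-in for an encoder: one free 2-D embedding per instance, learned directly.
emb = [[rng.uniform(-1.0, 1.0), rng.uniform(-1.0, 1.0)] for _ in labels]
pairs = [(i, j) for i in range(len(labels)) for j in range(i + 1, len(labels))]

def mean_loss(margin=1.0):
    return sum(pair_loss_and_grad(emb[i], emb[j], labels[i] == labels[j], margin)[0]
               for i, j in pairs) / len(pairs)

def train(epochs=100, batch_size=4, lr=0.1, margin=1.0):
    for _ in range(epochs):
        rng.shuffle(pairs)                         # new mini-batch order each epoch
        for start in range(0, len(pairs), batch_size):
            batch = pairs[start:start + batch_size]
            grads = {}
            for i, j in batch:
                _, g = pair_loss_and_grad(emb[i], emb[j], labels[i] == labels[j], margin)
                gi = grads.setdefault(i, [0.0, 0.0])
                gj = grads.setdefault(j, [0.0, 0.0])
                for t, gt in enumerate(g):
                    gi[t] += gt                    # dL/da
                    gj[t] -= gt                    # dL/db = -dL/da
            for k, gk in grads.items():            # SGD step on the mini-batch average
                emb[k] = [e - lr * gt / len(batch) for e, gt in zip(emb[k], gk)]

loss_before = mean_loss()
train()
loss_after = mean_loss()
```

After training, the three embeddings in each group should sit close together while the two groups end up roughly a margin apart, so `loss_after` is lower than `loss_before`.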

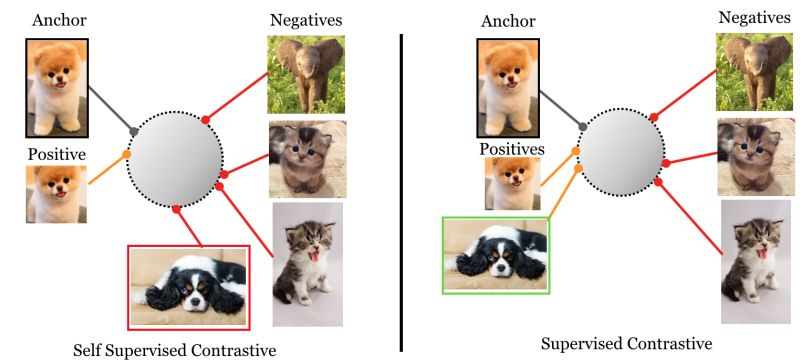

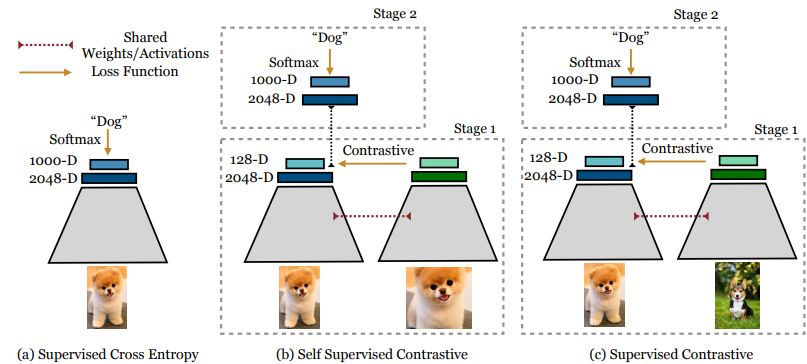

Supervised vs Self-supervised CL

Supervised contrastive learning (SCL) trains models to distinguish between similar and dissimilar instances using labeled data. In SCL, the model is trained on pairs of data points together with their labels, which indicate whether the data points are similar or dissimilar.

By optimizing this objective, the model learns to distinguish between similar and dissimilar instances, which improves performance on downstream tasks.
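One concrete way to write such an objective is the supervised contrastive (SupCon) loss of Khosla et al. (2020), sketched below in plain Python. This is our choice of formulation, not necessarily the one the text has in mind: every other sample sharing the anchor's label counts as a positive, and all remaining samples act as negatives. The temperature value and helper names are assumptions, and the embeddings are assumed to be unit-norm.

```python
import math

def dot(u, v):
    return sum(a * b for a, b in zip(u, v))

def supcon_loss(z, labels, temperature=0.5):
    """Supervised contrastive loss over unit-norm embeddings `z`.

    For each anchor i, positives are the other samples with the same label; the
    softmax denominator runs over every sample except i itself.
    """
    total, anchors = 0.0, 0
    for i in range(len(z)):
        others = [a for a in range(len(z)) if a != i]
        positives = [p for p in others if labels[p] == labels[i]]
        if not positives:                    # no other sample shares this label
            continue
        logits = {a: dot(z[i], z[a]) / temperature for a in others}
        m = max(logits.values())             # for a numerically stable log-sum-exp
        log_denom = m + math.log(sum(math.exp(v - m) for v in logits.values()))
        total += -sum(logits[p] - log_denom for p in positives) / len(positives)
        anchors += 1
    return total / anchors if anchors else 0.0

z = [[1.0, 0.0], [1.0, 0.0], [0.0, 1.0], [0.0, 1.0]]
print(supcon_loss(z, [0, 0, 1, 1]))   # low: same-label embeddings already coincide
print(supcon_loss(z, [0, 1, 0, 1]))   # high: labels disagree with the geometry
```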

A different approach is self-supervised contrastive learning (SSCL), which does not rely on explicit class labels but instead learns representations from unlabeled data. Pretext tasks help SSCL generate positive and negative pairs from the unlabeled data.

These well-designed pretext tasks encourage the model to identify important features and patterns in the data.
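A common pretext task is instance discrimination: two augmentations of the same sample form a positive pair, and augmentations of different samples form a negative pair. The sketch below builds such pairs from unlabeled feature vectors; the Gaussian-noise `jitter` augmentation and the function names are hypothetical stand-ins for the image augmentations discussed earlier.

```python
import random

def make_pairs(samples, augment, rng):
    """Build (view_a, view_b, is_positive) triples from unlabeled samples.

    Positive: two augmentations of the same sample.
    Negative: augmentations of two different samples.
    """
    pairs = []
    for i, x in enumerate(samples):
        pairs.append((augment(x, rng), augment(x, rng), True))
        j = rng.choice([k for k in range(len(samples)) if k != i])
        pairs.append((augment(x, rng), augment(samples[j], rng), False))
    return pairs

def jitter(x, rng, scale=0.05):
    """Toy augmentation: add small Gaussian noise to every feature."""
    return [v + rng.gauss(0.0, scale) for v in x]

rng = random.Random(0)
unlabeled = [[rng.random() for _ in range(3)] for _ in range(5)]
pairs = make_pairs(unlabeled, jitter, rng)
```

No label is consulted anywhere: the "is positive" flag comes entirely from how each pair was constructed.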

SSCL has achieved remarkable results in several fields, including natural language processing and computer vision, where it has been successful in tasks such as object detection and image classification.

Loss Functions in CL

Contrastive learning uses several loss functions to specify the objectives of the learning process. These loss functions let the model distinguish between similar and dissimilar instances and capture meaningful representations.

To identify relevant features and patterns in the data and improve the model's capability, it helps to understand the various loss functions used in contrastive learning.

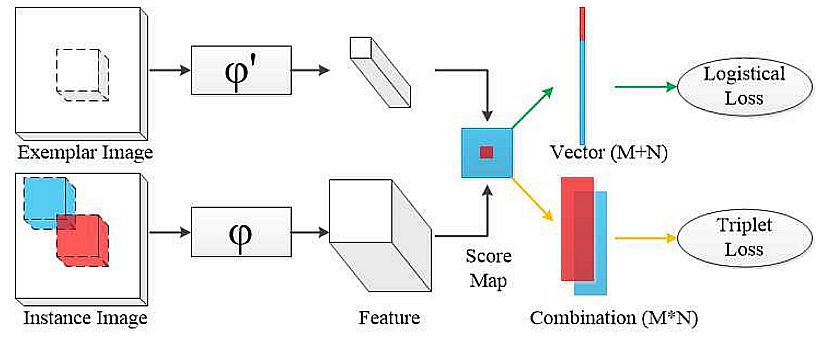

Triplet Loss

Triplet loss is a common loss function in contrastive learning. Its goal is to preserve relative Euclidean distances between instances. Triplet loss works on triplets of instances: an anchor instance, a positive sample that is similar to the anchor, and a negative sample that is dissimilar from the anchor.

The goal is to ensure that the distance between the anchor and the positive sample is smaller than the distance between the anchor and the negative sample by at least a predefined margin.
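The margin condition translates directly into the hinge form max(0, d(a, p) - d(a, n) + margin). Below is a minimal plain-Python sketch with Euclidean distance; the margin value is an assumption, and some formulations (FaceNet, for instance) use squared distances instead.

```python
import math

def euclidean(a, b):
    return math.sqrt(sum((x - y) ** 2 for x, y in zip(a, b)))

def triplet_loss(anchor, positive, negative, margin=1.0):
    """max(0, d(a, p) - d(a, n) + margin): zero once the negative is at least `margin` farther than the positive."""
    return max(0.0, euclidean(anchor, positive) - euclidean(anchor, negative) + margin)

anchor = [0.0, 0.0]
positive = [0.0, 1.0]                                   # d(a, p) = 1
print(triplet_loss(anchor, positive, [3.0, 4.0]))       # 0.0: d(a, n) = 5, margin already satisfied
print(triplet_loss(anchor, positive, [0.0, 1.5]))       # 0.5: 1 - 1.5 + 1
```

Once a triplet satisfies the margin it contributes no gradient, which is why the choice of which triplets to train on matters so much.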

Triplet loss is especially useful in computer vision tasks where capturing fine-grained similarities is essential, e.g. face recognition and image retrieval. However, triplet loss can be sensitive to how triplets are selected, and selecting meaningful triplets from the training data can be difficult and computationally expensive.

N-pair Loss

An extension of triplet loss, N-pair loss compares an anchor with several samples at once: one positive and multiple negatives. Instead of contrasting the anchor with a single negative sample, it increases the similarity between the anchor and its positive while reducing the similarity between the anchor and all of the negatives.

N-pair loss provides a stronger training signal that pushes the model to learn subtle relationships among many samples. By taking many instances into account at once, it improves the discriminative power of the learned representations and can capture more complex patterns.
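For a single anchor embedding f with positive f+ and negatives f_1 ... f_{N-1}, the N-pair loss (in Sohn's formulation) is log(1 + sum_i exp(f.f_i - f.f+)). The plain-Python sketch below implements that formula for one anchor; the vectors and helper names are illustrative assumptions, and the original method additionally builds batches so that each example's positive doubles as a negative for the other anchors.

```python
import math

def dot(u, v):
    return sum(a * b for a, b in zip(u, v))

def n_pair_loss(anchor, positive, negatives):
    """N-pair loss for one anchor: log(1 + sum_i exp(f.f_i - f.f_plus)).

    `positive` is the anchor's single positive; `negatives` holds the N-1 negatives.
    """
    s_pos = dot(anchor, positive)
    return math.log1p(sum(math.exp(dot(anchor, n) - s_pos) for n in negatives))

anchor = [1.0, 0.0]
negatives = [[0.0, 1.0], [-1.0, 0.0], [0.6, 0.8]]
close = n_pair_loss(anchor, [0.8, 0.6], negatives)   # positive well aligned with the anchor
far = n_pair_loss(anchor, [0.0, -1.0], negatives)    # positive orthogonal to the anchor
```

Written this way, the loss is simply softmax cross-entropy in which the positive plays the role of the correct class, which is why N-pair loss is closely related to InfoNCE.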

N-pair loss has applications in several tasks, e.g. image recognition, where identifying subtle differences among similar instances is crucial. By using multiple samples at once, it mitigates some of the difficulties associated with triplet loss.

Contrastive Loss

Contrastive loss is one of the basic loss functions in contrastive learning. In the learned embedding space, it aims to maximize the agreement between positive pairs (instances from the same sample) and reduce the agreement between instances from different samples.

Contrastive loss is a margin-based loss in which a distance metric measures how similar two examples are. It penalizes positive pairs for being too far apart and negative pairs for being closer than the margin in the embedding space.
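A common form of this loss (in the style of Hadsell, Chopra, and LeCun, 2006) is d^2 for a positive pair and max(0, margin - d)^2 for a negative pair, where d is the Euclidean distance. The sketch below is a minimal plain-Python version; constant factors and the label convention vary between papers, and the margin values are assumptions.

```python
import math

def contrastive_loss(a, b, similar, margin=1.0):
    """Pairwise margin-based contrastive loss.

    similar=True:  d^2, pulls positive pairs together.
    similar=False: max(0, margin - d)^2, pushes negative pairs apart until they are `margin` away.
    """
    d = math.sqrt(sum((x - y) ** 2 for x, y in zip(a, b)))
    if similar:
        return d ** 2
    return max(0.0, margin - d) ** 2

print(contrastive_loss([0.0, 0.0], [3.0, 4.0], similar=True))               # 25.0 (d = 5)
print(contrastive_loss([0.0, 0.0], [3.0, 4.0], similar=False, margin=10.0)) # 25.0 ((10 - 5)^2)
print(contrastive_loss([0.0, 0.0], [3.0, 4.0], similar=False, margin=2.0))  # 0.0 (already beyond the margin)
```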

Contrastive loss is effective in a variety of fields, including computer vision and natural language processing. It pushes the model to develop discriminative representations that capture important similarities and differences.

Contrastive Learning Frameworks

Several contrastive learning frameworks have become well known in deep learning in recent years because of how efficiently they learn powerful representations. Here we describe the most popular ones:

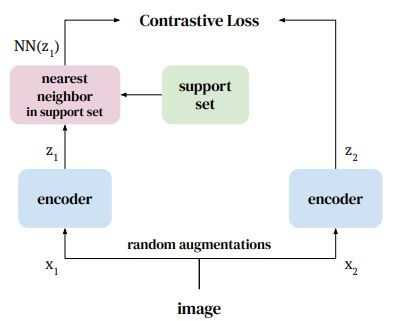

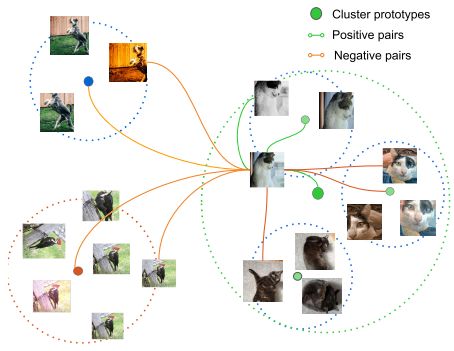

NNCLR

The Nearest-Neighbor Contrastive Learning (NNCLR) framework uses different images that are likely to belong to the same class as positives, instead of only augmenting the same image. By contrast, most methods treat different views of the same image as positives for a contrastive loss.

By sampling nearest neighbors from the dataset in the latent space and using them as positive samples, NNCLR produces a more diverse set of positive pairs, which also improves what the model learns.

Like the SimCLR framework, NNCLR uses the InfoNCE loss. However, the positive sample is now the nearest neighbor of the anchor image.
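The sketch below shows the nearest-neighbor swap in plain Python: for each sample, the embedding of its first view is replaced by its nearest neighbor in a support set, and InfoNCE then asks that neighbor to pick out the sample's second view among all second views in the batch. The support set here is a fixed list (in the paper it is a queue of embeddings from earlier batches), embeddings are assumed unit-norm, and the temperature and function names are hypothetical.

```python
import math

def dot(u, v):
    return sum(a * b for a, b in zip(u, v))

def nearest_neighbor(query, support_set):
    """Support embedding with the highest dot-product similarity to `query` (unit-norm vectors assumed)."""
    return max(support_set, key=lambda s: dot(query, s))

def info_nce(anchor, positive, candidates, temperature=0.1):
    """-log softmax score of `positive` among `candidates` (which must include it)."""
    logits = [dot(anchor, c) / temperature for c in candidates]
    m = max(logits)                      # subtract the max for numerical stability
    log_denom = m + math.log(sum(math.exp(l - m) for l in logits))
    return log_denom - dot(anchor, positive) / temperature

def nnclr_loss(view1, view2, support_set, temperature=0.1):
    """For sample i, NN(view1[i]) must identify view2[i]; the other view2 entries act as negatives."""
    total = 0.0
    for i, z1 in enumerate(view1):
        nn = nearest_neighbor(z1, support_set)
        total += info_nce(nn, view2[i], view2, temperature)
    return total / len(view1)

support = [[1.0, 0.0], [0.0, 1.0]]
aligned = nnclr_loss([[1.0, 0.0], [0.0, 1.0]], [[1.0, 0.0], [0.0, 1.0]], support)
swapped = nnclr_loss([[1.0, 0.0], [0.0, 1.0]], [[0.0, 1.0], [1.0, 0.0]], support)
```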

SimCLR

The self-supervised contrastive learning framework SimCLR (A Simple Framework for Contrastive Learning of Visual Representations) is highly effective at learning representations. It builds on the ideas of contrastive learning by combining a symmetric neural network architecture, a well-crafted contrastive objective, and data augmentation.

SimCLR's main goal is to maximize the agreement between augmented views of the same instance while minimizing the agreement between views of different instances. To make contrastive learning effective and efficient, the framework uses large-batch training.
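SimCLR's objective is the NT-Xent (normalized temperature-scaled cross-entropy) loss. The plain-Python sketch below computes it for a batch of 2N projections laid out so that rows k and k + N are the two views of instance k; that layout, the temperature value, and the helper names are assumptions made for the illustration.

```python
import math

def cosine(u, v):
    nu = math.sqrt(sum(a * a for a in u))
    nv = math.sqrt(sum(b * b for b in v))
    return sum(a * b for a, b in zip(u, v)) / (nu * nv)

def nt_xent(z, temperature=0.5):
    """NT-Xent over 2N projections, where z[k] and z[k + N] are two views of instance k."""
    two_n = len(z)
    n = two_n // 2
    total = 0.0
    for i in range(two_n):
        j = (i + n) % two_n                              # index of i's positive view
        logits = {k: cosine(z[i], z[k]) / temperature for k in range(two_n) if k != i}
        m = max(logits.values())                         # numerically stable log-sum-exp
        log_denom = m + math.log(sum(math.exp(v - m) for v in logits.values()))
        total += log_denom - logits[j]                   # -log softmax of the positive
    return total / two_n

# Two instances, two views each: rows 0 and 2 come from instance 0, rows 1 and 3 from instance 1.
matched = [[1.0, 0.0], [0.0, 1.0], [1.0, 0.1], [0.1, 1.0]]
mismatched = [[1.0, 0.0], [0.0, 1.0], [0.1, 1.0], [1.0, 0.1]]
```

Every other view in the batch serves as a negative, which is one reason SimCLR benefits from large batches: more negatives per anchor.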

SimCLR has shown excellent performance in computer vision, outperforming earlier approaches on a range of benchmark datasets and tasks, and similar contrastive setups have been applied in fields such as NLP and reinforcement learning.

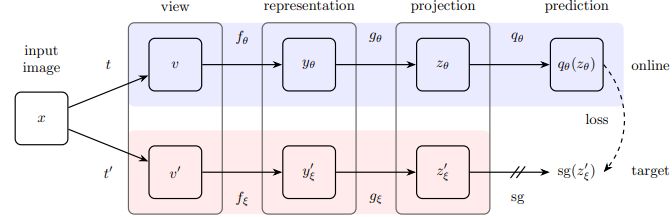

BYOL

Bootstrap Your Own Latent (BYOL) is a self-supervised framework that updates its target network parameters online. It uses a pair of networks, an online network and a target network, and updates the target network by taking an exponential moving average of the online network's weights. BYOL also learns representations without requiring negative examples.
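The two ingredients described above can be sketched in a few lines of plain Python: the exponential-moving-average update of the target weights, and BYOL's loss, a squared distance between L2-normalized vectors that equals 2 - 2 times their cosine similarity. Parameters are flattened into plain lists here, and the decay rate `tau` is an assumption (BYOL keeps it close to 1); in BYOL the prediction comes from an extra predictor head on the online network, and the target projection is treated as a constant.

```python
import math

def ema_update(target, online, tau=0.99):
    """Target update: xi <- tau * xi + (1 - tau) * theta, element-wise; no gradients flow here."""
    return [tau * xi + (1.0 - tau) * theta for xi, theta in zip(target, online)]

def byol_loss(prediction, target_projection):
    """Squared distance between L2-normalized vectors, equal to 2 - 2 * cosine similarity."""
    dot = sum(a * b for a, b in zip(prediction, target_projection))
    norm_p = math.sqrt(sum(a * a for a in prediction))
    norm_t = math.sqrt(sum(b * b for b in target_projection))
    return 2.0 - 2.0 * dot / (norm_p * norm_t)

target = [0.0, 0.0]
online = [1.0, 1.0]
for _ in range(3):
    target = ema_update(target, online, tau=0.9)   # target slowly trails the online weights
```

After three updates each target weight equals 1 - 0.9^3, roughly 0.271: the target follows the online network but lags behind it, which gives the online network a slowly moving objective to predict.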

The method decouples similarity estimation from negative samples while maximizing agreement between augmented views of the same instance. BYOL has shown remarkable performance in fields such as computer vision, where it achieved state-of-the-art results at the time of its publication and notable improvements in representation quality.

CV Applications of Contrastive Learning

Contrastive learning has been applied successfully in computer vision. The main applications include:

- Object Detection: a contrastive self-supervised method for object detection uses two strategies: 1) multi-level supervision of intermediate representations, and 2) contrastive learning between the global image and local patches.

- Semantic Segmentation: contrastive learning is applied to segment real images. The approach uses a supervised contrastive loss to pre-train a model and the standard cross-entropy loss for fine-tuning.

- Video Sequence Prediction: the model uses a contrastive learning algorithm for unsupervised representation learning. Engineers use some of the sequence's frames to augment the training set as positive/negative pairs.

- Remote Sensing: the model uses a self-supervised pre-training and supervised fine-tuning approach to segment remote sensing images.

What's Next?

Today, contrastive learning is gaining popularity as a way to improve existing supervised and self-supervised learning approaches. Methods based on contrastive learning have improved performance on tasks involving representation learning and semi-supervised learning.

Its basic idea is to compare samples from a dataset and push or pull their representations in the embedding space according to whether the samples belong to the same or a different distribution (e.g., the same object in object detection tasks, or the same class in classification models).

Frequently Asked Questions

Q1: How does contrastive learning work?

Answer: Contrastive learning pushes dissimilar samples farther apart and maps similar instances closer together in a learned embedding space.

Q2: What is the role of loss functions in contrastive learning?

Answer: Loss functions specify the objectives of the machine learning model. They let the model distinguish between similar and dissimilar instances and capture meaningful representations.

Q3: What are the main frameworks for contrastive learning?

Answer: The main frameworks for contrastive learning include Nearest-Neighbor Contrastive Learning (NNCLR), SimCLR, and Bootstrap Your Own Latent (BYOL).

Q4: What are the applications of contrastive learning in computer vision?

Answer: Applications of contrastive learning in computer vision include object detection, semantic segmentation, remote sensing, and video sequence prediction.